Clinical Governance: Department of Health

Snapshot

Has the Department of Health improved clinical governance, following the Targeting Zero report, to reasonably assure Victorians that public health services deliver quality and safe care?

Why this audit is important

In 2016, following a cluster of baby deaths at Djerriwarrh Health Services, a Victorian Government-commissioned report known as Targeting Zero found that the then Department of Health and Human Services (DHHS) was not effectively leading and overseeing quality and safety across the health system.

The report recommended that we follow up on the Department of Health’s (DH) progress in improving clinical governance.

Who we examined

DH, including Safer Care Victoria (SCV) and the Victorian Agency for Health Information (VAHI).

What we examined

We examined how DH:

- oversees and manages quality and safety risks across the health system

- produces and uses information to identify and reduce risks.

What we concluded

DH has made some clinical governance improvements since Targeting Zero. Its risk assessment approach no longer masks poor quality and safety performance at public health services. SCV has also worked with health services to improve sentinel event reporting.

However, nearly five years after Targeting Zero, DH’s ability to reasonably assure Victorians of the health system’s quality and safety remains limited because:

- it cannot ensure that health services are operating within safe scopes of clinical practice

- it cannot regularly and easily detect trends and risks across the system

- Victoria still does not have a fully functioning statewide incident management system

- VAHI, DH's specialist analytics and reporting unit, is working to improve its reporting but can still do much more to consistently provide timely, meaningful and actionable insights that highlight risks and improvement opportunities.

What we recommended

We made 18 recommendations to DH. These recommendations aim to improve the Department’s systems and processes for managing and detecting quality and safety risks across the health system. The Department accepted all recommendations.

Video presentation

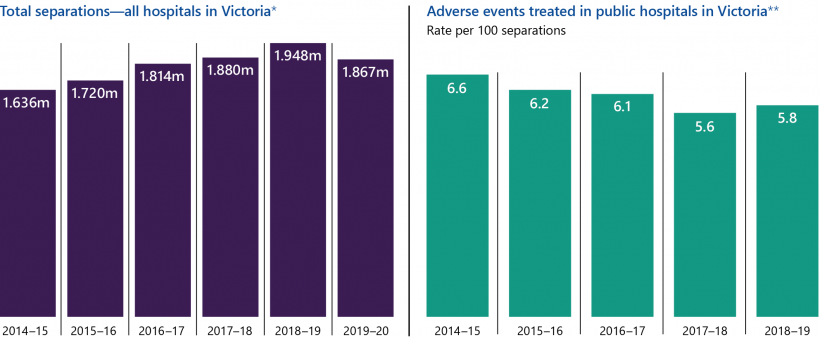

Key facts

Note: *Separations refer to patients discharged from their stay in hospitals. **An adverse event is an incident that results in harm to the patient.

Source: Victorian Government's Budget papers, and Productivity Commission's Report on Government Services.

What we found and recommend

We consulted with the audited agency and considered its views when reaching our conclusions. The agency’s full response is in Appendix A.

On 1 February 2021, the former DHHS was split into DH and the Department of Families, Fairness and Housing. We refer to DHHS when discussing actions taken before 2021.

Overseeing and managing risks across the health system

The 2016 report, Targeting Zero: Supporting the Victorian hospital system to eliminate avoidable harm and strengthen quality of care (Targeting Zero), found that the then DHHS was not overseeing and managing the health system to ensure that health services were providing safe and high-quality care.

DH has since made improvements to its systems and processes for monitoring and detecting quality and safety risks; however, significant system-level gaps remain. Figure A describes the improvements DH has made since Targeting Zero and what it still needs to improve.

Health services are a range of organisations that provide healthcare, including public hospitals, as defined by the Health Services Act 1988.

Clinical governance refers to the integrated systems, processes, leadership and culture that enable health services to provide safe and quality healthcare.

A capability framework outlines a health service's safe scope of practice based on its physical and human resources. It defines the minimum requirements that health services must meet to provide patients with safe care in clinical areas.

AHPRA regulates the registration of health practitioners. It receives complaints about practitioners and notifies health services when a complaint is lodged against one of their practitioners.

Hospital-acquired complications (HACs) are complications that occur during a patient's stay in hospital.

Risk adjustment is a statistical method that adjusts crude numbers to consider additional factors, such as patient complexity. This enables health services to make more meaningful comparisons with others.
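The sketch below illustrates one common form of risk adjustment, indirect standardisation. It reports an observed-to-expected (O/E) ratio instead of a crude rate. It assumes that each patient already has a predicted probability of the complication from some hypothetical risk model. The patients and probabilities are invented, and the sketch is not VAHI's published method.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    had_complication: bool  # observed outcome
    predicted_risk: float   # hypothetical model-predicted probability (0 to 1)


def observed_to_expected(patients: list[Patient]) -> float:
    """Return the observed-to-expected (O/E) ratio for a group of patients.

    Expected events are the sum of each patient's predicted risk, so a service
    treating more complex patients has a higher expected count. A ratio above 1
    means more events than expected for that patient mix.
    """
    observed = sum(p.had_complication for p in patients)
    expected = sum(p.predicted_risk for p in patients)
    if expected == 0:
        raise ValueError("expected count is zero; ratio is undefined")
    return observed / expected


# Two hypothetical services with the same crude rate (2 complications in 10 patients).
low_risk_service = [Patient(i < 2, 0.125) for i in range(10)]   # expected = 1.25
high_risk_service = [Patient(i < 2, 0.25) for i in range(10)]   # expected = 2.5
print(observed_to_expected(low_risk_service))   # 1.6: worse than expected
print(observed_to_expected(high_risk_service))  # 0.8: better than expected
```

The crude rates are identical. The adjusted ratios differ because the second service's patients were, by assumption, more likely to develop complications.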

Figure A: Improvements DH has made and areas for further improvement

| Improvements | What needs further improvement |
|---|---|
| SCV updated the Victorian Clinical Governance Framework (VCGF) to set clinical governance expectations for all stakeholders across the health system. | DH has not fully aligned the VCGF with health services’ Statements of Priorities (SOPs), which are their key performance frameworks. While DH monitors each health service’s performance against its SOP, it does not monitor compliance with the VCGF. |
| DH no longer solely relies on health services’ accreditation status to detect quality and safety issues. | DH cannot routinely and easily detect quality and safety trends and risks across the health system. |
| DH implemented a better risk assessment approach that no longer masks poor quality and safety performance at public health services. | DH has not consistently documented its risk assessments for all public health services. |
| DH updated its Capability Frameworks for Victorian maternity and newborn services in March 2019. | DH has not implemented capability frameworks to cover all major areas of clinical practice (as recommended by Targeting Zero). |
| DH, including SCV and VAHI, has established information sharing agreements with a number of organisations, such as the Australian Health Practitioner Regulation Agency (AHPRA), and is using this information to better assess quality and safety risks across the health system. | DH and SCV may not be receiving timely notifications from review bodies, such as the Consultative Council on Obstetric and Paediatric Mortality and Morbidity (CCOPMM), to proactively prevent avoidable deaths across the health system. |
| SCV partnered with VAHI to develop and implement a comprehensive suite of new training sessions and tools for health services to better understand and meet their clinical governance roles and responsibilities. | SCV has not developed guidance for health services on how to evaluate the effectiveness of actions to address incidents. |
| VAHI has reduced duplication between its main quality and safety reports. | DH/VAHI has not implemented a fully functioning statewide incident management system that enables it to proactively detect quality and safety risks across the state. |
| VAHI improved the content of its Board Safety and Quality Reports (BSQRs) to provide better information for decision-makers in the health system. | VAHI has not fully implemented an interactive health information portal that enables clinicians to drill down from hospital-level outcomes to disaggregated information at the unit, clinician and patient levels (as recommended by Targeting Zero). |
| VAHI recently introduced a new supplementary report on hospital-acquired complications (HACs) that provides risk-adjusted measures to public health services every quarter. | There are examples in VAHI's main quality and safety reports where information is presented in a way that can misrepresent results. Also, the timeliness of reports does not always meet stakeholders' needs. |

Source: VAGO.

Lack of an integrated approach for monitoring clinical governance

DH’s Policy and Funding Guidelines are system-wide terms and conditions for public health services.

DH has not provided health services with a single set of performance standards for clinical governance. It currently uses two separate documents—SOPs and the VCGF.

While DH's Policy and Funding Guidelines state that health services need to comply with both their SOP and the VCGF, the documents do not reference each other to explain their relationship. DH only monitors compliance against the clinical governance domains in the SOP and not those included in the VCGF.

The first report from this audit, Clinical Governance: Health Services, found that Djerriwarrh Health Services still does not comply with the VCGF almost five years after the Targeting Zero review.

Further, VAHI currently does not provide DH with a consolidated report on clinical governance across the Victorian health system, including changes, trends, risks and opportunities for improvement. Instead, VAHI produces two types of reports—‘Monitor’ reports, which outline a health service’s performance against its SOP, and BSQRs, which outline public health services' performance against the VCGF. As a result, DH cannot easily monitor clinical governance across the health system.

Capability frameworks

In 2016, Targeting Zero found that Djerriwarrh Health Services operated outside its safe scope of practice. Targeting Zero recommended that DH implement capability frameworks for all major areas of hospital clinical practice within three years. DH accepted this recommendation but, after almost five years, has only implemented a capability framework for one clinical service type. As a result, DH has not fully addressed the risk that Victorian health services could be knowingly or unknowingly operating outside their safe scope of practice.

DH is currently developing and implementing capability frameworks for the remaining 10 major and identified clinical service types. It told us that it cannot provide exact timelines for implementing these frameworks because:

- health services have not been able to engage with this work during the coronavirus (COVID-19) pandemic

- significant numbers of DH staff have been deployed to respond to COVID-19 since March 2020.

Monitoring quality and safety risks

Targeting Zero found that DHHS's performance monitoring method was fundamentally flawed. It combined a health service’s scores across different performance domains, which masked poor quality and safety performance. From 2017–18, DH started using four separate performance domains to determine its monitoring level for each public health service.
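The masking problem can be shown with invented numbers. The sketch below assumes four hypothetical domain scores on a 0 to 100 scale and a hypothetical threshold of 60 below which a domain is of concern. The domain names, scores and threshold are illustrative only and are not taken from DH's performance monitoring framework.

```python
# Hypothetical domain scores (0 to 100) for one health service; invented for illustration.
scores = {
    "quality_and_safety": 40,  # poor performance in this domain
    "finance": 90,
    "access": 85,
    "governance": 85,
}
THRESHOLD = 60  # hypothetical level below which a domain is of concern


def composite_flag(domain_scores: dict[str, int], threshold: int) -> bool:
    """Combined approach: average all domains and flag only if the average is low."""
    average = sum(domain_scores.values()) / len(domain_scores)
    return average < threshold


def per_domain_flags(domain_scores: dict[str, int], threshold: int) -> list[str]:
    """Separate-domain approach: list every domain that falls below the threshold."""
    return [name for name, score in domain_scores.items() if score < threshold]


print(composite_flag(scores, THRESHOLD))    # False: the average of 75 hides the problem
print(per_domain_flags(scores, THRESHOLD))  # ['quality_and_safety']
```

Assessing each domain on its own surfaces the weak quality and safety result. Averaging across domains lets strong results elsewhere offset it.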

Now, to determine monitoring levels:

- firstly, DH uses a risk assessment database that automatically rates risks in public health services using their performance against SOP measures

- secondly, DH's performance monitoring teams consider other information, such as underlying risk factors and third-party intelligence, and can modify the automatically produced ratings.

Structured Query Language, or SQL, is a programming language designed for managing data stored in databases.

We examined DH's risk assessments for all 86 Victorian public health services from 2017–18 to 2019–20 and audited the SQL code that DH uses for its database. We identified gaps in how DH documents its assessment process and decisions. We also found that DH's risk assessment process does not allow it to assess quality and safety risks at a system level.

Gaps in DH's risk assessment process

DH does not have clear and documented guidance for its staff to assess risks at public health services. While we did not identify any unreasonable risk assessments, our review took considerable effort and consultation with DH staff due to its documentation gaps.

DH's process relies on its staff using their local knowledge of the health service to change the automatically generated risk ratings and monitoring levels. However, DH's risk assessment database does not include mandatory fields for its staff to outline reasons for downgrading or upgrading risk ratings and monitoring levels. As a result, DH cannot easily access all documentation to justify its reasons for assigning risk ratings and monitoring levels to public health services.
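A mandatory-reason rule like the one the audit found missing could be enforced when a record is entered. The sketch below is entirely hypothetical. The record fields, the three-level rating scale and the validation rule are illustrative assumptions and do not describe DH's actual database.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical rating scale; DH's actual categories may differ.
RATINGS = ("low", "medium", "high")


@dataclass
class RiskAssessment:
    """Hypothetical risk assessment record for one public health service."""
    health_service: str
    automatic_rating: str         # rating generated from SOP measures
    final_rating: str             # rating after staff review
    reason: Optional[str] = None  # justification for any change

    def __post_init__(self) -> None:
        for rating in (self.automatic_rating, self.final_rating):
            if rating not in RATINGS:
                raise ValueError(f"unknown rating: {rating!r}")
        # Make the reason mandatory whenever staff upgrade or downgrade the rating.
        changed = self.final_rating != self.automatic_rating
        if changed and not (self.reason and self.reason.strip()):
            raise ValueError(
                f"{self.health_service}: a reason is required to change "
                f"{self.automatic_rating!r} to {self.final_rating!r}"
            )


# Accepted: rating unchanged, so no reason is needed.
RiskAssessment("Service A", "medium", "medium")
# Accepted: downgrade with a documented (hypothetical) reason.
RiskAssessment("Service B", "high", "medium", reason="hypothetical justification")
# Rejected: upgrade without a reason raises ValueError.
try:
    RiskAssessment("Service C", "low", "high")
except ValueError as err:
    print(err)
```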

No system-level risk assessments

While DH manages the public health system, it does not regularly analyse risks across it. This is because:

- DH’s staff cannot easily access detailed quarterly risk assessments of all public health services, which makes it difficult to consolidate the information

- DH's performance monitoring teams cannot easily access public health services' performance improvement plans to see how they are addressing risks

- VAHI's Monitor reports, which DH staff do have access to, only record DH’s overall monitoring levels of public health services from the previous quarter and not how DH determined these levels.

This severely limits DH's ability to identify potential systemic risks and opportunities concerning patient safety and quality of care.

DH funds VASM, which systematically reviews deaths associated with surgical care. The VASM annual report details clinical reviews and provides information on preventable harm.

Missing intelligence

Targeting Zero found that DHHS did not use findings from external review bodies, such as the Victorian Audit of Surgical Mortality (VASM) and CCOPMM, to identify quality and safety risks and monitor health services' performance. Since then:

- SCV receives an annual VASM report and is currently seeking to receive more regular information from VASM, including annual and monthly progress reports that contain de-identified information on surgical mortality across the health system. This would improve SCV's capability to identify quality and safety risks in surgical units at Victorian health services.

- CCOPMM can still take up to six months to notify SCV and DH of suspected preventable harm cases due to its internal review process and the amount of time it takes to receive records from health services.

VMIA is the Victorian Government's insurer and risk adviser. It covers all Victorian Government departments and public health services.

Further, despite DH, SCV and VAHI having an information-sharing agreement with the Victorian Managed Insurance Authority (VMIA), SCV asserts it still cannot access relevant claims information to monitor and assess quality and safety risks in Victorian public health services. However, VMIA advised that it has provided DH and SCV with claims information. These varying views indicate that DH and SCV are not fully using VMIA's claims information.

As a result, DH and SCV could further improve their access to and use of information to assess risks and monitor performance to more promptly prevent avoidable harm that may be occurring across the health system.

Outdated incident management guidelines

Both Targeting Zero and our 2016 Patient Safety in Victorian Public Hospitals audit recommended that DHHS:

- implement a statewide incident management policy that clearly specifies its aims

- develop guidance for health services to evaluate the effectiveness of their recommended actions from incident investigations.

DH accepted these recommendations but has not fully implemented them. In 2019, SCV replaced the 2011 Victorian Health Incident Management Policy with the streamlined Policy: Adverse patient safety events. However, the new policy does not:

- clarify the roles and responsibilities of health services, SCV and DH for clinical incidents across all severity levels

- support health services to:

  - investigate all clinical incidents

  - evaluate the effectiveness of recommended actions from their investigations.

The lack of focus on lower severity incidents means a useful source of risk information is missed. While DH's policy includes steps to monitor actions taken in response to incident reviews, it does not explicitly set expectations for health services to evaluate whether or not those actions are effective in addressing the risk and preventing future harm.

Incomplete statewide incident management system

VHIMS is Victoria's current incident management system. DHHS established VHIMS in 2009. It categorises all incidents that occur in health services into four incident severity ratings (ISRs) based on the level of injury or harm that an incident causes. The ratings are:

- ISR 1: severe/death (including sentinel events)

- ISR 2: moderate

- ISR 3: mild

- ISR 4: no harm/near miss.

We first recommended that DHHS develop a minimum dataset for incidents across the Victorian health system in our 2005 Managing Patient Safety in Public Hospitals report. Targeting Zero also recommended that DH implement a system capable of supporting the incident management policy. While VAHI has made some improvements to the Victorian Health Incident Management System (VHIMS), DH has still not implemented a fully functioning statewide incident management system that it can use to detect systemic risks.

VHIMS should provide reliable and accurate incident data from public health services so DH can regularly and systematically analyse all clinical incidents. However, VAHI has not developed a data dictionary that comprehensively and consistently defines all data fields in VHIMS. This means that health services have inconsistent data collection methods and statewide incident reporting is flawed. This reduces DH's ability to confidently collate and systematically assess the data. As a result, DH cannot:

- compare reporting and results between public health services to detect system level risks

- proactively detect underperformance or emerging risks across the system by routinely analysing lower severity incidents, such as ISR 2–4s.
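This kind of routine analysis only works if every service records incidents with the same fields and codes. The sketch below assumes a hypothetical record layout in which each incident carries a health service name and an ISR code from 1 to 4, as defined above. It shows how lower-severity incidents could be tallied per service. The service names and records are invented, and a real comparison would also need to adjust for each service's activity volume.

```python
from collections import Counter
from enum import IntEnum


class ISR(IntEnum):
    """VHIMS incident severity ratings, as listed above."""
    SEVERE_OR_DEATH = 1
    MODERATE = 2
    MILD = 3
    NO_HARM_OR_NEAR_MISS = 4


# Hypothetical incident records: (health service, ISR code).
incidents = [
    ("Service A", 2), ("Service A", 4), ("Service A", 4),
    ("Service B", 1), ("Service B", 3),
    ("Service C", 4),
]


def lower_severity_counts(records: list[tuple[str, int]]) -> Counter:
    """Count ISR 2-4 incidents per health service.

    Converting each code through the ISR enum rejects values outside 1-4,
    the kind of consistency check a shared data dictionary would support.
    """
    counts: Counter = Counter()
    for service, code in records:
        rating = ISR(code)  # raises ValueError for an undefined code
        if rating >= ISR.MODERATE:
            counts[service] += 1
    return counts


print(lower_severity_counts(incidents))
```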

Recommendations about overseeing and managing risks across the health system

| We recommend that: | | Response |
|---|---|---|
| Department of Health | 1. works with health services and relevant internal stakeholders to ensure its performance monitoring framework aligns with the Victorian Clinical Governance Framework to capture all recognised outcome areas and activity domains relating to high-quality care (see Section 2.2) | Accepted by: Department of Health |
| | 2. finalises and implements capability frameworks to cover all major and identified areas of hospital clinical practice as a matter of priority (see Section 2.3) | Accepted by: Department of Health |
| | 3. regularly monitors and reports on health services' compliance against all capability frameworks and considers incorporating it in its performance monitoring process (see Section 2.3) | Accepted by: Department of Health |
| | 4. reviews its risk assessment systems, processes and procedures to ensure its staff are accurately and consistently assessing, monitoring and documenting quality and safety risks in public health services by applying agreed rules stated in its Victorian health services performance monitoring framework (see Section 2.4) | Accepted by: Department of Health |
| | 5. updates Policy: Adverse patient safety events to include expectations for all clinical incidents, including lower severity incidents (see Section 2.5) | Accepted by: Department of Health |
| | 6. develops and publishes the associated guidelines for Policy: Adverse patient safety events to: | Accepted by: Department of Health |
| | 7. works with public health services and internal stakeholders to finalise and implement a consistent and comprehensive data dictionary for the Victorian Health Incident Management System. This includes ensuring that: | Accepted by: Department of Health |
| | 8. regularly analyses and publishes insights from all clinical incident data, including lower-severity incidents, to identify potential or emerging patterns of risk or underperformance across the Victorian health system (see Section 2.5) | Accepted by: Department of Health |
| | 9. works with the Consultative Council on Obstetric and Paediatric Mortality and Morbidity to obtain timely and relevant notifications about potentially avoidable and/or avoidable harm, including perinatal morbidity and mortality, across the health system (see Section 2.6) | Accepted by: Department of Health |
| | 10. finalises arrangements to obtain annual and monthly reports from the Victorian Audit of Surgical Mortality, and implements processes to monitor and review the effectiveness of these arrangements to better detect quality and safety risks across the health system (see Section 2.6) | Accepted by: Department of Health |
| | 11. works with the Victorian Managed Insurance Authority to obtain relevant claims information to monitor and assess quality and safety risks in Victorian public health services (see Section 2.6) | Accepted by: Department of Health |

Producing and using information to identify and reduce risks

Targeting Zero stressed the importance of using credible information and analytics to drive quality and safety improvements across the health system. While DH, through VAHI, now provides more information than it did before Targeting Zero, VAHI is still uplifting its workforce capability and it does not meet some of its stakeholders’ information needs.

Workforce capability challenges

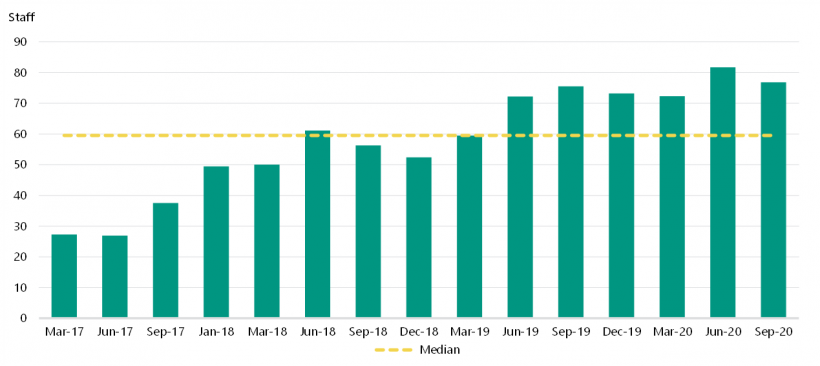

Since VAHI was established in 2017, it has not fully met its intended functions due to limitations in its workforce capability. At its outset, VAHI experienced slow workforce mobilisation and was sidetracked by work outside of its scope.

In April 2020, the VAHI 2020 workforce review estimated that only approximately 40 per cent of its workforce could undertake complex statistical analysis. Most of those staff also had management and leadership responsibilities, reducing their capacity for analytics work. The review also found that some health services lacked confidence in VAHI's statistical capabilities and contextual knowledge of the health system.

VAHI advises that it has started to improve its internal capability through recruitment and training and estimates that 55 per cent of its staff can now undertake complex statistical analysis. It also started developing a strategy to strengthen its analytics capability in May 2021.

Lack of internal monitoring systems and processes

We found that VAHI has not finalised its stakeholder engagement strategy or established internal systems and processes to:

- centrally record information collected on stakeholder feedback

- proactively and regularly track and assess its performance in meeting stakeholders' information needs. For example, VAHI conducted only two sector-wide stakeholder surveys in five years, even though VAHI's newsletter to the sector and its draft stakeholder engagement strategy both state its intention to conduct them annually.

These gaps mean VAHI risks missing valuable stakeholder feedback or, because it cannot easily collate feedback, failing to identify emerging feedback themes.

Opportunities for reporting improvements

VAHI is making progress in addressing stakeholders' information needs. VAHI's 2021 stakeholder survey showed that 77 per cent of survey respondents rated VAHI's overall performance as positive. However, the survey also showed the respondents would like to see further improvements in a range of areas, including in:

- timeliness of reporting

- stakeholder engagement and consultation

- accessibility of data and reports.

Varied reporting

While VAHI has sought to tailor its main quality and safety reports to different audiences, its reporting approach is burdensome for DH, SCV and public health services because:

| VAHI's main quality and safety reports … | As a result … |
|---|---|
| | report users cannot easily find the same or similar groups of indicators across different reports to obtain a comprehensive picture of quality and safety across the system. |
| can each present a slightly different picture of quality and safety performance to different audiences. | different report users may obtain different understandings of health services' performance and risks. In particular, health service executives, who receive three of the four reports, may not be able to easily bring together and obtain actionable insights from multiple differing reports. |

From the start of 2020–21, VAHI also began producing a separate supplementary report, called 'Hospital-acquired complications in Victorian public health services', for all public health services that includes risk-adjusted HAC measures. This is a positive step. However, we note that it introduces another report, which presents indicators differently again.

To ease report users' burden of interpreting indicators across multiple reports, VAHI could instead present all relevant quality and safety indicators in one consolidated report, with summaries tailored for particular audiences.

VAHI’s information does not meaningfully highlight risks

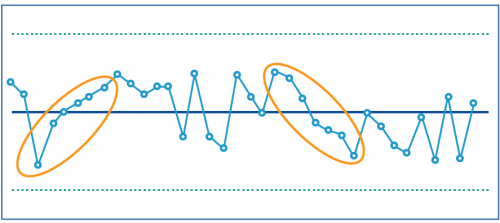

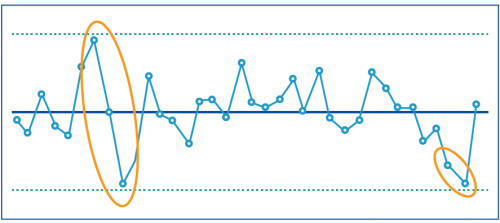

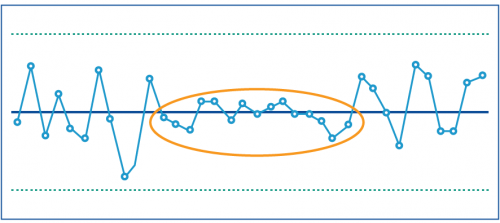

While VAHI's reporting to health services and their boards at times shows 'outliers'—areas of performance outside normal levels—this is not a consistent feature of its reporting. As such, VAHI's main quality and safety reports miss opportunities to clearly highlight areas of risk to health services and at times risk presenting information in a misleading way. For example:

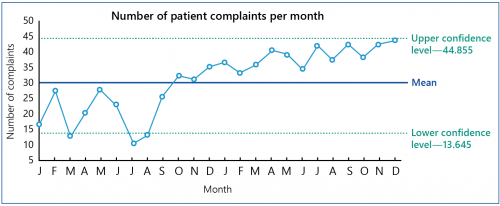

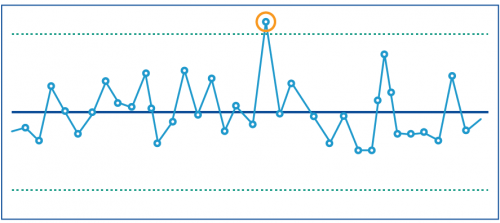

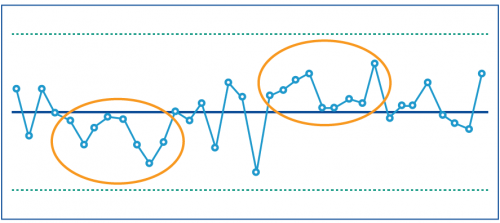

A control chart is a graph that shows data over time. It can help health services and DH to distinguish between:

- performance changes over time that are to be expected within the system being measured

- performance changes that reflect a 'special cause' and are not expected, reflecting that something has significantly impacted the performance result.

This type of reporting can alert health services and DH to specific areas that need attention and improvement.
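The sketch below illustrates how a control chart separates expected variation from a 'special cause'. It builds a simple p-chart, one common type of control chart for proportions, with limits set at three standard errors around the overall proportion. The monthly counts are invented. For simplicity, the centre line is calculated from all months, including any unusual one. Real reporting might use other chart types or additional special-cause rules.

```python
import math

# Hypothetical monthly data for one indicator: (events, patients at risk).
monthly = [(12, 400), (10, 380), (14, 410), (11, 395), (30, 405), (13, 390)]


def p_chart(data: list[tuple[int, int]]) -> list[dict]:
    """Flag months whose proportion falls outside 3-sigma p-chart limits."""
    total_events = sum(events for events, _ in data)
    total_n = sum(n for _, n in data)
    p_bar = total_events / total_n  # centre line: overall proportion
    results = []
    for month, (events, n) in enumerate(data, start=1):
        sigma = math.sqrt(p_bar * (1 - p_bar) / n)  # limits depend on each month's n
        lower = max(0.0, p_bar - 3 * sigma)
        upper = p_bar + 3 * sigma
        p = events / n
        results.append({
            "month": month,
            "proportion": round(p, 4),
            "special_cause": not (lower <= p <= upper),
        })
    return results


for row in p_chart(monthly):
    print(row)
```

In this invented series, only month 5 falls outside the limits. The smaller month-to-month movements are the kind of variation to be expected within the system.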

| VAHI's main quality and safety reports do not … | As a result, DH and public health services cannot always … |
|---|---|
| always, where it would be beneficial to do so, use statistical methods to explain whether a health service's performance is unusual compared to others. | |
| use control charts, or other statistical methods, where appropriate, to show whether changes in individual health services' performance over time are significant or not (see Appendix D). | |
| accurately report results for indicators of 'patient safety culture' in public health services. | |
| cater for small and rural health services’ specific needs, despite Targeting Zero identifying the need to do this. | |

A health service has a positive patient safety culture if:

- staff feel safe to speak up when they have concerns about patient safety

- the health service is committed to learning from errors

- the health service responds to warning signs early and avoids catastrophic incidents.

Too many quality and safety indicators

VAHI gives stakeholders too many quality and safety indicators. From 2017–18 to 2019–20, VAHI provided stakeholders with an average of 430 indicators every quarter across its four main quality and safety reports. This is because:

| VAHI … | As a result … |
|---|---|
| initially did not have a formal process for developing and testing new quality and safety indicators. It introduced one in 2019–20. | public health services and DH cannot easily and quickly digest the information to identify key quality and safety risks and opportunities for improvement. |
| has not comprehensively reviewed existing quality and safety indicators to remove indicators that are no longer relevant. | |

Lack of timely information

To best prevent harm, both DH and health services need timely information about quality and safety risks. At present, VAHI is not always able to meet stakeholder expectations for timely information. VAHI's reporting timeliness is at least partly affected by DH's current six-week minimum time period to validate data inputs. While VAHI has made some improvements to report timeliness, respondents to its 2021 stakeholder survey identified 'timely reporting' as the top opportunity for VAHI to improve further.

We also examined VAHI’s reports from 2017–18 to 2020–21 and found:

- VAHI's four main quarterly quality and safety reports from 2017–18 to 2019–20 were routinely delivered more than a full quarter after the reporting period

- VAHI's bespoke reports that analyse VHIMS data both took 10 months to produce, again partly due to data validation challenges

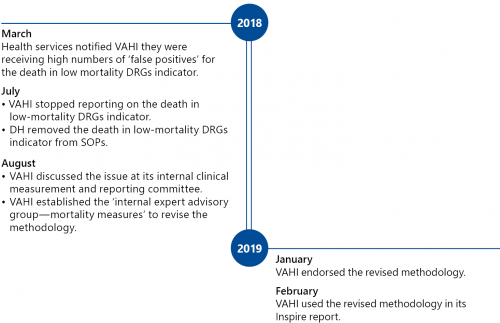

- VAHI was slow to implement changes when health services raised specific issues. For example, VAHI:

VIME is a data asset that integrates all available health datasets across Victoria. The ‘Portal’ is the front end website for VIME.

- took 12 months to revise its calculation methods for the ‘death in low-mortality diagnosis-related groups (DRG)' indicator after health services identified an issue

- took more than two and a half years to improve its BSQR after first publishing it in March 2017

- took more than four years to start improving its quality and safety reports to meet the needs of small rural health services after the Targeting Zero report identified this particular stakeholder information need

- has not addressed health services' concerns raised in August 2020 about the accuracy of patient safety culture indicators in the Monitor reports.

Delays in implementing an interactive health information portal

ICD-10 codes are international standard codes for health data, clinical documentation and statistical aggregation.

AR-DRGs is an Australian admitted patient classification system that provides a clinically meaningful way of comparing the number and type of patients in a hospital to the resources the hospital requires.

Since June 2017, VAHI has been developing an interactive online portal to give health services and internal stakeholders better access to quality and safety data, which Targeting Zero recommended. This project, called the VAHI Information Management Environment (VIME)/Portal project, has experienced significant delays, partly due to the COVID-19 pandemic. Despite these delays, VAHI has built a solid foundation for completing this work by December 2021.

Developing new interactive dashboards

As part of the second stage of the VIME/Portal project, VAHI started an eight-week pilot of the new interactive Monitor dashboards in November 2019. We surveyed the pilot participants and intended users of these new dashboards at four health services and found that VAHI had not adequately engaged them during the pilot. Additionally, VAHI currently uses the World Health Organization’s International Classification of Diseases version 10 (ICD-10) to categorise patients in VIME. However, most Victorian health system stakeholders are more familiar with classifications based on the Australian Refined Diagnosis Related Groups (AR-DRGs), such as major diagnostic categories or major clinical related groups. VAHI advises that it is exploring how to apply AR-DRG classifications to relevant patient data.

Recommendations about producing and using information to identify and reduce risks

| We recommend that: | | Response |
|---|---|---|
| Department of Health | 12. finalises an analytics capability framework that outlines its required internal workforce capability to meet stakeholders' information needs, and continues to monitor its adherence to the framework over time (see Section 3.1) | Accepted by: Department of Health |
| | 13. finalises and continually improves its strategy and/or plan for engaging stakeholders to understand their quality and safety information needs, including outlining clear accountabilities and implementing a central system to monitor progress in meeting stakeholder needs (see Section 3.1) | Accepted by: Department of Health |
| | 14. consolidates its existing quality and safety reports to meet the specific needs of their target audiences and present a comprehensive and consistent view of quality and safety across the health system, including risks and opportunities for improvement (see Section 3.2) | Accepted by: Department of Health |
| | 15. periodically reviews all quality and safety indicators in reporting products to ensure they are all meaningful and provide actionable insights that help stakeholders to easily and quickly identify risks and opportunities to drive improvements across the system (see Section 3.3) | Accepted by: Department of Health |
| | 16. reviews the process for report production, including data submission and validation, to reduce delays in providing stakeholders with the most up-to-date and timely quality and safety information (see Section 3.4) | Accepted by: Department of Health |
| | 17. develops and regularly reports on quality and safety indicators that relate to risks in rural and regional health services (see Section 3.4) | Accepted by: Department of Health |
| | 18. further engages key users at health services to ensure that the interactive dashboards that VAHI is developing as a part of its health information portal enable them to access critical and useful information to drive quality and safety improvements (see Section 3.5) | Accepted by: Department of Health |

1. Audit context

Clinical governance is central to delivering quality and safe healthcare. The 2016 Targeting Zero review found that DHHS could not assure Victorians that the health system was safe and providing high-quality care. It recommended 179 actions to improve clinical governance across the state.

To help address these actions, the Victorian Government created SCV and VAHI within DH to drive improvements across the health system and improve access to health information and analytics. Together, DH, SCV and VAHI are responsible for improving the quality and safety of the Victorian health system.

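This chapter provides essential background information about:

- clinical governance

- the Victorian health system

- relevant agreements, frameworks and guidelines.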

1.1 Clinical governance

DH's VCGF describes clinical governance as:

… the integrated systems, processes, leadership and culture that are at the core of providing safe, effective and person-centred healthcare, underpinned by accountable continuous improvement.

Effective clinical governance:

| Leads to healthcare that is … | because health services can … |
|---|---|
| safe | eliminate avoidable harm to patients while delivering healthcare. |
| effective | deliver integrated healthcare in the right way and at the right time to achieve the best health outcomes. |
| patient-centred | deliver healthcare that considers a patient's values, beliefs and individual circumstances. |

Past clinical governance failures

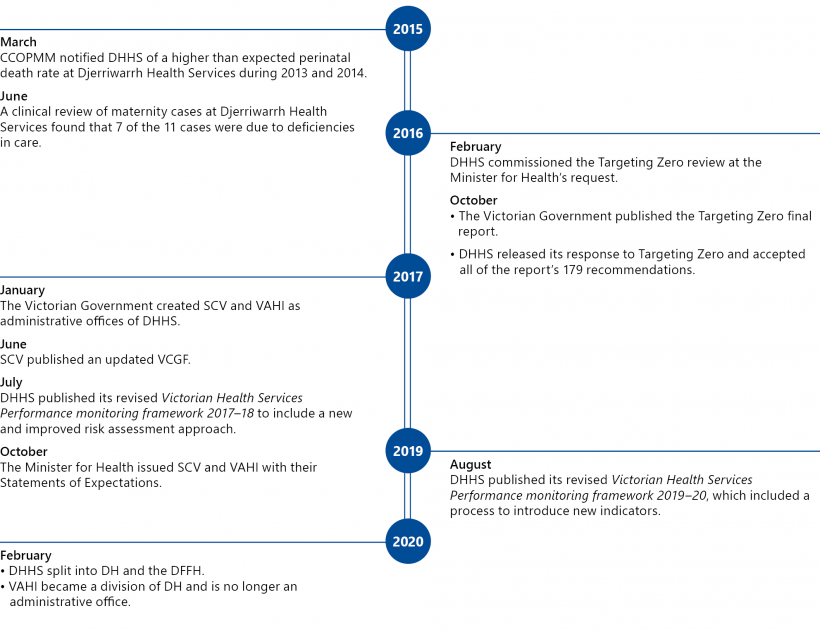

In March 2015, CCOPMM notified DHHS about a cluster of baby deaths at Djerriwarrh Health Services during 2013 and 2014. At the Minister for Health’s request, DHHS commissioned an independent review in 2016. The review examined DHHS's systems and processes for governing and assuring the quality and safety of health services. The review panel advised DHHS on ways to improve its systems to achieve best practice.

In October 2016, the Victorian Government published the review's final report—Targeting Zero. Targeting Zero found that DHHS could not assure Victorians of the quality and safety of the health system. The catastrophic clinical governance failures at Djerriwarrh Health Services illustrated DHHS’s inadequate oversight of health services’ quality and safety.

Targeting Zero recommended 179 actions across 10 themes, which the Victorian Government accepted. It also recommended that we assess DHHS’s progress in implementing these recommendations.

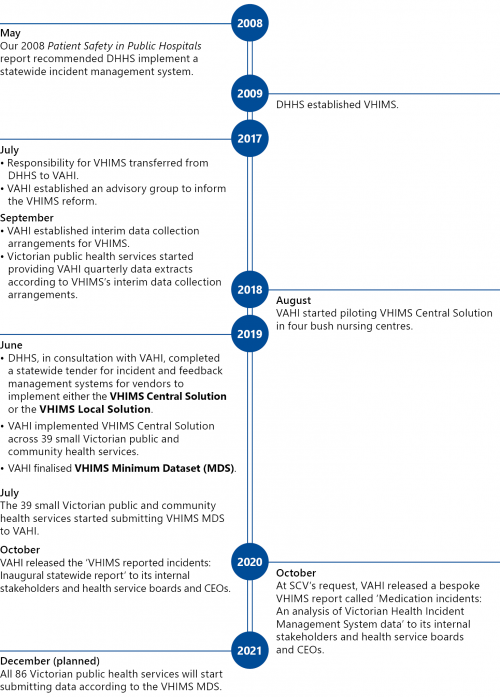

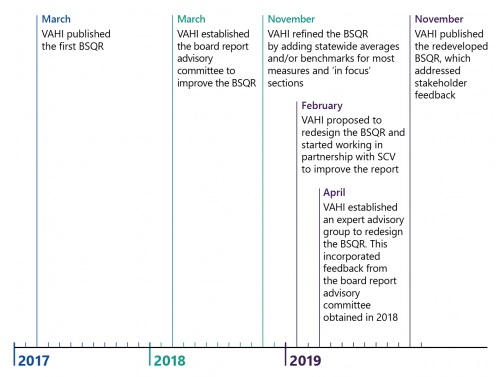

Figure 1A presents the timeline of relevant clinical governance events in the Victorian health system.

FIGURE 1A: Timeline of relevant clinical governance events in the Victorian health system

Source: VAGO.

Past audit findings

Targeting Zero noted that it was not the first review to identify that DHHS was failing to adequately perform important statewide functions and was not prioritising patient safety. It referred to three past VAGO reports, which Figure 1B outlines.

FIGURE 1B: Key findings from past VAGO reports

| Published | Report | Findings |
|---|---|---|
| March 2005 | Managing Patient Safety in Public Hospitals | |
| May 2008 | Patient Safety in Public Hospitals | |
| March 2016 | Patient Safety in Victorian Public Hospitals | |

A sentinel event is a wholly preventable incident that results in a patient's death or serious harm.

Source: VAGO.

This report

This is the second of our two performance audit reports to follow up on DH's progress in implementing Targeting Zero's recommendations. It examines how DH oversees and manages quality and safety across the Victorian health system.

The first report, Clinical Governance: Health Services, was tabled in June 2021. It examined clinical governance systems and processes in a representative selection of Victorian public health services: Ballarat Health Services, Djerriwarrh Health Services, Melbourne Health and Peninsula Health.

Given the considerable number and breadth of the recommendations, we consulted Targeting Zero's lead author while scoping this audit and decided to examine a selection of key themes the recommendations raised, rather than all of the individual recommendations.

An administrative office is a public service agency that is separate from a department but reports to the department's secretary.

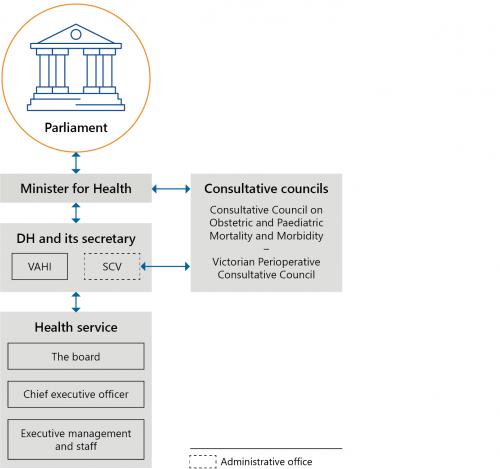

1.2 The Victorian health system

Following Targeting Zero, in 2017 the Victorian Government created SCV and VAHI as administrative offices of DHHS. From 1 February 2021, VAHI stopped being an administrative office and is now a business unit within DH. Figure 1C outlines the current structure of the Victorian health system in relation to quality and safety.

FIGURE 1C: Bodies responsible for quality and safety in the Victorian health system

Source: VAGO.

Department of Health

DH leads, manages and regulates the Victorian health system.

Since 2017, DH’s strategic plans have consistently included objectives to improve clinical governance. For example, DHHS's 2019–20 strategic plan includes ‘reduce the incidents of avoidable harm in Victorian hospitals’ and ‘improved patient and client reported experiences of care and treatment’ as two key result areas.

The Health Services Act 1988 gives DH’s secretary a range of functions to meet the Act's objectives. These include:

- developing policies and plans with respect to healthcare provided by health services

- funding or purchasing health services and monitoring, evaluating and reviewing publicly funded or purchased health services

- developing criteria or measures that enable DH to compare the performance of health services that provide similar services

- encouraging safety and improving the quality of healthcare provided by health services

- collecting and analysing data.

Each year, on behalf of the Minister for Health, DH negotiates annual service agreements, called SOPs, with all Victorian public health services. SOPs set out activity and performance expectations for health services in return for government funding (see Section 1.3 for more information).

While VAHI is now a business unit in DH, it continues to have its own chief executive officer (CEO) and retains its key functions.

Victorian Agency for Health Information

VAHI is DH's specialist analytics and reporting unit. It aims to enable DH and health services to monitor performance, identify risks and continuously improve the quality and safety of their services. Figure 1D contains an extract from the Statement of Expectations (SoE) that the Minister for Health provided to VAHI in 2017 to outline its functions.

FIGURE 1D: VAHI's functions

- Ensure that government information and data are accessible to organisations and individuals by:

  - publishing regular reports on public and private services that impact health, wellbeing, quality and safety to support transparency, oversight, risk assessment and improvement

  - monitoring and reporting on the performance of organisations and services.

- Ensure meaningful information and data are available for health services, the public, businesses and researchers.

- Support a culture of information sharing to drive continuous improvement by:

  - publishing timely reports benchmarking performance in ways that support identification of opportunities for improvement

  - building sector capacity to create and use improvement information.

- Collect, use, store, link and manage data to ensure it is meaningful, accurate, protected from unauthorised access, available when needed and shared as required in order to fund, manage, monitor, improve and evaluate health services.

- Recommend standards and guidelines relating to collecting, linking and reporting on data, and creating and recommending indicators to measure performance.

- Undertake and/or commission research and collaborate and/or share data with other agencies of government to support its functions.

- Provide advice to the relevant Minister and Secretary on issues arising out of its functions.

Source: VAHI's SoE.

Safer Care Victoria

SCV is DH's quality and safety improvement administrative office. Figure 1E contains an extract from the SoE that the Minister for Health provided to SCV in 2017 to outline its functions.

FIGURE 1E: SCV's functions

- Support all public and private health services to prioritise and improve safety and quality for patients.

- Strengthen clinical governance, lead clinician engagement and drive quality improvement programs and processes for health services.

- Provide independent advice and support to public and private health services to respond and address serious quality and safety concerns.

- Review public and private health services and health service performance, in conjunction with the department, in order to investigate and improve safety and quality for patients.

- Lead Victoria’s contribution to the development of national accreditation and other clinical care standards by the Australian Commission on Safety and Quality in Health Care.

- Undertake research and coordinate the provision of evidence-based research and guidelines throughout the sector.

- Coordinate the efforts of clinical networks to:

  - reduce clinical variation and issue best-practice guidelines

  - report annually on improvement strategies

  - ensure improvement activities are coordinated.

- Reduce avoidable harm by:

  - sharing trends and learnings from significant harm incident reports

  - responding to and anticipating health system issues relating to patient safety

  - coordinating system responses to specific safety events.

- Provide advice to the Minister and Secretary on any issues arising out of its functions.

Source: SCV's SoE.

The Australian Commission on Safety and Quality in Health Care was established by the Council of Australian Governments in 2006 to lead and coordinate national improvements in the safety and quality of healthcare.

Victorian public health services

A denominational hospital is a private not‑for-profit provider of public health services. Victoria has three denominational hospitals—Calvary Health Care Bethlehem Limited, Mercy Hospitals Victoria Limited, and St Vincent's Hospital (Melbourne) Limited.

Under the Health Services Act 1988, the Minister for Health appoints independent boards for all public health services, except for denominational public hospitals. Boards are responsible for implementing effective and accountable risk management systems, including systems and processes to monitor and improve the quality, safety and effectiveness of the health services they provide.

CEOs are responsible for:

- managing their public health service according to the framework set by its board

- ensuring their public health service complies with all relevant requirements set by DH

- managing day-to-day operations and governance.

1.3 Relevant agreements, frameworks and guidelines

The National Safety and Quality Health Service Standards is a set of nationally agreed standards for quality and safety in Australian healthcare. It was developed by the Australian Commission on Safety and Quality in Health Care. All Australian health services must comply with these standards.

Through its Policy and Funding Guidelines, DH requires all Victorian public health services to comply with a range of clinical governance requirements, including the VCGF and the National Safety and Quality Health Service Standards.

Statements of Priorities

Each year, all Victorian public health services and the Minister for Health enter into annual service agreements, or SOPs, as per section 26 of the Health Services Act 1988. SOPs set the basis for DH's ongoing performance monitoring of all Victorian public health services.

A SOP consists of four parts:

- part A—an overview of the health service’s service profile, strategic priorities and deliverables in the year ahead

- part B—performance priorities and agreed targets

- part C—funding and associated activities

- part D—the service agreement between the health service and the State of Victoria for the purposes of the National Health Reform Agreement.

Victorian health services performance monitoring framework

Since 2007, DH has used Victorian health services performance monitoring frameworks (PMFs) to monitor and manage public health services' performance against their SOP targets every quarter. DH reviews the PMF annually to include improvements and ensure it aligns with health services' SOPs.

Targeting Zero found that the PMF was fundamentally flawed. This was because DHHS had previously graded public health services' performance by generating an overall performance assessment score, which could mask issues of poor quality and safety performance.

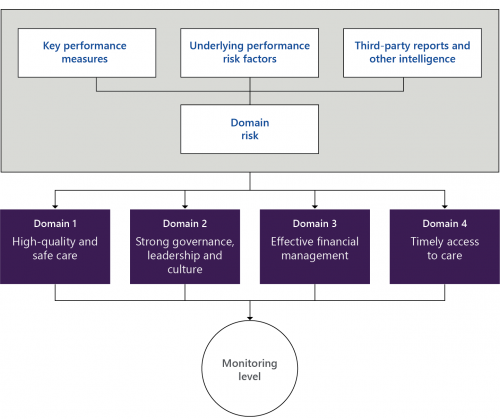

DHHS's Victorian Health Services Performance monitoring framework 2017–18 replaced the performance assessment score method with a new risk assessment approach. As Figure 1F shows, DH now assesses a public health service's risk level by considering a mix of quantitative and qualitative information, including:

- the health service's performance against key performance measures

- the health service's underlying risk factors

- third-party reports and other intelligence.

DH assesses this information against the PMF's four domains:

- high quality and safe care

- strong governance, leadership and culture

- effective financial management

- timely access to care.

DH uses the results to determine a public health service's risk level and how closely it needs to monitor it.

FIGURE 1F: DH's current risk assessment approach

Source: VAGO, based on DH's 2017–18 PMF.

The Victorian Clinical Governance Framework

SCV published an updated VCGF in June 2017. The VCGF sets the Victorian Government's expectations for clinical governance in health services. It also specifies the clinical governance roles and responsibilities of stakeholders across the Victorian health system, including DH, SCV, VAHI and Victorian health services. Figure 1G shows DH and health service boards’ roles and responsibilities.

FIGURE 1G: Roles and responsibilities of DH and health service boards

| DH (including SCV and VAHI) | Health service boards |
|---|---|
| Setting expectations and requirements regarding health services’ accountability for quality, safety and continuous improvement | Setting a clear vision, strategic direction and ‘just’ organisation culture |
| Providing leadership, support and direction to ensure health services provide safe, high-quality healthcare | |
| Ensuring board members have the required skills and knowledge to fulfil their responsibilities | Ensuring they have the skills, composition, knowledge and training to lead and pursue quality and excellence in healthcare |
| Ensuring health services have the necessary data to fulfil their responsibilities, including benchmarked and trend data | Monitoring and evaluating all aspects of care they provide by regularly and rigorously reviewing benchmarked performance data and information |
| Proactively identifying and decisively responding to emerging clinical quality and safety trends | |
| Monitoring health services’ clinical governance implementation and performance by continually reviewing key quality and safety indicators | |
| Monitoring health services’ implementation and performance of clinical governance systems and ensuring they identify risks and red flags early | Understanding key risks and putting appropriate mitigation strategies in place |
| | Ensuring there are robust clinical governance structures and systems that effectively support and empower staff to provide high-quality care |

Source: VCGF.

Clinical governance domains

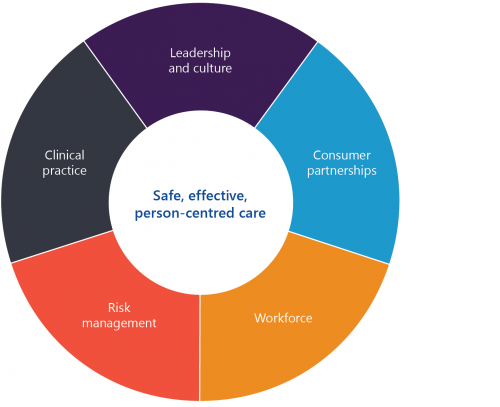

As Figure 1H shows, there are five interrelated clinical governance domains in the VCGF that underpin safe, effective and person-centred care.

FIGURE 1H: Clinical governance domains

Source: VAGO, based on the VCGF.

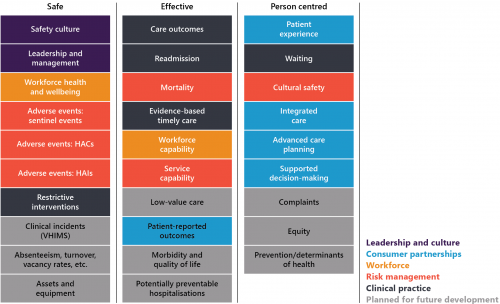

Performance measures

VAHI developed performance measures as part of its BSQRs to monitor public health services' performance against the VCGF’s clinical governance domains, as Figure 1I shows. The figure also shows that VAHI is still developing indicators in some areas.

FIGURE 1I: Performance measures against the VCGF domains

Note: HAI stands for hospital-acquired infection.

Source: VAGO, based on VAHI's June 2021 BSQR.

2. Overseeing and managing risks across the health system

Conclusion

DH has improved its clinical governance leadership and risk assessment processes for health services. However, it has not yet addressed key Targeting Zero recommendations to sufficiently oversee the system, such as:

- setting clear frameworks to ensure health services deliver healthcare within the limits of their physical and human resources and monitoring their compliance

- having a fully functioning statewide incident management system and using it to assess system-wide risks.

As a result, DH can still do more to reduce risks and assure Victorians that health services provide safe and high-quality care.

This chapter discusses:

2.1 DH’s improvements to clinical governance

Less reliance on accreditation

Djerriwarrh Health Services was accredited throughout 2013 and 2014, the period when the avoidable deaths occurred.

Targeting Zero found that DHHS was incapable of detecting catastrophic clinical governance failings. One reason was that DHHS relied too heavily on health services achieving accreditation to assure itself of quality and safety across the health system. The review recommended DHHS overhaul its performance monitoring approach and systems.

In response, DH reclassified accreditation as a minimum requirement for public health services and has included additional quality and safety indicators in all health services' SOPs, which it uses as part of its risk assessment.

New risk assessment approach

DH’s current risk assessment approach means that public health services can no longer mask poor quality and safety performance by performing well in other areas, such as financial management. As Figure 1F shows, DH assesses each health service’s risk level against the PMF’s four domains separately to determine how closely it needs to monitor it.

Every quarter, staff from DH's two performance monitoring units (one for metropolitan and specialist health services and one for regional and rural health services) meet with SCV staff to discuss performance risks across the four domains and tentatively determine health services' monitoring levels. DH (and SCV when required) also meets with each public health service’s CEO every quarter to discuss risks and performance. In these meetings, DH finalises the monitoring level it will apply to each public health service.

Executives at the four health services we interviewed told us that they have more substantial discussions about quality and safety with DH and SCV now and that quality and safety is the first discussion topic in all of DH's performance monitoring meetings.

Using more information

Since Targeting Zero, DH and SCV have established information-sharing agreements with relevant organisations to improve how they assess risks at health services. For example, from November 2016 to August 2018, DHHS (including SCV and VAHI):

- established an agreement with AHPRA to exchange information

- established a formal memorandum of understanding with the VMIA to share information.

As a result, SCV has used information obtained from AHPRA to review several complaints about practitioners.

We undertook a detailed analysis of the four health services’ quarterly risk assessments from 2017–18 to 2019–20. On average, DH and SCV used information from five to six separate sources to determine each health service’s quality and safety risks, including changes to a health service’s board, intelligence from clinical networks and patient complaints. Accreditation information is now only one information source that DH and SCV consider in determining health services’ quality and safety risks.

Better clinical governance training

Targeting Zero recommended DHHS provide training and support to health services to help them meet their clinical governance roles and responsibilities. In response, SCV developed a suite of new training and supports for health services, including:

- clinical governance training and a toolkit for health service boards, CEOs and executives

- team-based training for health services’ quality teams

- data literacy training in partnership with VAHI.

Since August 2018, SCV and VAHI have delivered clinical governance training sessions to a total of 540 board directors and staff across public health services. SCV initially targeted health service board directors, CEOs and executives for this training, followed by quality teams. VAHI has advised us that its data literacy training will also be delivered to other stakeholder groups.

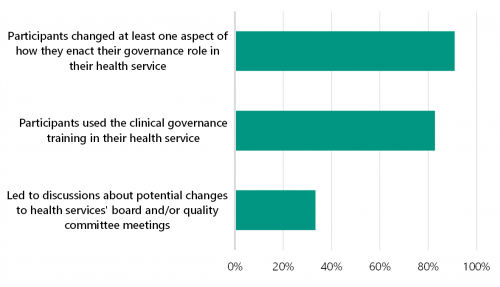

In 2018, DH's centre for evaluation and research evaluated SCV's clinical governance training. As Figures 2A and 2B show, this evaluation, which included 278 board directors, found that the training significantly improved participants' understanding of their clinical governance roles and capabilities.

FIGURE 2A: Impact of training on public health service board directors

Source: DH.

FIGURE 2B: Impact of training on health service board directors and quality teams

Source: DH.

Board chairs we surveyed at four health services told us that DH's induction and updated director's toolkit provide clear information about their clinical governance roles and responsibilities. Figure 2C contains examples of what board chairs told us.

FIGURE 2C: Selected quotes from health service board chairs about DH’s clinical governance supports

‘I was impressed at the time with the level of support offered by DHHS ... DHHS also provided me with a buddy chair from another network that I could call at any time. I found this very useful particularly in the first three months of my appointment. I was at all times advised that DHHS had a very strong focus on safety and quality and that the board should be on top of their key safety and clinical governance issues.’

‘I was provided with a very detailed induction folder after meeting with the Chair of the Board of Directors ... In the first 6 months of my term, I also participated in a DHHS evening learning session held at the health service, to clarify the role and responsibilities of health service non-executive directors.’

‘I believe I had a thorough induction in that I attended a number of sessions put on by DHHS on quality and clinical governance and I also attended a briefing on the Health Services Act which clearly sets out our responsibilities. I was also given an induction pack which included everything from the roles and responsibilities of directors to statements on the high value placed on safety and quality ...’

2.2 DH's lack of a clear approach for monitoring clinical governance

DH's approach to monitoring clinical governance is unclear because it uses two separate, overlapping frameworks—the PMF (and its associated SOPs) and the VCGF (see Section 1.3). The inconsistencies between the two frameworks, and the comparatively lesser status of the VCGF, risk reducing clarity of focus on the importance of patient safety and quality.

DH's Policy and Funding Guidelines specify that health services need to comply with the VCGF. However, while DH requires health services to report against their SOPs, it does not explicitly require them to report against the VCGF, and it does not assess their compliance with it.

The PMF/SOPs and the VCGF do not refer to each other or explain how they relate. They also place different emphasis on various elements of healthcare practice and treat the relationships between those elements differently. For example:

- the VCGF is silent on the 'timeliness' of care, which has a clear relationship to patient safety and quality of care. Conversely, the PMF includes 'timely access to care' as a specific performance domain necessary to achieve 'best patient outcomes'.

- the PMF positions 'strong governance, leadership and culture' as a separate performance domain from the domain of 'high quality and safe care', whereas the VCGF positions 'leadership and culture' as necessary to achieve 'safe, effective, person-centred care'.

- the SOPs do not cover several areas of clinical governance that the VCGF includes, such as continuous improvement, innovation and consumer co-design of care and services.

VAHI produces two separate reports to monitor health services’ performance against the SOPs and VCGF:

| VAHI uses … | to assess public health services’ performance against … |
|---|---|
| Monitor reports | their SOPs. |
| the BSQR | the VCGF’s measures. |

However, the Monitor reports and the BSQR do not measure all of the clinical governance requirements that health services need to meet. This means that DH currently does not have access to a consolidated report on health services' compliance with clinical governance requirements across the Victorian health system, including changes and trends, risks and opportunities for improvement.

Targeting Zero called for DHHS to focus on monitoring health services' safety and quality performance by recommending that it develop a dedicated performance framework that is separate from the financial performance and activity levels in the SOPs. To date, DH has not implemented this recommendation.

2.3 DH's lack of capability frameworks

Targeting Zero recommended that DHHS implement capability frameworks covering all major hospital clinical service types within three years, to prevent health services from operating outside their safe scopes of practice. However, after almost five years, DH has published only one updated capability framework, Capability Frameworks for Victorian maternity and newborn services, in March 2019.

As a result, DH has not addressed the risk of Victorian health services knowingly or unknowingly operating outside of their safe scopes of practice.

The absence of capability frameworks means that:

- health service boards may be unaware of discrepancies between the clinical services their hospitals provide and the resources they require to deliver safe care

- DH is missing vital information to inform its resourcing, service and capital planning decisions across the state to best ensure that Victorians can access safe healthcare

- DH cannot ensure that Victorian health services are operating within their scopes of practice.

As Figure 2D shows, DH is currently developing capability frameworks for the remaining clinical service types. DH told us that it cannot provide exact timelines for implementing these frameworks because:

- health services have not been able to engage with this work during the COVID-19 pandemic

- significant numbers of DH staff have been deployed to respond to COVID-19 since March 2020.

FIGURE 2D: Status of capability frameworks that DH is developing

| Clinical service type | Current status of capability frameworks |
|---|---|
| Critical and intensive care | DH published draft frameworks for consultation in October 2019. Consultation findings are being incorporated into the final versions. |
| Anaesthetics | |
| Medical imaging and nuclear medicine | |
| Pharmacy and medicines management | |
| Pathology | |
| Urgent, emergency and trauma care | Implementation phase. DH is still developing implementation guidelines for health services. |
| Renal care | Implementation phase. DH has provided health services with implementation guidelines and health services self-assessed their capability in late 2019. |
| Surgical and procedural care | Implementation phase. DH has provided health services with implementation guidelines and health services self-assessed their capability in late 2019. DH has provided support for health services whose self-assessment identified a higher risk. |
| Cardiac care | Implementation phase. DH is still developing implementation guidelines for health services. |
| Mental health | To implement a recommendation from the 2021 Royal Commission into Victoria’s Mental Health System, DH is now developing this capability framework. |

Key performance measures refer to quantifiable data on a defined set of key performance indicators that DH specifies for each health service.

2.4 Monitoring quality and safety risks

DH applies a set of rules to determine its monitoring level for each public health service.

Firstly, as Figure 2E shows, DH determines a health service’s risk rating based on its key performance measures, underlying performance risk factors, and third-party reports and other intelligence.

FIGURE 2E: DH's rules for assigning risk ratings

| Risk rating | Key performance measure | Underlying performance risk factor | Third-party reports and other intelligence |
|---|---|---|---|
| Low risk | <10% of key performance indicators are not met and have deteriorated | No concern | No concern |
| Medium risk | 10–30% of key performance indicators are not met and have deteriorated | Some underlying risk factors are present | Some concern |
| High risk | >30% of key performance indicators are not met and have deteriorated | Significant underlying risk factors are present | Significant outstanding concerns |

Source: DH, 2019–20 PMF.

DH's database automatically determines a health service’s risk rating based on changes in its performance against its SOP key performance indicators. DH's performance monitoring teams can then modify the rating by considering underlying risk factors and other intelligence.
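The key performance measure rule in Figure 2E can be expressed as a short function. The sketch below is a hypothetical Python illustration, not DH's actual database logic (which this report describes as SQL code). It assumes each indicator is recorded as a pair of flags (whether the target was met, and whether performance deteriorated) and reads the thresholds as below 10% for low, 10–30% for medium and above 30% for high. It compares integer counts rather than percentages so that the 10% and 30% boundaries are not affected by floating-point rounding.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Ordered risk ratings: a higher value means a higher risk."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def kpm_risk_rating(indicators: list[tuple[bool, bool]]) -> Risk:
    """Rate key performance measures (hypothetical input shape).

    Each indicator is a (met, deteriorated) pair. Only indicators that are
    both not met and deteriorated count towards the rating.
    """
    if not indicators:
        raise ValueError("at least one key performance indicator is required")

    total = len(indicators)
    flagged = sum(1 for met, deteriorated in indicators if not met and deteriorated)

    # flagged / total < 10%   <=>  10 * flagged < total
    if 10 * flagged < total:
        return Risk.LOW
    # flagged / total <= 30%  <=>  10 * flagged <= 3 * total
    if 10 * flagged <= 3 * total:
        return Risk.MEDIUM
    return Risk.HIGH


# Example: 2 of 10 indicators are unmet and deteriorating (20%).
example = [(False, True)] * 2 + [(True, False)] * 8
print(kpm_risk_rating(example).name)  # MEDIUM
```

The sketch covers only the automated step; in DH's process, performance monitoring teams can then adjust the resulting rating using underlying risk factors and other intelligence.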

Secondly, DH determines a health service’s risk level for each PMF domain by applying the following rules:

- low risk: low-risk ratings in key performance measures, underlying performance risk factors, and third-party reports and other intelligence

- medium risk: any medium-risk ratings in key performance measures, underlying performance risk factors, or third-party reports and other intelligence, and no high-risk ratings

- high risk: any high-risk ratings in key performance measures, underlying performance risk factors, or third-party reports and other intelligence.
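Read together, these rules amount to taking the highest of the three component ratings: any high rating makes the domain high risk, otherwise any medium rating makes it medium, and only three low ratings give a low domain rating. The following hypothetical Python sketch applies that reading to each of the PMF's four domains separately. The function names and input shape are illustrative assumptions, and the sketch omits the manual adjustments DH staff can make.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Ordered risk ratings, so that max() returns the most serious one."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# The four PMF domains named in this report.
DOMAINS = (
    "high quality and safe care",
    "strong governance, leadership and culture",
    "effective financial management",
    "timely access to care",
)


def domain_risk_level(kpm: Risk, underlying: Risk, third_party: Risk) -> Risk:
    """Combine the three component ratings for one domain.

    Any high rating gives high risk; otherwise any medium rating gives
    medium risk; three low ratings give low risk. That is the maximum.
    """
    return max(kpm, underlying, third_party)


def assess_domains(ratings: dict[str, tuple[Risk, Risk, Risk]]) -> dict[str, Risk]:
    """Assess each domain on its own, so good results in one domain
    (such as financial management) cannot offset poor results in another."""
    missing = set(DOMAINS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return {domain: domain_risk_level(*ratings[domain]) for domain in DOMAINS}


levels = assess_domains({
    "high quality and safe care": (Risk.LOW, Risk.MEDIUM, Risk.LOW),
    "strong governance, leadership and culture": (Risk.LOW, Risk.LOW, Risk.LOW),
    "effective financial management": (Risk.LOW, Risk.LOW, Risk.LOW),
    "timely access to care": (Risk.HIGH, Risk.LOW, Risk.LOW),
})
for domain, level in levels.items():
    print(f"{domain}: {level.name}")
```

Keeping the domains separate, rather than averaging them into one score, reflects the change from the earlier performance assessment score that Targeting Zero criticised.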

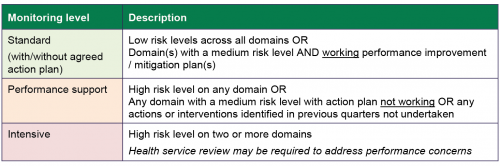

Lastly, DH determines the overall monitoring level it applies to a public health service based on its risk level for each domain. Figure 2F shows the monitoring level definitions.

FIGURE 2F: DH’s rules for determining monitoring levels

Source: DH, 2019–20 PMF.

To apply the above rules, DH's risk assessment database uses SQL code to automatically determine risk measure ratings, domain risk ratings and monitoring levels based on changes in performance indicators. DH's performance monitoring teams can then manually adjust these ratings and monitoring levels by considering underlying risk factors and other intelligence.

We examined DH's risk assessments for all 86 Victorian public health services from 2017–18 to 2019–20 and audited the SQL code in DH's risk assessment database.

We found that DH's risk assessments were reasonable, but we identified methodological and documentation gaps that could lead to incorrect assessments. These gaps also made it time-consuming and difficult for us to understand the decisions DH made. The gaps we identified included:

| DH does not … | because … | As a result … |
| --- | --- | --- |
| include data about health services' risk management plans in its automated risk assessment tool, despite these plans being a key element in calculating risk and monitoring levels | DH has not coded its risk assessment database to include this. DH relies on its staff to apply their local knowledge of the health services to modify the automatically generated risk ratings and monitoring levels, which poses a risk of errors. | DH's risk assessment database may not always generate correct risk ratings and monitoring levels because it does not incorporate all required elements. |
| include mandatory fields in its risk assessment tool for its staff to outline reasons for downgrading or upgrading risk ratings | DH has no formal requirement for its performance monitoring team to do this. | DH cannot regularly and easily assure the quality of the risk measure ratings, domain risk ratings and monitoring levels determined by staff. It also cannot understand historical decisions that may be critical for reviewing performance over time. |
| record health services' detailed risk assessments and improvement and/or mitigation plans in an easily accessible way | DH records detailed risk assessments in individual electronic records. DH's consolidated risk assessment database does not produce reports that include the detailed risk ratings underpinning the results for all public health services. VAHI's Monitor reports only record DH's overall monitoring levels of public health services from the previous quarter, not how DH determined these levels. | DH cannot regularly and easily analyse trends across the health system, identify potential systemic risks or identify opportunities for systemic improvement. |

2.5 Using incident reporting to detect risks

Targeting Zero stressed the importance of Victoria having a fully functioning statewide incident management system to detect system-wide risks and drive system-wide improvements. This echoed recommendations from our 2005 Managing Patient Safety in Public Hospitals, 2008 Patient Safety in Public Hospitals and 2016 Patient Safety in Victorian Public Hospitals reports. Concerningly, DH has still not implemented a fully functioning incident management system.

Outdated incident management guidelines

Our 2016 Patient Safety in Victorian Public Hospitals report also recommended DHHS review its 2011 Victorian Health Incident Management Policy and associated guidelines. This recommendation included DHHS developing guidance for health services on evaluating the effectiveness of recommended actions from incident investigations. Targeting Zero also reiterated that DH needs to develop a transparent and evidence-based incident management policy that clearly specifies what it aims to achieve through incident reporting and how it will achieve it.

To date, DH has not fully implemented either our recommendations or those of Targeting Zero. In 2019, SCV replaced the 2011 Victorian Health Incident Management Policy with a streamlined and updated Policy: Adverse patient safety events. However, this policy covers only ISR 1 and 2 incidents, not all clinical incidents.

Currently, the Policy: Adverse patient safety events does not:

- clarify the roles and responsibilities of health services, SCV and DH for all clinical incidents

- support health services to:

  - investigate all clinical incidents

  - evaluate the effectiveness of recommended actions from investigations.

While SCV is developing guides for health services, the current lack of guidance is a missed opportunity to reinforce the importance not only of reporting incidents but also of learning and improving from them.

Incomplete statewide incident management system

DHHS established VHIMS in 2009 in response to recommendations initially made in our 2005 Managing Patient Safety in Public Hospitals audit and reiterated in our 2008 follow-up audit, Patient Safety in Public Hospitals. Responsibility for VHIMS was transferred from DHHS to VAHI in February 2017.

Targeting Zero found that DHHS was not systematically analysing VHIMS information to understand incidents and risks across the sector. While VAHI has made some improvements to VHIMS, Victoria still does not have a fully functioning statewide incident management system that allows DH to proactively detect quality and safety risks across the health system.

Figure 2G details key changes and events related to VHIMS.

FIGURE 2G: Timeline of VHIMS reform

VHIMS Central Solution is an in-house system that VAHI and DHHS jointly developed.

VHIMS Local Solution is a modifiable version of VHIMS Central Solution.

All Victorian public health services can opt to:

- use VHIMS Central Solution

- use VHIMS Local Solution

- implement a bespoke arrangement that enables them to meet the VHIMS MDS requirements.

VHIMS MDS is a new minimum dataset developed by VAHI in collaboration with the sector.

Source: VAGO.

VHIMS data quality deficiencies

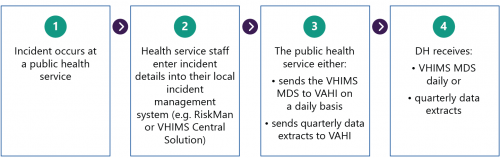

Figure 2H outlines the current VHIMS data collection and reporting process.

Public health services that do not use VHIMS Central Solution and instead have their own local incident management systems, such as RiskMan, send VAHI their incident reports quarterly.

FIGURE 2H: Current VHIMS data collection and reporting process

Source: VAGO.

To date, VAHI has not developed a data dictionary for all of the data fields in VHIMS, including the VHIMS MDS, to give public health services consistent definitions that help them collect data accurately. Also, DH and VAHI have not provided guidance to public health services to help their staff accurately record clinical incidents. VAHI notes that COVID-19 has delayed its work on VHIMS.

As a result, DH and VAHI cannot currently ensure that public health services are reporting incidents accurately and completely and using the same data definitions in their local incident systems, such as RiskMan, to record incident data.

We obtained available VHIMS data from 2017–18 to 2019–20, including the 2019–20 VHIMS MDS data from the 39 smaller public and community health services. The VHIMS data submitted by other health services, that is, excluding the data from these 39 health services, is significantly flawed. We found approximately 200 000 blank entries in the ISR field, as well as invalid ISR entries of '0', '5', 'Unknown' and 'NA'.
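Counting blank and out-of-range values in a single field is a basic data quality check. The Python sketch below shows one way to do it. It is an illustration, not VAGO's or VAHI's actual method. The function name `audit_isr_field` is hypothetical, and treating only '1' to '4' as valid ISR codes is an assumption made for this example. In practice, the list of valid values would come from an agreed data dictionary.

```python
from collections import Counter
from typing import Iterable, Optional

# Assumption for illustration only: valid ISR codes are "1" to "4".
# In practice the valid values should come from an agreed data dictionary.
ASSUMED_VALID_ISR = frozenset({"1", "2", "3", "4"})


def audit_isr_field(
    values: Iterable[Optional[str]],
    valid: frozenset = ASSUMED_VALID_ISR,
) -> dict:
    """Count blank entries and tally each distinct invalid entry in an ISR column."""
    blank = 0
    invalid = Counter()
    for raw in values:
        value = (raw or "").strip()  # treat None and whitespace-only as blank
        if not value:
            blank += 1
        elif value not in valid:
            invalid[value] += 1
    return {"blank": blank, "invalid": dict(invalid)}


if __name__ == "__main__":
    sample = ["1", "", None, "0", "5", "Unknown", "NA", "3", " "]
    print(audit_isr_field(sample))
    # {'blank': 3, 'invalid': {'0': 1, '5': 1, 'Unknown': 1, 'NA': 1}}
```

The set of valid values is a parameter, so the same check could be rerun against whatever definitions a data dictionary eventually specifies.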

VAHI states that health services leave the ISR field blank when patients arrive from another setting with preventable injuries, such as pressure ulcers. It is unclear why, in such circumstances, health services record these injuries in their incident management systems, which are intended to capture incidents that occur during a patient's treatment at the health service. Presumably, many patients attend health services with pre-existing preventable injuries. This highlights the need for a data dictionary and guidance to health services on recording incidents.

While data quality improved from July 2019, when the 39 health services started to report against the VHIMS MDS, we still identified some inconsistencies. For example, we found 39 clinical incidents that were recorded as sentinel events but were classified in the ISR field as either 'Minor' or 'Routine'.
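This kind of inconsistency can be caught with a cross-field check: a record flagged as a sentinel event should not carry a low-severity ISR classification. The Python sketch below is a minimal example. The `Incident` record layout and its field names are hypothetical. The labels treated as incompatible with a sentinel event ('Minor' and 'Routine') come from the example in the text, not from any VHIMS specification.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Labels that, per the example in the text, conflict with a sentinel event flag.
LOW_SEVERITY_ISR_LABELS = frozenset({"Minor", "Routine"})


@dataclass(frozen=True)
class Incident:
    """Hypothetical, simplified incident record; not the VHIMS MDS layout."""
    incident_id: str
    is_sentinel_event: bool
    isr: str


def conflicting_sentinel_events(incidents: Iterable[Incident]) -> List[str]:
    """Return the IDs of sentinel events whose ISR field holds a low-severity label."""
    return [
        inc.incident_id
        for inc in incidents
        if inc.is_sentinel_event and inc.isr in LOW_SEVERITY_ISR_LABELS
    ]


if __name__ == "__main__":
    records = [
        Incident("A1", True, "Minor"),
        Incident("A2", True, "Severe"),
        Incident("A3", False, "Routine"),
        Incident("A4", True, "Routine"),
    ]
    print(conflicting_sentinel_events(records))  # ['A1', 'A4']
```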

Without a clear data dictionary, we were unable to verify the accuracy of the data or undertake further analysis. Additionally, the quality of the data we obtained did not align with the key dimensions in the Victorian Government’s Data Quality Guideline, including accuracy, consistency, fit for purpose, completeness, timeliness/currency, collectability and representativeness.

Analysing incident data to detect emerging risks

A consequence of the data quality issues outlined is that DH cannot proactively detect underperformance or emerging risks across the system by routinely analysing lower-severity incidents, such as ISR 2–4 incidents.

DH regularly analyses sentinel events that health services report to SCV to monitor risks across the health system. However, these represent only the most serious incidents and are relatively few in number. DH could obtain meaningful additional information about potential or emerging system-level risks by analysing lower-severity incidents.

Since 2017, VAHI has released just two reports that analyse VHIMS data, each of which took 10 months to produce:

- in October 2019, VAHI published the 'VHIMS reported incidents inaugural statewide report', which covered incidents that occurred in health services from July 2017 to December 2018

- in October 2020, VAHI published the 'Medication incidents: An analysis of Victorian Health Incident Management System data', which examined incidents from July 2017 to December 2019.

While these reports provide valuable insights across the system, they took a considerable amount of time to prepare due to poor data quality and the time needed to clean VHIMS data. As such, they are not part of VAHI’s routine quality and safety reporting. The time taken to produce the reports also limits DH's and health services' ability to respond to identified issues in a timely way.

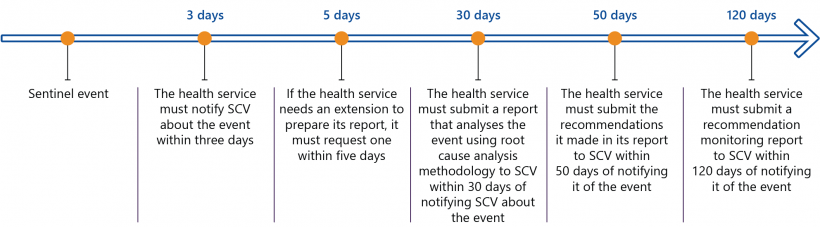

SCV's information needs for sentinel events

SCV's Policy: Adverse patient safety events and the Victorian Sentinel Event Guide require health services to notify SCV when a sentinel event occurs according to the timeframes in Figure 2I. Health services must record the event under one of the 10 national categories or the one Victoria-specific category of 'other'.

FIGURE 2I: Timeframes for notifying SCV about sentinel events

Source: SCV.

VHIMS should meet SCV's information needs for sentinel event reporting. However, when recording incident data through VHIMS, health services can only select from the 10 national categories of sentinel events and cannot select the Victoria-specific category ('other'). Also, only a minority of health services have recently become able to submit VHIMS data daily. Most submit data quarterly, which does not meet the requirement to report sentinel events within three days.
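The gap between quarterly submission and a three-day notification window is easy to quantify. The Python sketch below rests on two illustrative assumptions: the window is three calendar days, and a quarterly submitter sends its data on the first day after the quarter ends. Figure 2I sets out the actual timeframes. The function names are hypothetical.

```python
from datetime import date, timedelta

# Assumption: the notification window is three calendar days.
NOTIFICATION_WINDOW = timedelta(days=3)


def meets_window(event_day: date, submitted_day: date,
                 window: timedelta = NOTIFICATION_WINDOW) -> bool:
    """True if the report was submitted within the window after the event."""
    return submitted_day - event_day <= window


def earliest_quarterly_submission(event_day: date) -> date:
    """Hypothetical best case: data sent on the first day after the quarter ends."""
    quarter_end_month = ((event_day.month - 1) // 3 + 1) * 3  # 3, 6, 9 or 12
    if quarter_end_month == 12:
        return date(event_day.year + 1, 1, 1)
    return date(event_day.year, quarter_end_month + 1, 1)


if __name__ == "__main__":
    event = date(2021, 5, 2)
    quarterly = earliest_quarterly_submission(event)
    print(quarterly, (quarterly - event).days, meets_window(event, quarterly))
    # 2021-07-01 60 False
    print(meets_window(event, date(2021, 5, 3)))  # True
```

Even in this best case, an event on 2 May would not reach VAHI until 1 July, 60 days later. A daily submission meets the window comfortably.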

As a result, the then DHHS established a separate sentinel events database as an interim solution, which SCV continues to use. This requires health services to report the same information twice.

VAHI and SCV launched a new sentinel events notification portal, using the same platform as VHIMS Central Solution, in August 2021. SCV advises that it started using this new portal to record sentinel events data from July 2021, and that the portal will improve the timeliness, efficiency and security of sentinel events reporting from health services.

2.6 Using other intelligence to detect risks

Targeting Zero found that DHHS did not have a formal process for incorporating and using findings from review bodies in its performance monitoring process. DH has undertaken some work to address this, with varying success.

DH, SCV and VAHI now have formal information-sharing agreements with the VMIA. However, SCV asserts that it still cannot access relevant claims information from VMIA to support monitoring and assessment of quality and safety risks in Victorian public health services, but it was unable to show us evidence to support this position. In contrast, VMIA advised us that it has shared claims information with DH and SCV through an information-sharing committee that the parties established. This arrangement, however, stopped in May 2020 due to COVID-19 and has not resumed. These differing views indicate that DH and SCV are not fully using VMIA's claims information to monitor and assess quality and safety risks in Victorian public health services, and they should seek to address this.

SCV also now receives the annual VASM report and is seeking to receive more regular information from VASM, including an annual report and monthly progress reports that contain de-identified information on surgical mortality across the health system. This would improve SCV's ability to identify system-level quality and safety risks in surgical units at Victorian health services.

Targeting Zero stated that CCOPMM notified the former DHHS in March 2015 that a cluster of perinatal deaths had occurred at Djerriwarrh Health Services during 2013 and 2014. Nearly five years after Targeting Zero, DH and SCV still may not receive timely alerts of suspected preventable perinatal deaths from CCOPMM.

Due to CCOPMM's internal review processes and the amount of time it takes to receive records from health services, CCOPMM can take up to six months to inform SCV of any potentially preventable deaths. CCOPMM's delay in publishing its annual report—Victoria's Mothers, Babies and Children—which includes statewide perinatal mortality and morbidity findings, further adds to this information gap. For example, CCOPMM published its most recent Victoria's Mothers, Babies and Children 2019 report in January 2021 and reissued it in May 2021. As a result, DH and SCV remain limited in their ability to use the information CCOPMM holds to detect and prevent avoidable harm, including perinatal deaths, in a timely manner.

3. Producing and using information to identify and reduce risks

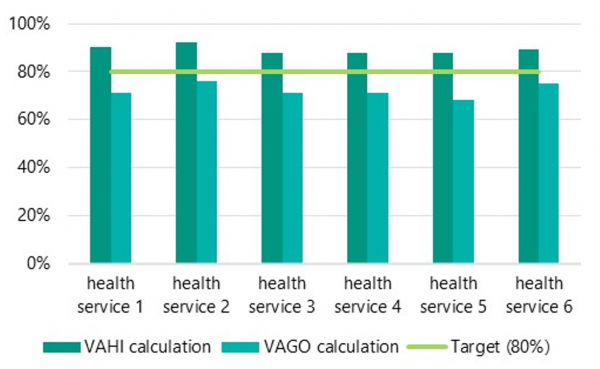

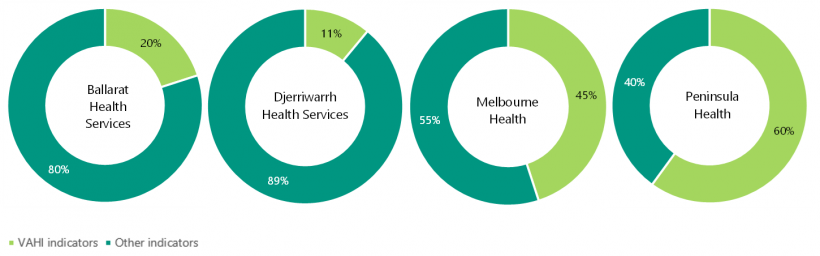

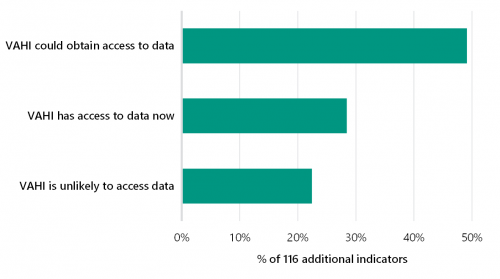

Conclusion