Responses to Performance Audit Recommendations 2015–16 to 2017–18

Overview

In our first Assurance Review, we looked at how public entities monitored and responded to performance audit recommendations made by VAGO between 2015–16 and 2017–18.

Nothing has come to our attention to indicate that overall, agencies are not effectively implementing past performance audit recommendations.

Most agencies report having governance arrangements that allow their senior management and audit committees to monitor progress in implementing our audit recommendations.

The effectiveness of these governance arrangements has enabled agencies to report that most of our recommendations are complete. However, they do not always complete recommendations as quickly as intended or needed. All agencies can work to improve this.

A minority of agencies do not provide necessary progress reports on audit recommendations to their audit committees or give their audit committees a role in assessing when recommendations are satisfactorily addressed. This prevents those committees from fulfilling their legislated oversight function.


Transmittal letter

Independent assurance report to Parliament

Ordered to be published

VICTORIAN GOVERNMENT PRINTER June 2020

PP No 137, Session 2018–20

President

Legislative Council

Parliament House

Melbourne

Speaker

Legislative Assembly

Parliament House

Melbourne

Dear Presiding Officers

Under the provisions of the Audit Act 1994, I transmit my report Responses to Performance Audit Recommendations 2015–16 to 2017–18.

Yours faithfully

Andrew Greaves

Auditor-General

17 June 2020

Acronyms


| Acronym | Meaning |
|---|---|
| DEDJTR | Department of Economic Development, Jobs, Transport and Resources |
| DELWP | Department of Environment, Land, Water and Planning |
| DET | Department of Education and Training |
| DHHS | Department of Health and Human Services |
| DJCS | Department of Justice and Community Safety |
| DJPR | Department of Jobs, Precincts and Regions |
| DPC | Department of Premier and Cabinet |
| DoT | Department of Transport |
| DTF | Department of Treasury and Finance |
| PSP | Precinct Structure Plan |
| VAGO | Victorian Auditor-General’s Office |
| VPA | Victorian Planning Authority |

Overview

Context

Through our performance audits, we identify opportunities for public agencies to improve how they work. We do this by uncovering risks, weaknesses and poor performance, as well as by sharing examples of better practice. We then make recommendations to agencies to address areas for improvement.

There is no legislative requirement for agencies to accept or implement our recommendations, or publicly report on actions they have taken. This means the Victorian public and parliamentarians often do not know what actions, if any, agencies have taken following an audit.

This assurance review provides insights into how agencies have addressed our audit findings.

We examined how public entities monitored and responded to our performance audit recommendations between 2015–16 and 2017–18. We focused on whether agencies:

- are implementing recommendations in a timely way

- have governance arrangements that enable their senior management and audit committees to monitor progress in implementing accepted recommendations.

Conclusion

Nothing has come to our attention to indicate that overall, agencies are not effectively implementing past performance audit recommendations. Most agencies report having governance arrangements that allow their senior management and audit committees to monitor progress in implementing our audit recommendations.

The effectiveness of these governance arrangements has enabled agencies to report that most of our recommendations are complete. However, they do not always complete recommendations as quickly as intended or needed. All agencies can work to improve this.

A minority of agencies do not provide necessary progress reports on audit recommendations to their audit committees or give their audit committees a role in assessing when recommendations are satisfactorily addressed. This prevents those committees from fulfilling their legislated oversight function.

Findings

Agency responses to recommendations

Of the 465 recommendations we made that are the subject of this report, 455 (or 98 per cent) were agreed to. As at December 2019, the status of the agreed recommendations was as follows:

- 60 per cent were complete

- 13 per cent were almost complete

- 25 per cent were in progress or had just begun

- 2 per cent were yet to begin.

On average, agencies took 15 months to complete a recommendation. The longest implementation time for a single recommendation was just over four years.

By comparison, when we surveyed agencies at the end of 2018, those that responded advised us they took on average only seven months to complete a recommendation.

Agencies initially set time frames for implementation for just over half of the accepted recommendations. Of these, they later revised and extended time frames in 42 per cent of cases.

Agencies were unable to fully realise the value of audit recommendations in about a quarter of all cases due to constraints or challenges. Examples included:

- securing funds and resources to operationalise recommendations

- the need to replace or update ICT systems

- an increased demand for frontline services that diverted attention from implementing recommendations.

The actions proposed by agencies in response to recommendations were adequate in 80 per cent of cases. Where we considered proposed actions inadequate, it was because agencies needed to provide more detail to clarify the steps they intend to take.

Progress made on proposed actions was adequate in 75 per cent of cases. Where we considered progress inadequate, delays in commencing and completing implementation were the main factor.

Monitoring and oversight arrangements

All but one of the 64 agencies assigned responsibility for implementing recommendations to an accountable individual or business unit.

Eighty-nine per cent of agencies reported that they set time frames to complete recommendations, which supports accountability. However, many agencies did not provide time frames for inclusion in the tabled audit report. Just over half of the recommendations in the survey had implementation time frames set at the time of the audit.

Ninety-two per cent of agencies reported monitoring progress on implementing all recommendations, while eight per cent monitor some based on risk assessments and consideration of relevance. Once an agency accepts a recommendation, failing to oversee its implementation creates a risk it will not be completed.

Ninety-five per cent of agencies reported that an accountable individual or business unit monitors progress on actions taken in response to audit recommendations. Eighty per cent of agencies advised that audit committees also play a role.

Portfolio monitoring

We also expected departments to oversee their portfolio agencies’ progress on performance audit recommendations. Under the Standing Directions 2018, issued as part of the Financial Management Act 1994, departments are responsible for supporting the relevant Minister in the oversight of portfolio agencies, including providing information on financial management and performance.

Only the Department of Treasury and Finance (DTF) reported that it monitors recommendation implementation for entities within its portfolio. The Department of Education and Training (DET), Department of Environment, Land, Water and Planning (DELWP) and the Department of Health and Human Services (DHHS) reported that they monitor progress for some but not all entities.

The remaining four departments (the Department of Jobs, Precincts and Regions (DJPR), Department of Justice and Community Safety (DJCS), Department of Premier and Cabinet (DPC) and Department of Transport (DoT)) reported that they do not monitor the progress of portfolio agencies on VAGO audit recommendations. These departments reported that individual entities monitor many of their own audit recommendations and have their own audit committees. The risk of this approach is that these departments may not be in a position to fully acquit their function of supporting the relevant Minister(s) in overseeing the performance of all portfolio agencies.

Reporting on progress

Agencies report on the progress of actions to a variety of individuals and business areas. Eighty-four per cent report progress to executive management, while 69 per cent of agencies provide progress updates to the Secretary or Chief Executive Officer.

Fifty-four agencies (84 per cent) provide regular progress reports to their audit committees, including all eight departments. Audit committees at the remaining agencies do not receive progress reports and/or do not play a role in monitoring actions taken to respond to recommendations. However, the Standing Directions 2018 require audit committees to consider the actions taken to resolve issues raised in external audits.

Just over half of agencies advised that their audit committees have final authorisation to close off actions as complete. Many agencies require approval from more than one committee or individual, such as the board and audit committee at health service providers, or executive management and the Chief Audit Executive at a department.

For 25 of the remaining 30 agencies, this authorisation sat with one or more senior leadership positions. The other five agencies identified the relevant business unit as having final authority to close off recommendations.

1 Context

We published 71 performance audits between 2015–16 and 2017–18.

In this review, we examined progress made by agencies on recommendations from 44 of those audits—including the 17 performance audit reports we published in 2017–18, for which we assessed the status of recommendations for the first time. The other 27 are audits from earlier years that had recommendations outstanding.

1.1 VAGO's role and audit activities

The Auditor-General provides independent assurance to the Parliament of Victoria and the Victorian community on the financial integrity and performance of the state. To provide assurance, the Auditor-General conducts three key activities:

- Performance audits—these focus on the efficiency, effectiveness and economy of government agencies and whether they comply with legislation.

- Financial audits—these examine the financial statements of an agency, providing assurance that the statements present fairly the financial position, cashflows and results of operations for the year.

- Assurance reviews—these may focus on either financial issues or matters of performance.

The difference between a review and an audit is the degree of assurance provided. An audit is designed to provide reasonable assurance; a review provides limited, but still meaningful, assurance.

The level of assurance is based on the extent and type of audit evidence obtained. For this reason, audits are sometimes described as providing ‘positive’ assurance and reviews as providing ‘negative’ assurance.

1.2 Why this review is important

This assurance review is important because it:

- provides greater transparency on the actions agencies take in response to audit findings and recommendations. This makes them more accountable for how they respond to our audits.

- gives agencies the opportunity to compare and learn from each other and so determine whether their actions, and the time taken to implement them, are reasonable and appropriate.

1.3 Performance audits

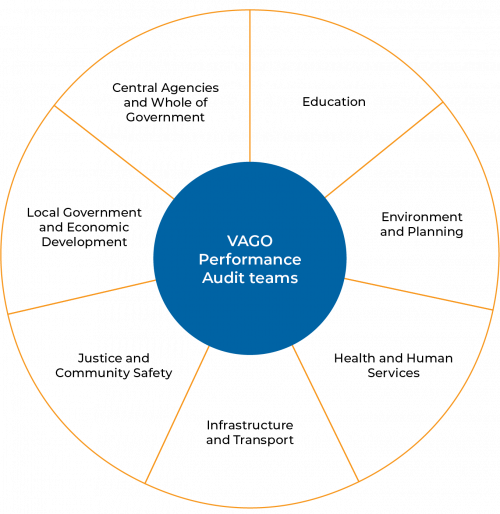

Each year, we undertake a range of performance audits covering seven portfolio areas, as shown in Figure 1A.

Figure 1A

VAGO portfolio teams

Source: VAGO.

Audit purpose and outputs

Our performance audits assess whether government agencies have effective programs and services and whether they are using resources economically and efficiently. We also identify activities that work well and reflect better practice.

Our reports contain recommendations for audited agencies to address deficiencies and improve key aspects of their operations. Under the Audit Act 1994, the Auditor-General must ask audited agencies for comments or submissions on proposed reports. As part of this submission, we request agencies respond to our audit recommendations by stating:

- whether they accept them

- what action they will take

- anticipated time frames for implementation.

We publish these responses in the final report tabled in Parliament.

The Standing Directions 2018 require each agency's audit committee to review implementation of actions to address our recommendations.

1.4 Assurance reviews

Under amendments to the Audit Act 1994, effective from 1 July 2019, VAGO has the power to conduct assurance reviews. We have the discretion to determine when an assurance review should occur, and what form it will take.

We use our assurance review powers to examine more targeted, limited scope, and time-sensitive issues that do not warrant a full performance audit. We may conduct assurance reviews on the basis of:

- issues we identify when carrying out our other audit activities

- information provided to us by other integrity offices, parliamentarians or members of the community.

Tracking agency responses and progress

VAGO has tracked agency responses to our audit recommendations since 2015 through a follow-up survey.

We use this survey to determine which past audits we should follow up on.

We also use the results of the survey to report to Parliament, in our Budget Papers and our Annual Report, the percentage of accepted performance audit recommendations that audited agencies report as implemented across a two-year period. Our 2019–20 target for this performance measure is 80 per cent, which we expect to achieve.

This year we have taken the further step of publicly reporting on each agency’s progress through this assurance review.

1.5 Oversight of agency responses to audits

All public sector agencies are responsible for monitoring their progress in implementing the audit recommendations that we make to them and that they accept.

Audit committees

Agencies subject to the Financial Management Act 1994 are, under the Standing Directions 2018, required to establish an audit committee. Their role with regard to external audits is to:

- maintain effective communication with external auditors

- consider recommendations made by external auditors and the actions to be taken to resolve issues raised

- review actions taken in response to external audits, including actions to rectify poor performance.

External audits include those undertaken by VAGO.

Agency reporting

Agencies may choose to report publicly on the extent to which they implement and oversee audit recommendations. They are not required to do so, but public reporting promotes transparency and encourages greater accountability across the public sector.

1.6 What the review examined and how

Objective

Our review objective was to assess whether agencies have effectively addressed our past performance audit recommendations. The review examined whether agencies:

- can demonstrate they are implementing recommendations in a timely way

- have governance arrangements that enable senior management and audit committees to monitor progress in implementing accepted performance audit recommendations.

Review scope and methods used

The review included all public sector agencies that received recommendations through VAGO performance audits:

- tabled in Parliament in 2017–18

- tabled in 2016–17 or 2015–16 and had outstanding recommendations from audits as of November 2018.

In total, the review examined 44 audits, 64 agencies and 465 recommendations, as set out in Figure 1B.

Figure 1B

Audits and recommendations included in assurance review

| | 2017–18 | 2016–17 | 2015–16 | Total |
|---|---|---|---|---|
| Audits | 17 | 15 | 12 | 44 |
| Recommendations | 284 | 149 | 32 | 465 |
| Agencies* | | | | 64 |

Note: *Data not shown by year as agencies can be included in multiple audits in multiple years.

Source: VAGO.

We asked agencies to complete an online survey comprising three parts:

- Part 1—monitoring and oversight arrangements at agencies to track implementation of audit recommendations, including audit committee processes

- Part 2—perceived value of the audit to the agency or sector

- Part 3—detailed information about agency responses to each audit recommendation, including the level of acceptance, actions taken, current status, and the timing of implementation.

Agencies self-attested to the accuracy and completeness of their survey responses when submitting them.

We then assessed agency submissions against criteria to determine whether in our view:

- actions taken by agencies directly address the recommendation and are being implemented in a timely way

- governance arrangements are adequate to ensure senior management can monitor progress in implementing accepted performance audit recommendations.

We list audits and agencies included in this review in Appendix B.

The review was conducted in accordance with the relevant Australian Standard on Assurance Engagements ASAE 3000 Assurance Engagements other than Audits or Reviews of Historical Financial Information.

VAGO will continue to track agency progress in responding to performance audit recommendations on an annual basis.

Previous recommendations

For audits that tabled in 2015–16 and 2016–17, this review only included recommendations that were not complete in our previous survey, conducted in November 2018.

This review does not focus on the recommendations from these audits that agencies have already reported as implemented. To provide a complete picture of their performance, we have included the status and response of the agency to all recommendations in each audit in Appendix C. This information is also available in dashboard form at www.audit.vic.gov.au.

The total cost of the assurance review was $290 000.

1.7 Submissions

As required by the Audit Act 1994, we gave a draft copy of this report to all relevant agencies and asked for their submissions or comments.

DET, DHHS, DJPR, DoT and Victoria Police responded. The following is a summary of those responses. The full responses are included in Appendix A.

- DET welcomed the review and stated that the department also monitors progress on actions that progressively address recommendations.

- DHHS noted additional responsibilities of the Audit Committee.

- DJPR provided detail on arrangements in place to support oversight responsibilities of the Minister.

- DoT welcomed the review.

- Victoria Police welcomed the review and noted its existing governance structures and completion of recommendations.

1.8 Report structure

The remainder of this report is structured as follows:

- Part 2 examines the extent to which agencies have accepted and implemented performance audit recommendations.

- Part 3 examines what arrangements agencies have to monitor and oversee the implementation of audit recommendations.

2 Agency responses to recommendations

This Part provides information on the extent to which agencies accept and implement our performance audit recommendations, including their timeliness.

2.1 Conclusion

Nothing has come to our attention to indicate that, overall, agencies are not effectively implementing past performance audit recommendations.

Most agencies accepted audit recommendations and have taken action to complete or progress their implementation. However, this action is not always timely, which all agencies can work to improve.

2.2 Accepting recommendations

Acceptance at time of tabling

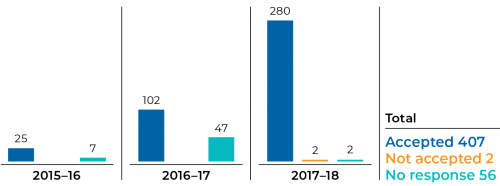

Before we table a report, we ask agencies to tell us whether they accept our audit recommendations. Of the 465 recommendations included in this review, the audited agency accepted or supported 407 (88 per cent) at the time of the audit. Two recommendations were not accepted. For the remaining 56 (12 per cent), agencies either did not respond, or did not clearly indicate support for a recommendation.

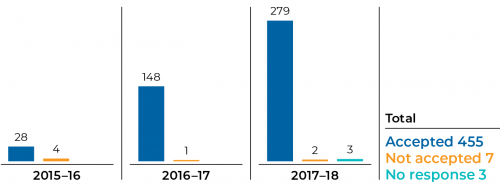

Acceptance at the time of this review

At the time of this review, we asked agencies again whether they now accepted or did not accept our audit recommendations. This enabled us to gather new data including from those agencies that did not respond previously.

Agencies accepted 455 of the 465 recommendations (98 per cent) and rejected six. Four recommendations required no further action due to a change in government policy. Acceptance levels were higher for audits tabled in 2017–18 and 2016–17 (98 and 99 per cent respectively) than 2015–16 (87 per cent).

Figures 2A and 2B show agency acceptance at the time of tabling and at the time of this review.

Figure 2A

Acceptance of recommendations at time of tabling

Source: VAGO.

Figure 2B

Acceptance of recommendations at time of assurance review

Source: VAGO.

Agencies provided clear reasons when they did not accept recommendations. These included:

- legislative change and the introduction of a broader reform strategy meaning recommendations were no longer relevant (three recommendations)

- the agency saying that the recommendation would duplicate services provided by other agencies (one)

- the agency preferring not to go beyond mandatory compliance requirements (two).

The following explanation provided by DTF illustrates this final point.

Figure 2C

Case study: DTF

Audit: Internal Audit Performance (2017–18)

Recommendation 5: DELWP, DHHS, Department of Justice and Regulation (DJR), DPC and DTF complete a self-assessment of compliance with the International Standards for the Professional Practice of Internal Auditing (the IIA Standards), consistent with the adoption of the IIA Standards in their internal audit charters, and report the results and action plans to address gaps to the audit committee, and conduct future assessments annually.

Does agency currently accept recommendation: No

Explain why: From a whole of government framework perspective, DTF confirms that application of the International Standards for the Professional Practice of Internal Auditing is supplementary to the mandatory requirement of the Standing Directions (the Directions). Moreover, the Financial Management Act 1994 only permits the mandating of Australian accounting standards. The Department does not consider that conducting a complete self-assessment of compliance with the international standards as a necessary part of the internal audit cycle. The appropriateness of such a review for individual agencies may depend on the nature, complexity and scale of their operations.

Source: VAGO.

2.3 Completing recommendations

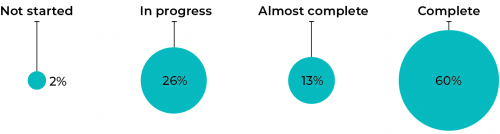

Agencies attested to us that they have completed 60 per cent of the 455 accepted recommendations. Figure 2D shows implementation status by category.

Figure 2D

Recommendation status 2015–16 to 2017–18

Source: VAGO.

Overall, agencies have taken some form of action towards implementing almost all audit recommendations (98 per cent). This indicates a high level of engagement by agencies to address areas for improvement.

Agencies reported that 13 per cent of accepted recommendations were almost complete, with a further 25 per cent in progress. Implementation had not begun for seven recommendations (2 per cent).

Implementation by year

Figure 2E shows the status of recommendations by audit year.

Figure 2E

Recommendation status by year

Source: VAGO.

2017–18 audits

Of audits we tabled in 2017–18, agencies reported that they have completed 168 (60 per cent) of the 279 accepted recommendations. Actions were underway on a further 104 recommendations.

No action had been taken for seven recommendations. Six of these recommendations were directed to DHHS in the performance audit Victorian Public Hospital Operating Theatre Efficiency. DHHS advised us that action had not begun because:

- three recommendations were reallocated due to machinery of government changes. Two were transferred to Safer Care Victoria and one to the Victorian Agency for Health Information. We note, however, that these are administrative offices within DHHS.

- two recommendations were dependent on the outcome of a third, which was in progress but was not expected to be completed until June 2020.

- one had not started due to competing priorities. The department advised us the implementation date for the recommendation was July 2020.

DHHS advised us in May 2020 that implementation had commenced for one recommendation that had been reallocated and two recommendations remaining with the department.

2016–17 audits

Eighty-seven of the 148 accepted recommendations (59 per cent) from 2016–17 audits are now complete. While actions have begun for most of the outstanding recommendations, it is two and a half years since they were made. This is an excessive delay.

2015–16 audits

Recommendations from older audits are more likely to be implemented as agencies have had more time. Despite this, some recommendations still remain outstanding for 2015–16 audits, with eight of the 28 remaining recommendations (29 per cent) in the review unresolved. These recommendations are more than three years old and are directed at eight different agencies across seven different audits.

These agencies should act immediately to resolve these long outstanding recommendations to ensure the risks we identified have been addressed and to make necessary improvements.
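The per-year figures in this section can be cross-checked against the overall completion rate. The following is an illustrative sketch only, not part of the review method. It uses the counts reported above and assumes that the 20 recommendations from 2015–16 audits not described as unresolved (28 less 8) count as complete. The function name `pct` is introduced here for illustration.

```python
# Accepted recommendations by audit year, as reported in this Part.
accepted = {"2017-18": 279, "2016-17": 148, "2015-16": 28}

# Completed recommendations by audit year. The 2015-16 figure is an
# assumption: 28 accepted less the 8 reported as unresolved.
completed = {"2017-18": 168, "2016-17": 87, "2015-16": 28 - 8}


def pct(part, whole):
    """Return part/whole as a whole-number percentage."""
    return round(100 * part / whole)


rates = {year: pct(completed[year], accepted[year]) for year in accepted}
total_accepted = sum(accepted.values())
overall = pct(sum(completed.values()), total_accepted)

for year, rate in rates.items():
    print(f"{year}: {rate} per cent complete")
print(f"Overall: {overall} per cent of {total_accepted} accepted recommendations")
```

Under that assumption, the yearly counts sum to the 455 accepted recommendations and reproduce the overall completion rate of 60 per cent.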

Implementation by agency

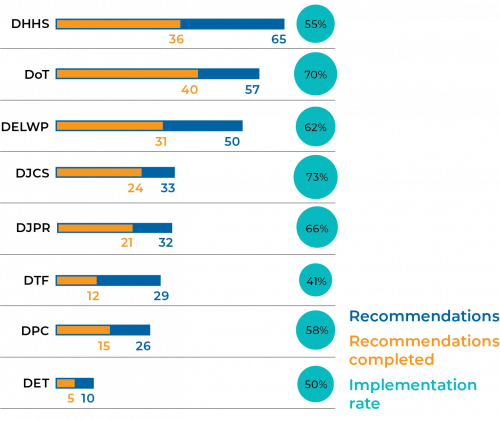

Departments

The eight government departments have a greater number of accepted and unfinished recommendations than other types of agencies. This is largely because they are included in more VAGO audits.

DET had the fewest outstanding recommendations at the commencement of the review, with 10. DHHS had the most, with 65 recommendations to address.

DJCS had the highest implementation rate of recommendations in the review, reporting completion of 24 of its 33 recommendations (73 per cent). DoT also reported a high completion rate, reporting that it had implemented 40 of the 57 surveyed recommendations in full (70 per cent).

DTF had a lower implementation rate, with just 12 of 29 recommendations completed (41 per cent). Despite having the fewest recommendations among departments, DET's implementation rate was also low, having implemented just half of its outstanding recommendations. Figure 2F sets out departmental implementation rates.

Figure 2F

Departmental implementation rates

Source: VAGO.

Agencies other than departments

Twenty of the 56 non-departmental agencies included in this review reported that they had completed all their recommendations. These agencies had four or fewer recommendations.

A full list of agency implementation status is included in Appendix C and on the review dashboard at www.audit.vic.gov.au.

2.4 Timeliness of implementation

Average implementation times

On average, agencies took 457 calendar days, or 15 months, to implement a recommendation after the audit was tabled in Parliament. This was a significant increase on our 2018 survey results, where the average implementation time was reportedly 205 days, or nearly seven months.
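The conversion from calendar days to months in these figures can be reproduced with a short calculation. This is a minimal sketch that assumes an average month length of 365.25 / 12 days (about 30.44). The report does not state which convention was used, so the constant `DAYS_PER_MONTH` and the function `days_to_months` are illustrative only.

```python
# Assumed average month length in days (about 30.44). This convention is
# an assumption, not taken from the report.
DAYS_PER_MONTH = 365.25 / 12


def days_to_months(days):
    """Convert calendar days to months using the assumed average month length."""
    return days / DAYS_PER_MONTH


for label, days in [("2019 review average", 457), ("2018 survey average", 205)]:
    print(f"{label}: {days} days is about {days_to_months(days):.1f} months")
```

On this basis, 457 days is about 15 months and 205 days is a little under seven months, consistent with the figures reported.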

The time needed to implement recommendations varies because they differ widely in scope and complexity. There are also vast differences in agency size and resources.

The apparent increase in implementation times this year may in part be due to the higher response rate to the assurance review survey. In previous years participation was voluntary. In our 2018 survey, we received updated information for 78 per cent of recommendations. Our 2019 assurance review survey had a 100 per cent response rate.

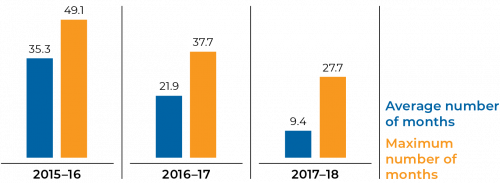

Figure 2G shows the average and maximum implementation times by year for recommendations included in this review.

Figure 2G

Average and maximum number of months to implement recommendations

Source: VAGO.

2015–16 recommendations

As expected, outstanding recommendations from 2015–16 audits had the longest implementation times, with an average of 1 060 days (two years, 11 months). Some of these recommendations involved the establishment of monitoring, reporting and evaluation frameworks, the development of performance indicators and the rollout of new training programs to staff.

While many recommendations from 2015–16 audits have been implemented in previous years and are no longer outstanding, eight recommendations still remain open following this review.

Maximum implementation times

The maximum implementation time for a single completed recommendation in the survey was just over four years. This case study is set out in Figure 2H.

Figure 2H

Case Study: DJPR

Audit: Biosecurity: Livestock (2015–16)

Recommendation 4: That the Department of Economic Development, Jobs, Transport and Resources (DEDJTR) adopts a systematic approach to

This recommendation was initially made to the former DEDJTR and was accepted. When the audit was tabled in August 2015, DEDJTR provided a detailed agency action plan indicating it would complete recommendation 4 by 30 June 2016. In our 2017 survey, DEDJTR advised the recommendation was between 51 and 75 per cent complete and the implementation date was revised to July 2019. DEDJTR provided the following reasons for the change:

In our 2018 survey, DEDJTR advised us that the recommendation was between 75 and 99 per cent implemented and no further changes had been made to the timeline for completion of July 2019. DJPR was formed in 2019 and the recommendation was reallocated from DEDJTR. DJPR advised that the recommendation had been fully implemented with completion achieved prior to the time frame of July 2019. Departmental processes to validate supporting evidence of implementation were finalised in September 2019. The time elapsed from tabling of the report to completion was 1 474 days, or just over four years.

Source: VAGO.

Revised implementation times

We request that agencies set expected time frames for implementation when an audit is complete. Agencies established initial time frames for completion for just 232 of the 455 accepted recommendations (51 per cent). Of these, they later revised time frames in 98 cases (42 per cent).
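The two percentages above use different bases, which is easy to overlook. As an illustrative sketch using only the counts reported in this section (the variable names are introduced here for illustration):

```python
accepted = 455         # accepted recommendations
with_time_frame = 232  # had an initial time frame for completion
revised = 98           # time frame later revised

# Share of accepted recommendations that had an initial time frame.
share_with_time_frame = round(100 * with_time_frame / accepted)

# Share of those time frames that were later revised (base is 232, not 455).
share_revised = round(100 * revised / with_time_frame)

# The same 98 revisions expressed as a share of all accepted recommendations.
share_revised_of_all = round(100 * revised / accepted)

print(share_with_time_frame, share_revised, share_revised_of_all)
```

The 42 per cent figure is measured against the 232 recommendations that had a time frame. Measured against all 455 accepted recommendations, the revised time frames represent about 22 per cent.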

By agency

Some agencies have been slow to implement performance audit recommendations. Implementation times for individual recommendations have extended up to four years after the audit tabling date.

Agencies, audits and recommendations that have taken significant time frames to implement are listed in Figure 2I.

Figure 2I

Recommendations with extended implementation times

| Audit name | Agency | Rec no. | Months to complete |
|---|---|---|---|
| Biosecurity: Livestock | DJPR | 4 | 49 |
| Implementing the Gifts, Benefits and Hospitality Framework | National Gallery of Victoria | 6 | 47 |
| Biosecurity: Livestock | DJPR | 5 | 43 |
| Patient Safety in Victorian Public Hospitals | DHHS | 13 | 42 |
| Local Government Service Delivery: Recreational Facilities | City of Greater Bendigo | 1 | 39 |
| Local Government Service Delivery: Recreational Facilities | Moreland City Council | 1 | 39 |
| Public Safety on Victoria's Train System | Victoria Police | 3 | 39 |

Source: VAGO.

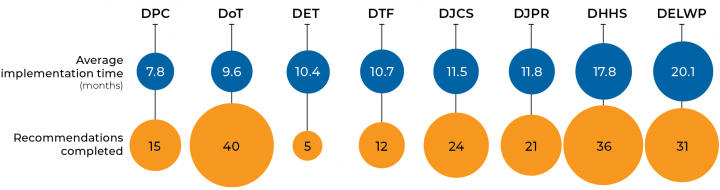

The average time taken for a department to implement an audit recommendation is between eight and 20 months.

DELWP had the longest average implementation times of any department. Its 31 completed recommendations required on average 20 months to implement in full. DHHS followed, at 17.8 months.

All remaining departments had an average implementation time of less than a year. DPC was the most timely, implementing its 15 completed recommendations within eight months on average. Figure 2J shows average departmental implementation times.

Figure 2J

Average implementation times — departments

Source: VAGO.

2.5 Challenges to implementing recommendations

We asked agencies if there were any constraints or challenges, aside from the time frames required, that would impact on their ability to fully realise the value of the audit recommendation. Agencies advised us of further implementation challenges for 27 per cent of recommendations (122). Common obstacles identified across agency responses included:

- resourcing and the need to take staff away from other responsibilities

- financial/budgetary constraints and impact on other program areas

- competing priorities

- workforce attraction and retention

- infrastructure challenges and physical constraints

- increase in demand and pressure on frontline services

- outdated and unsupported ICT systems.

Figures 2K and 2L provide specific examples.

Figure 2K

Case study—City of Greater Bendigo

|

Audit: Local Government Service Delivery: Recreational Facilities (2015–16) Agency: City of Greater Bendigo Recommendation: Councils should improve aquatic recreation centre monitoring, reporting and evaluation activities so that they can demonstrate the achievement of council objectives and outcomes. Internal or external constraints or challenges that will impact on the agency's ability to fully realise the value of this audit recommendation: Financial constraints. The cost of providing this service continues to rise significantly as a result of revised Guidelines for Safe Pool Operations (Royal Life Saving Society of Australia) and Health Act. The revised Guidelines for Safe Pool Operations require increased staffing at small rural sites with low attendance numbers and regular independent facility safety audits in addition to Council’s. There is also increased regulation as a result of the revised Health Act which requires licencing of sites and increased independent water testing of public swimming pools. The cost increases for this service have the potential to reduce operating hours/service levels and may result in programs/activities which deliver benefit to the community ceasing. With increased and changing compliance requirements and limited resources, Council’s focus is upon meeting regulatory standards rather than monitoring, evaluating and changing the service to produce the best outcomes for the community. |

Source: VAGO.

Figure 2L

Case study—Victorian Planning Authority

|

Audit: Effectively Planning for Population Growth (2017–18) Agency: Victorian Planning Authority (VPA) Recommendation: Implement the Plan Melbourne 2017–2050 action to 'prepare a sequencing strategy for precinct structure plans in growth areas for the orderly and coordinated release of land and the alignment of infrastructure plans to deliver basic community facilities with these staged land-release plans'. Internal or external constraints or challenges that will impact on the agency's ability to fully realise the value of this audit recommendation: The VPA has implemented a number of sequencing measures in response to this recommendation. The VPA has strengthened the criteria-based prioritisation of its Precinct Structure Plan (PSP) program and is moving to improve guidance on the staging of development within PSPs. In addition, the VPA has instituted a new process for reporting to Government on the infrastructure demands arising from its planning work in order to better inform agency business case and funding decisions. While the VPA prepares place-based structure plans for designated areas, planning for infrastructure networks and services is the responsibility of infrastructure providers. Each provider operates according to agency priorities and government budgetary constraints, both in relation to the quantum of funds available, and in the four-year horizon imposed by the budget cycle. Delivery of infrastructure is a shared responsibility across multiple government agencies, so ensuring effective coordination is an ongoing challenge. Given that the VPA has no formal powers to coordinate or direct infrastructure providers in the way they undertake planning or delivery, the VPA’s approach is to inform and advocate within government for the timely delivery of infrastructure. This reliance on influencing and advocacy makes it difficult for the VPA to authoritatively promulgate a comprehensive and binding whole-of-government sequencing strategy. 
Finally, the VPA has limited resources to allocate to progressing further sequencing work. |

Source: VAGO.

2.6 Adequacy of agency actions

We assessed the adequacy of:

- the proposed agency action in response to a recommendation

- progress made to date on the agency's action.

We judged that the action proposed by an agency in response to an accepted recommendation was adequate in 80 per cent of cases. Two per cent of recommendations were excluded because details provided by agencies on what actions they would take were insufficient to be assessed.

We assessed the remaining 18 per cent of proposed actions as inadequate because:

- the agency response lacked clarity about how it would address the issues raised, or the agency was non-committal about what actions it would take

- some aspects of the recommendation were not addressed

- agencies did not collaborate on responses where collaboration was required.

We judged that progress made against proposed actions was adequate in 75 per cent of cases. Where progress was considered inadequate, delays in commencing and completing implementation were the main factor.

3 Monitoring and oversight of recommendations

We asked the 64 agencies included in this review to provide information on how they monitor the implementation of performance audit recommendations.

This Part provides information on how agencies allocate responsibility for tracking proposed actions. We also considered what arrangements are in place for agencies to review progress and close off recommendations when complete.

3.1 Conclusion

Based on our work and the information obtained, nothing has come to our attention to indicate that most agencies do not have appropriate governance arrangements in place.

Most agencies (80 per cent) have governance arrangements that enable senior management and their audit committees to monitor progress in implementing our audit recommendations.

However, a minority of agencies do not enable their audit committees to fully acquit their legislative function to oversee the implementation of external audit recommendations.

3.2 Accountability for action

When an audit is complete, we ask that agencies develop an action plan. This plan should establish what action they intend to take, and by when, in response to any recommendation directed to them.

Responsibility for actions

Assigning responsibility for actions to an individual or business unit is good practice to ensure recommendations are acted on. It provides a clear line of accountability for timely and comprehensive implementation.

We found 63 of the 64 agencies assign responsibility for actions in response to performance audit recommendations to an accountable individual or business unit.

Only the East Gippsland Shire Council did not take this approach. It explained that specific actions supporting the recommendation were identified and assigned to individuals and business units, but there was no strategic owner of the audit itself or for each recommendation.

3.3 Accountability for monitoring

Fifty-nine of the 64 agencies in this review (92 per cent) reported that they monitor the progress of actions taken in response to all VAGO performance audit recommendations.

Five agencies reported they monitor some, but not all, recommendations. Of these, four reported that they decide which recommendations to monitor by assessing risk and relevance. One agency advised us it was establishing a more robust process to monitor and follow up recommendations through a register.

Where agencies accept a recommendation, they have the responsibility to oversee its implementation. Failure to do so creates a risk the recommendation will not be acted on.

Setting timelines for implementation

Providing timelines helps agencies to manage work schedules and staff responsibilities, while addressing risks and gaining efficiencies in a timely way.

Most agencies (89 per cent) reported they set indicative time frames for tracking the completion of audit recommendations.

Agencies most often considered resource availability when they set a due date to implement recommendations, with 92 per cent identifying it as a consideration point. This was closely followed by risk level (91 per cent) and recommendation complexity (83 per cent).

Frequency of monitoring

Sixty-one agencies (95 per cent) reported that progress on actions taken in response to audit recommendations was monitored by an accountable individual or business unit. Most often, this occurred on a monthly basis.

Eighty per cent of agencies (51 of 64) advised us that audit committees also played a role. How frequently committees monitor progress varies among agencies, with most doing so every quarter (34 agencies).

Monitoring the response of portfolio agencies

DTF reported that it monitored progress against VAGO audit recommendations for eight entities within its portfolio. Three departments (DET, DELWP and DHHS) reported that they monitor progress for some but not all entities. DHHS monitors public health services, and DELWP reported that it monitors more than 90 agencies. DET monitors the Adult, Community and Further Education Board.

DJPR, DJCS, DPC and DoT stated that they do not monitor portfolio agencies' progress against VAGO audit recommendations, as these are monitored at the individual entity level. DJPR, DJCS and DPC indicated that portfolio agencies had their own audit committees with oversight responsibilities.

Under the Standing Directions 2018, departments are responsible for supporting the relevant Minister in the oversight of portfolio agencies, including providing information on financial management and performance. On this basis we would expect departments to oversee their portfolio agencies’ progress against performance audit recommendations.

Reporting progress

Agencies report on the progress of actions to a variety of individuals and business areas. Eighty-four per cent report progress to executive management, while about two thirds (69 per cent) of agencies provide progress updates to the Secretary or Chief Executive Officer.

Fifty-four agencies (84 per cent) provide regular progress reports to their audit committees, including all eight departments. However, none of the remaining 10 agencies, half of which are councils, report on progress to their audit committee. Eight of these agencies advised their audit committee had no role in monitoring the actions taken in response to recommendations.

This approach does not meet the requirements of the Standing Directions 2018, under which audit committees must consider the actions taken to resolve issues raised in external audits and review the actions taken in response to them.

All agencies with outstanding recommendations had processes to deal with incomplete or late recommendations. Most included escalation to the Chief Executive Officer, audit committee or executive management, a review of actions taken and development of a revised response and timeline to assist completion.

Closing recommendations

We asked agencies to advise who has final authority for closing off recommendations. Thirty-four of the 64 agencies in the review (53 per cent) reported that their audit committees filled this role. At 16 agencies, the audit committee shared this authority with the Board or Council, Secretary, Chief Executive Officer or Leadership Team.

For 25 of the remaining 30 agencies, this authority sat with one or more senior leadership positions. Five agencies identified the relevant business unit as having final authority to close off recommendations.
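The counts above can be checked for completeness with a short tally. This is a minimal sketch, assuming the 16 agencies where the audit committee shares authority are counted within the 34 (which is consistent with the text's "remaining 30 agencies"); the category labels are illustrative.

```python
TOTAL_AGENCIES = 64

# Final authority for closing off recommendations, as reported in this section.
closing_authority = {
    "audit committee (alone or shared)": 34,
    "senior leadership position(s)": 25,
    "relevant business unit": 5,
}

# Every agency in the review should fall into exactly one category.
assert sum(closing_authority.values()) == TOTAL_AGENCIES

for holder, count in closing_authority.items():
    pct = round(100 * count / TOTAL_AGENCIES)
    print(f"{holder}: {count} agencies ({pct} per cent)")
```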

Under the Standing Directions 2018, audit committees have a role in reviewing the actions taken in response to external audits. Committees are also required to determine whether actions taken resolve the issues raised. Current practices at some agencies do not meet these requirements.

Appendix A. Submissions and comments

We have consulted with the agencies listed in Appendix B, and we considered their views when reaching our review findings. As required by the Audit Act 1994, we gave a draft copy of this report, or relevant extracts, to those agencies and asked for their submissions and comments.

Responsibility for the accuracy, fairness and balance of those comments rests solely with the agency head.

Responses were received as follows:

RESPONSE provided by the Associate Secretary, DET

RESPONSE provided by the Associate Secretary, DHHS

RESPONSE provided by the Associate Secretary, DJPR

RESPONSE provided by the Associate Secretary, DoT

RESPONSE provided by the Chief Commissioner, Victoria Police

Appendix B. Audits and agencies

Figure B1

2017–18 audits and agencies

| Audit | Date tabled | Audited agencies |
|---|---|---|
| Assessing Benefits from the Regional Rail Link Project | 10-05-2018 | Department of Premier and Cabinet, Department of Transport, Department of Treasury and Finance, V/Line |
| Community Health Program | 06-06-2018 | Department of Health and Human Services |
| Effectively Planning for Population Growth | 23-08-2017 | Department of Education and Training, Department of Environment, Land, Water and Planning, Department of Health and Human Services, Department of Jobs, Precincts and Regions, Victorian Planning Authority |
| Follow-up of selected 2012-13 and 2013-14 Performance Audits: Managing Victoria's Native Forest Timber Resources | 20-06-2018 | Department of Environment, Land, Water and Planning, |
| Fraud and Corruption Control | 29-03-2018 | Department of Jobs, Precincts and Regions, |
| ICT Disaster Recovery Planning | 29-11-2017 | Department of Environment, Land, Water and Planning, |
| Improving Victoria's Air Quality | 08-03-2018 | Department of Environment, Land, Water and Planning, |
| Internal Audit Performance | 09-08-2017 | Department of Education and Training, |
| Local Government and Economic Development | 08-03-2018 | Bass Coast Shire Council, |
| Maintaining the Mental Health of Child Protection Practitioners | 10-05-2018 | Department of Health and Human Services |
| Managing Surplus Government Land | 08-03-2018 | Department of Education and Training, |
| Managing the Level Crossing Removal Program | 14-12-2017 | Department of Transport, |
| Protecting Victoria's Coastal Assets | 29-03-2018 | Department of Environment, Land, Water and Planning, |
| Safety and Cost Effectiveness of Private Prisons | 29-03-2018 | Department of Justice and Community Safety, |
| The Victorian Government ICT Dashboard | 20-06-2018 | Department of Health and Human Services, Department of Premier and Cabinet, |
| V/Line Passenger Services | 09-08-2017 | Department of Transport, |
| Victorian Public Hospital Operating Theatre Efficiency | 18-10-2017 | Alfred Health, |

Source: VAGO.

Figure B2

2016–17 audits and agencies

| Audit | Date tabled | Audited agencies |
|---|---|---|
| Board Performance | 11-05-2017 | Department of Health and Human Services, |
| Effectiveness of the Environmental Effects Statement Process | 22-03-2017 | Department of Environment, Land, Water and Planning |
| Effectiveness of the Victorian Public Sector Commission | 08-06-2017 | Victorian Public Sector Commission |
| Efficiency and Effectiveness of Hospital Services: Emergency Care | 26-10-2016 | Albury Wodonga Health, |
| ICT Strategic Planning in the Health Sector | 24-05-2017 | Ballarat Health Services, |
| Maintaining State-Controlled Roadways | 22-06-2017 | Department of Transport |
| Managing Community Corrections Orders | 08-02-2017 | Department of Health and Human Services, |
| Managing Public Sector Records | 08-03-2017 | Department of Education and Training, |
| Managing School Infrastructure | 11-05-2017 | Department of Education and Training |
| Managing the Performance of Rail Franchisees | 07-12-2016 | Department of Transport |
| Managing Victoria's Planning System for Land Use and Development | 22-03-2017 | Department of Environment, Land, Water and Planning |
| Managing Victoria's Public Housing | 21-06-2017 | Department of Health and Human Services, |
| Public Participation and Community Engagement: Local Government Sector | 10-05-2017 | Cardinia Shire Council, |
| Public Participation in Government Decision-Making | 10-05-2017 | Department of Environment, Land, Water and Planning, Department of Health and Human Services, |
| Regulating Gambling and Liquor | 08-02-2017 | Victoria Police |

Source: VAGO.

Figure B3

2015–16 audits and agencies

| Audit | Date tabled | Audited agencies |
|---|---|---|
| Biosecurity: Livestock | 19-08-2015 | Department of Jobs, Precincts and Regions |
| Bullying and Harassment in the Health Sector | 23-03-2016 | Department of Health and Human Services |
| Delivering Services to Citizens and Consumers via Devices of Personal Choice: Phase 2 | 07-10-2015 | State Revenue Office |
| Digital Dashboard: Status Review of ICT Projects and Initiatives - Phase 2 | 09-03-2016 | City West Water Corporation, |
| Implementing the Gifts, Benefits and Hospitality Framework | 10-12-2015 | National Gallery of Victoria |
| Local Government Service Delivery: Recreational Facilities | 23-03-2016 | City of Greater Bendigo, |
| Managing and Reporting on the Performance and Cost of Capital Projects | 04-05-2016 | Coliban Region Water Corporation, |
| Monitoring Victoria's Water Resources | 25-05-2016 | Department of Environment, Land, Water and Planning, |
| Patient Safety in Victorian Public Hospitals | 23-03-2016 | Department of Health and Human Services |
| Public Safety on Victoria's Train System | 24-02-2016 | Victoria Police |
| Realising the benefit of Smart Meters | 16-09-2015 | Department of Environment, Land, Water and Planning |
| Unconventional Gas: Managing Risks and Impacts | 19-08-2015 | Department of Jobs, Precincts and Regions |

Source: VAGO.

Appendix C. Agency responses to Assurance Review

To gather relevant information for this review, we surveyed agencies in November 2019. Agencies were required to self-attest to the accuracy and completeness of their survey response.

The survey sought information on agency governance and monitoring arrangements along with information on the status of performance audit recommendations:

- from all VAGO performance audits tabled in Parliament in 2017–18

- that were reported to us in our 2018 survey as outstanding from audits tabled in 2016–17 and 2015–16.

In total, the survey included 465 recommendations from 44 audits involving 64 agencies.

For audits tabled in 2016–17 and 2015–16, agencies may have already implemented some recommendations. To provide a more complete picture of agency performance, in this Appendix we have included the status and response of the agency to all recommendations in each audit included in the Assurance Review survey. This includes recommendations that have been implemented in previous years. Agency responses have not been edited by VAGO.

Click the link below to download a PDF copy of the survey responses.

Click here to download survey responses PDF

This information is also available in dashboard form at www.audit.vic.gov.au.