Fair Presentation of Service Delivery Performance: 2024

Review snapshot

Do Victorian Government departments fairly present their service delivery performance in their performance statements?

Why we did this review

All government departments publish a performance statement each year. This is the main source of information for the Parliament and the community to understand how well the government provides publicly funded services. It is important that departments fairly present information in performance statements so the Parliament and the community can easily understand their responsibilities and performance.

We started doing annual reviews in 2022 to assess if departments fairly present changes to their performance statements. Each year we choose a focus department to examine in detail. This year we assessed if the Department of Treasury and Finance’s (DTF) performance statement helps users understand its service delivery responsibilities and performance.

Our 2022 and 2023 reviews found that departments:

- did not fairly present their service delivery performance

- did not adequately explain all changes to their department’s performance statement, such as changes to their objectives and performance measures.

Key background information (figure not reproduced). Source: VAGO.

What we concluded

There are systemic issues with the Resource Management Framework’s (RMF) requirements and how departments apply them. This means that the Parliament and the community cannot properly assess performance and cannot fully hold government to account for its performance.

In each of the last 3 years we have found that much of the output information reported by departments is not relevant or reliable, so it is less useful than it otherwise could or should be. Significant effort goes into reviewing, updating, reporting and monitoring measures. Given our consistent findings it is now time to consider what needs to change to ensure the intent of output-based service performance reporting is being achieved.

1. Our key findings

What we examined

Our review followed 3 lines of inquiry:

- Do changes to performance information help users assess departments' service delivery performance?

- Does DTF’s performance statement help users understand its service delivery responsibilities and performance?

- Are the processes for developing and reviewing information in the department performance statements clear?

To answer these questions, we examined all departments’ 2024–25 performance statements, with DTF as our agency in focus.

Background information about performance reporting

Department Performance Statement

As part of the annual state Budget, DTF prepares information for the Treasurer to table in Parliament about the goods and services (outputs) the government will deliver that year.

Each department outlines this information in its performance statement. This information is presented for all departments in the Department Performance Statement, published by DTF (previously reported in Budget Paper Number 3, or BP3).

Through its performance statement, each department specifies the outputs it is funded to provide to the community for that financial year. Departments set out their expected performance through a set of measures and targets.

The Treasurer uses this information, with advice from DTF, to release funding at the end of each financial year (known as appropriation revenue) to departments based on their success in delivering their outputs and meeting their targets.

The information in the Department Performance Statement is presented so that readers can understand how outputs support the objectives that departments aim to achieve. Departments can publish non-output performance information elsewhere – for example, in annual reports.

Parliament and the community use information in departments' performance statements to understand what services the government delivers and how well departments deliver these services. Parliament and the community can only hold the government accountable for its performance if the information in departments’ performance statements is presented in a way that is easy to understand and provides accurate information on how departments are performing.

Resource Management Framework

The Resource Management Framework (RMF) is a guideline issued by the Deputy Secretary, Budget and Finance Division at DTF under the terms of the Financial Management Act 1994.

The RMF outlines departments’ requirements for developing and reporting information in their performance statements. The RMF also articulates the standard that we can assess departments’ performance reporting against.

The RMF includes:

- mandatory requirements (which require attestation by the Accountable Officer)

- supplementary requirements (which do not require attestation)

- guidance (which is non-mandatory).

DTF manages and implements the RMF. An important part of DTF’s oversight role is ensuring departments comply with any mandatory requirements of the RMF.

Our fair presentation framework, which is based on the RMF’s performance reporting requirements, aims to promote accountability for how the public sector spends public resources.

What is fair presentation?

Service delivery performance information is fairly presented when it:

- represents what it says it represents

- can be measured

- is accurate, reliable and auditable.

What we found

This section focuses on our key findings, which fall into 3 areas:

1. All departments made changes to their performance statements that do not allow the Parliament and the community to properly assess their performance.

2. DTF’s performance statement helps users understand its service delivery responsibilities but not its performance over time or compared with other jurisdictions.

3. Departments’ processes for reviewing their performance statements do not support them to fully comply with the RMF’s reporting requirements.

The full list of our recommendations, including agency responses, is at the end of this section.

Consultation with agencies

When reaching our conclusions we consulted with the reviewed agencies and considered their views.

You can read their full responses in Appendix A.

Key finding 1: All departments made changes to their performance statements that do not allow the Parliament and the community to properly assess their performance

Updates to performance information

The RMF requires departments to update their performance information in a way that enables the Parliament and the community to hold the government accountable for spending public money on services for Victorian citizens. This includes:

- introducing performance measures that clearly reflect services delivered by the department and that can help users compare the department’s performance

- correctly reporting changes to objectives and performance measures.

If departments do not update their performance information in line with these requirements, it makes it difficult for users to properly assess the government’s service delivery performance.

New measures

Departments introduced a total of 81 new performance measures in 2024–25. All departments introduced measures that do not meet some of the RMF’s requirements. For example, only:

- 59 per cent of the new measures measure service delivery

- 62 per cent of the new measures are useful for informing government resourcing decisions

- 60 per cent of the new measures help users compare the department’s performance over time.

These measures do not give the Parliament or the community meaningful and complete information about departments' service delivery performance.

Changes to objectives and measures

All departments made changes to their objectives or performance measures in 2024–25.

Only one department correctly reported changes in line with the RMF’s requirements. We found:

- 6 departments did not adequately provide a reason for changing their objectives

- 8 departments incorrectly described changes to their performance measures.

If departments incorrectly describe changes to information from year to year it makes it difficult to track changes to performance results over time. This means that the Parliament and the community cannot properly assess a department’s performance and cannot fully hold government to account for its performance.

Key issue: We have identified similar issues in previous reviews

Over the last 3 years our reviews have consistently identified:

- new performance measures that do not meet some of the RMF’s reporting requirements

- departments not transparently explaining changes to their objectives or measures.

The way in which this new information is presented does not enable the Parliament or the community to properly assess service delivery performance.

Key finding 2: DTF’s performance statement helps users understand its service delivery responsibilities but not its performance over time or compared with other jurisdictions

A department’s performance measures must allow the Parliament and the community to:

- understand what services the department provides

- meaningfully assess the department’s performance in providing these services

- accurately and reliably assess performance results.

DTF’s services

DTF’s performance statement informs the Parliament and the community about the services it provides to the government and other stakeholders.

In line with RMF requirements, DTF’s performance information:

- demonstrates a clear link between its outputs and objectives

- has quality, quantity and timeliness measures that provide insights on its performance across a broad range of indicators.

However, the department could improve its performance statement by reducing the number of measures that do not measure outputs or do not inform government decision making. A department's performance statement should describe the services it is funded to deliver. A department's funding for delivering these services depends on its results against its performance measures. Accordingly, measures of non-outputs, such as internal departmental processes, should not be published in a department's performance statement.

Comparing DTF’s performance

Many of DTF’s performance measures do not provide complete information about its performance over time or against comparable agencies in other jurisdictions.

| Only … | of DTF’s measures allow users to compare its performance … |
|---|---|
| 67 per cent | over time. |
| 56 per cent | with similar agencies in other jurisdictions. |

DTF’s performance statement also includes measures that may not be able to provide meaningful information about its performance over time. Seven of DTF's performance measures have targets and results consistently reported at 100 per cent. Three of these measures also assess if DTF fulfils its basic legislative requirements. The RMF states that departments should avoid using these types of performance measures where possible because they may not be sufficiently challenging and may not be able to demonstrate continuous improvement.

Key issue: More than half of DTF’s targets for its measures have not been updated since the measure was introduced

The RMF provides that departments should reassess and amend their performance targets where there is constant over or underperformance against the current target or there is a change to funding allocation. Departments can do this as part of the annual performance statement review.

Results for 16 of DTF's measures have consistently met or exceeded their target since 2019–20. This indicates that DTF may not be reviewing its performance targets with full consideration of past performance.
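As an illustration only, a check of this kind can be automated. The sketch below assumes hypothetical measure names, targets and results, and assumes that a higher result is better unless stated otherwise. It is not drawn from DTF's data or from the RMF.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class YearResult:
    """One year's target and published result for a measure (hypothetical data)."""
    year: str
    target: float
    actual: float


def consistently_met(results, higher_is_better=True):
    """Return True when every recorded year met or exceeded its target."""
    if not results:
        return False
    if higher_is_better:
        return all(r.actual >= r.target for r in results)
    return all(r.actual <= r.target for r in results)


# Hypothetical measures and figures, for illustration only.
history = {
    "Hypothetical measure A": [
        YearResult("2019-20", 90, 95),
        YearResult("2020-21", 90, 97),
        YearResult("2021-22", 90, 92),
    ],
    "Hypothetical measure B": [
        YearResult("2019-20", 80, 85),
        YearResult("2020-21", 80, 76),
        YearResult("2021-22", 80, 81),
    ],
}

# Measures flagged here are candidates for a target review, not proof of a weak target.
flagged = [name for name, results in history.items() if consistently_met(results)]
print(flagged)
```

A flag from a check like this only prompts a closer look. Whether a target should change still depends on the factors the RMF describes, such as funding changes.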

Accuracy of DTF’s results

DTF gave us documentation to show how it has calculated its published performance results since 2019–20.

Based on this documentation, we could only reproduce its results for 39 per cent of its performance measures.

We also identified issues in this documentation for 76 per cent of DTF's performance measures. For example, for some of these measures, we could not clearly understand the business rules or methodology used to calculate the result.

This means that the Parliament and the community cannot have full confidence that all of the performance results DTF published between 2019–20 and 2024–25 are accurate.
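One way to make a published result independently verifiable is to keep the raw inputs and the business rule together so a reviewer can recalculate the figure. The sketch below is illustrative only. It assumes a hypothetical percentage measure calculated as a numerator over a denominator and a hypothetical rounding tolerance, and it is not the method DTF or VAGO used.

```python
import math


def recalculate_percentage(numerator, denominator):
    """Hypothetical business rule: result = 100 * numerator / denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100 * numerator / denominator


def is_reproduced(published, recalculated, tolerance=0.05):
    """Treat a result as reproduced when it matches within an assumed rounding tolerance."""
    return math.isclose(published, recalculated, rel_tol=0.0, abs_tol=tolerance)


# Hypothetical records: (measure name, published result, numerator, denominator).
records = [
    ("Hypothetical measure A", 95.0, 190, 200),
    ("Hypothetical measure B", 98.0, 180, 200),
]

reproduced = {
    name: is_reproduced(published, recalculate_percentage(num, den))
    for name, published, num, den in records
}
share_reproduced = sum(reproduced.values()) / len(reproduced)
print(reproduced, share_reproduced)
```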

Key issue: We could not verify the accuracy of DTF’s results for 61 per cent of its measures

This was because:

- we identified calculation or reporting errors

- the data definitions were unclear or not provided.

Additionally, data quality issues impacted our ability to reproduce results for some measures.

Addressing this finding

To address this finding we made 2 recommendations to DTF about:

- reviewing its performance measures to ensure they meet the RMF’s requirements

- ensuring it keeps performance reporting records that allow independent verification.

Key finding 3: Departments’ processes for reviewing their performance statements do not support them to fully comply with the RMF’s reporting requirements

The RMF requires departments to review their outputs and output performance measures annually to ensure they remain relevant. Throughout this process:

- departments review their performance statements in consultation with DTF

- DTF sets out timeframes and processes for these reviews in its annual information request

- departments are required to comply with the reporting requirements of the RMF.

Reviewing performance information

All departments review their performance information annually to ensure that it remains relevant. Departments dedicate resources and time to this review, and all departments have a central coordinating team that is responsible for reviewing, developing and approving information in their performance statements.

These teams provide guidance and support on RMF requirements to business areas and executives during the annual review process.

Review timeframes

Each year, DTF outlines the timeframes for departments to review and submit their updated performance information. DTF also provides advice to the Assistant Treasurer on departments’ proposals to update their performance information.

Seven departments told us that the timeframes DTF specifies in its information requests are not long enough. These departments told us that the timeframes provided by DTF:

- do not allow them to comprehensively review the feedback on their proposed updates provided by DTF and the Assistant Treasurer

- are not provided far enough in advance of the date the information is due to DTF.

DTF told us that the reporting timelines it sets for departments depend on the timing of the annual state Budget, which the government determines.

Implementing RMF requirements

The RMF requires departments to have a meaningful mix of quality, quantity, timeliness and cost measures, but it does not define ‘meaningful’. Four departments told us they find it difficult to identify suitable timeliness measures to satisfy this RMF requirement.

The RMF contains other requirements that departments must comply with but that it does not define. For example, it requires departments to ensure their mix of measures reflects the ‘service efficiency and effectiveness’ and ‘major activities’ of the output, as well as the impact of ‘major policy decisions’.

Central coordinating teams within 6 departments told us that they do not require business areas to consult the RMF when developing and reviewing performance information.

Role of DTF

As the department responsible for the RMF, DTF has a role to play in supporting departments to comply with its requirements.

Five departments told us they want more guidance and engagement from DTF to help them develop their performance statement.

Three departments told us DTF provided less support in 2024–25 than in previous years.

Working well: Central coordinating teams encourage better practice within departments

Most department staff we spoke to were from central coordination teams. They told us they oversee performance reporting and encourage better practice through:

- checking if performance measures can be easily understood

- producing templates and guidance materials based on the RMF

- meeting regularly with business areas.

Key issue: Departments find DTF’s processes and timeframes for departments’ submission of information challenging

Departments told us that DTF’s communication of timeframes and the process for reviewing their performance information make it difficult for them to adequately plan their review of performance information and fully incorporate feedback from DTF and the Assistant Treasurer.

Key issue: The current system does not support departments to accurately and consistently report on their performance

Our previous fair presentation reviews found that departments have introduced or changed performance information in ways that are not compliant with the RMF. Through our interviews with departments, we identified some factors that inhibit their ability to fully comply with the requirements of the RMF. These include the timeframes and process for reviewing and updating their information, and the guidance provided in the RMF itself.

Addressing this finding

To address this finding we made one recommendation to DTF about identifying why departments are consistently not applying the RMF as intended. This includes reviewing if there are issues with the information and processes in the RMF that relate to developing and reporting information in the performance statements.

See section 2 for the complete list of our recommendations, including agency responses.

2. Our recommendations

We made 3 recommendations to address our findings. The Department of Treasury and Finance has accepted these recommendations.

| Agency | No. | Recommendation | Agency response |
|---|---|---|---|
| Finding: The Department of Treasury and Finance's performance statement helps users understand its service delivery responsibilities but not its performance over time or compared with other jurisdictions | | | |
| Department of Treasury and Finance | 1 | Review its performance measures to ensure they: (See Section 5). | Accepted in full |
| | 2 | Ensure it keeps performance reporting records in a manner that allows for independent verification. (See Section 5). | Accepted in full |
| Finding: Departments’ processes for reviewing their performance statements do not support them to fully comply with the Resource Management Framework's reporting requirements | | | |
| Department of Treasury and Finance | 3 | Investigate why departments are not consistently applying the Resource Management Framework's performance reporting requirements as intended, including identifying any systemic issues in the performance reporting framework and supporting guidance. Develop a plan to address the identified issues. (See Section 6). | Accepted in full |

3. Assessing service delivery performance

Departments must present their performance information clearly and accurately so the Parliament and the community can properly assess their performance.

This section explains how we assess if departments are fairly presenting their performance information. Our previous fair presentation of service delivery performance reviews have found that departments are not presenting performance information in a way that allows users to meaningfully compare their service delivery performance.

Covered in this section:

- Our framework for assessing how departments present performance information

- Our previous fair presentation reviews

Our framework for assessing how departments present performance information

Our fair presentation framework

We use our fair presentation framework to help us assess if departments fairly present information in their performance statements.

Our framework is based on the RMF’s reporting requirements and guidance. Appendix D explains each assessment step in our framework.

Key terms

The government funds public service departments to deliver goods and services (‘outputs’) to the Victorian community in clear alignment with departmental ‘objectives’ (what they aim to achieve). Performance measures and targets show how departments are delivering these outputs to agreed expectations.

| To determine if performance information … | we assess if … |
|---|---|
| represents what it says it represents | |
| can be measured | the department’s measures can demonstrate its performance over time or between jurisdictions (comparable). |
| is accurate, reliable and auditable | the department has controls to assure its data is accurate and reliable. |

Our previous fair presentation reviews

Our previous reviews

Since 2022 we have annually assessed if departments fairly present their service delivery performance in their performance statements. This is our third review.

Our 2022 and 2023 reviews found that departments did not fully meet the RMF’s requirements.

| Our review in … | found that … |
|---|---|
| 2022 | departments reported measures in their performance statements that did not relate to service delivery. |
| 2023 | departments continued to report measures that did not meet RMF requirements and did not consistently report changes to their objectives, outputs and measures. |

Continued issues with performance reporting

Our 2024 review found that every department failed to follow some requirements of the RMF when making changes to its performance objectives or measures in 2024–25 (see Section 4).

We found that DTF’s performance statement includes measures that do not enable the Parliament or the community to compare its performance over time or with other jurisdictions. DTF also did not provide information to assure the accuracy of published results for 61 per cent of its performance measures (see Section 5).

These findings are consistent with our past reviews.

Our reviews indicate that the RMF is not providing an effective reporting framework that enables the Parliament or the community to properly assess the performance of government departments. This suggests there are systemic issues in the performance reporting framework and requirements of the RMF.

For our 2024 review, we interviewed department officers to gain greater insights into their reporting processes (see Section 6).

4. Changes to performance information in 2024–25

All departments introduced new performance measures in 2024–25 that do not comply with some of the RMF’s reporting requirements. Nine departments amended their objectives or performance measures and either did not explain the changes or explained them incorrectly.

As a result, some information published in the 2024–25 Department Performance Statement does not allow users to properly understand and assess departments’ service delivery performance.

Covered in this section:

- All departments introduced measures that do not meet some reporting requirements

- Nine departments did not transparently report changes to their objectives or measures

- We identified similar issues in our previous reviews

What we examined

Each year we review changes to departments’ performance statements since the previous year. This year we examined:

- new performance measures introduced in 2024–25

- changes to objectives and outputs from 2023–24

- other changes to performance measures, including renamed, replaced and discontinued measures.

All departments introduced measures that do not meet some reporting requirements

New measures in 2024–25

Departments introduced a total of 81 new performance measures in 2024–25.

We assessed these measures against the criteria of our fair presentation framework. We also assessed each new performance measure to determine if it is a measure of service efficiency or service effectiveness. These criteria are not part of our fair presentation framework. However, it is a mandatory requirement of the RMF that departments have measures of this type.

Service efficiency and effectiveness

The RMF requires that departments have a mix of measures for each output that can help assess service efficiency and effectiveness. However, the RMF does not define service efficiency or service effectiveness.

For the purposes of this review, we have defined these terms as follows:

Service efficiency: a measure of cost efficiency, expressed as a ratio of cost to services delivered (unit cost).

Service effectiveness: a measure of the department's service delivery that contributes to the achievement of the departmental objective.
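To illustrate the service efficiency definition above, the sketch below calculates a unit cost as total output cost divided by the number of services delivered. The function name and the dollar and volume figures are hypothetical and are not taken from any department's performance statement.

```python
def unit_cost(total_output_cost, services_delivered):
    """Cost per unit of service: total cost divided by services delivered."""
    if services_delivered <= 0:
        raise ValueError("services_delivered must be positive")
    return total_output_cost / services_delivered


# Hypothetical example: an output costing $1,200,000 that delivers 4,000 services.
example_unit_cost = unit_cost(1_200_000, 4_000)
print(example_unit_cost)  # 300.0 dollars per service
```

Tracking this ratio across years shows whether the cost of delivering each service is rising or falling, which a total output cost measure alone cannot show.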

Fair presentation of new measures

The RMF has requirements for departments to follow when specifying outputs and performance measures.

For new measures to fairly present a department’s performance, they must meet the following RMF requirements:

- the department’s measures reflect the goods or services it delivers

- the department’s measures can demonstrate its performance over time or between jurisdictions

- the measures have a logical relationship to the department’s outputs and objectives

- the department is responsible for its performance or delivering the goods and services

- the department’s measures are useful to inform the Parliament and the community’s decisions or understand its service delivery performance

- it is clear what the department intends to achieve.

Measures that do not meet these requirements should not be reported in a department's performance statement and may be more suitable to report elsewhere.

We found that all departments introduced at least one new measure that does not meet some of these requirements. These measures may not provide meaningful information to the Parliament and the community about how well the government delivers services.

The results for all our assessments are provided below.

| We assessed … | of departments’ measures as … | which means that … |
|---|---|---|
| 59 per cent | outputs | they measure a department’s performance in producing or delivering a good or service to the community or another public sector agency. |
| 60 per cent | comparable over time | they can show a department’s performance over time. |
| 80 per cent | relevant | they are aligned to a department’s objectives. |
| 93 per cent | attributable | performance results are directly or partly attributable to the department. |
| 62 per cent | useful | they can inform the government’s strategic decision-making about priorities and resourcing or provide an understanding of the department’s performance. |
| 89 per cent | clear | they are written clearly and clearly explain what they measure. |
| 56 per cent | effective | they reflect how the outputs provided by the department help achieve the department’s objective. |
| 0 per cent | efficient | they are expressed as a ratio of cost to services delivered. |

Mix of measures

The RMF requires departments to have a ‘meaningful mix’ of measures across 4 attributes: quality, quantity, timeliness and cost.

Departments introduced more quantity measures in 2024–25 than measures of any other attribute. Of the 81 new measures in 2024–25:

- 36 are quantity measures (44 per cent)

- 31 are quality measures (38 per cent)

- 13 are timeliness measures (16 per cent)

- one measure (2 per cent) is a cost measure, introduced by the Department of Government Services (DGS).

This is similar to the distribution of all measures. Of the 1,315 measures departments reported in 2024–25:

- 49 per cent are quantity measures

- 25 per cent are quality measures

- 16 per cent are timeliness measures

- 9 per cent are cost measures.*

The RMF states that the interaction between different types of measures can provide insights into service performance. For example, the interaction between 2 different measures could provide an indication of a potential trade-off between the quantity and quality of the service.

If departments do not have a balance of measures across all their outputs, the Parliament and the community cannot properly assess the quality, quantity, timeliness and cost of their services.

*Percentages have been rounded.

Mix of measures across outputs

Performance measures provide information on the quality, quantity, timeliness and cost of departments’ service delivery. A balanced mix of these measures can provide a complete picture of what a department is trying to achieve and how it is performing.

In 2024–25 departments will report their performance across 121 outputs. Of these 121 outputs, 70 per cent have measures that cover all 4 measure attributes. However, only 2 departments have measures that assess their performance for these 4 attributes for all their outputs. The Department of Education (DE) does not have measures of all 4 attributes for any of its outputs.
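A coverage check of this kind can be expressed as a simple set comparison. The sketch below is illustrative only: the output names and their measure attributes are hypothetical, and the 4 required attributes are taken from the RMF requirement described above.

```python
# The 4 measure attributes the RMF asks departments to cover for each output.
REQUIRED_ATTRIBUTES = frozenset({"quality", "quantity", "timeliness", "cost"})


def missing_attributes(measure_attributes):
    """Return the required attributes that no measure for the output covers."""
    return REQUIRED_ATTRIBUTES - set(measure_attributes)


def share_with_full_mix(outputs):
    """Proportion of outputs whose measures cover all 4 attributes."""
    full = sum(1 for attrs in outputs.values() if not missing_attributes(attrs))
    return full / len(outputs)


# Hypothetical outputs, each listing the attribute of every measure it reports.
outputs = {
    "Hypothetical output 1": ["quality", "quantity", "timeliness", "cost"],
    "Hypothetical output 2": ["quantity", "cost"],
    "Hypothetical output 3": ["quality", "quantity", "cost", "cost"],
    "Hypothetical output 4": ["cost", "timeliness", "quality", "quantity"],
}

# Outputs with gaps, and the attributes each one lacks.
gaps = {
    name: sorted(missing_attributes(attrs))
    for name, attrs in outputs.items()
    if missing_attributes(attrs)
}
print(share_with_full_mix(outputs), gaps)
```

Covering all 4 attributes is only a starting point. It does not show whether the mix is meaningful for the services delivered through the output.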

Figure 1 shows that 5 departments will report outputs in 2024–25 with only 2 measure types (cost and one other).

Figure 1: Outputs with measures covering only 2 attributes (cost and one other), by department (2024–25)

| Department | Output/s |
|---|---|
| Department of Energy, Environment and Climate Action (DEECA) | ‘Sustainably Manage Forest Resources’ (sub-output) |
| Department of Families, Fairness and Housing (DFFH) | ‘LGBTIQA+ equality policy and programs’ and ‘Office for Disability’ |
| Department of Health (DH) | ‘Home and Community Care Program for Younger People’ and ‘Mental Health Community Support Services’ |
| Department of Jobs, Skills, Industry and Regions (DJSIR) | ‘Medical Research’ (sub-output), ‘Sport and Recreation’ and ‘Investment Attraction’ (sub-output)* |
| Department of Transport and Planning (DTP) | ‘Suburbs’ |

Note: *In the 2024–25 Department Performance Statement, the ‘Investment Attraction’ sub-output reported by DJSIR is shown as only having a cost measure. This is a mistake. DJSIR told us that the Investment Attraction sub-output performance measures were incorrectly listed in the Trade and Global Engagement sub-output due to an error in DTF’s final presentation of the Budget papers.

Source: VAGO, based on the 2024–25 Department Performance Statement.

Difficulty in determining a meaningful mix

We asked departments how they determine if their outputs have a meaningful mix of measures.

Departments told us that the mix of quality, quantity, timeliness and cost measures depends on a few factors, including the:

- ‘range of services they deliver through the output’

- ‘availability of data’.

Four departments told us they find it difficult to identify suitable timeliness measures.

One department told us that it ‘notes the requirement for a meaningful spread of measures, but the nature of services [in this portfolio] means measurement leans towards long-term outcomes. This means that creating measures for a dimension such as timeliness may be arbitrary, as there are no broad community expectations around timeliness in [this portfolio] (to the extent there are in other portfolios such as health or transport)’.

Nine departments did not transparently report changes to their objectives or measures

Changes to objectives

The RMF requires that departmental objectives support government objectives and priorities. Objectives should show progress over time so departments should not change them each year.

Six departments changed one or more of their objectives between 2023–24 and 2024–25. If a department changes its objectives, the RMF requires it to explain the reason for each objective change in its performance statement. It does not, however, provide guidance as to how to explain the change.

None of the 6 departments specifically explained changes to their objectives. For example, 3 departments explained the change, but they included it in explanations for an accompanying output change.

Appendix F shows the changes departments made to their objectives in 2024–25.

Changes to outputs

The RMF requires that departments make changes to their outputs only as part of the annual budget process. There is no accompanying guidance about how a department should report a change to an output.

Eight departments made changes to their outputs in 2024–25 and all clearly explained the changes in their performance statement. They did this in either:

- their individual performance statement

- the introductory table at the beginning of the document.

Appendix G lists the changes departments made to their outputs in 2024–25.

New measures

When departments introduce a new performance measure, the RMF requires them to provide an explanatory footnote.

Departments included a footnote for all new measures in 2024–25 except one, which was a total output cost measure that DGS introduced for a new output. It is unclear from the RMF guidance if DGS needed to explain this new measure because each output requires a total output cost measure.

We assessed 3 of the 80 explanations provided by departments for new measures as unclear. These measures replaced measures from 2023–24, but departments did not explain this.

Changes to measures

All departments renamed, replaced or proposed to discontinue a performance measure in their 2024–25 performance statements.

The RMF requires departments to provide an explanatory footnote when they change a performance measure.

Figure 2 shows the number of measures that departments renamed, introduced as replacement measures or proposed to discontinue in 2024–25. It also shows the number of measures where the explanation for the change was unclear, reported across 8 departments.

Figure 2: Renamed, replacement and discontinued measures in 2024–25

| Type of change | Measures in 2024–25 | Measures with unclear explanations for the change |

|---|---|---|

| Renamed measures | 111 | 18 |

| Replacement measures* | 35 | 17 |

| Discontinued measures* | 110 | 0 |

Note: *According to the RMF, departments should report replacement measures as discontinued measures.

Source: VAGO, based on the 2024–25 Department Performance Statement.

Some of the measures that were changed in 2024–25 were only introduced in the previous year. This includes:

- 16 measures that were renamed

- 6 measures that were replaced

- 13 measures that were proposed to be discontinued.

Why departments discontinue measures

Departments proposed to discontinue 110 performance measures in 2024–25. All departments proposed to discontinue at least one measure.

Of these 110 measures, 13 were new in 2023–24. In 2023–24 departments introduced 120 new performance measures. This means that departments now consider 11 per cent of the new measures they introduced in 2023–24 as no longer relevant.
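The 11 per cent figure follows from the two counts in the paragraph above. A minimal sketch of the arithmetic, using only those counts (the variable names are illustrative and are not taken from the RMF or departments' performance statements):

```python
# Figures quoted in the paragraph above.
new_measures_2023_24 = 120      # new measures departments introduced in 2023–24
discontinued_in_2024_25 = 13    # of those, proposed for discontinuation in 2024–25

share = discontinued_in_2024_25 / new_measures_2023_24 * 100
print(f"{share:.1f} per cent")  # 10.8 per cent, reported as 11 per cent
```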

The RMF provides guidance on when a department should discontinue a measure. It states that performance measures may be discontinued because:

- they are no longer relevant due to a change in government policy or priorities and/or departmental objectives

- projects or programs have been completed, substantially changed, or discontinued

- milestones have been met

- funding is not provided in the current budget for the continuation of the initiative

- improved measures have been identified for replacement.

We asked departments what leads them to discontinue performance measures. Most departments reported that they discontinued a measure if it could be replaced with a more appropriate measure (10 departments) or where the funding for the service had lapsed (8 departments).

Five departments reported they would discontinue a measure if they no longer considered it useful.

Three departments also told us they discontinued a measure in response to feedback from VAGO, Parliament’s Public Accounts and Estimates Committee (PAEC), DTF and ministers.

PAEC reviews performance information

PAEC reviews the changes that departments make to their output performance measures and targets. This includes proposals to discontinue any measures. The Committee may also make observations about new measures that departments have introduced.

PAEC highlights any issues it finds with a department’s proposed changes. It can also make recommendations for a department to add new measures related to certain investments or initiatives. PAEC publishes the results of its review on its website, which can include recommendations for amendments to departments’ performance measures.

Parliament also publishes the government’s response to PAEC’s recommendations.

We identified similar issues in our previous reviews

Our previous reviews

This is our third fair presentation review. We continue to find similar issues with departments reporting information in their performance statements in ways that do not meet the RMF's requirements. This continued non-compliance with the RMF indicates that this is a systemic issue.

| In … | we found that… |

|---|---|

| 2022 | departments do not fully follow the requirements of the RMF, and their performance statements include too much information that is not relevant to output budgeting. |

| 2023 | departments continue to introduce new measures that do not meet the requirements of the RMF and are not consistently reporting changes to their objectives, outputs and measures. |

5. DTF's performance statement

DTF’s performance statement helps users understand its role of providing financial, economic and commercial advice to the government. And its performance measures provide insights into the quality, quantity and timeliness of its performance.

However, we identified that only 67 per cent of performance measures give users the information they need to meaningfully assess DTF’s performance over time. We also could not reproduce DTF’s results for 61 per cent of its published performance measures, which means we cannot assure the accuracy of these results.

Both factors limit the value of DTF’s performance statements in informing the Parliament and the community about how well it is fulfilling its role.

Covered in this section:

- DTF’s performance statement helps users understand its role in providing advice to the government

- DTF’s performance measures do not always provide information on its performance over time or compared to other jurisdictions

- DTF did not provide sufficient information to assure the accuracy of its published performance results

What we examined

We take a closer look at a different department’s performance information each year. This year we focused on how DTF reports its performance under the following outputs:

- Budget and Financial Advice

- Economic and Policy Advice

- Commercial and Infrastructure Advice.

Our findings in this section relate to these 3 outputs.

DTF’s performance statement helps users understand its role in providing advice to the government

DTF’s services

In its 2024–25 performance statement DTF says its mission statement is ‘to provide economic, commercial, financial and resource management advice to help the Victorian Government deliver its policies’.

DTF expects to spend $184.9 million on providing budget and financial advice, economic and policy advice and commercial and infrastructure advice in 2024–25. This is 38.7 per cent of its total expected output budget for 2024–25.

Figure 3: The cost and number of measures for the DTF outputs we assessed

| Output | Number of performance measures | Cost |

|---|---|---|

| Budget and Financial Advice | 11 | $39.0m |

| Economic and Policy Advice | 15 | $60.3m |

| Commercial and Infrastructure Advice | 17 | $85.6m |

| Total | 43 | $184.9m |

Source: VAGO, based on DTF’s 2024–25 department performance statement.
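The totals in Figure 3 can be checked from its rows. The sketch below sums the rows and then derives the total output budget implied by the 38.7 per cent share. That implied total is our own back-calculation from rounded figures, not a number DTF publishes in this form, so treat it as approximate.

```python
# Figure 3 rows: output -> (number of performance measures, cost in $ million).
figure_3 = {
    "Budget and Financial Advice": (11, 39.0),
    "Economic and Policy Advice": (15, 60.3),
    "Commercial and Infrastructure Advice": (17, 85.6),
}

total_measures = sum(n for n, _ in figure_3.values())
total_cost = round(sum(c for _, c in figure_3.values()), 1)
print(total_measures, total_cost)  # 43 184.9

# Assumption: 38.7 per cent is the rounded share stated in the text above,
# so the implied total output budget is only an approximation.
share_of_output_budget = 0.387
implied_total_budget = total_cost / share_of_output_budget
print(f"about ${implied_total_budget:.0f}m")
```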

Link between outputs and objectives

The RMF requires departmental objectives to have a clear and direct link with outputs. We found that the key activities of each output link to the achievement of its objective. This means DTF’s performance statement helps users understand how its services help it achieve its objectives.

| For the following output ... | these key activities … | support the objective … |

|---|---|---|

| Budget and Financial Advice | | optimise Victoria’s fiscal resources. |

| Economic and Policy Advice | | strengthen Victoria’s economic performance. |

| Commercial and Infrastructure Advice | | improve how the government manages its balance sheet, commercial activities and public sector infrastructure. |

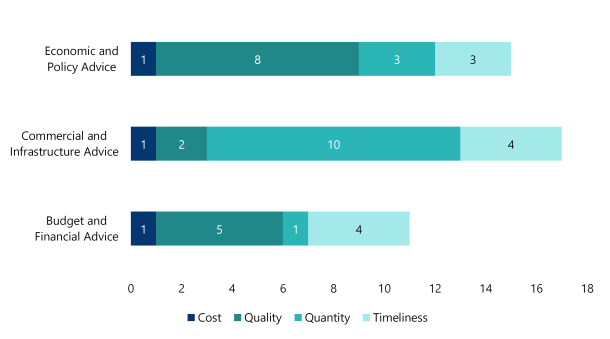

Mix of measures

DTF has quality, quantity, timeliness and cost measures for each of the 3 outputs we looked at.

DTF is one of only 2 departments that have measures that cover all 4 attributes for all outputs.

However, as Figure 4 shows, each of DTF’s 3 outputs has more measures for one attribute than for the others. For example, the ‘Commercial and Infrastructure Advice’ output has significantly more quantity measures.

These measures can provide useful insights into the volume of services DTF provides through this output. However, because there are fewer quality and timeliness measures, the mix cannot provide the same level of information on the quality and timeliness of DTF's service provision.

Figure 4: Number of cost, quality, quantity and timeliness measures for selected DTF outputs (2024–25)

Source: VAGO, based on DTF’s 2024–25 department performance statement.

Our assessment of DTF’s measures

We assessed DTF’s 43 performance measures for the selected 3 outputs against our fair presentation framework.

We also assessed if each performance measure evaluates the efficiency or effectiveness of DTF’s services.

Ninety-three per cent of DTF’s performance measures reflect services attributable to the department, and around three-quarters are clearly written, reflect output delivery, and support the departmental objective.

| We assessed … | of DTF's measures as … | which means that … |

|---|---|---|

| 65 per cent | outputs | they measure DTF's performance in producing or delivering a good or service to the community or another public sector agency. |

| 60 per cent | useful | they can inform the government’s strategic decision-making about priorities and resourcing or provide an understanding of DTF's performance. |

| 93 per cent | attributable | performance results are directly or partly attributable to DTF. |

| 81 per cent | relevant | they are aligned to DTF's objectives. |

| 72 per cent | clear | they are written clearly and clearly explain what they measure. |

| 60 per cent | effectiveness measures | they reflect how the outputs provided by DTF help achieve its objective. |

| 0 per cent | efficiency measures | they are expressed as a ratio of cost to services delivered. |

Areas to improve performance measures

Our analysis shows that DTF could improve some aspects of its performance measures.

We assessed 65 per cent of DTF’s measures as output measures, which means that 35 per cent of its measures reflect something other than outputs. This includes 19 per cent of measures that reflect internal departmental processes DTF uses to provide or develop its services.

DTF should report these measures elsewhere because they do not give the Parliament and the community meaningful information on its service delivery performance.

We also assessed 60 per cent of DTF’s measures as useful for informing government decisions about allocating resources. The remaining 40 per cent of measures should not be reported in its performance statement and are more suitable for its internal reporting and monitoring.

DTF also does not report any measures of service efficiency.

DTF’s performance measures do not always provide information on its performance over time or compared to other jurisdictions

Comparability of performance measures

The RMF requires that performance measures enable meaningful comparison and benchmarking over time (where possible, across departments and against other jurisdictions).

Of the 43 measures we looked at, only:

- 67 per cent allow users to compare DTF’s performance over time (and 33 per cent do not)

- 33 per cent allow users to directly compare DTF’s performance with agencies in other jurisdictions (we acknowledge that measures are developed within the specific context of each jurisdiction, meaning that some may not be directly comparable).

We assessed 23 per cent of DTF’s measures as having indirect or weak alignment with reporting across other jurisdictions and 44 per cent as not being able to support comparisons.

| To assess if DTF’s measures … | we … | and assessed… |

|---|---|---|

| enable users to compare its performance over time | looked at the measure and unit of measure | 67 per cent of measures as supporting comparison of results over time. |

| enable comparison with other jurisdictions | reviewed budget portfolio statements from other Australian jurisdictions | |

Analysing changes to performance targets

The RMF states that departments need to change their performance measures and targets to reflect the impact of any major policy decisions. This may include funding for a new initiative or a change in service levels.

However, performance measures should remain consistent where possible so users can assess a department’s performance over time.

Departments should also reassess targets of performance measures when performance results have been consistently over or under target.

The RMF provides the following guidance to departments when setting performance targets:

- targets should be challenging but achievable

- targets of 100 per cent should not be used in most cases because they cannot demonstrate continuous improvement and may not be sufficiently challenging

- measures that relate to a department’s basic legislative requirements may not be challenging enough.

Reflecting past performance

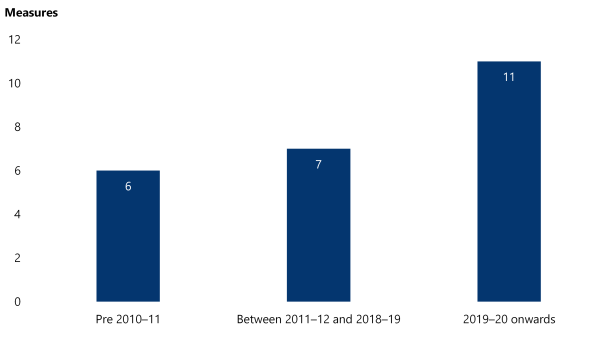

More than half of DTF’s performance measures (24 of 41 measures) for 2024–25 have the same target as when DTF first introduced them.

DTF introduced 6 of these measures prior to 2010–11. This means DTF has included these measures in its performance statement for over 15 years without revising the target.

Figure 5: DTF’s 2024–25 performance measures with targets that have not changed since it introduced the measure

Note: We excluded 2 of DTF’s 43 performance measures from this analysis because DTF reclassified the type of measure (quality, quantity or timeliness). However, DTF still reports historical data against the new measure despite this being a significant change.

Source: VAGO, based on DTF’s department performance statements from 2019–20 to 2024–25.
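The 'more than half' statement rests on 41 measures rather than 43, because of the 2 exclusions described in the note to Figure 5. A minimal sketch of that calculation, using only figures quoted in this section (variable names are illustrative):

```python
# Figures quoted in this section.
total_measures = 43     # DTF measures across the 3 outputs we assessed
reclassified = 2        # excluded because DTF reclassified the type of measure
unchanged_target = 24   # measures with the same target as when first introduced

analysed = total_measures - reclassified
share_unchanged = unchanged_target / analysed * 100
print(analysed, f"{share_unchanged:.1f} per cent")  # 41 58.5 per cent
```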

Measures not sufficiently challenging

DTF has some measures in its performance statement that the RMF recommends departments not use.

| DTF has … | According to the RMF, these measures … |

|---|---|

| 3 measures for legislative requirements. | may not be sufficiently challenging because they represent a basic minimum standard rather than the quality of a service. |

| 7 measures with a target of 100 per cent (which it has consistently achieved). | have no capacity to demonstrate continuous improvement from year to year and may not be sufficiently challenging. |

DTF did not provide sufficient information to assure the accuracy of its published performance results

Reproducing DTF’s results

We asked DTF for the data, documentation and methodology it has used to calculate its performance results for 38 measures since 2019–20. This excludes 3 total output cost measures and 2 measures that were only introduced in 2024–25.

We used this information to try to reproduce DTF’s results.

| For this percentage of measures … | we could… |

|---|---|

| 61 | not reproduce published results using the data provided. |

| 39 | reproduce published results using the data provided. |

DTF’s data quality

We assessed the quality of the data and documentation that DTF used to calculate its performance results. We found data quality issues with the data underpinning 76 per cent of DTF’s measures.

| For this percentage of measures … | we could … |

|---|---|

| 39 | |

| 37 | |

| 24 | |

6. Processes to support compliance with reporting requirements

Departments annually review their performance information and have processes to check if they comply with the RMF’s requirements.

However, our analysis of updates to the 2024–25 performance statements shows that these processes do not support departments to consistently meet the RMF’s requirements.

Some departments told us they find it difficult to meet some of the RMF’s requirements and review their performance information within required timeframes.

Covered in this section:

- Departments dedicate time and resources to review their performance information

- Seven departments reported difficulties in reviewing their performance information within set timeframes

- Four departments told us they have had difficulty applying the RMF's requirements

- Five departments want DTF to have a more proactive role

What we examined

We interviewed each department to understand how they develop, use and review their performance information.

We then had a follow-up discussion with the 3 departments (DE, DEECA and DTF) that have been focus agencies for our fair presentation reviews.

Departments dedicate time and resources to review their performance information

Departments’ review processes

We asked all departments how often they review their performance information.

All departments told us they review their performance information at least annually, following the process that DTF outlines in the information request that it sends to departments each year. Figure 6 lists the steps in this process.

Some departments said they internally review their performance information more frequently. However, the frequency differed between departments and there was no consistent approach.

Figure 6: Process for reviewing performance information outlined in DTF’s information requests

| Stage | Description |

|---|---|

| First review | The department submits the initial review of its performance statement to DTF. DTF then provides advice to the Assistant Treasurer on changes proposed by the department. |

| Second review | The department reflects any feedback from the Assistant Treasurer it has accepted in its revised performance statement and submits this to DTF. |

| Third review | The department submits its updated performance statement to DTF, incorporating impacts of any budget decisions. |

| Publication | The department receives approval of the final performance statement by the relevant minister, and the department submits the performance statement to DTF for publication. |

Source: VAGO, based on DTF’s 2024 information requests and information we gathered during our interviews with departments.

Responsibilities for performance reporting

Departments told us that multiple areas of the department have a part in developing, reviewing and reporting performance information.

| Departments’ … | are responsible for ... |

|---|---|

| central coordinating teams | |

| business areas | |

| accountable officers | ensuring the department’s performance statement complies with the RMF’s mandatory requirements. |

Role of central coordinating teams

All departments told us they have a central coordinating team that:

- supports business areas to prepare their performance information

- is responsible for coordinating the department’s information submission to DTF.

The central coordinating teams at 6 departments told us they do not require business areas to consult the RMF to review or update their performance information. Instead, these teams embed relevant guidance from the RMF into other materials, such as templates or data dictionaries, to help business areas comply with the RMF.

This enables the central team to take on a guidance role and encourage better practice across the department. However, this can leave staff in business areas less familiar with the RMF’s requirements.

One department’s central coordinating team told us ‘the level of understanding between the people [in business areas] is variable. There is a gap in training’. They also told us that they do not have the capacity or technical knowledge to monitor compliance with the RMF for every aspect of every measure.

Seven departments reported difficulties in reviewing their performance information within set timeframes

Timeframes for processes

Seven departments told us that DTF’s information requests do not give them enough time to prepare and review their performance information.

DTF told us that it sets these timeframes in line with the timeframes the government sets each year for the Budget process.

Five departments told us that DTF sends the information request too late in the process for them to properly review their performance information.

One department told us that DTF has previously given it one week to incorporate the Assistant Treasurer’s feedback into its performance statement, which was not enough time.

Two departments told us that DTF did not give them the templates for the review process until a few weeks before the submission date. They told us this meant they could not adequately plan for the performance statement review process and had to internally estimate dates.

Four departments told us they want DTF to provide templates and due dates earlier in the review process. Three departments said they want more timely feedback from DTF to give them enough time to incorporate it into their performance statement.

Some departments reported regular contact with DTF and were satisfied with the level of support it provided. For example, one department reported having fortnightly catch-ups with its DTF portfolio area. Two other departments said they proactively engage with DTF before the formal performance statement review process begins to get feedback and advice.

Four departments told us they have had difficulty applying the RMF’s requirements

Applying the RMF’s requirements

All departments told us they do not provide specific training on the RMF to staff in business areas. Five departments told us this is because the RMF is long and detailed, or because it would not be practical for each business area to engage with it in detail.

For example, one department told us ‘for our [staff in business areas], this is a very small portion of their day job, so it is unreasonable to expect them to go and research the RMF’.

Another department told us the RMF was ‘difficult to understand’ for staff in program areas.

One department told us in a follow-up interview that staff find it challenging to create performance measures that both capture the department’s practical work and comply with the RMF’s requirements.

This department said: 'If the RMF had more detailed guidance and examples that might help. [DTF] could provide more practical examples of [what information should be included or excluded] and why and build on that understanding. We have to balance competing needs – wanting to be transparent and informative, and also satisfying the RMF.'

Unclear definitions

The RMF does not define or provide guidance on key terms it uses in its performance reporting requirements. For example, there are no definitions for the following terms, which all relate to departments’ mandatory requirements:

- ‘meaningful’ mix of measures

- major activities

- major policy decisions

- service effectiveness

- service efficiency.

This creates ambiguity and can make it challenging for departments’ staff to interpret and consistently apply the RMF’s requirements.

Challenges meeting RMF requirements

Case study 1: Challenges meeting RMF requirements

Four departments told us they had difficulty identifying suitable timeliness measures

We asked departments how they determine a meaningful mix of quality, quantity and timeliness measures for each output.

Six departments told us that the mix of measures is dependent on the types of services their department provides, and 2 departments said it can depend on data availability. This can make it challenging for some departments to introduce certain types of measures.

For example, one department told us:

'types of services vary in terms of quality, quantity and timeliness. There are very few timeliness measures for [some portfolios]. Timeliness measures of statutory requirements are not good BP3 measures. We don’t want to add timeliness measures just to round it out'.

Another department told us ‘if we don’t have a certain type of measure, we are pretty much obliged to make one’.

Source: VAGO, based on quotes from a follow-up interview with a previous focus department.

Developing service efficiency measures

In our 2021 report Measuring and Reporting on Service Delivery we recommended that all departments develop output performance measures that use unit costing to measure service efficiency (recommendation 3).

DTF accepted this recommendation, stating that it: 'supports the introduction of efficiency measures and improving the balance of QQTC [quality, quantity, timeliness and cost] measures to enhance the ability to assess efficiency. DTF will include advice on unit costings and efficiency measures in the RMF’.

DTF initially provided a completion date of June 2023 for this action.

In its update for Responses to Performance Engagement Recommendations: Annual Status Update 2024, DTF revised the completion date for this action to June 2025. DTF stated it is: ‘preparing future updates to the Resource Management Framework. Due to the complexity and size of the proposed reforms, this process requires significant consultation and testing. DTF will consider this recommendation [recommendation 3] as part of the broader performance reforms and continue to improve the RMF’.

In their updates on this recommendation as part of the Responses to Performance Engagement Recommendations: Annual Status Update 2024, DEECA, DFFH and DTP indicated that they are waiting for updated advice from DTF to help them develop efficiency measures.

Introducing new measures

We asked departments why they develop new performance measures.

All departments identified the performance statement review process and significant policy or funding changes as the main reasons for developing new measures. This is consistent with the RMF’s requirement for departments to update their measures to reflect major policy decisions, such as funding for a new initiative or a change in the level of service they provide.

Six departments also identified VAGO or PAEC recommendations as key triggers for developing new measures.

Challenges in developing new measures

Our findings from this review and our previous fair presentation reviews show that departments continue to introduce new measures that do not meet the RMF’s requirements.

We asked DE, DEECA and DTF (as the 3 focus agencies) about their challenges in meeting these requirements.

One department told us that DTF requires it to develop performance measures to submit as part of its funding bids. However, if an initiative is partially funded, the department must revise the proposed measure within a short timeframe. This may mean that it introduces a performance measure that does not fully comply with the RMF’s requirements.

One department told us that the RMF does not clearly outline financial thresholds for developing measures for programs with smaller funding levels. This department wants the RMF to have rules around the expected size (in terms of funding allocation) or number of measures departments should have. For example, a funding threshold could trigger a new measure when $20 million or more of funding is allocated.

One department told us that PAEC had previously recommended that it introduce measures that the department considered inconsistent with the RMF’s requirements. The department gave us an example where PAEC recommended that it introduce outcome measures into its performance statement for a specific service area, when performance statements should only include output measures.

Three departments also told us that portfolio ministers, who are accountable for their department’s performance statement, may influence the process for setting performance targets.

Five departments want DTF to have a more proactive role

DTF’s role

The RMF outlines DTF’s specific responsibilities as the department responsible for managing and implementing the framework.

| DTF … | it is responsible for … |

|---|---|

| supports the Assistant Treasurer to agree and publish departments’ performance statements in the Budget papers | |

| supports the Assistant Treasurer to manage the RMF | consulting with key stakeholders. |

Departments support their secretary and portfolio ministers to achieve government’s objectives through performance management activities, such as delivering outputs to the agreed performance standards.

DTF does not determine the final content of departments’ performance statements. The relevant portfolio ministers do this with the Assistant Treasurer.

DTF’s engagement and support

Three departments told us that DTF’s level of engagement and support to help departments comply with the RMF has reduced in recent years.

For example, one department told us that DTF used to provide training sessions on how to apply the RMF’s requirements but no longer does.

Another department told us there is ‘room for improvement around the level of engagement’ it receives from DTF and the current level of support has ‘dropped compared to how it used to be’.

Five departments told us they want more guidance and engagement from DTF to help them prepare their performance statements and comply with the RMF’s requirements. Two of these departments said they wanted a more collaborative approach from DTF that involves:

- earlier engagement in the performance statement review process

- more frequent meetings

- more opportunities to ask questions.

Appendix A: Submissions and comments

Download a PDF copy of Appendix A: Submissions and comments.

Appendix B: Abbreviations, acronyms and glossary

Download a PDF copy of Appendix B: Abbreviations, acronyms and glossary.

Appendix C: Review scope and method

Download a PDF copy of Appendix C: Review scope and method.

Appendix D: How VAGO assesses departments' performance measures

Download a PDF copy of Appendix D: How VAGO assesses departments' performance measures.

Appendix E: Fair presentation framework assessment criteria

Download a PDF copy of Appendix E: Fair presentation framework assessment criteria.

Appendix F: Changes to objectives

Download a PDF copy of Appendix F: Changes to objectives.