Measuring and Reporting on Service Delivery

Snapshot

Does the way Victorian government departments measure and report on their service delivery support accountability and good decision-making?

Why this audit is important

Departments are accountable to Parliament and the community for what they achieve using public funds. They must accurately report their performance in the Budget papers and their annual reports because this information is essential to identify what is working and what areas need improvement.

Over the last 20 years, our audits have found significant and persistent weaknesses in departments' performance reporting, including weak links between the objectives they set and the way they measure success.

Who we examined

We examined all eight departments and selected the following three for further analysis as case studies: the departments of Treasury and Finance (DTF) and Education and Training (DET), and the former Department of Health and Human Services (DHHS).

What we examined

We examined if departments:

- meet their responsibilities to measure and report on performance in compliance with DTF’s Resource Management Framework (the Framework)

- ensure their performance information is accurate

- report their performance information in a way that users can readily understand.

What we concluded

Departments do not measure or report on their performance well.

They do not:

- fully comply with the Framework

- measure their service efficiency or effectiveness

- present their performance information in a way that enables efficient and effective analysis.

It is also apparent that the process of adding new measures into the Budget papers is failing.

The Framework requires departments to measure output delivery and outcome achievement. However, there are too many input and process measures and poorly constructed output measures and objective indicators in the Budget papers. This obfuscates departments' performance reporting and diminishes their accountability.

We continue to find the same issues whenever we examine departments' performance reporting, which indicates the need for a 'root and branch' review of the entire performance reporting framework.

What we recommended

This audit report makes 11 recommendations to all departments to improve the quality of the information in their performance statements, maintain complete data dictionaries, and improve their explanations of variances between actual results and targets.

Video presentation

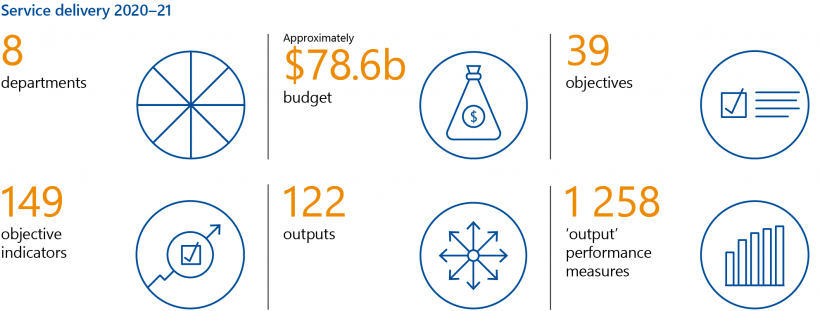

Key facts

Data dashboard

Each year, as part of the budget process, departments set output performance measures and targets to monitor how well they are delivering public goods and services.

Our dashboard brings together departments' results for their output performance measures from 2008–09 to 2020–21, as publicly reported in the Budget papers and agency annual reports.

This dashboard allows you to compare departments’ performance against each other, and drill down to examine performance trends for individual measures over time. You can also download raw data on output performance measures, so you can conduct your own analysis.

What we found and recommend

We consulted with the audited agencies and considered their views when reaching our conclusions. The agencies’ full responses are in Appendix A.

Measuring outcomes

Clear objectives are the foundation of a meaningful performance measurement system because they define the desired outcomes that performance will be measured against. The Department of Treasury and Finance's (DTF) Resource Management Framework (the Framework) requires departments to set clear objectives and report on their progress towards achieving them.

BP3 outlines the government's priorities for the services it provides and sets out the costs of the services. It includes a breakdown of all output funding with associated performance targets.

Departments report their objectives in the Budget Paper No. 3: Service Delivery (BP3). While most departments have set clear objectives, BP3 includes examples of objectives that do not clearly express the desired outcome the department aims to achieve. For example:

- the Department of Justice and Community Safety's (DJCS) objective, ‘Effective management of prisoners and offenders and provision of opportunities for rehabilitation and reparation’, states DJCS's responsibilities in regard to correctional services, not the intended outcome, which would likely relate to reduced recidivism

- the Department of Premier and Cabinet's (DPC) objective, 'High-performing DPC', does not express the intended outcome for the community or other departments for the services it provides.

In these circumstances, it is difficult to understand the goals that departments are working towards.

We also found multiple examples of objective indicators that do not meet the Framework's requirements and consequently do not provide useful information about outcome achievement.

| Many objective indicators in BP3 are not informative about outcome achievement because they … | For example … |
|---|---|
| Measure outputs (for example, the quantity of services provided) rather than outcomes | The Department of Education and Training's (DET) objective indicator 'Engagement: Increase the number of Victorians actively participating in education, training, and early childhood development services' counts the 'outputs' DET delivers, not the outcomes of enrolments, which would be course completions or employment |
| Are vague because it is difficult to interpret what is being measured | For the Department of Transport's (DoT) objective indicator 'Reliable travel', there is no further detail in BP3 to explain what is being measured or how |
| Lack any business rules to explain how results are calculated and where data is sourced | Around 60 per cent of objective indicators in the 2019–20 BP3 have no documented business rules |
| Lack baseline data to measure progress against | No department has baseline data for any of its objective indicators. This is particularly problematic for the many objective indicators that aim to 'reduce', 'improve' or 'increase' something |

As a result, departments' performance reporting is missing key information about whether service delivery is achieving intended outcomes. This is a significant gap. Without information on outcome achievement, the government lacks a sound basis for its future investment and policy decisions.

In 2019, DPC introduced Outcomes Reform in Victoria (the Outcomes policy), which aims to improve the way departments report on their outcomes and support the creation of bespoke outcomes frameworks for specific service delivery areas. However, the policy does not articulate what relationship or priority departmental outcomes should have to departments’ objectives and objective indicators, and makes no reference to the Framework at all.

As a result, there is a risk that departments may develop conflicting sets of outcomes and measures, de-prioritise their BP3 objectives and objective indicators, or create confusion among staff, government decision-makers, Parliament and the community about what the departments' objectives are and which performance information to use.

Measuring output performance

An output should capture all the specific activities that make up a service and should contribute to the achievement of a department’s objective. The 2020–21 BP3 includes examples of outputs that are too large or combine too many separate activities. This reduces transparency and accountability by making it difficult for Parliament and the community to understand the cost and performance of the individual services the output covers.

Across all departments and service delivery areas, there are many output performance measures that provide useful insights into departments’ performance. However, no department consistently meets the requirements of the Framework for designing output performance measures.

A unit cost is the cost of providing one instance of a service, rather than the total cost of all activities that a department delivers. For example, the unit cost for an ambulance service could be 'cost per ambulance trip'.

This is despite the Framework describing output performance measures as the 'building blocks of the accountability system' and the 'basis for the certification of departmental revenue'. The lack of clear and relevant output measures is a significant failure of the state's key performance and accountability framework. Without well-designed output performance measures, departments cannot be held properly accountable to the government, Parliament and the community for their output performance.
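The unit cost concept defined earlier in this section reduces to a single division: the total cost of an output divided by the number of service instances delivered. The sketch below illustrates this with a hypothetical `unit_cost` helper and invented figures. It is not drawn from BP3 or from any department's costing method.

```python
def unit_cost(total_cost: float, units_delivered: int) -> float:
    """Return the cost of delivering one instance of a service.

    Hypothetical helper: total_cost is the full cost of the output and
    units_delivered is the number of service instances (e.g. ambulance trips).
    """
    if units_delivered <= 0:
        raise ValueError("units_delivered must be positive")
    return total_cost / units_delivered


# Invented figures for illustration only; not taken from any budget paper.
cost_per_trip = unit_cost(total_cost=12_000_000.0, units_delivered=40_000)
print(f"Cost per ambulance trip: ${cost_per_trip:,.2f}")  # Cost per ambulance trip: $300.00
```

Tracking a figure like this each year would show whether the cost of each trip is rising or falling, which a raw count of trips on its own cannot show.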

Figure A outlines the wide range of issues that limit the usefulness of departments’ current output performance measures.

Figure A: Limitations of 2020–21 output performance measures

| Issue | Breach of mandatory requirements? | Inconsistent with Framework guidance? | Example |

|---|---|---|---|

| Only 64 per cent of outputs have at least one output performance measure in each of the four dimensions of quantity, quality, timeliness and cost. Where outputs have output performance measures that lack one or more of the four dimensions, it is not possible to see if departments are making trade offs, such as sacrificing quality for timeliness. | Yes | Yes | DET has no timeliness measures for any of its outputs, which include activities where timeliness is important, such as its regulatory oversight functions, delivery of various supports to students or training programs to teachers. |

| Across the 1 258 output performance measures for all government departments in 2020–21, there are only two direct measures of technical efficiency. This represents a significant gap in performance reporting for public service delivery in Victoria. | Yes | Yes | Many output performance measures that simply count the number of services provided could be converted to show unit costs. For example, DJCS's measure 'Annual daily average number of male prisoners' would be more valuable as an efficiency measure, such as cost per prisoner. |

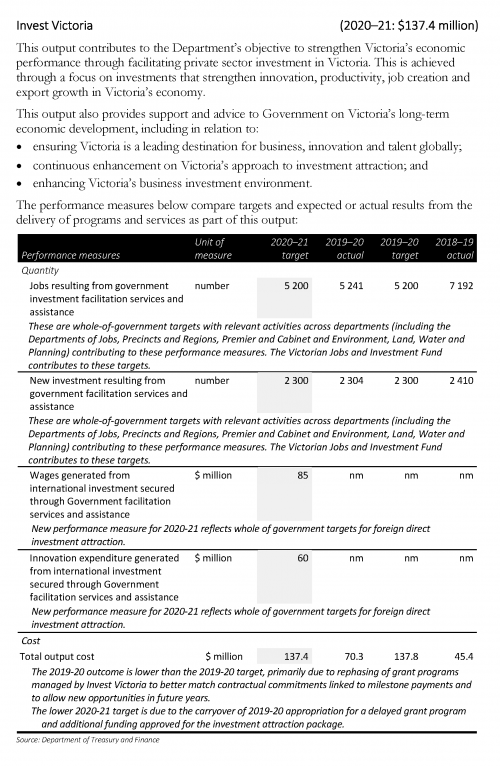

| Many measures do not measure outputs and instead measure inputs, processes or outcomes. This results in significant gaps in service performance information. | Yes, because the Framework requires departments to develop output measures | Yes | For DTF's Invest Victoria output group, where service delivery aims to increase business investment in Victoria, there is only one true output measure, which counts the number of visits to the Invest Victoria website. Aside from this, one input measure is included ('total cost') and the rest are all outcome measures that outline the numbers of jobs created, businesses attracted to Victoria and funds generated. These results may be influenced by factors outside of DTF's control. |

| Some output performance measures are too vague for the user to understand what is being measured. BP3 does not provide any further detail to explain them. |

No | Yes | Output performance measures, such as the following, fail to describe what is being measured and how:

|

| Some departments use output performance measures where performance results are not attributable to them. | No | Yes | Some output performance measures count things that departments cannot control, such as:

|

| Some departments use output performance measures and targets that only reflect meeting minimum standards or legal requirements. | No | Yes | Output performance measures that only show that a department has not breached legal requirements are not useful in showing performance, such as:

|

| Some departments’ output performance measures prevent comparison of performance over time. | Yes | Yes | Raw counts of services delivered prevent comparison over time because they do not consider changes in population, service user numbers or funding amounts. For example, DHHS's measure 'Total community service hours' could be tracked if converted to an efficiency measure, such as cost per community service hour or community service hours per capita to demonstrate levels of service usage. |

*Note: As the time period of this audit predates relevant machinery of government changes, throughout this report we refer to DHHS, which is the predecessor agency of what are now the Department of Health (DH) and the Department of Families, Fairness and Housing (DFFH).

Source: VAGO, based on the Framework and the 2020–21 BP3.
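The first issue in Figure A, whether each output has at least one measure in each of the four dimensions, can be expressed as a simple coverage check. This is a sketch under assumed data. Each output maps to a list of (measure, dimension) pairs, and the output and measure names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# The four dimensions each output's measures are expected to cover.
DIMENSIONS: Set[str] = {"quantity", "quality", "timeliness", "cost"}


def missing_dimensions(measures: List[Tuple[str, str]]) -> Set[str]:
    """Return the dimensions that none of an output's measures cover."""
    covered = {dimension for _, dimension in measures}
    return DIMENSIONS - covered


def coverage_share(outputs: Dict[str, List[Tuple[str, str]]]) -> float:
    """Share of outputs with at least one measure in every dimension."""
    complete = sum(1 for measures in outputs.values() if not missing_dimensions(measures))
    return complete / len(outputs)


# Hypothetical outputs and (measure, dimension) pairs, for illustration only.
outputs = {
    "Output A": [
        ("Clients served", "quantity"),
        ("Clients satisfied with service (%)", "quality"),
        ("Requests resolved within 10 days (%)", "timeliness"),
        ("Cost per client served", "cost"),
    ],
    "Output B": [
        ("Inspections completed", "quantity"),
        ("Cost per inspection", "cost"),
    ],
}

for name, measures in outputs.items():
    print(name, sorted(missing_dimensions(measures)))
print(f"Outputs covering all four dimensions: {coverage_share(outputs):.0%}")
```

In this invented example, Output B has no quality or timeliness measure. As Figure A notes, this means a trade-off such as sacrificing quality for timeliness would not be visible.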

A service logic explains how activities lead to a desired outcome. For example, a service logic approach explains how departments transform their inputs into outputs to achieve their desired outcomes. We discuss this in Section 1.1.

The frequency of issues in output measure design we observed across departments shows a lack of understanding of the Framework’s requirements and the service logic of the activities being measured.

As the owner of the Framework, and given its important role in reviewing and providing advice about departments' measures, DTF could do more to address this. However, DTF does not comply with some of its own requirements either. Further, in our 2014 audit, Public Sector Performance Measurement and Reporting, DTF accepted our recommendation to improve its guidance material on performance measurement by including examples of efficiency and effectiveness measures and of how to link outputs to departmental objectives. DTF has not done this effectively.

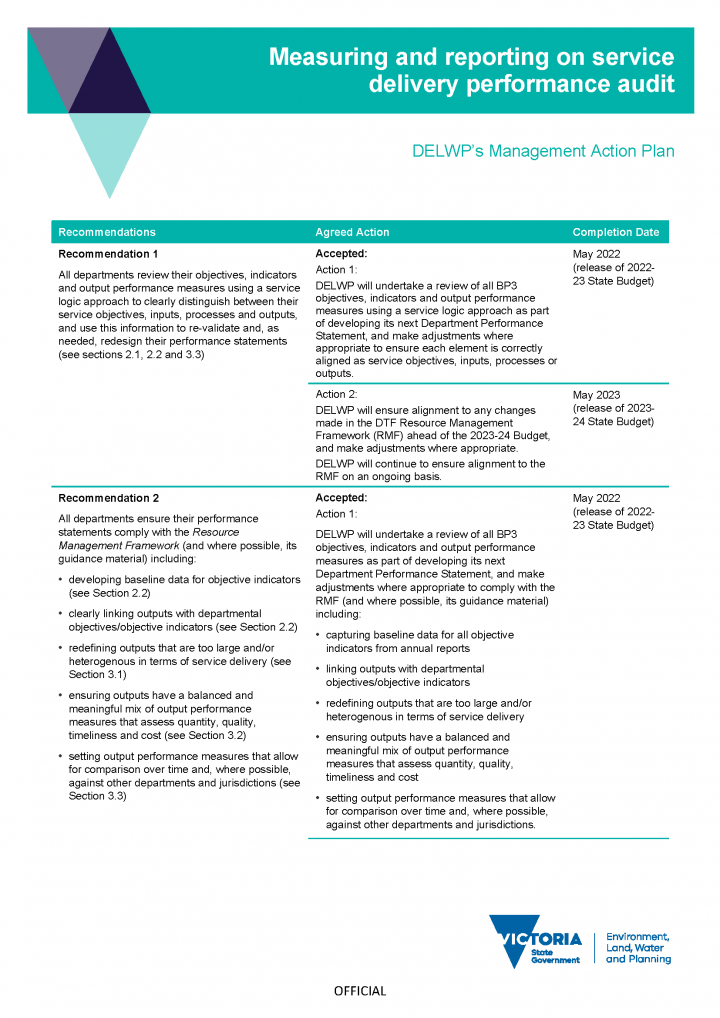

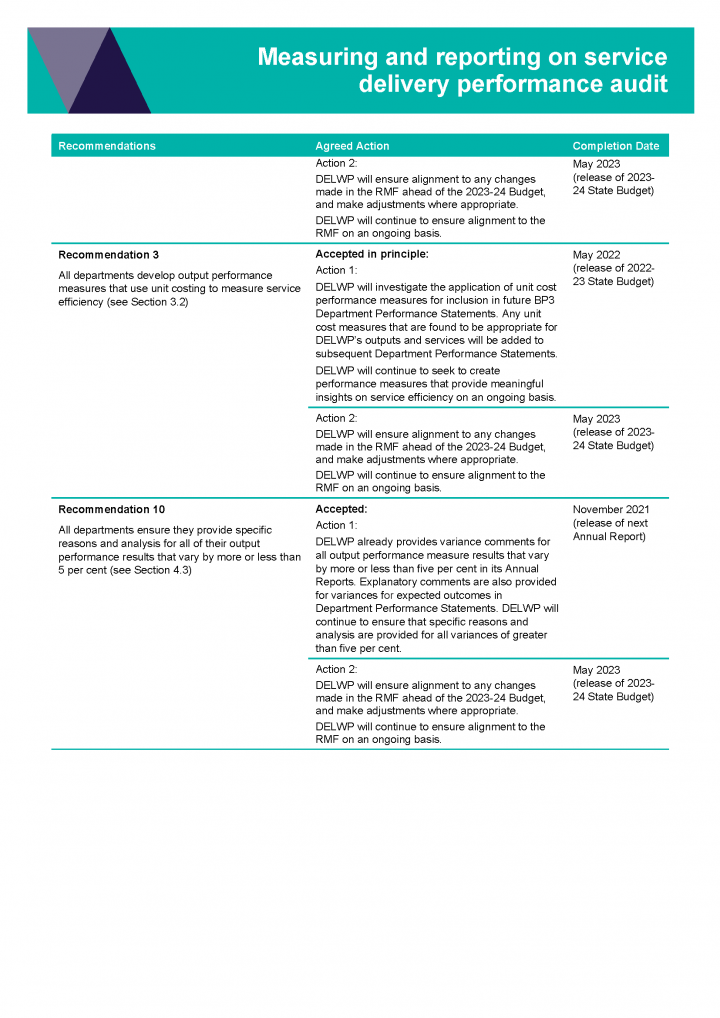

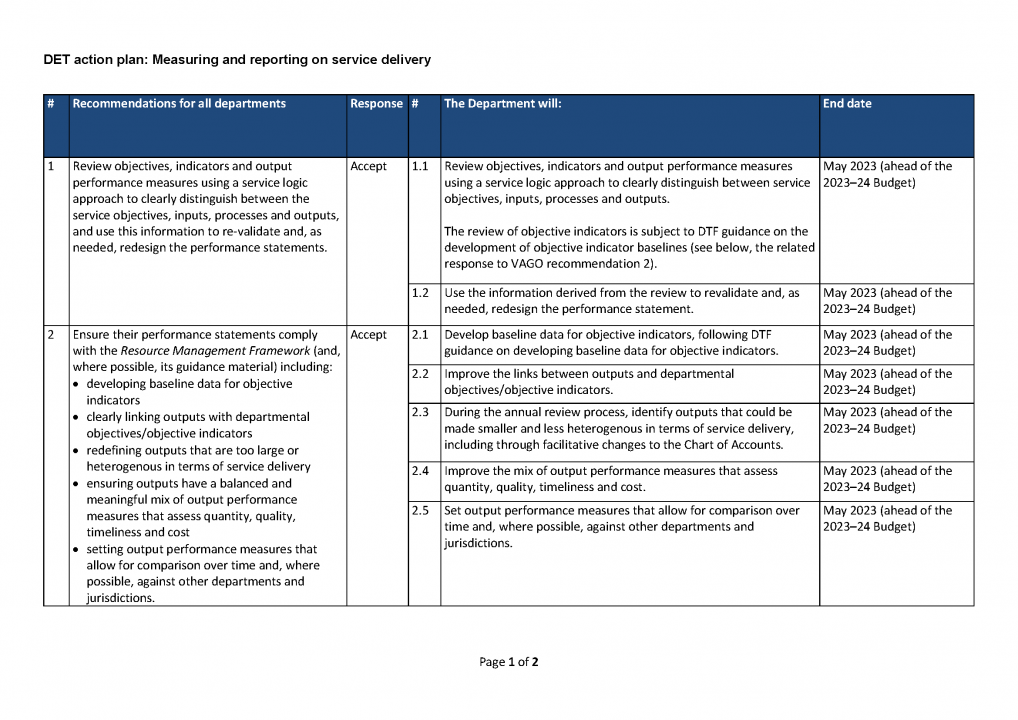

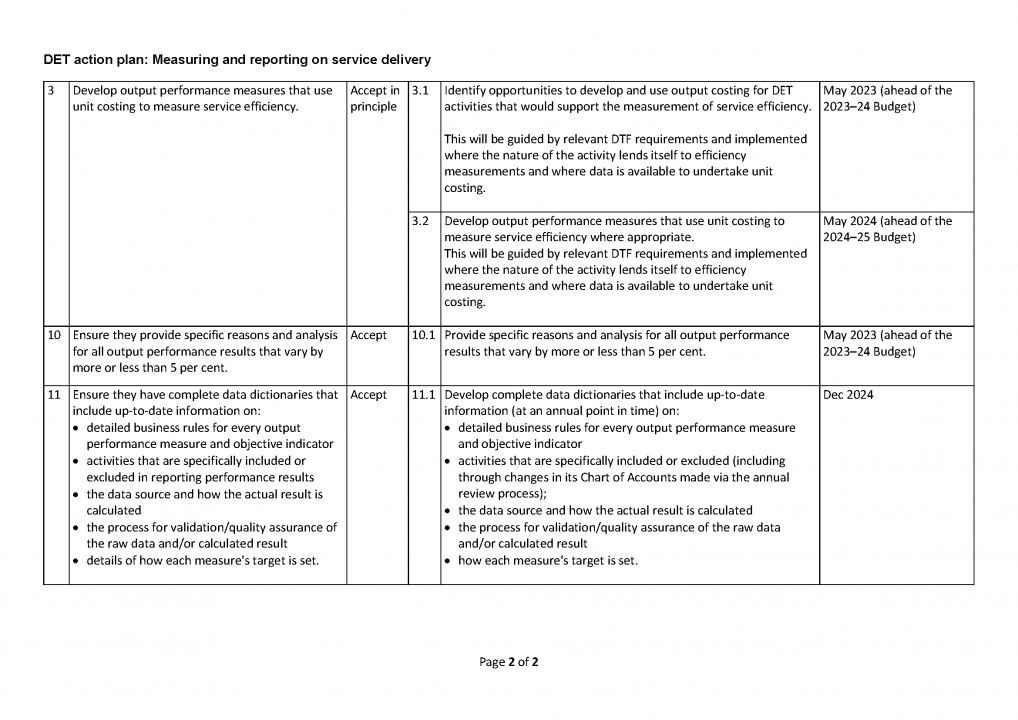

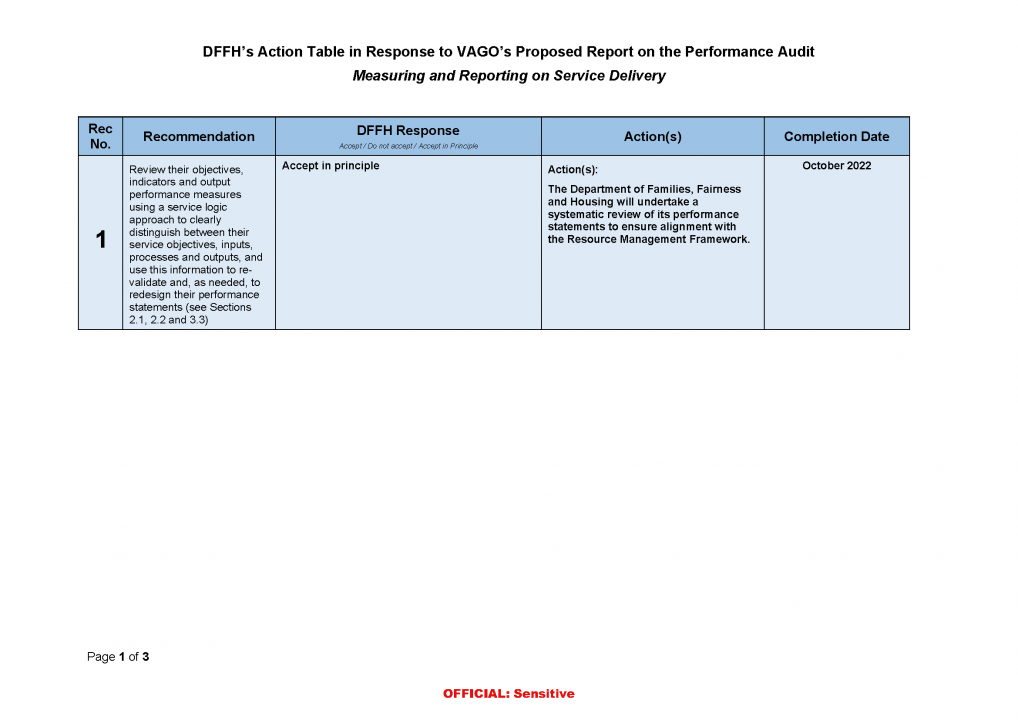

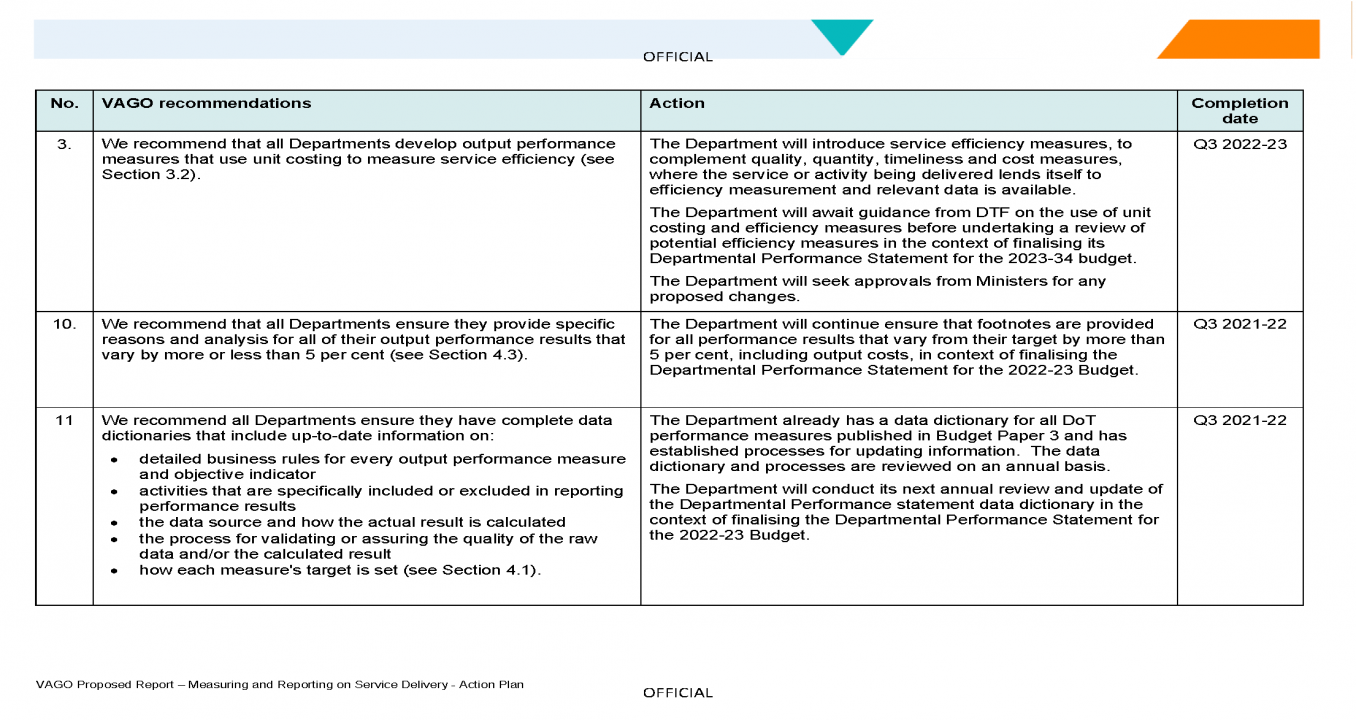

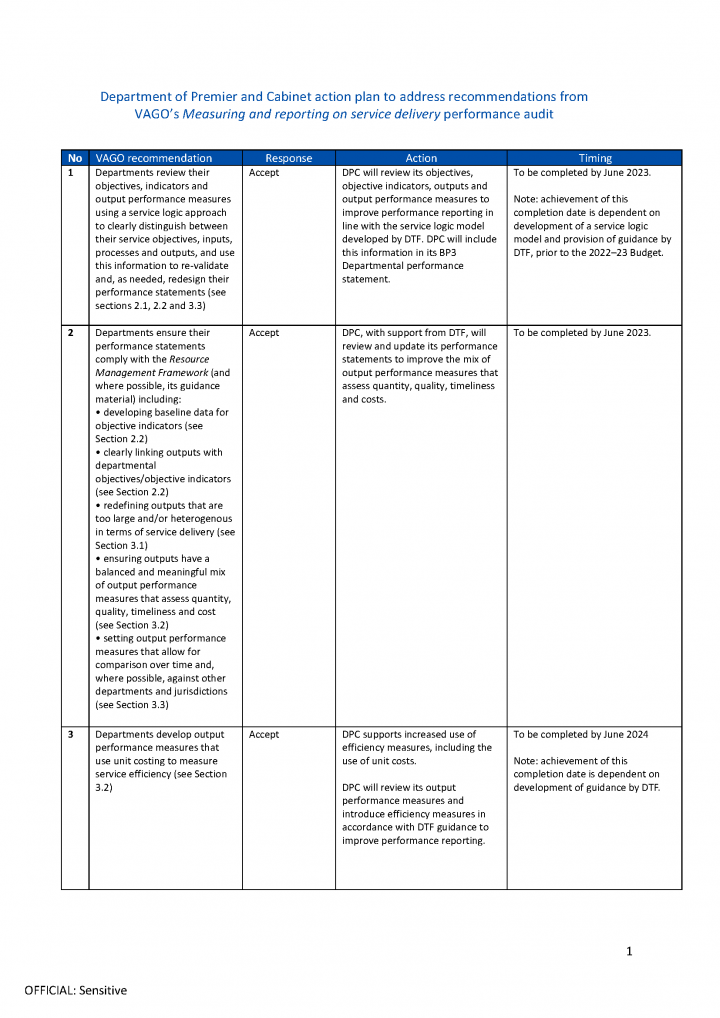

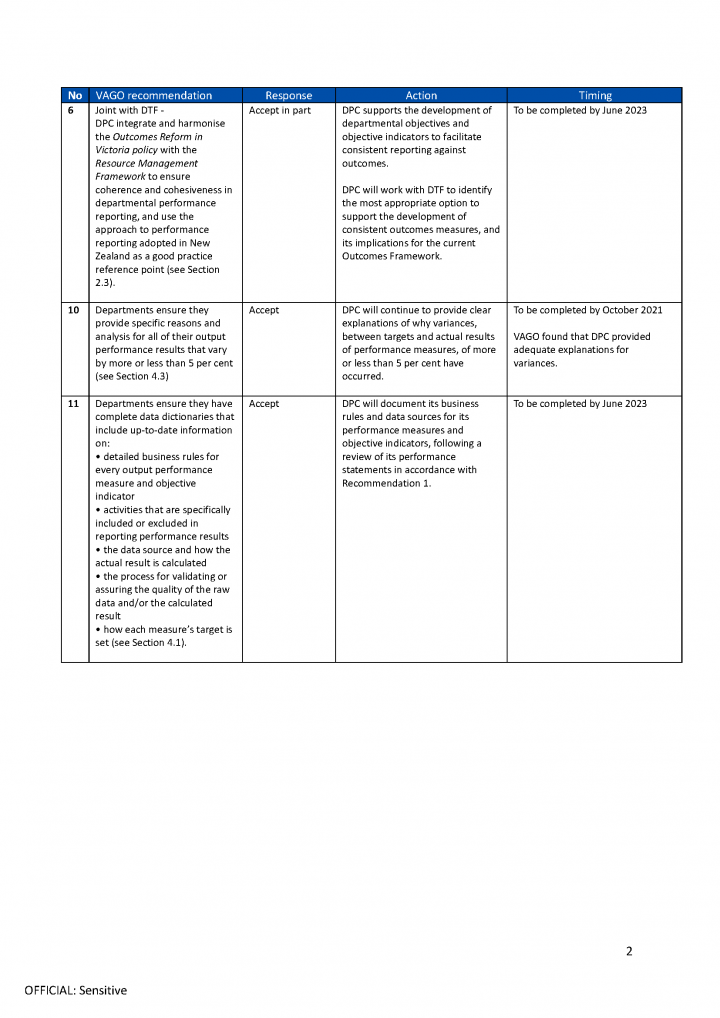

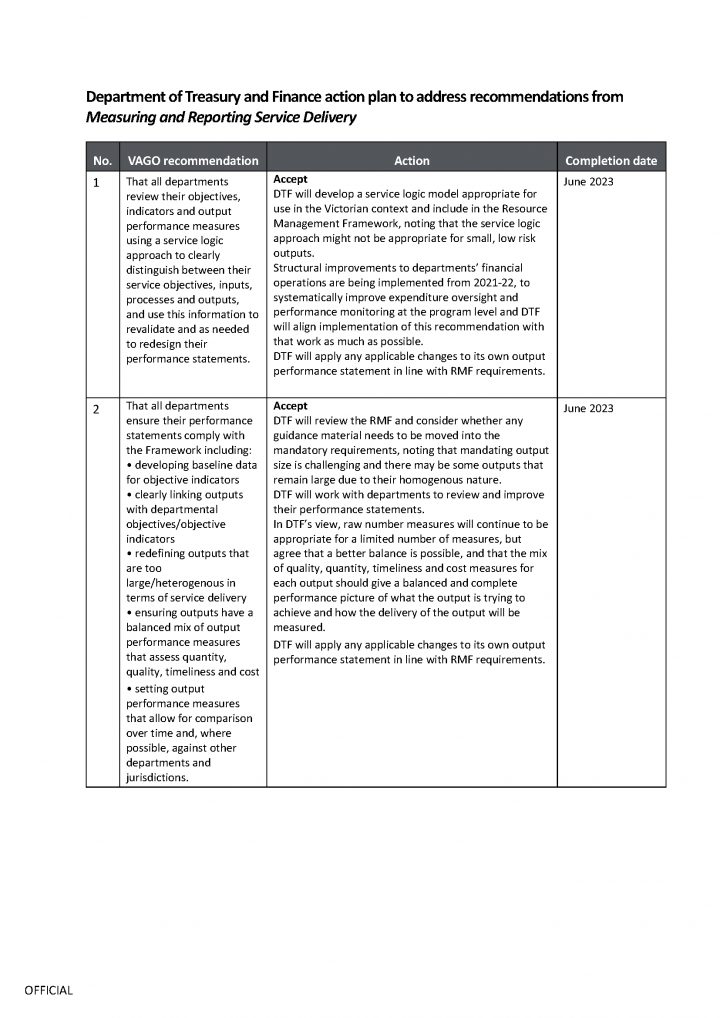

Recommendations about measuring objectives and output performance

| We recommend that: | Response | |

|---|---|---|

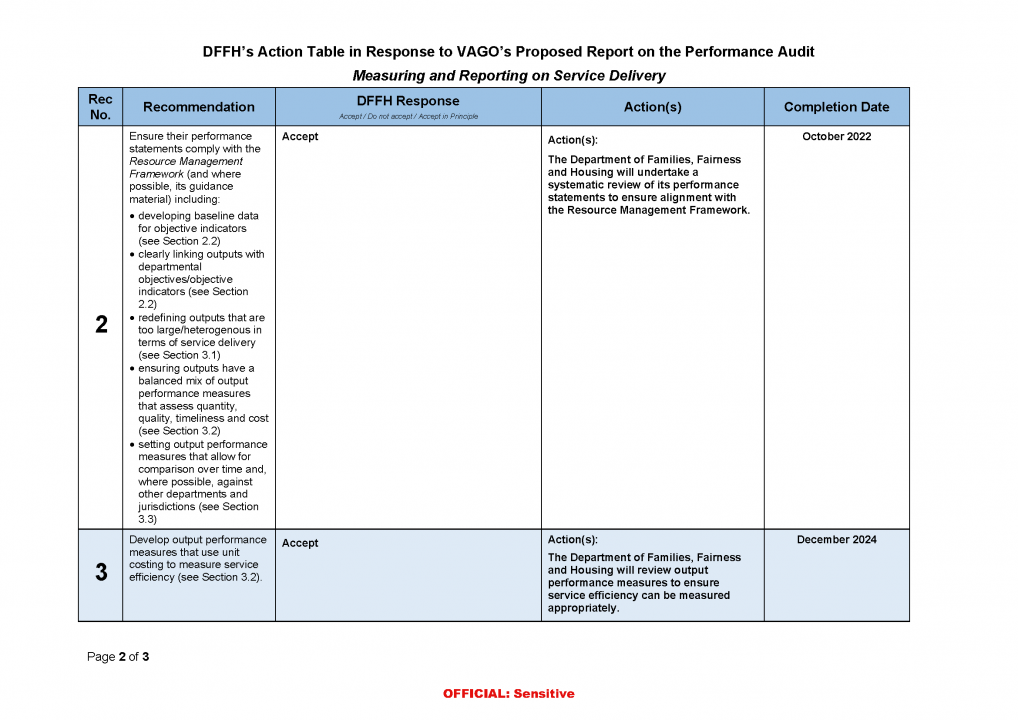

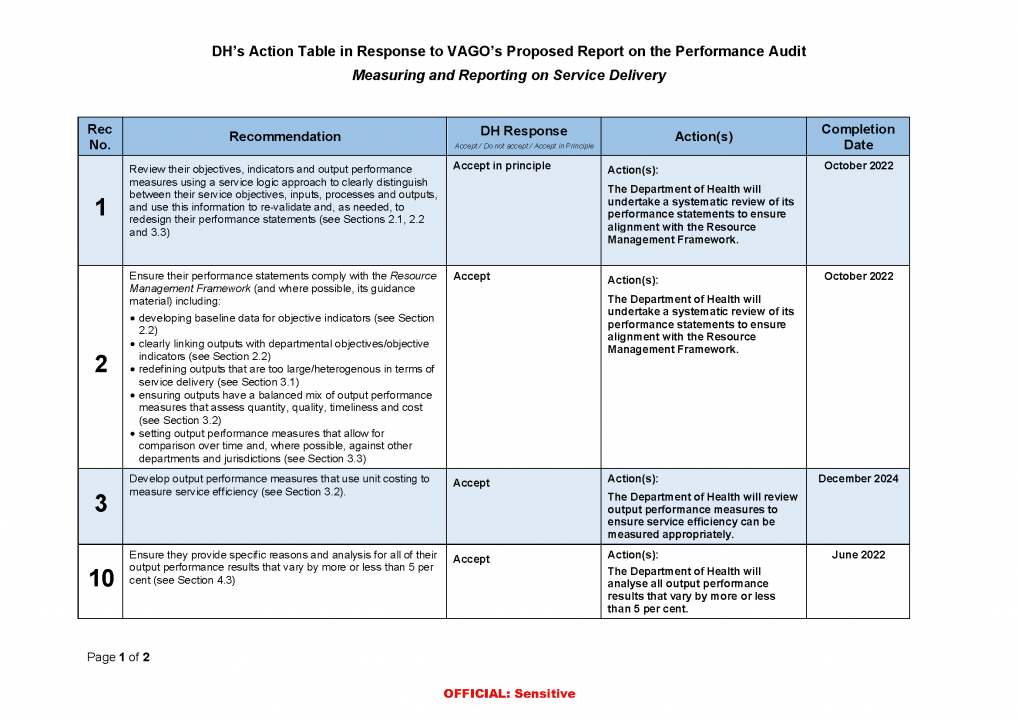

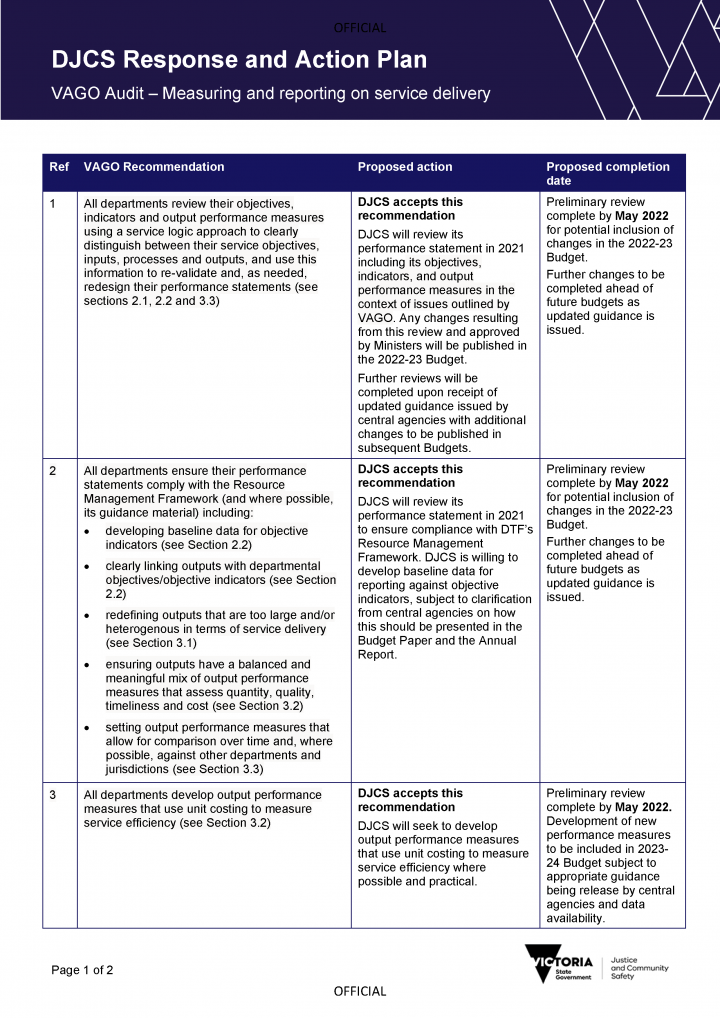

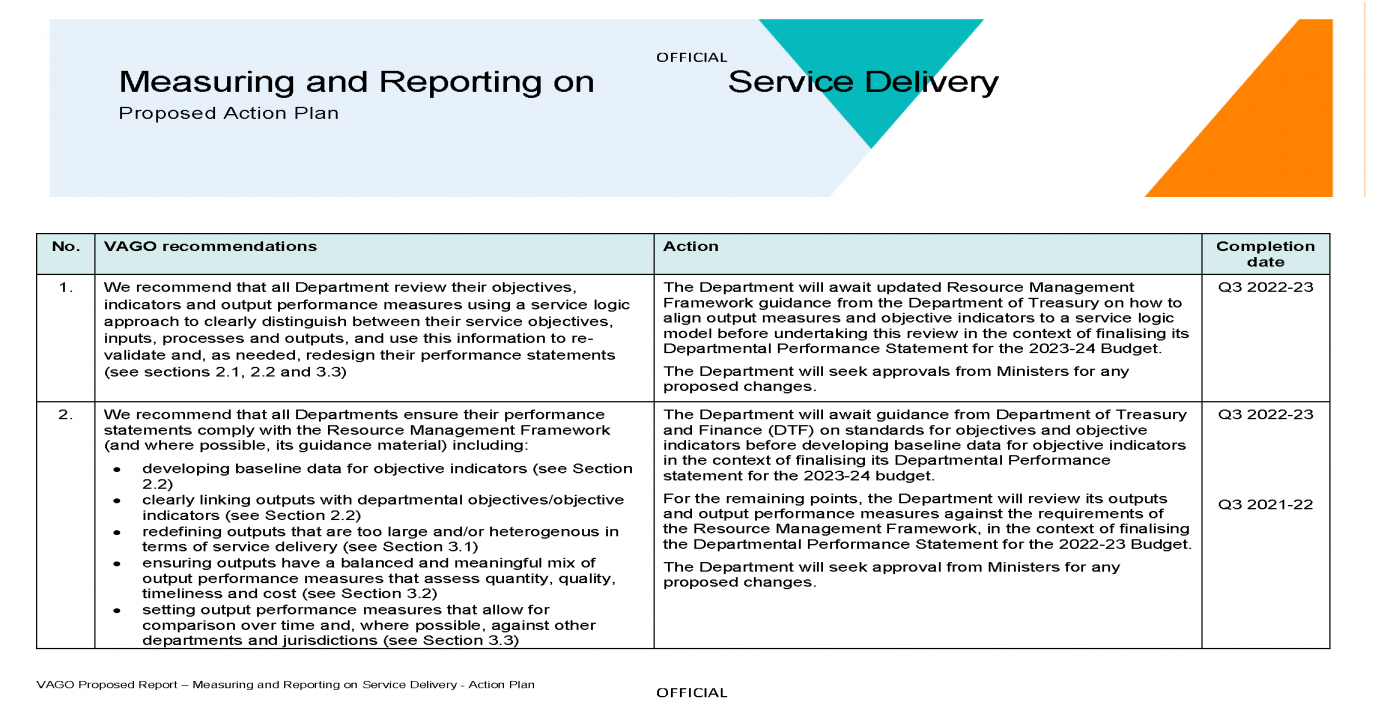

| All departments | 1. review their objectives, indicators and output performance measures using a service logic approach to clearly distinguish between their service objectives, inputs, processes and outputs, and use this information to re-validate and, as needed, redesign their performance statements (see Sections 2.1, 2.2 and 3.3) | Accepted by: DELWP, DET, DJCS, DJPC, DPC, DTF and DoT Accepted in principle by: Department of Families, Fairness and Housing (DFFH), Department of Health (DH) |

2. ensure their performance statements comply with the Resource Management Framework (and, where possible, its guidance material) including:

|

Accepted by: All departments | |

| 3. develop output performance measures that use unit costing to measure service efficiency (see Section 3.2). | Accepted by: DFFH, DH, DJCS, DJPR, DPC, DTF Accepted in principle by: DELWP, DET, DoT |

|

| Department of Treasury and Finance | 4. improves the Resource Management Framework's guidance materials to:

|

Accepted |

| 5. in its annual review of departmental performance statements as part of the Budget process, advise the Assistant Treasurer on the extent to which each department’s performance statements comply with all mandatory requirements of the Resource Management Framework (see Sections 2.1, 2.2, 3.1, 3.2 and 3.3). | Accepted in principle | |

| Department of Treasury and Finance and Department of Premier and Cabinet | 6. integrate and harmonise the Outcomes Reform in Victoria policy with the Resource Management Framework to ensure coherence and cohesiveness in departmental performance reporting, and use the approach to performance reporting adopted in New Zealand as a good practice reference point (see Section 2.3). | Partially accepted by: DPC Accepted in principle by: DT |

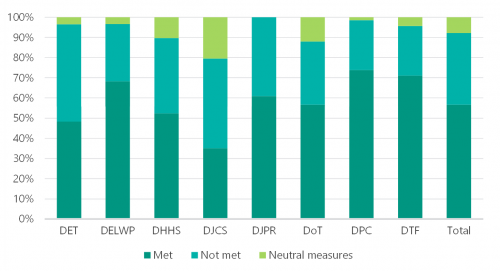

Neutral measures are measures where meeting or not meeting the target does not provide meaningful information about a department’s performance. For example, with DHHS’s output performance measure, ‘Reports to Child Protection Services about the wellbeing and safety of children’, it is not clear what the department is aiming to achieve. A result below the target may mean that preventative services to support child safety are working as intended. On the other hand, a result above the target may mean that there are higher levels of reporting on the wellbeing and safety of children, which could also be a positive result.

A data dictionary is a centralised repository of information about data, such as its meaning, relationships to other data, origin, usage and format. An alternative term is a 'metadata repository'.

Using performance information

The information that departments publish provides some useful insights about elements of their performance. However, current publications of departments’ performance do not clearly demonstrate performance over time to show whether a department’s service delivery is improving or not.

Not all departments publicly report performance results across multiple years in their annual reports, and BP3 only compares expected performance for the current year to results from the previous year. While DTF also publishes all departments' historical performance results as Microsoft Excel files on its website, the format means the user must manually create their own graphs to show performance trends.

Given that identifying performance successes and issues is the purpose of performance reporting, the lack of trended data is a significant missed opportunity.

To address this, we developed an interactive dashboard to show departments' performance information in a more meaningful and user-friendly way. It presents data from DTF’s website and departments' annual reports since 2008–09.
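The DTF spreadsheets mentioned above hold historical results that users must chart themselves. As a rough sketch of the kind of trend calculation a user would otherwise do by hand, the following reads a small CSV extract for one measure and computes the year-on-year change in the actual result. The column names and figures are hypothetical and do not reflect the layout of DTF's actual files.

```python
import csv
import io
from typing import List, Tuple

# Hypothetical extract: one row per financial year for a single measure.
RAW = """year,actual
2016-17,96
2017-18,98
2018-19,103
2019-20,108
"""


def load_results(text: str) -> List[Tuple[str, float]]:
    """Parse (year, actual result) pairs from CSV text with a header row."""
    rows = csv.DictReader(io.StringIO(text))
    return [(row["year"], float(row["actual"])) for row in rows]


def year_on_year(results: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Change in the actual result from each year to the next."""
    return [(later[0], later[1] - earlier[1]) for earlier, later in zip(results, results[1:])]


results = load_results(RAW)
for year, change in year_on_year(results):
    print(f"{year}: {change:+.1f}")
```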

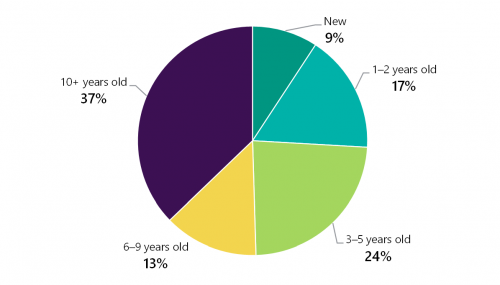

Our dashboard shows that in 2019–20, departments reported meeting a combined total of 57 per cent of their output performance measure targets. They did not meet 37 per cent of their targets. We categorised the remaining 6 per cent as neutral measures. The dashboard is accessible on our website.
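One way results could be sorted into these three categories is sketched below. It assumes each measure records whether a higher or lower result is desirable, or has no preferred direction (a neutral measure). This is not VAGO's actual dashboard logic. The `Result` fields, measure names and figures are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Result:
    measure: str
    target: float
    actual: float
    direction: Optional[str]  # "higher", "lower", or None for a neutral measure


def categorise(r: Result) -> str:
    """Classify a result as 'met', 'not met' or 'neutral'."""
    if r.direction is None:
        return "neutral"
    if r.direction == "higher":
        return "met" if r.actual >= r.target else "not met"
    if r.direction == "lower":
        return "met" if r.actual <= r.target else "not met"
    raise ValueError(f"unknown direction: {r.direction!r}")


def shares(results: List[Result]) -> Dict[str, float]:
    """Proportion of results falling into each category."""
    counts = Counter(categorise(r) for r in results)
    total = len(results)
    return {category: counts[category] / total for category in ("met", "not met", "neutral")}


# Hypothetical results, for illustration only.
results = [
    Result("Hypothetical services delivered", 100, 104, "higher"),
    Result("Hypothetical average wait time (days)", 30, 35, "lower"),
    Result("Hypothetical reports received", 5000, 5200, None),
    Result("Hypothetical completion rate (%)", 90, 92, "higher"),
]
print(shares(results))  # {'met': 0.5, 'not met': 0.25, 'neutral': 0.25}
```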

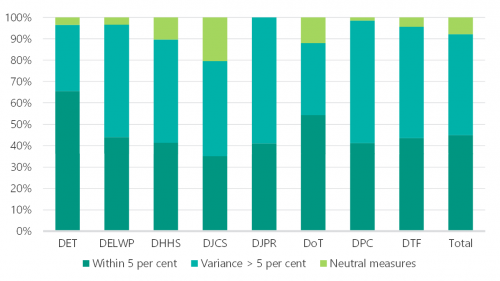

In addition to the lack of trended performance information, departments do not always meet requirements to give clear explanations when their output performance results vary by more than 5 per cent above or below target. They either fail to provide any reason or simply state that the target was exceeded or not met. Without proper explanations of the cause of variances, departments are not fulfilling Framework requirements and are therefore impairing accountability.
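The 5 per cent rule can be checked mechanically. This minimal sketch makes two assumptions. First, variance is the difference between the actual result and the target, expressed as a percentage of the target. Second, an explanation is inadequate if it is empty or only restates that the target was exceeded or not met. The measure names, figures and list of generic phrases are hypothetical.

```python
from typing import List, Tuple

# Hypothetical phrases treated as restating the result rather than explaining it.
GENERIC = {"", "target exceeded", "target not met", "target was exceeded", "target was not met"}


def variance_pct(actual: float, target: float) -> float:
    """Percentage difference between the actual result and the target."""
    if target == 0:
        raise ValueError("variance is undefined for a zero target")
    return (actual - target) / abs(target) * 100


def needs_explanation(actual: float, target: float, threshold: float = 5.0) -> bool:
    """True when the result is more than `threshold` per cent above or below target."""
    return abs(variance_pct(actual, target)) > threshold


def explanation_gaps(results: List[Tuple[str, float, float, str]], threshold: float = 5.0) -> List[str]:
    """Measures whose variance exceeds the threshold but lack a specific explanation."""
    return [
        measure
        for measure, target, actual, explanation in results
        if needs_explanation(actual, target, threshold)
        and explanation.strip().lower() in GENERIC
    ]


# Hypothetical (measure, target, actual, explanation) records.
results = [
    ("Measure A", 200, 212, ""),                # 6 per cent above target
    ("Measure B", 80, 77, ""),                  # 3.75 per cent below target
    ("Measure C", 1_000, 900, "Target not met"),  # 10 per cent below target
]
print(explanation_gaps(results))  # ['Measure A', 'Measure C']
```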

Data accuracy

With the exception of DJCS, departments are also not properly documenting the business rules and data sources for their measures, which creates risks to data integrity. This is inconsistent with the Framework’s guidance. DPC has no data dictionary for its measures, and other departments' dictionaries do not include all of the required information. For example, some are missing vital items such as detailed measure definitions, calculation formulas and data sources. This lack of documentation creates a risk that departments may not collect and present their performance data consistently and accurately.
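A completeness check of this kind could be automated. The sketch below assumes a data dictionary stored as a mapping from measure name to fields. The required field names are drawn from the items mentioned in this report (definition, calculation formula, data source and frequency of data collection), but the field names, measures and entries themselves are hypothetical.

```python
from typing import Dict, List

# Assumed required fields, based on the items this report says are often missing.
REQUIRED_FIELDS = ("definition", "calculation", "data_source", "collection_frequency")


def incomplete_entries(dictionary: Dict[str, Dict[str, str]],
                       reported_measures: List[str]) -> Dict[str, List[str]]:
    """Map each reported measure to the required fields it lacks.

    A measure with no dictionary entry at all is reported as missing every field.
    """
    gaps: Dict[str, List[str]] = {}
    for measure in reported_measures:
        entry = dictionary.get(measure, {})
        missing = [field for field in REQUIRED_FIELDS if not entry.get(field, "").strip()]
        if missing:
            gaps[measure] = missing
    return gaps


# Hypothetical data dictionary entries, for illustration only.
data_dictionary = {
    "Assessments completed": {
        "definition": "Count of client assessments completed in the financial year",
        "calculation": "Sum of assessments with status 'complete'",
        "data_source": "Hypothetical case management system",
        "collection_frequency": "Monthly",
    },
    "Client satisfaction": {
        "definition": "Share of surveyed clients reporting they were satisfied",
        "calculation": "",
    },
}
reported = ["Assessments completed", "Client satisfaction", "Average wait time"]
print(incomplete_entries(data_dictionary, reported))
```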

For the selection of departments (DET, DHHS and DTF) and measures (across seven outputs) where we checked controls over performance reporting and recalculated the results, we found reasonable processes and confirmed accurate results.

Unlike departments' financial statements, which we independently audit, there is no legislated requirement for departments’ performance statements to be independently audited either in BP3 or in departments’ annual reports.

In BP3, departments present performance statements that report their objectives, objective indicators and output performance measures and targets. This includes their expected performance for that year and their actual performance for the previous year.

In contrast, local government, water authorities and Technical and Further Education (TAFE) entities in Victoria are required to have their annual performance statements independently audited. Western Australia requires an independent audit of its departments' performance statements and this will also commence in New Zealand from January 2022.

Under current arrangements in Victoria, Parliament and the community only have independent assurance of the accuracy and fair presentation of public sector agencies’ financial statements. Yet financial statements of public sector agencies only report on how much is spent, not how well resources have been used in the provision of goods and services.

From this perspective, it is arguable that service delivery performance reporting on an outcome and output basis is at least as important as, if not more important than, input-based financial reports. It is therefore unclear why non-financial service performance information receives less assurance than financial information.

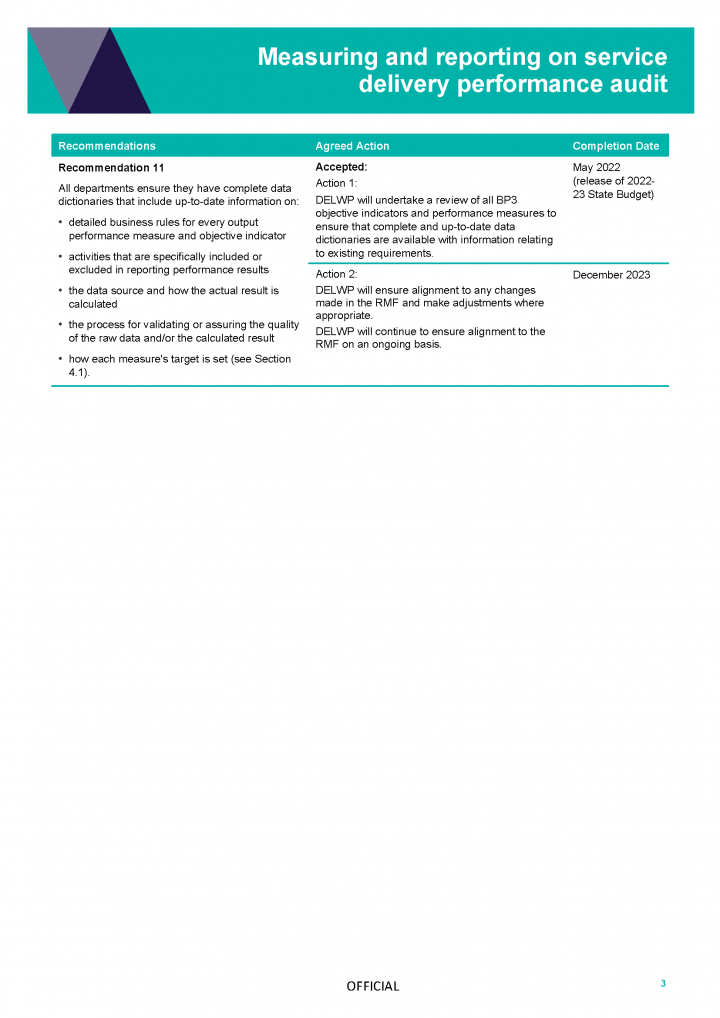

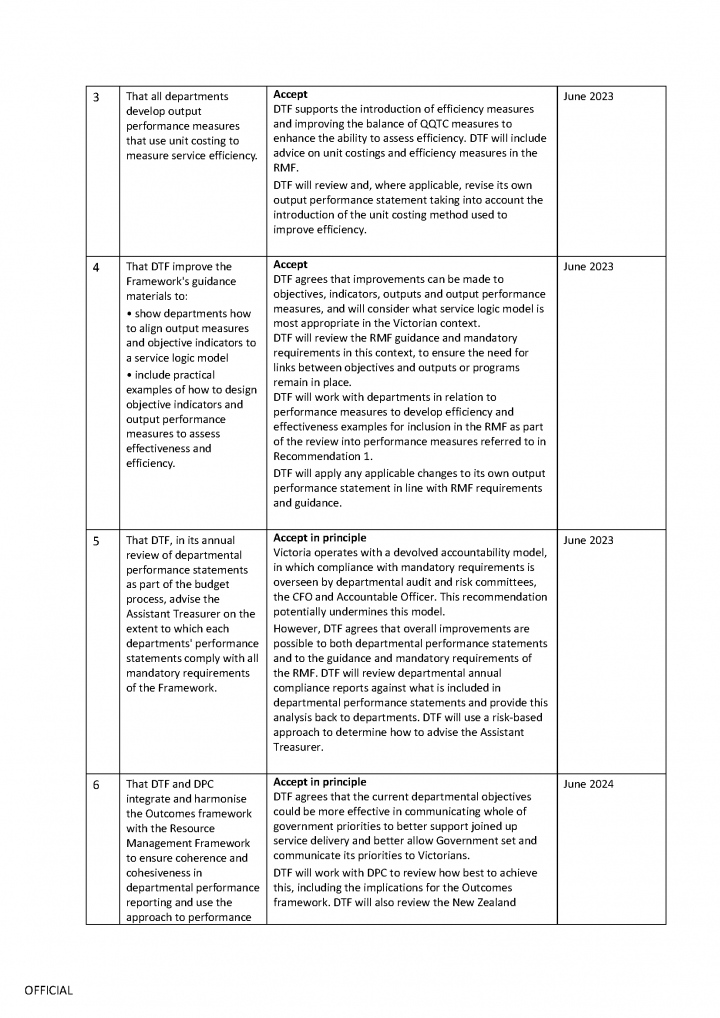

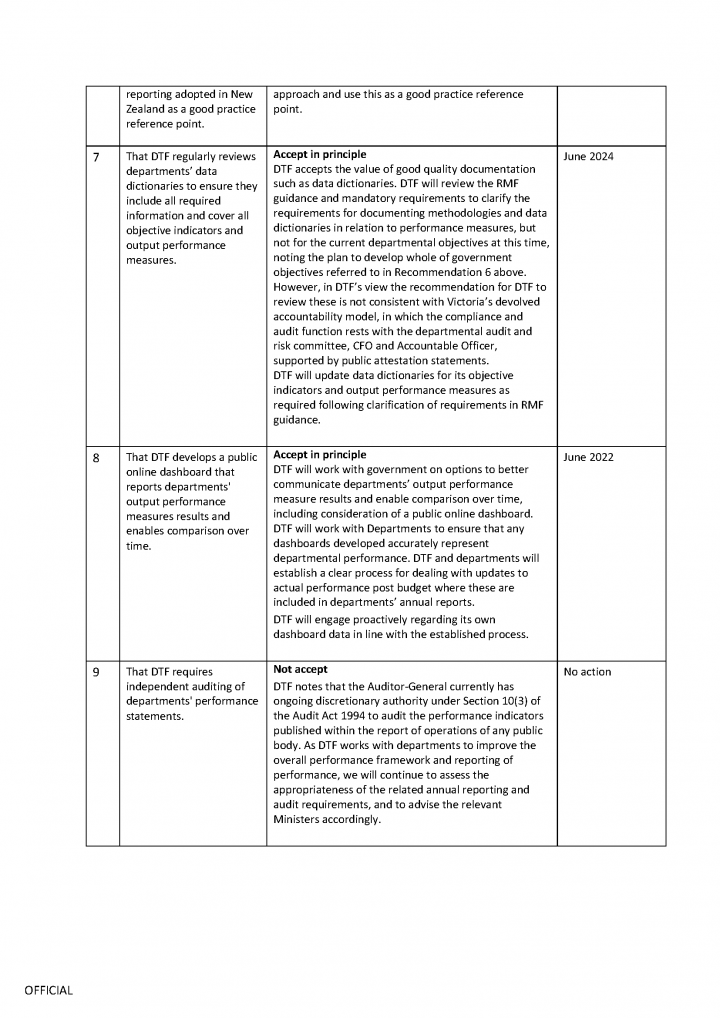

Recommendations to support useful performance reporting

| We recommend that: | Response | |

|---|---|---|

| Department of Treasury and Finance | 7. regularly reviews departments’ data dictionaries to ensure they include all of the required information and cover all of their objective indicators and output performance measures (see Section 4.1) | Accepted in principle |

| 8. develops a public online dashboard that reports departments' output performance measures results and enables comparison over time (see Section 4.3) | Accepted in principle | |

| 9. requires independent auditing of departments' performance statements (see Section 4.4). | Not accepted | |

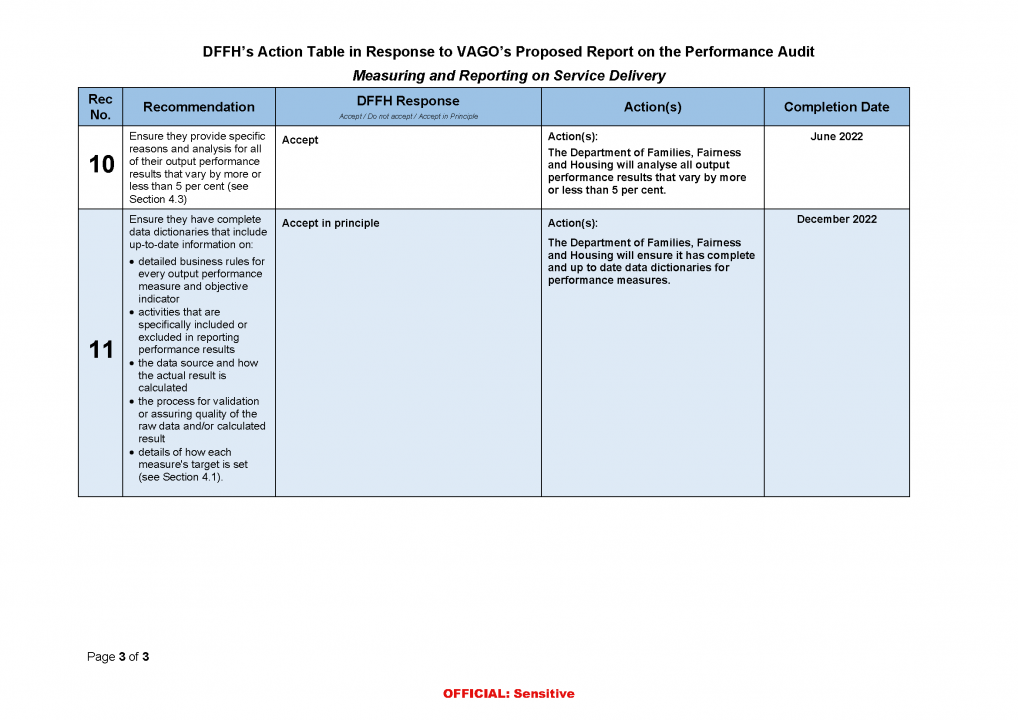

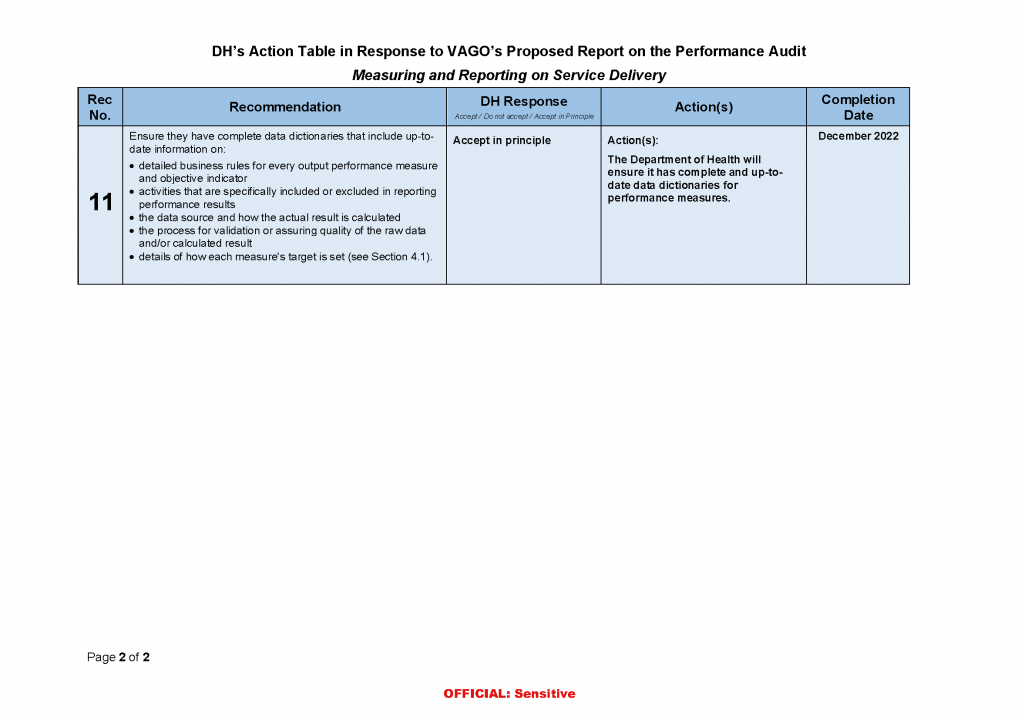

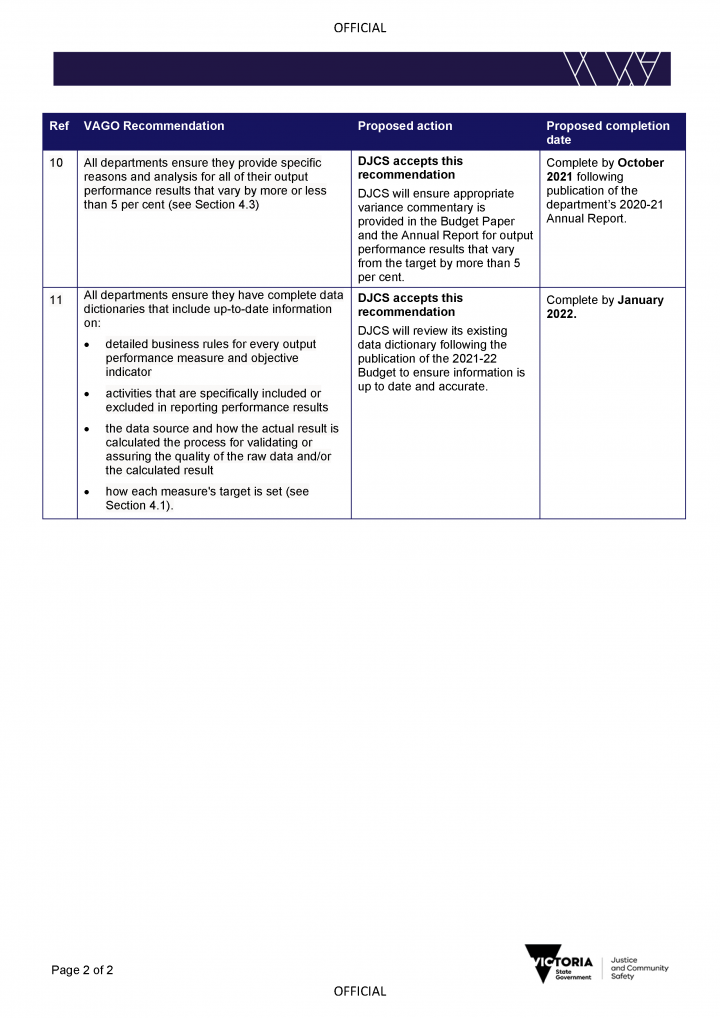

| All departments | 10. ensure they provide specific reasons and analysis for all of their output performance results that vary by more or less than 5 per cent (see Section 4.3) | Accepted by: All departments |

11. ensure they have complete data dictionaries that include up to date information on:

|

Accepted by: DELWP, DET, DJCS, DJPR, DoT, DPC, DTF Accepted in principle by: DFFH, DH |

1. Audit context

Departments measure and report on their service performance to show what they have delivered with public money. This information helps the government to allocate funding, and Parliament and the community to understand if departments are delivering efficient and effective services.

DTF sets performance reporting requirements for departments. Each year, departments provide details of their objectives and associated performance measures, targets and results in the state's Budget papers. Departments also publicly report on their performance in their annual reports.

This chapter provides essential background information about:

1.1 Measuring performance

Governments have a broad range of service delivery obligations set in legislation as well as specific objectives expressed through government policies. Governments make investment decisions to support the achievement of their objectives and allocate funding to departments to deliver these objectives through the annual budget process.

Departmental objectives relate to the most fundamental aspects of community life. They focus on delivering health, education and justice services, constructing and maintaining transport infrastructure, and efforts to protect the environment. As such, it is critical that departments use a performance measurement system that allows the government, Parliament and the community to understand the impact that taxpayer-funded government services have on achieving these objectives.

Government departments need to measure and report on their performance to:

- be accountable for, and transparent about, how they use public money

- monitor and benchmark their performance over time and identify opportunities to improve their services

- support government decision-making

- enable the government to assess if it is achieving its policy objectives.

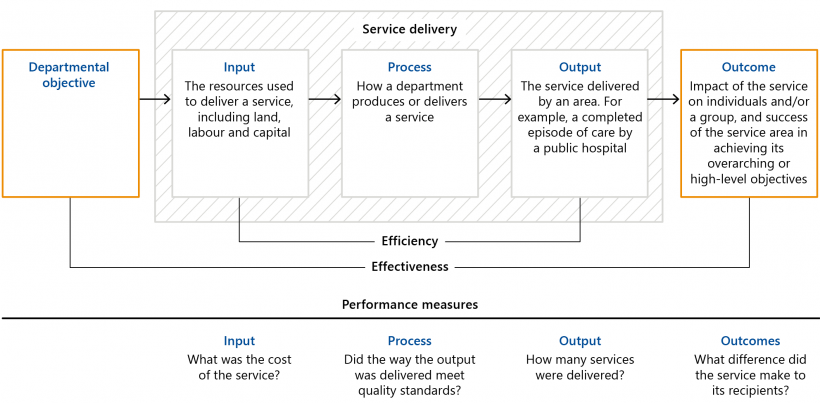

To effectively measure performance, it is important that departments understand the 'service logic' of the policy initiatives and services they deliver. By using a service logic model, departments can identify the distinct parts of a 'service' and show how its funding and activities relate to its desired outcome. By identifying the parts that make up a service, departments can then design relevant performance measures that can show if the desired outcomes are being met.

This method is demonstrated by the Productivity Commission in its Report on Government Services (RoGS).

Productivity Commission's RoGS

Each year, the Productivity Commission produces RoGS to provide comparable, public information on the equity, efficiency and effectiveness of government services in Australia.

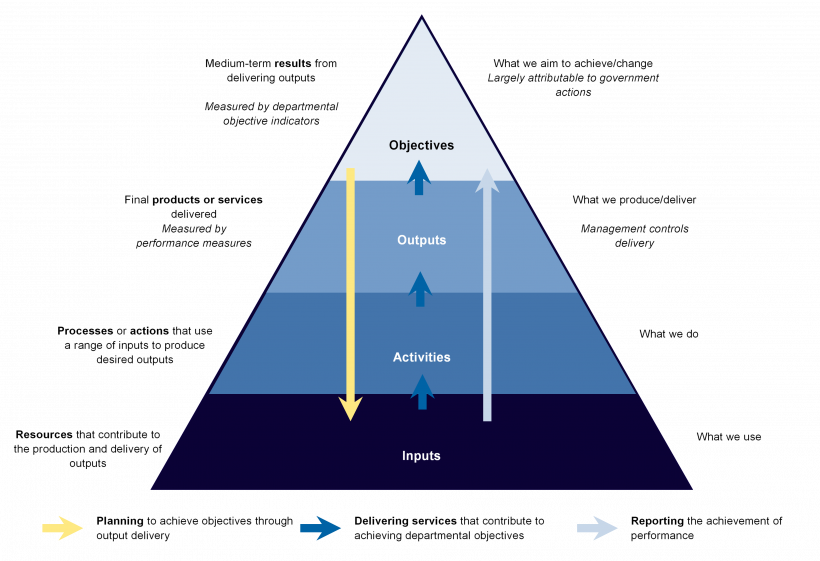

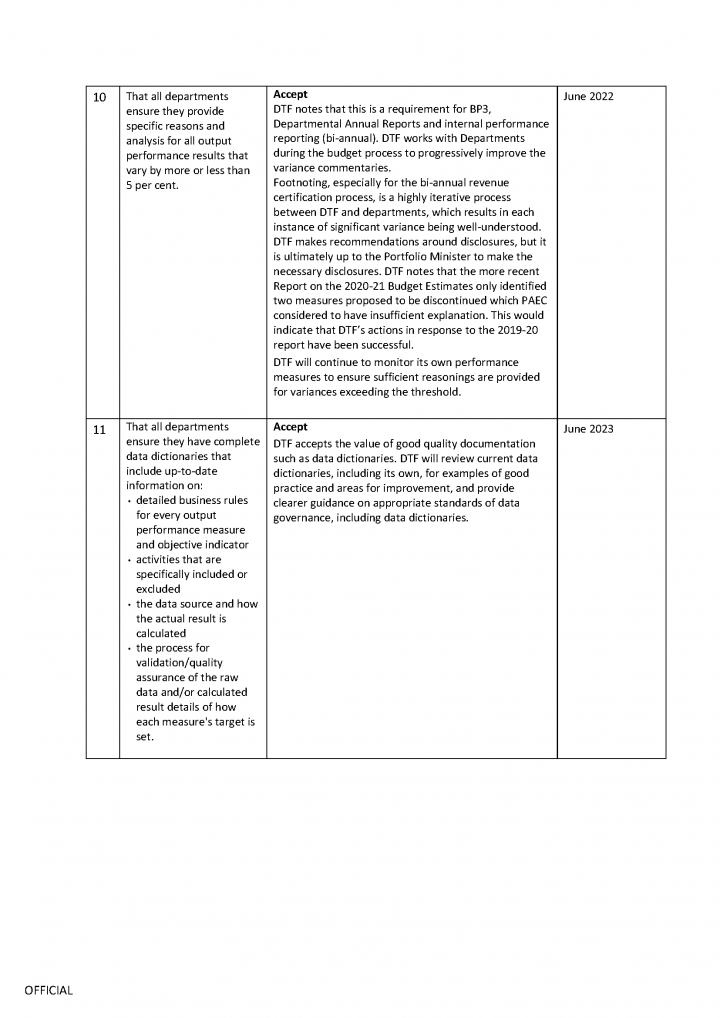

As shown in Figure 1A, the Productivity Commission uses a service logic model to produce RoGS. This allows it to report on how government departments transform their inputs into outputs to achieve their desired outcomes. The figure also shows how performance measures can align with each part in the model.

FIGURE 1A: The Productivity Commission's service logic model and definitions

Note: Service element definitions are from RoGS.

Source: VAGO, based on information from the Productivity Commission.

Resource Management Framework

The Framework, which DTF updated in May 2020, is the overarching policy for the state Budget process and performance reporting. It also sets out a service logic that is similar to the one used by the Productivity Commission. Figure 1B shows that to meet government priorities, departments need to determine how their inputs and activities are converted into outputs that contribute to their objectives.

It is important that departments design performance measures that clearly relate to the part in the service logic they wish to measure.
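To illustrate what relating a measure to a part of the service logic can look like in practice, the sketch below tags each measure with a stage and profiles an output group by stage. Assigning the stage remains a manual judgement; the code only summarises the tags. The stage names follow the input, process, output and outcome parts discussed above. The measures are hypothetical, loosely modelled on the pattern this report describes for an output group dominated by outcome measures.

```python
from collections import Counter
from enum import Enum
from typing import Dict, List, Tuple


class Stage(Enum):
    """Parts of a simple service logic, in the order inputs flow to outcomes."""
    INPUT = "input"
    PROCESS = "process"
    OUTPUT = "output"
    OUTCOME = "outcome"


def stage_profile(measures: List[Tuple[str, Stage]]) -> Dict[Stage, int]:
    """Count how many measures sit at each stage of the service logic."""
    counts = Counter(stage for _, stage in measures)
    return {stage: counts.get(stage, 0) for stage in Stage}


def output_share(measures: List[Tuple[str, Stage]]) -> float:
    """Proportion of measures that actually measure outputs."""
    return stage_profile(measures)[Stage.OUTPUT] / len(measures)


# Hypothetical output group; each stage label is a manual judgement.
group = [
    ("Total output cost", Stage.INPUT),
    ("Website visits", Stage.OUTPUT),
    ("Jobs created", Stage.OUTCOME),
    ("Businesses attracted", Stage.OUTCOME),
]
for stage, count in stage_profile(group).items():
    print(stage.value, count)
print(f"Output measures: {output_share(group):.0%}")
```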

1.2 Measuring outcomes

Performance reporting that measures outcomes allows departments to better understand and demonstrate their impact in the community. Measuring outcomes can identify when a particular government policy is working and should be continued or expanded, or when it is not and requires change.

Measuring the outcomes of government service delivery can be challenging because the types of outcomes that governments often seek, such as better education, are influenced by many different factors. This highlights the value of using a service logic to understand how a policy or program contributes to achieving an outcome and how best to measure it.

In Victoria, government departments are required to report on their progress in achieving their outcomes through 'objective indicators'. These are expressed in the annual state Budget papers and departments report on their achievement against these objective indicators in their annual reports.

As set out in government policy, departmental objective indicators:

- reflect the effects or impacts that the government, through departments, seeks to have on the community and other key stakeholders

- are usually set with a medium- to long-term (four years or more) timeframe

- describe the department’s contributions to government objectives.

In February 2019, DPC introduced the Outcomes policy to strengthen outcome reporting. The Outcomes policy acknowledges that a focus on measuring outputs does not provide information about the impact of a government activity. The policy aims to embed a more consistent approach to measuring:

- outcomes across the government

- the impact of cross-department initiatives and projects.

In alignment with this work, departments have developed a range of outcomes for specific service areas that overlap to varying degrees with their reporting on objective indicators in the Budget papers and their annual reports. These include, for example, outcomes specific to:

- family violence

- mental health

- public health and wellbeing

- community safety

- multicultural affairs

- gender equality.

Departments often undertake their own bespoke reporting against these frameworks.

1.3 Measuring outputs

Each year, departments receive funding appropriations to deliver specific services, or 'outputs'. This is the ‘price’ the government pays for public goods and services.

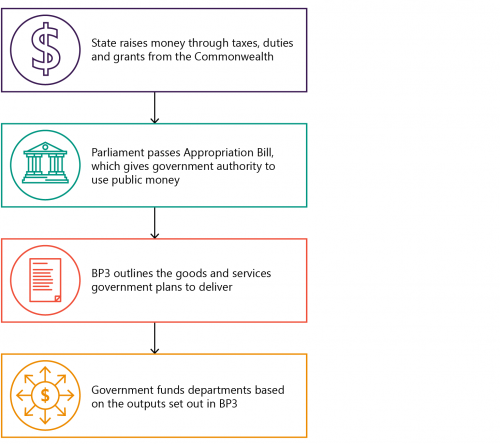

As shown in Figure 1C, BP3 outlines the goods and services that the government plans to deliver across all departments. Parliament then endorses this plan by passing the annual Appropriation Bill (the Bill). The Bill gives the government the legal authority to use public money. Once the Bill is passed in Parliament, the government allocates funding to departments based on the outputs set in each department’s performance statement.

FIGURE 1C: The appropriation and state Budget process

Source: VAGO, based on information in the Framework.

Each department is required to submit an invoice claim twice a year to certify its revenue. DTF assesses the amount claimed in the invoice against the department's output performance measure results.

As defined in government policy, an output:

- is a final product, good or service produced or delivered by, or on behalf of, a department or public agency to external customers/recipients

- includes products and services delivered to the community or to other departments.

Prior to the mid-1990s, the Victorian Government funded agencies based on inputs. However, this method cannot provide assurance that departments are using their funds to optimise their outputs.

The value of reporting against output measures and targets (which generally identify the desired volume of an output) is that it should allow the government, Parliament and the community to identify the cost-efficiency of departmental service delivery. The results can then inform the government of the need to make funding changes or other interventions to improve efficiency where necessary.

1.4 Legal and policy framework for performance reporting

Departments' reporting obligations are governed by the:

- Financial Management Act 1994 (FMA)

- Standing Directions 2018 (the Standing Directions) issued by the Assistant Treasurer under section 8 of the FMA

- Framework, which is issued under section 4.3 of the Standing Directions

Financial Management Act 1994

The FMA allows departments to use public money in Victoria. It outlines the accountability processes that departments and other government agencies must follow and details how they should report their expenditure.

The Standing Directions establish standards for financial management accountability, governance, performance, sustainability, reporting and practice for government agencies.

Under the Standing Directions, DTF issued the Framework to support departments to meet the FMA's requirements.

The Framework

Portfolio agencies are ‘stand-alone’ entities that departments oversee in their sector. They also deliver the government’s outputs or services, and can include health services, TAFEs and certain transport-related agencies.

Departments must comply with the Framework and account for how they use public resources and achieve value for money in service delivery. Portfolio agencies that deliver services on behalf of departments must also use it. It guides departments on how to:

- set their performance objectives

- develop measures and targets to assess and report on their performance.

Requirements for departments’ performance statements

The Framework outlines how departments need to develop their yearly performance statements. It states that good-quality performance statements:

- help the government make informed decisions about allocating resources

- allow departments to develop and assess standards of service delivery in line with the government’s expectations

- allow Parliament and the community to understand the government’s performance and expenditure

- drive continuous improvement by analysing historical performance and negotiating agreed targets from year to year.

According to the Framework, departments should:

- document the assumptions and methodology they use to collect, analyse and report on their performance results. This includes specifying how they calculate their data, the source and frequency of data collection, and any other business rules and assumptions

- maintain performance records to a standard that allows an independent auditor to verify their integrity

- ensure each output represents an appropriate proportion of the department’s and the state’s Budget. An output should not be too large or combine different services or activities because this reduces transparency and accountability.

Figure 1D sets out the Framework's requirements and guidance for performance statements.

FIGURE 1D: The Framework's requirements and guidance for performance statements

| Component | Requirements and guidance |

|---|---|

| Departmental objectives | Must: … Should: … |

| Objective indicators | Must: … Should: … |

| Outputs | Must link to a departmental objective. |

| Output performance measures | Must: … Should: … |

| Performance statement reviews | Departments must: … |

Source: VAGO, based on the Framework.

1.5 Reporting on performance

Departments use objective indicators and output performance measures to monitor and report on their progress against their overall objectives. They do this through their internal reporting process as well as publicly reporting their results in BP3 and their annual reports.

BP3 sets out the goods and services (outputs) that departments expect to deliver with government funding. This is organised by departmental objectives and their associated outputs.

In BP3, departments present performance statements that report their objectives, objective indicators and output performance measures and targets. This includes the expected performance for the current financial year and actual performance for the previous year.

All departments must also produce an annual report that details their financial and service performance for the previous financial year. DTF's Model Report for Victorian Government Departments (the Model Report) outlines the information departments must include. It states that departments must report four years of results against their departmental objective indicators.

Performance statements

Performance statements in BP3 complement the financial information in the Budget papers.

|

Performance statements … |

Financial statements … |

|

|

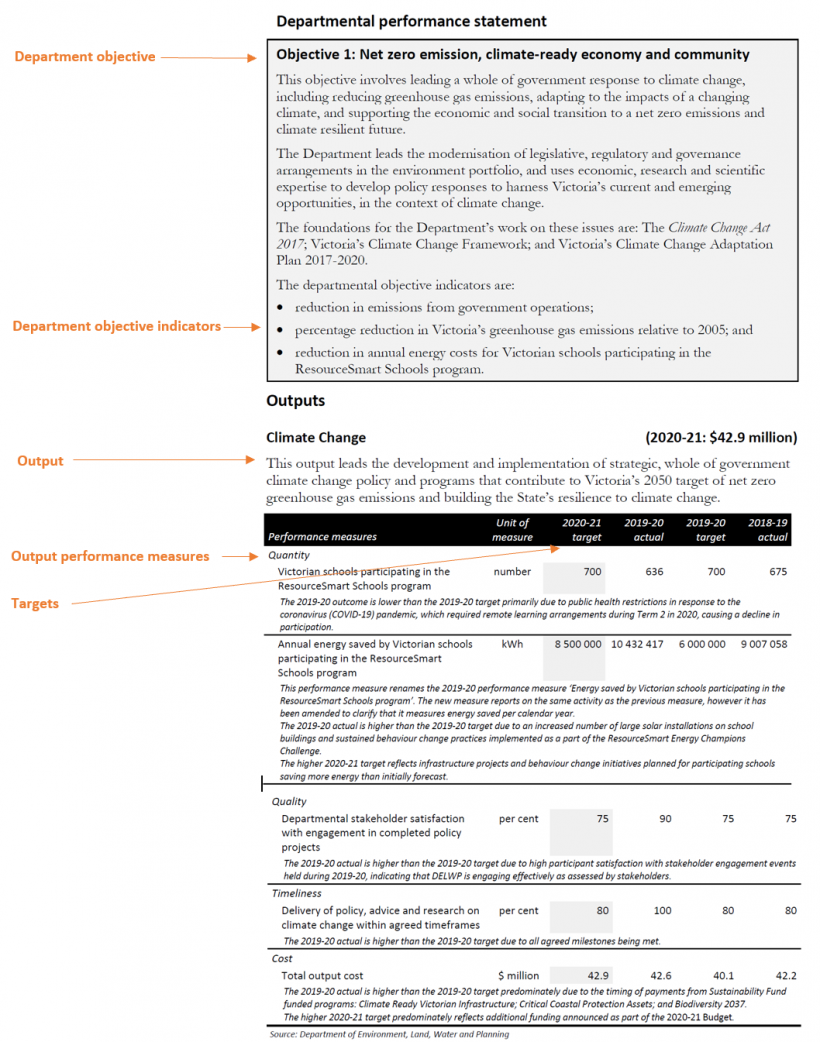

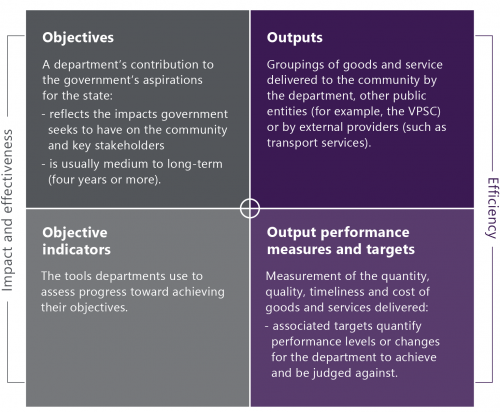

Figure 1E outlines the information contained in departments’ performance statements.

FIGURE 1E: Components of departments’ performance statements

Note: Victorian Public Sector Commission (VPSC) works to strengthen the efficiency, effectiveness and overall capability of the public sector while ensuring professionalism and integrity in all aspects of its operation.

Source: VAGO, based on information from the Framework.

Figure 1F is an example of a performance statement, in this case from DELWP, for one of its departmental objectives.

1.6 Roles and responsibilities

Department of Treasury and Finance

DTF provides advice to departments about their objectives and output performance measures but does not endorse or approve them. The relevant minister approves the sections of a department's performance statement that relate to their portfolio.

DTF supports the Assistant Treasurer by:

- providing advice on the quality and relevance of the suite of objectives, objective indicators, outputs and output performance measures in the departments' performance statements

- reviewing the departments' output performance and advising the government on risks that may impact service delivery.

DTF also briefs the government in February on agencies' achievements against their targets in BP3.

Our 2014 audit Public Sector Performance Measurement and Reporting identified the need for DTF to better support departments to develop meaningful performance statements and clear efficiency measures. At that time, we recommended that DTF:

- improves its guidance material on performance measurement to include more practical examples to help departments measure efficiency and effectiveness

- more rigorously and consistently assesses and communicates performance back to portfolio departments and government.

Government departments

Departments support their portfolio ministers in achieving the government’s objectives and priorities. As the accountable officer, a department’s secretary is responsible for:

- approving their department’s plans

- delivering outputs to the agreed performance standards

- supporting portfolio ministers to develop their department’s performance statement, medium-term plan and annual report.

Parliament

Parliament holds the government accountable for its overall performance and authorises the Appropriation Bill that accompanies the annual Budget.

To strengthen accountability and transparency for performance management, Parliament's Public Accounts and Estimates Committee (PAEC), at the invitation of the Assistant Treasurer, reviews output performance measures as part of the annual Budget process.

1.7 Previous audits on service performance reporting

Numerous VAGO audits in the last two decades have found significant weaknesses in the way that departments measure and report performance. Figure 1G summarises the findings from these audits.

FIGURE 1G: Key findings from previous VAGO audits on service performance reporting

| Audit title | Year | Findings |

|---|---|---|

| Departmental Performance Management and Reporting | 2001 | The performance management and reporting framework was not complete. Key components, including the government’s desired outcomes, measures of progress, departmental objectives and associated performance indicators, were yet to be finalised and publicly released. |

| Performance Management and Reporting: Progress Report and a Case Study | 2003 | The progress measures and performance indicators were poorly specified and did not allow the government to easily track departments' overall performance or assess their contributions to achieving the government's outcomes. |

| Performance Reporting by Departments | 2010 | Departments did not consistently measure or clearly report how well they were achieving outcomes that were consistent with government policy objectives. Only a few departments were able to demonstrate the extent to which they had met their objectives. Stronger central agency leadership was needed due to little progress in measuring and communicating outcomes over the previous decade. |

| Public Sector Performance Measurement and Reporting | 2014 | BP3 and annual reports that were meant to explain performance were impenetrable documents because: … DTF's oversight of the performance measurement and reporting system was only partly effective. Its efforts to guide, support and check on departments' progress were visible but inadequate. |

Source: VAGO.

VAGO’s December 2012 Reflections on audits 2006–12: Lessons from the past, challenges for the future summarised repeated and significant weaknesses, including:

- departments not using appropriate measures of performance

- departments failing to measure outcomes

- insufficient guidance, advice and oversight by central agencies to support departments to implement the performance measurement system.

2. Measuring outcomes

Conclusion

Departments have not consistently developed or reported on objective indicators that show their achievement against their stated objectives. This means departments are not meeting the Framework's mandatory requirements. More importantly, it weakens departments' accountability and transparency by preventing the government, Parliament and the community from accessing vital information about their performance. Without information on departments' outcome achievement, the government lacks a sound basis for future investment and policy decisions.

Common issues that weaken outcome measurement across departments include:

- incorrectly using output rather than outcome objective indicators

- setting vague objective indicators that are hard to interpret and calculate results against

- not having baseline data to assess performance against.

While DPC's recent Outcomes policy aims to improve how departments approach measuring their outcomes, it misses a significant opportunity by not linking to the Framework, which is the state's primary accountability mechanism.

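This chapter discusses:

- how departments set objectives

- how departments set objective indicators

- DPC's Outcomes policy.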

2.1 How departments set objectives

Objectives must express a clear, measurable achievement

The starting point for a performance measurement system is to be clear about the desired objective of the activity you are measuring. Most departmental objectives for 2020–21 meet the Framework’s requirement that departments clearly set out the outcomes they intend to achieve with their funding.

Examples of clear objectives that focus on outcomes include:

- ‘Raise standards of learning and development achieved by Victorians using education, training, and early childhood development services’ (DET)

- ‘Net zero emission, climate-ready economy and community’ (DELWP)

- ‘Victorians are healthy and well’ (DHHS)

- ‘Ensuring community safety through policing, law enforcement and prevention activities’ (DJCS)

- ‘Optimise Victoria’s fiscal resources’ (DTF).

In these examples, the objectives meet the expectations set out in the Framework. The intended achievement is clear, which means it is measurable. The beneficiaries are also clear—in these examples, the public.

However, we found some examples where the objective does not meet required or recommended aspects of the Framework. In some of these instances, the stated departmental objective does not identify the intended beneficiaries, although they can generally be inferred from the aligned objective indicators. The more problematic issue is where an objective expresses no intended result or outcome. This is a missed opportunity because an objective should signal to public servants the tangible purpose of their work and tell the community what benefits a department is striving to deliver.

Figure 2A gives more detailed examples.

FIGURE 2A: Examples of departmental objectives that do not clearly express the intended result (outcome) of their output delivery

| Departmental objective | Problem |

|---|---|

| High-performing DPC (DPC) | This objective focuses on DPC’s internal performance rather than the intended impact for the community or other departments from the services it provides. As such, no outcome is expressed. |

| Promote productive and sustainably used natural resources (DJPR) | This objective states the service that DJPR provides—promotion—rather than the intended outcomes of that work. The objective indicators in BP3 that align to this objective focus on maximising the value of agriculture exports and mineral extraction. The departmental objective should therefore directly articulate this intended outcome regarding economic results. |

| Effective management of prisoners and offenders and provision of opportunities for rehabilitation and reparation (DJCS) | This objective states the responsibilities of the department in regard to correctional services. It does not state the outcome intended from providing these services, which would likely relate to reduced recidivism. |

Source: VAGO, based on the 2020–21 BP3.

Objectives must represent the totality of the department’s output budget

The Framework requires departmental objectives to represent the totality of the department’s output budget. Departments largely comply with this requirement. However, we identified one major initiative with significant expenditure in the 2020–21 Budget without relevant output performance measures. This example is shown in Figure 2B.

FIGURE 2B: Example of a departmental initiative without relevant output performance measures

| Departmental initiative | Funding over four years ($ million) | Comment |

|---|---|---|

| Big housing build: Victorian homebuyer fund (DTF). This fund aims to help first homebuyers afford their homes sooner by contributing to the purchase price in exchange for an equity interest in the property, which reduces the size of the deposit required. | 500 | This initiative contributes to DTF's Economic and Policy Advice output under its objective 'Strengthen Victoria’s economic performance'. However, there are no output performance measures to assess DTF's progress against this initiative in the 2020–21 BP3. |

Source: VAGO, based on the 2020–21 BP3.

As part of its yearly inquiry into the Budget estimates, PAEC has repeatedly identified initiatives that lack performance measures, despite being of significant public interest and expenditure. For example, PAEC's Report on the 2019–20 Budget Estimates found:

- DELWP had no performance measures or targets in the 2019–20 BP3 for diverting waste from landfill. This was despite the fact that the 2019–20 Budget provided an additional $66 million for related initiatives, which brought the government’s total investment to more than $135 million. DELWP has addressed this in the 2020–21 BP3.

- DHHS had no performance measures or targets in the 2019–20 BP3 to assess the impact of the government's new $322 million free dental care pilot for school students. DHHS did not introduce any new dental measures to address this in 2020–21.

2.2 How departments set objective indicators

Measuring outcomes

While an objective must be clear about what a department is aiming to achieve, an objective indicator must measure its success. The Framework requires departments to design objective indicators that assess the outcome of the outputs they deliver. There are many examples of departmental objective indicators that achieve this, including:

- ‘Secondary students meeting the expected standard in national and international literacy and numeracy assessment’ (DET)

- ‘Reduce infant mortality’ (DHHS)

- ‘Rate of deaths from fire events’ (DJCS)

- ‘Change in Victoria’s real gross state product’ (DJPR)

- ‘General government net debt as a percentage of Gross State Product to stabilise in the medium term’ (DTF).

However, we also found that many departmental objective indicators measure outputs and not outcomes. This shows that some departments are not complying with the Framework and are failing to apply a service logic model when designing their objective indicators. As a result, there are significant gaps in departments' reporting of what government service delivery is achieving. This means that government decision-makers, Parliament and the community cannot properly examine departmental performance.

Figure 2C outlines examples of this issue.

FIGURE 2C: Examples of objective indicators not measuring outcomes

| Departmental objective | Objective indicator(s) | Comment |

|---|---|---|

| Optimise Victoria’s fiscal resources (DTF) | Agency compliance with the Standing Directions under the FMA | Agency compliance with the Standing Directions reflects the way agencies deliver their outputs and is therefore a process measure. An agency could comply, yet still not provide effective services. Also, DTF is not accountable for the compliance of other departments with the Standing Directions. Such a measure is therefore not attributable to DTF. |

| Productive and effective land management (DELWP) | Efficient provision of timely and authoritative land administration and property information services | As these services are outputs the department provides, this is an output measure rather than a measure of the outcome that these services achieve or contribute to. |

| | Number of visits to public land estate managed by the department's portfolio agency (Parks Victoria) | Visitor numbers is an output. This indicator does not describe the extent to which land is productive or effectively managed. |

| Raise standards of learning and development achieved by Victorians using education, training, and early childhood development services (Primary) (DET) | Percentage of positive responses to teacher collaboration within primary schools | This measures satisfaction with teacher collaboration activities. This is not an objective indicator, as it does not measure the standards of learning achieved by students. It is instead a proxy measure of the quality of a process used to improve teaching. |

| Engagement (DET) | Increase the number of Victorians actively participating in education, training, and early childhood development services | The indicator is focused on enrolment numbers in various educational services, which are an output. The related outcomes would be the number of Victorians attaining a qualification, completing a level of schooling or academic standard, or gaining employment. |

| Victorians are protected with equal opportunities, secure identities, information freedoms and privacy rights (DJCS) | Complaint files received and handled by the Victorian Equal Opportunity and Human Rights Commission (VEOHRC) | All six indicators measure outputs and therefore do not describe if the department is achieving its objective. |

| | People assisted through Public Advocate advice and education activities | |

| | Services provided to victims of crime against the person | |

| | Births, deaths and marriages registration transaction accuracy rate | |

| | Working with Children Checks processed (negative notices issued within three days of receiving decision) | |

| | Education and training activities delivered by the Office of the Victorian Information Commissioner | |

| Foster a competitive business environment (DJPR) | Engagement with businesses | The number of engagements with businesses is a count of the services provided by DJPR and is therefore an output measure. This indicator does not describe if these outputs result in a more competitive business environment in the state. |

| Build prosperous and liveable regions and precincts (DJPR) | Precincts developed and delivered | Delivering precincts is an output and does not describe whether these precincts are prosperous or liveable or not. Community satisfaction with public places measures the quality of the output delivered rather than describing if the public space is prosperous or liveable. |

| | Community satisfaction in public places | |

| Strong policy outcomes (DPC) | DPC’s policy advice and its support for Cabinet, committee members and the Executive Council are valued and inform decision-making | The objective and both objective indicators are vague—it is unclear what is intended to be measured and how. |

| | The development and effective use of technology supports productivity and competitiveness | |

Source: VAGO, based on the 2020–21 BP3.

Objective indicators must link to departmental objectives and outputs

As required by the Framework, almost all of the objective indicators that departments are using have a clear and direct link to their related departmental objective. However, in some instances, objective indicators do not measure the intended objective, or they fail to cover key elements of the objective. This means that some departments are missing information about their performance against some of their objectives.

Figure 2D shows examples of objective indicators that measure something other than the departmental objective. Figure 2E shows examples of objective indicators that address only part of the objective or do not align to the outputs (services) linked to those indicators.

FIGURE 2D: Examples of objective indicators that do not measure the intended objective

| Departmental objective | Objective indicator | Comment |

|---|---|---|

| Victorians have the capabilities to participate (DHHS) | Increase the satisfaction of those who care voluntarily for people with a disability, people with mental illness, and children in out-of-home care | There is no direct link between carer satisfaction and the departmental objective. It is also unclear what service is being measured. DHHS provides a wide range of carer supports, and carer satisfaction could also capture carers’ views on the supports provided to the person they care for. |

| Net zero emission, climate‑ready economy and community (DELWP) | Reduction in annual energy costs for Victorian schools participating in the ResourceSmart Schools program | This indicator does not measure the degree to which the departmental objective is met—for example, the level of emission reduction achieved. Cost reduction may be a secondary outcome, but it is not aligned to the departmental objective—it is a side benefit of reducing greenhouse gas emissions and an incentive for schools to participate in the program, not the primary outcome being sought. |

| Build prosperous and liveable regions and precincts (DJPR) | Community satisfaction with the performance of councils as measured through the Local Government Community Satisfaction survey | This is a measure of council performance, not DJPR’s service delivery. |

| A fair marketplace for Victorian consumers and businesses with responsible and sustainable liquor and gambling (DJCS) | Responsive Gamblers Help services | The objective refers to a fair and responsible liquor and gambling sector. However, the indicator intended to measure achievement of the objective focuses on the responsiveness of a service that supports people with gambling problems. There is no relationship between the responsiveness of this public health service and how well DJCS regulates and oversees the liquor and gambling sector. Even if there were a relationship, the proposed measure is an output rather than an outcome measure. |

Source: VAGO, based on the 2020–21 BP3.

FIGURE 2E: Examples of objective indicators that address only part of the departmental objective or do not align to the corresponding outputs

| Departmental objective | Objective indicator | Comment |

|---|---|---|

| Victorians are connected to culture and community (DHHS) | Increase rates of community engagement, especially for Aboriginal children and young people | The objective indicators appear to have logical links to the departmental objective. However, the outputs described in BP3 that are linked to these indicators do not specifically relate to cultural connection services for Aboriginal children or young people, or those in out-of-home care services. Instead, the output group is described as funding community support programs, such as Men's Sheds, neighbourhood houses and the Office for Disability and, through that, disability advocacy services. This demonstrates a lack of service logic in the performance measurement design. |

| | Increase cultural connection for children in out-of-home care, especially Aboriginal children | |

| Reduce the impact of, and consequences from, natural disasters and other emergencies on people, infrastructure, the economy and the environment (DJCS) | Value of domestic fire insurance claims | The objective aims to deliver a coordinated, 'all-communities, all-emergencies' approach to emergency management that focuses on mitigating risks and actively partnering with the Victorian community. However, the two objective indicators only focus on fire emergencies. |

| | Rate of deaths from fire events | |

| Deliver investments that achieve social and economic benefits (DoT) | Improved transport infrastructure and planning | It is unclear how this objective indicator would be measured. DoT has no business rule for the indicator, and the related outputs in BP3 do not contribute to understanding the economic or social benefits related to transport infrastructure. Instead, they focus on, for example, roads meeting service standards and the timeliness of transport infrastructure project completion. |

Source: VAGO, based on the 2020–21 BP3.

Objective indicators must be clear and measurable

It is a mandatory requirement of the Framework that departments 'demonstrate the contribution of departmental outputs to the achievement of the objective through performance data'. However, some objective indicators are too vague to understand the actual desired outcome, which makes it unclear how to measure performance against the indicator.

In some instances, this is likely because it is difficult to attribute an outcome to the service the department provides, such as advice or support to other entities. In such cases, departments should consider whether they need to specify an objective and objective indicator for that service or, using a service logic to assist, whether stakeholder satisfaction with the department's advice is the outcome that can be measured.

In other instances, departments have not articulated an indicator but have only described its subject matter.

Another issue is that some objective indicators incorporate a number of different aims, which makes it impossible to develop a single metric to capture performance against all of the elements.

Figure 2F provides examples that illustrate these issues.

FIGURE 2F: Examples of objective indicators that are not clear or measurable

| Departmental objective | Objective indicator | Comment |

|---|---|---|

| Strengthen Victoria's economic performance (DTF) | Advice contributes to the achievement of government policies and priorities relating to economic and social outcomes | The term 'contributes' is very subjective, which makes measuring it difficult. |

| Ensuring community safety through policing, law enforcement and prevention activities (DJCS) | Crime statistics | No further description of the indicator is provided in BP3. It is therefore unclear what is to be measured and what success looks like. |

| Reliable and people focused transport services (DoT) | Reliable travel | The indicator essentially restates the objective and lacks sufficient detail to explain what is to be measured. |

| Professional public administration (DPC) | A values-driven, high-integrity public service characterised by employees who collaborate across government and in partnership with the community and other sectors, and who use evidence to support decisions that drive the progress of Victoria socially and economically | This is an aspiration rather than a measurable objective indicator. Given the number of different impacts sought, it is not possible to measure them collectively. |

Source: VAGO, based on the 2020–21 BP3.

Underpinning business rules

A business rule is the detailed definition of a performance measure. Business rules are important to ensure that results are calculated accurately and consistently. Departmental business rules are not published.

According to the Framework, each objective indicator should be underpinned by a 'business rule' that explains in detail how results against the indicator should be calculated, including the data used.

However, for 91 of the 145 departmental objective indicators used in 2019–20 (about 63 per cent), departments were unable to provide the business rules that set out how results are calculated and what data is used.

Figure 2G shows examples of better practice: business rules that clearly define what is included in and excluded from the measure.

FIGURE 2G: Examples of objective indicators with well-explained business rules in place

| Objective indicator | Business rule in place |

|---|---|

| Escapes from corrective facilities (DJCS) | The indicator counts escapes by prisoners from prison facilities/precincts regardless of whether or not there was a breach of a physical barrier. It also includes escapes by prisoners during prison–to–prison, prison–to–hospital, or prison–to–court transport/escort, and escapes while under direct one-to-one supervision outside a prison facility (for example, to attend a funeral or medical appointment). |

| International students attracted to Victoria (DJPR) | International student enrolment data covers onshore international students studying on student visas only (visa subclasses from 570 to 575). It does not include students studying Australian courses offshore (such as on an offshore campus or online), overseas students on Australian-funded scholarships or sponsorships, or students undertaking study while holding a tourist or other temporary entry visa (for example, visitors studying an English-language course while on a holiday visa). Students from New Zealand are not included in this data because they do not require a student visa to study in Australia. Students are counted as enrolled in Australia even if they have left Australia temporarily, for example during end-of-year holidays. |

Source: VAGO, based on DJCS and DJPR’s business rules.
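To show why a precise business rule supports consistent calculation, the DJPR rule in Figure 2G can be expressed as an explicit filter over enrolment records. This is a minimal Python sketch under stated assumptions: the `Enrolment` record, its field names and the choice to count distinct students rather than enrolment records are hypothetical and are not drawn from DJPR's data systems. Each inclusion and exclusion clause of the rule maps to one check.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Student visa subclasses named in the DJPR business rule: 570 to 575 inclusive.
STUDENT_VISA_SUBCLASSES = range(570, 576)


@dataclass(frozen=True)
class Enrolment:
    """Hypothetical enrolment record; the field names are illustrative only."""
    student_id: str
    visa_subclass: Optional[int]         # None where no visa is held (e.g. New Zealand students)
    studying_offshore: bool              # offshore campus, or online from overseas
    australian_funded_scholarship: bool  # Australian-funded scholarship or sponsorship
    temporarily_overseas: bool = False   # e.g. away during end-of-year holidays


def in_scope(e: Enrolment) -> bool:
    """Apply the inclusion and exclusion clauses of the business rule."""
    # Only student visa holders count. This excludes tourist and other temporary
    # visas, and New Zealand students, who do not need a student visa.
    if e.visa_subclass is None or e.visa_subclass not in STUDENT_VISA_SUBCLASSES:
        return False
    # Students studying Australian courses offshore are excluded.
    if e.studying_offshore:
        return False
    # Overseas students on Australian-funded scholarships or sponsorships are excluded.
    if e.australian_funded_scholarship:
        return False
    # A temporary absence from Australia does not remove a student from the count,
    # so temporarily_overseas is deliberately not checked.
    return True


def count_international_students(enrolments: Iterable[Enrolment]) -> int:
    """Count distinct in-scope students (de-duplication is an assumed rule)."""
    return len({e.student_id for e in enrolments if in_scope(e)})
```

The de-duplication step illustrates the kind of decision a business rule has to settle explicitly: without it, two analysts could count the same data differently.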

DPC, DET, DHHS and DoT could not provide business rules for any of their objective indicators. This is despite guidance in the Framework that departments should document their calculation methods and maintain records to allow independent auditing.

Where departments have documented business rules for indicators, some of the instructions are far too general. This allows results to be calculated in different ways, which risks inaccurate reporting and inconsistent calculation methods from year to year. Figure 2H shows examples of this issue.

FIGURE 2H: Examples of business rules that are too general to support accurate and consistent calculation of the objective indicator

| Objective indicator | Business rule | Comment |

|---|---|---|

| Benefits delivered as a percentage of expenditure by mandated agencies under DTF-managed state purchasing contracts, including reduced and avoided costs (DTF) | Benefits delivered ($)/expenditure under management ($) | The business rule does not provide sufficient detail of what benefits are included or how they are calculated. There is no definition of 'benefit' or of what can be included in regard to reduced or avoided costs. The data source is not documented either. |

| Percentage reduction in Victoria's greenhouse gas emissions relative to 2005 (DELWP) | The latest State and Territories Greenhouse Gas Inventories report was published in February 2018, and contains emissions data to 2016. According to this report, Victoria's emissions were 10.8 per cent below 2005 levels in 2015. Based on internal projections of Victoria's emissions, emissions are on track to meet the 2020 target. | This is not a business rule because there is no explanation of the calculation method or the data source for Victoria's results. |

Source: VAGO, based on DTF and DELWP’s business rules.
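The gap in the DELWP rule can be illustrated by writing out the calculation that the indicator name implies. A minimal sketch, assuming $E_{2005}$ and $E_t$ denote Victoria's total emissions in 2005 and in reporting year $t$, both taken from the same edition of the State and Territories Greenhouse Gas Inventories (the data source and scope here are assumptions, not DELWP's documented rule), with $R_t$ the reported percentage reduction:

$$
R_t = \frac{E_{2005} - E_t}{E_{2005}} \times 100
$$

On this definition, a reduction of 10.8 per cent in 2015 means $E_{2015} = 0.892\,E_{2005}$. A complete business rule would also need to specify which emission sectors are included and how revisions to earlier inventory years are handled.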

This lack of rigour is a serious issue. Without clear calculation methods and identified data sources, it is unclear how departments arrive at the performance results they publish.

Objective indicators must have baseline data

The Framework also requires departments to set a baseline for their objective indicators. However, none have done this. Without baseline data it is difficult to assess departments' progress towards achieving their objectives.

Many of the departmental objective indicators in the 2020–21 BP3 include words such as 'reduce', 'increase' or 'improve'. For example:

- ‘Reduction in emissions from government operations’ (DELWP)

- ‘Improved transport infrastructure and planning’ (DoT)

- ‘Increase rates of community engagement, including through participation in sport and recreation’ (DJPR).

However, without a baseline to compare against, departments cannot provide meaningful information about the extent of change or improvement.

The Framework does not provide guidance on what a baseline should be. However, it could be interpreted as requiring departments to establish a minimum performance level to measure their objective indicators against. This would be consistent with the guidance in DTF's Model Report, which suggests that departments should develop a baseline dataset for their objective indicators and publish the associated medium-term targets in their annual reports.
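The role of a baseline in an 'increase', 'reduce' or 'improve' indicator can be made concrete. The following minimal Python sketch assumes a hypothetical indicator record that holds a baseline value, the intended direction of change and a medium-term target, in line with the Model Report's suggestion; none of the names come from DTF's guidance. Without the `baseline` field, neither the size nor the direction of change can be computed.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class ObjectiveIndicator:
    """Hypothetical indicator record; the fields are illustrative, not DTF's."""
    name: str
    baseline: float                           # result in the baseline year
    baseline_year: str
    direction: Literal["increase", "reduce"]  # intended direction of change
    medium_term_target: float


def change_from_baseline(ind: ObjectiveIndicator, result: float) -> float:
    """Percentage change of a reported result relative to the baseline."""
    if ind.baseline == 0:
        raise ValueError("percentage change is undefined for a zero baseline")
    return (result - ind.baseline) * 100 / ind.baseline


def improved(ind: ObjectiveIndicator, result: float) -> bool:
    """True if the result has moved from the baseline in the intended direction."""
    if ind.direction == "increase":
        return result > ind.baseline
    return result < ind.baseline


def target_met(ind: ObjectiveIndicator, result: float) -> bool:
    """True if the result reaches the published medium-term target."""
    if ind.direction == "increase":
        return result >= ind.medium_term_target
    return result <= ind.medium_term_target
```

Keeping `improved` separate from `target_met` reflects the difference between progress against a baseline and achievement of a medium-term target: a result can show improvement while still falling short of the target.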

2.3 The Outcomes policy

In addition to the Framework, DPC has introduced a new Outcomes policy for departments to use to measure their outcomes. The policy states:

‘The Victorian public sector is driven by a strong moral purpose to improve the lives of all Victorians. The best way to ensure that we deliver public value to the people of Victoria is to clearly define the outcomes we are trying to achieve, and measure our progress along the way’.

The Outcomes policy encourages and supports departments to determine outcomes and measures for their program and service delivery areas as required. However, it does not state what relationship these outcomes should have to departmental objectives and objective indicators, or which should take priority. Nor does it reference the Framework. As a result, there is a risk that departments may:

- develop conflicting sets of outcomes and outcome measures

- focus on metrics within their outcomes frameworks to the detriment of their departmental objective indicators, which have formal requirements for public reporting

- create confusion among staff, government decision-makers, Parliament and the public about what their objectives are and which performance information to use.

The policy's focus on upskilling departments’ staff in identifying outcomes and appropriate measures is warranted, as shown by our assessment of current departmental objective indicators. However, it is a significant missed opportunity that the policy does not outline how it aligns with the state's primary system of performance measurement and accountability through the Budget process and annual reporting.

3. Measuring output performance

Conclusion

Across all departments and service delivery areas, there are many output performance measures that provide little genuine insight into departmental performance. This is despite the Framework describing output performance measures as the 'building blocks of the accountability system' and the 'basis for the certification of departmental revenue'. This is a significant failure by departments in the application of the state's key performance and accountability framework. Contributing issues include:

- outputs that combine too many separate activities

- output measure selections that impair transparency

- output measures that do not measure output delivery

- output measures that are vague, outside the department's control, and/or only reflect meeting a minimum standard

- output measures that prevent comparison of performance over time or against other jurisdictions.


3.1 Setting outputs

Outputs are services that departments provide either to the community or other departments. An output should capture all the specific activities that make up a service and should contribute to the achievement of a departmental objective.

Outputs that are too large or combine too many different activities

The 2020–21 BP3 includes examples of outputs that combine too many separate activities. This reduces departments’ transparency and accountability by making it difficult to understand the cost and performance of the individual services that an output covers.

The Framework provides the following review criteria to help departments determine their output groupings:

- Are the services closely related or homogenous in nature?

- Are the services targeting a specific problem for the same customer?

- Is the purpose of the services the same?

- Is the output less than 10 per cent of the department’s total output cost and less than 0.5 per cent of the state’s total Budget?

The Framework states that if the answer is 'no' to any of these questions, then the output is too large.
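The review rule above can be expressed as a simple check. The following Python sketch is a minimal illustration, assuming a hypothetical `OutputReview` record that stores the answers to the four questions. It applies the rule that a 'no' to any question means the output is too large. The example uses the shares this report cites for DHHS's 'Acute Health Services' output (55 per cent of departmental funding and 21.4 per cent of the state Budget). The three service questions are set to 'yes' only to isolate the size test, not as a finding about that output.

```python
from dataclasses import dataclass


@dataclass
class OutputReview:
    """Hypothetical record of one output's answers to the Framework's review criteria."""

    name: str
    closely_related: bool            # Services closely related or homogenous in nature?
    same_problem_and_customer: bool  # Services target a specific problem for the same customer?
    same_purpose: bool               # Services share the same purpose?
    pct_of_department_cost: float    # Output cost as a % of the department's total output cost
    pct_of_state_budget: float       # Output cost as a % of the state's total Budget


def is_too_large(output: OutputReview) -> bool:
    """Return True if the answer to any review question is 'no'."""
    within_size_limits = (
        output.pct_of_department_cost < 10.0 and output.pct_of_state_budget < 0.5
    )
    answers = (
        output.closely_related,
        output.same_problem_and_customer,
        output.same_purpose,
        within_size_limits,
    )
    return not all(answers)


# Shares as cited in this report; service answers set to True only to isolate the size test.
acute_health = OutputReview(
    name="Acute Health Services",
    closely_related=True,
    same_problem_and_customer=True,
    same_purpose=True,
    pct_of_department_cost=55.0,
    pct_of_state_budget=21.4,
)
print(acute_health.name, "too large:", is_too_large(acute_health))
```

Because both size thresholds sit in a single question, exceeding either one is enough to fail the test.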

Despite this guidance, many outputs breach it. One example is the DJCS output shown in Figure 3A, which has $237 million of funding for 2020–21.

FIGURE 3A: Example of an output that combines too many different activities

| Departmental output | Activities covered by the output | Comment |
|---|---|---|
| Justice Policy, Services and Law Reform (DJCS) | | This output group fails the test set out in the Framework because the services are not homogenous. Spanning from providing clinical forensic evidence to negotiating native title agreements, these activities serve a wide range of consumers and purposes. |

Source: VAGO, based on the 2020–21 BP3.

In other instances, output groups are very large in terms of funding. Despite the Framework's size thresholds, it may be reasonable to group activities into one output if they are truly homogenous. In that case, the large amount of funding simply reflects the high cost and/or volume of the activities. It becomes problematic when too many disparate services are grouped together, because it is then hard to identify the performance of the individual services within the output group.

This issue was also raised by PAEC in its Report on the 2016–17 Financial and Performance Outcomes. PAEC recommended that departments improve the usefulness of their performance reporting by splitting some of their larger outputs by speciality, size or location.

Examples of current output groups that are larger than the Framework recommends include:

- DHHS's 'Acute Health Services' output, which has a budgeted cost of $17.065 billion (55 per cent of DHHS’s total funding and 21.4 per cent of the state Budget)

- DJCS's 'Policing and Community Safety' output, which has a budgeted cost of $3.793 billion (42.4 per cent of DJCS's total funding and 4.8 per cent of the state Budget)

- DET's 'School Education—Primary' output, which has a budgeted cost of $6.431 billion (37.8 per cent of DET's total funding and 8.1 per cent of the state Budget).

There is an opportunity for departments to split these output groups into smaller, more meaningful outputs. For example, 'Acute Health Services' incorporates elective and emergency services, acute and subacute (rehabilitation) services, and outpatient and inpatient services, which could form more defined and homogenous output groups. Similarly, 'School Education—Primary' incorporates both operational school funding and capital funding, which could be separated into smaller output groups aligned to specific purposes.

3.2 Determining a balanced suite of output performance measures

Departments need a suite of output performance measures to show accountability for their funding and demonstrate how their outputs have contributed to a departmental objective.

The Framework sets mandatory requirements for output performance measures. It specifies that each output needs a meaningful mix of quality, quantity, timeliness and cost performance measures that, together, assess:

- service efficiency and effectiveness

- all major activities of the output.

However, we found numerous examples of suites of output performance measures that do not meet these requirements.

How output measures contribute to a departmental objective

Not all departments' performance statements present a clear link between departmental objectives, objective indicators, outputs and output performance measures. This makes it difficult for readers to understand how well a department is delivering its outputs, and whether its output delivery is making a meaningful contribution towards achieving an objective.

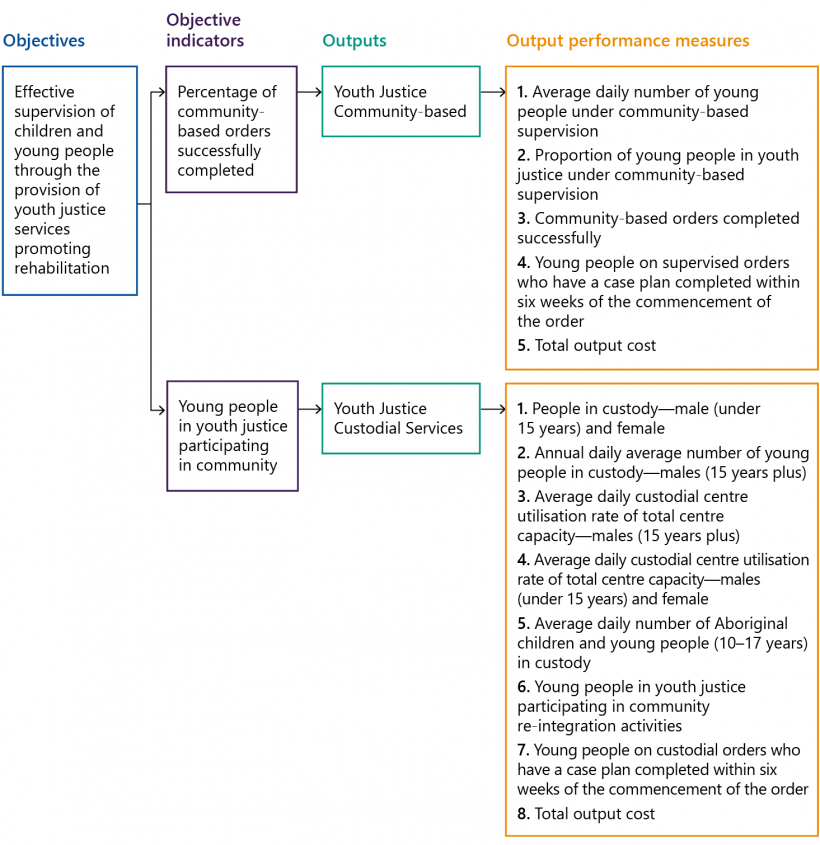

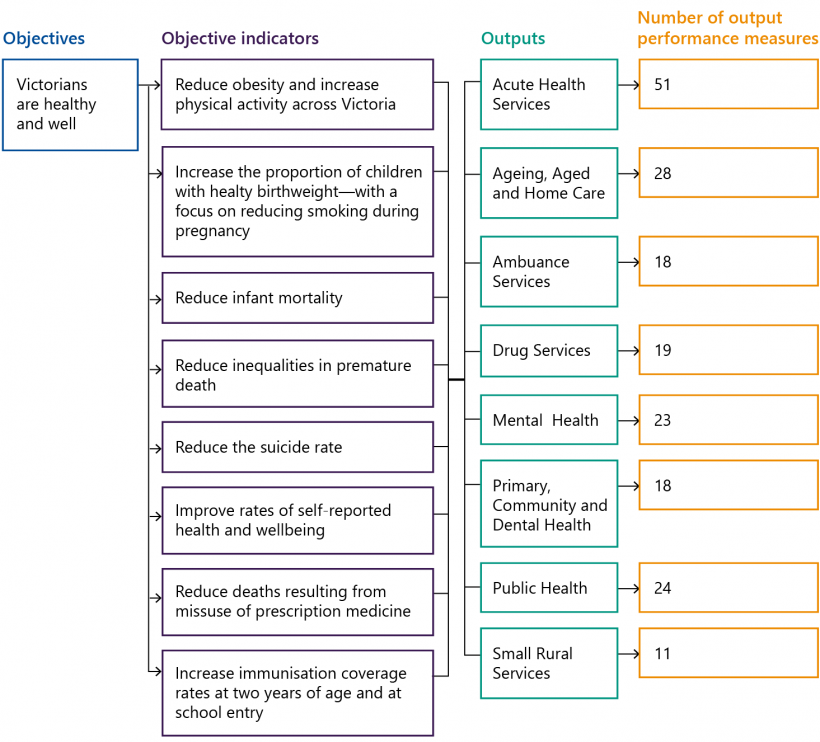

To demonstrate this, Figures 3B and 3C compare objectives from DJCS's and DHHS's performance statements. DJCS's statement presents a clear relationship between all its parts, whereas DHHS's statement does not show clear links between its objective indicators, outputs and output performance measures.

FIGURE 3B: Extract from DJCS's performance statement for the objective 'Effective supervision of children and young people through the provision of youth justice services promoting rehabilitation'

Source: VAGO, based on the 2020–21 BP3.

FIGURE 3C: Extract from DHHS's performance statement for the objective 'Victorians are healthy and well'

Source: VAGO, based on the 2020–21 BP3.

Comparing these performance statement extracts highlights the importance of clear links between objectives, objective indicators and output performance measures:

| For its departmental objective … | The department has set … | For the reader, this means … |
|---|---|---|
| Effective supervision of children and young people through the provision of youth justice services promoting rehabilitation (DJCS) | Two objective indicators that each align to their own output group and set of output performance measures. | They can clearly follow the alignment from output performance measure to output group, and then from objective indicator to the overall objective. |
| Victorians are healthy and well (DHHS) | Eight objective indicators and eight separate outputs, with no links expressed between the outputs and the objective indicators. A total of 192 output performance measures are spread across the outputs. | It is difficult to know which outputs and output performance measures relate to which objective indicators. This creates the impression that all of the outputs and output performance measures contribute to all of the objectives and objective indicators, which is unlikely. It would be more useful for the reader if the department clearly expressed which outputs and output measures relate to which departmental objectives and objective indicators. |
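In data terms, an explicit link means that each output records the objective indicator it contributes to, so that any measure can be traced up to its objective. The following Python sketch is a minimal illustration of that idea. The `Output` record, the `trace_measure` helper and all output, indicator and measure names are hypothetical placeholders. None of them is drawn from either department's performance statement.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Output:
    """Hypothetical output record that names the objective indicator it supports."""

    name: str
    objective_indicator: str
    measures: List[str] = field(default_factory=list)


def trace_measure(outputs: List[Output], measure: str) -> Optional[Tuple[str, str]]:
    """Return (output, objective indicator) for a measure, or None if it is unlinked."""
    for output in outputs:
        if measure in output.measures:
            return output.name, output.objective_indicator
    return None


# Placeholder names only.
statement = [
    Output("Output A", "Indicator 1", ["Measure A1", "Measure A2"]),
    Output("Output B", "Indicator 2", ["Measure B1"]),
]
print(trace_measure(statement, "Measure B1"))
```

This sketch assumes one indicator per output, which mirrors the DJCS structure in Figure 3B. Where an output genuinely serves several indicators, the single field would need to become a list.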

A mix of quality, quantity, timeliness and cost measures

If outputs do not have a good balance of measures, departments cannot provide a comprehensive and transparent view of their performance and make informed decisions about trade‑offs in their service delivery. While this does not necessarily mean an equal number of measures across the four dimensions—quality, quantity, timeliness and cost—the Framework does require departments to have a meaningful mix. This is so users accessing the information can determine if the department may be:

- reducing quality standards to meet quantity, timeliness or cost targets

- reducing the quantity of outputs to meet quality or timeliness targets

- delaying project delivery to meet quality and quantity targets.

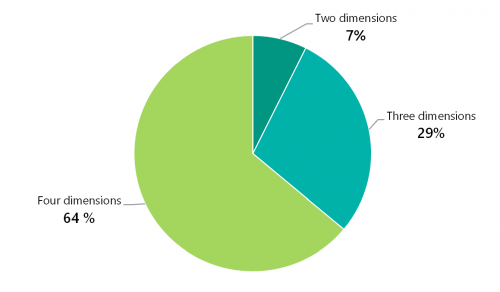

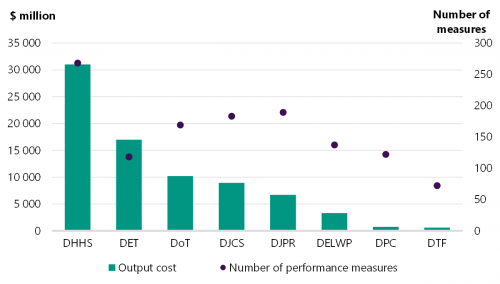

Figure 3D shows that, despite the Framework's expectation that every output has measures across all four dimensions, only 64 per cent of departments’ outputs meet this mandatory requirement.

FIGURE 3D: Percentage of 2020–21 outputs that have output measures covering either two, three or all four required dimensions of quantity, timeliness, cost and quality

Source: VAGO, based on the 2020–21 BP3.

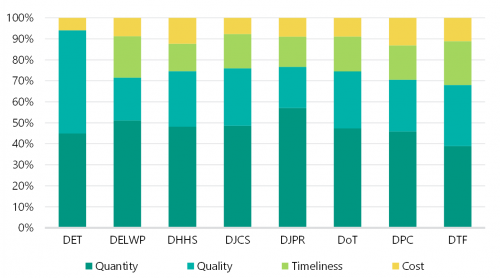

Figure 3E shows that while the mix of 2020–21 output performance measures varies between departments, 'quantity' measures are the most frequently used. The exception is DET, which uses more 'quality' than 'quantity' measures and has no timeliness measures.

FIGURE 3E: Mix of quantity, quality, timeliness and cost measures by department

Source: VAGO, based on the 2020–21 BP3.

Figure 3F gives an example of an output without a balanced mix of output performance measures.

DHHS's output 'Small Rural Services' includes a range of health and aged care services delivered in small rural towns and is divided into four sub-outputs: 'acute health', 'aged care', 'primary health' and 'home and community care services'. Only two of these sub-outputs have quality measures and none of them have a timeliness measure. Without these measures, DHHS cannot know whether it is providing timely, quality health services in rural communities. It is also not possible to see if DHHS is making performance trade-offs.

FIGURE 3F: Balance of sub-output performance measures for DHHS's output group 'Small Rural Services'

| DHHS sub-output | Quantity | Quality | Timeliness | Cost |
|---|---|---|---|---|
| Acute health | 2 | 1 | 0 | 1 |
| Aged care | 1 | 1 | 0 | 1 |
| Home and community care services | 1 | 0 | 0 | 1 |
| Primary health | 1 | 0 | 0 | 1 |

Source: VAGO, based on the 2020–21 BP3.
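The gaps in Figure 3F can be identified mechanically. The following Python sketch transcribes the figure's measure counts and lists the dimensions with no measure. It does this for each sub-output and for the output group as a whole. The helper name `missing_dimensions` is hypothetical. The sketch assumes that a dimension is covered when it has at least one measure.

```python
DIMENSIONS = ("quantity", "quality", "timeliness", "cost")

# Measure counts per sub-output, transcribed from Figure 3F.
small_rural_services = {
    "Acute health": {"quantity": 2, "quality": 1, "timeliness": 0, "cost": 1},
    "Aged care": {"quantity": 1, "quality": 1, "timeliness": 0, "cost": 1},
    "Home and community care services": {"quantity": 1, "quality": 0, "timeliness": 0, "cost": 1},
    "Primary health": {"quantity": 1, "quality": 0, "timeliness": 0, "cost": 1},
}


def missing_dimensions(counts: dict) -> list:
    """Return the dimensions that have no output performance measure."""
    return [d for d in DIMENSIONS if counts.get(d, 0) == 0]


for sub_output, counts in small_rural_services.items():
    gaps = missing_dimensions(counts)
    print(f"{sub_output}: {len(DIMENSIONS) - len(gaps)} of 4 dimensions; missing {', '.join(gaps)}")

# Aggregate the counts to check the output group as a whole.
totals = {d: sum(c[d] for c in small_rural_services.values()) for d in DIMENSIONS}
print("Output group missing:", missing_dimensions(totals))
```

Even when the sub-outputs are combined, the output group still has no timeliness measure.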

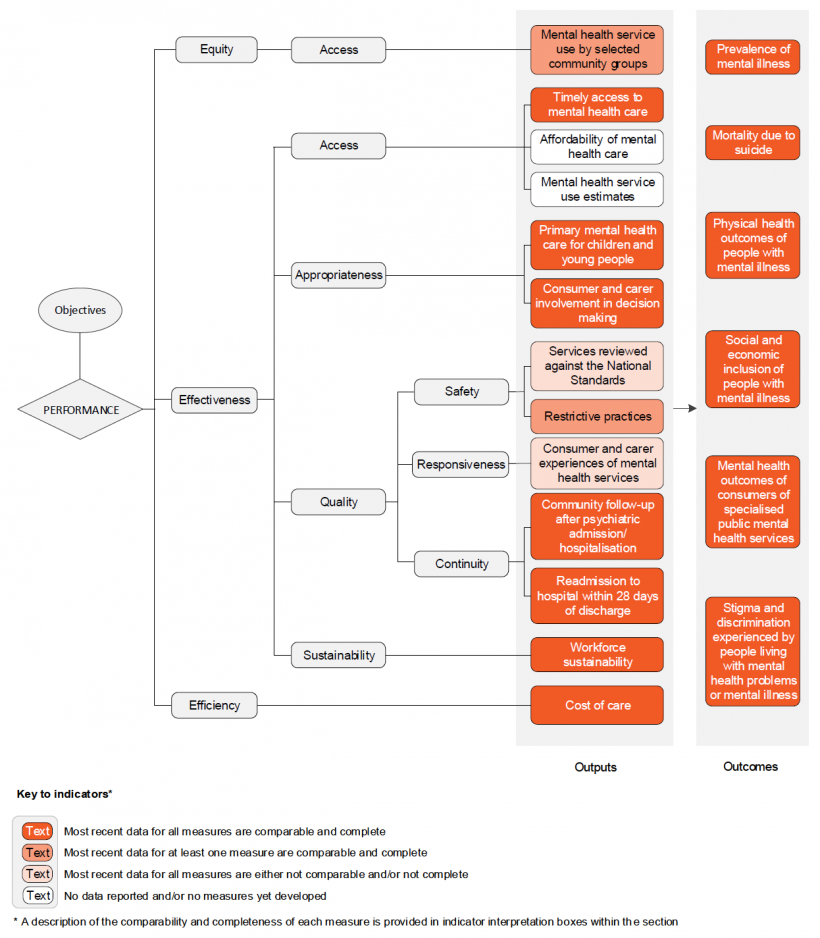

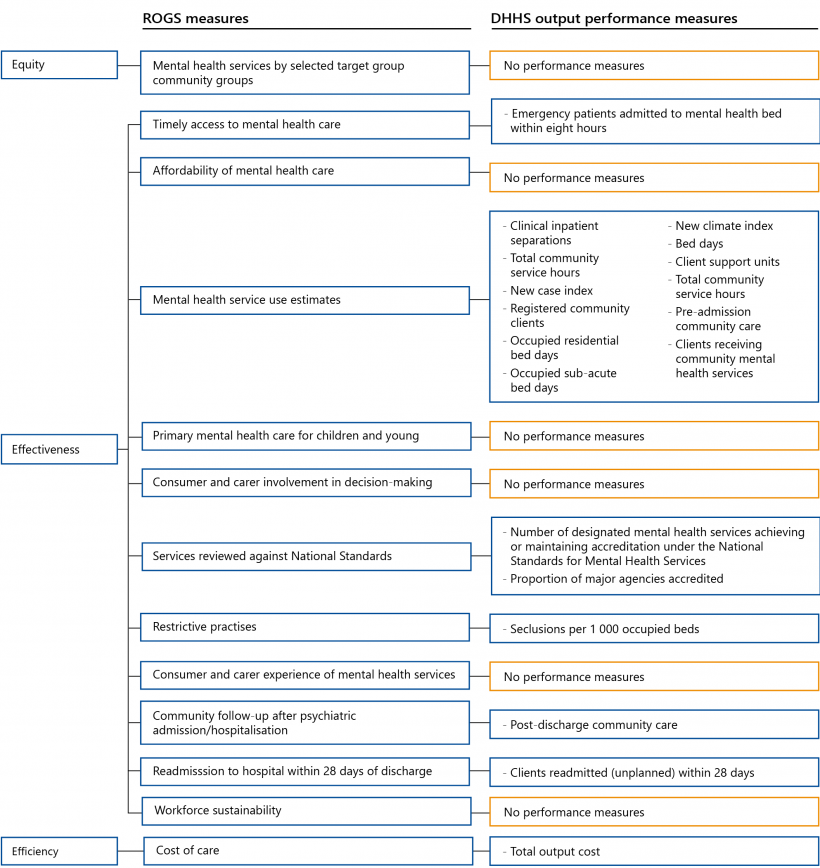

Appendix D provides a further example to illustrate gaps in current departmental performance statements by comparing the measures that DHHS uses to assess the performance of its mental health services with those used by RoGS.

Efficiency output measures

Despite requiring departments to set output efficiency measures, DTF includes no guidance in the Framework on how to construct efficiency output measures. In particular, it does not require departments to define the unit cost of their services. This makes it difficult to benchmark service efficiency across departments and other similar jurisdictions, and to understand if individual outputs provide value for money.

Across all departmental output performance measures, there are only two (both for DTF) that truly measure efficiency:

- ‘Total accommodation cost ($ per square metre per year)’

- ‘Workspace ratio (square metre per FTE) [full-time equivalent]’.

DET also has four measures that measure service efficiency. However, it has incorrectly categorised these as departmental objective indicators rather than output performance measures.

This absence of true efficiency measures across government departments reflects a lack of focus on an important aspect of government service delivery performance.

The most common output measures in the 2020–21 BP3 are those measuring 'quantity'. Quantity measures can be converted into efficiency measures by combining them with cost to show the unit cost of a service. Figures 3G and 3H provide examples of this.
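The conversion from a quantity measure to an efficiency measure is a single division: total cost divided by the quantity of output delivered. The following Python sketch shows this. The `unit_cost` helper and the dollar and trip figures are hypothetical and are for illustration only.

```python
def unit_cost(total_cost: float, quantity: float) -> float:
    """Convert a quantity measure into an efficiency measure: cost per unit of output."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return total_cost / quantity


# Hypothetical figures for illustration only.
total_road_ambulance_cost = 50_000_000.0  # total accrued cost of the service ($)
road_trips = 200_000                      # number of road-based trips delivered
print(f"Cost per trip: ${unit_cost(total_road_ambulance_cost, road_trips):,.2f}")
```

DTF's 'Total accommodation cost ($ per square metre per year)' measure follows the same pattern, with floor area as the denominator instead of a count of services.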

As shown in Figure 3G, instead of simply listing the number of emergency road transports, the Western Australian Department of Health uses the measure ‘Cost per trip for road-based ambulance services’ to measure the cost-efficiency of the service.

FIGURE 3G: Extract from the Western Australian Department of Health’s 2019–20 Annual Report

Cost per trip for road-based ambulance services, based on the total accrued costs of these services for the total number of trips

Rationale