The Victorian Government ICT Dashboard

Overview

Information and communications technology (ICT) is integral to how governments manage information and deliver programs and services. Comprehensive reporting of ICT expenditure and projects is important to improve transparency and provide assurance that public sector agencies have used public resources in an efficient, effective and economic way.

In our 2015 report Digital Dashboard: Status Review of ICT Projects and Initiatives, we found that public sector agencies’ financial and management processes did not enable the comprehensive reporting of actual ICT expenditure across the public sector. As a result, we recommended that the Department of Premier and Cabinet (DPC) publicly report on ICT projects across the public sector.

In March 2016, DPC launched the Victorian Government ICT Dashboard for public sector ICT projects valued at more than $1 million. The ICT Dashboard shows key metrics such as cost, expected completion time frame and implementation status.

In this audit, we examined whether transparency in government ICT investments has improved since the development of the ICT Dashboard. We examined DPC’s role as the dashboard owner, and undertook a detailed review of selected projects in the Department of Health and Human Services (DHHS), Melbourne Water and Public Transport Victoria (PTV). We also examined the Department of Treasury and Finance’s (DTF) role in cross-checking with High Value High Risk reporting, as well as overseeing the process for agencies to make financial disclosures about ICT expenditure.

We made six recommendations. We directed four recommendations to DPC, one recommendation to DTF, and one recommendation to DHHS, DPC, Melbourne Water and PTV.

Transmittal letter

Ordered to be published

VICTORIAN GOVERNMENT PRINTER June 2018

PP No 398, Session 2014–18

President

Legislative Council

Parliament House

Melbourne

Speaker

Legislative Assembly

Parliament House

Melbourne

Dear Presiding Officers

Under the provisions of section 16AB of the Audit Act 1994, I transmit my report The Victorian Government ICT Dashboard.

Yours faithfully

Dave Barry

Acting Auditor-General

20 June 2018

Acronyms

| Acronym | Meaning |
|---|---|
| BAU | Business as usual |
| CFO | Chief Financial Officer |
| CIO | Chief Information Officer |
| DEDJTR | Department of Economic Development, Jobs, Transport and Resources |
| DHHS | Department of Health and Human Services |
| DPC | Department of Premier and Cabinet |
| DTF | Department of Treasury and Finance |
| FMA | Financial Management Act 1994 |
| FRD | Financial Reporting Direction |
| HVHR | High Value High Risk |
| ICT | Information and communications technology |
| IT | Information technology |
| MW | Melbourne Water |
| PMO | Project management office |
| PR | Public reporting |
| PTV | Public Transport Victoria |
| RAG | Red/amber/green |
| USA | United States of America |
| VAGO | Victorian Auditor-General's Office |
| VAHI | Victorian Agency for Health Information |
| VSB | Victorian Secretaries' Board |

Audit overview

Information and communications technology (ICT) is integral to how governments manage information and deliver programs and services. ICT projects need to be diligently monitored and successfully implemented, so that services to the government, public sector and community can be efficient and effective.

Comprehensive reporting on ICT expenditure and projects is important to improve transparency and provide assurance that public sector agencies have used public resources in an efficient, effective and economic way.

Our previous 2015 audit

In our 2015 report Digital Dashboard: Status Review of ICT Projects and Initiatives (Digital Dashboard Phase 1 report) we found that public sector agencies' financial and management processes did not enable the comprehensive reporting of actual ICT expenditure across the public sector.

During that audit, many agencies found it difficult to provide basic information on their ICT spend and projects. Because of this difficulty, we had to estimate the Victorian Government's total ICT expenditure, which we conservatively estimated to be about $3 billion per year.

Within this total spend we found that, for capital ICT expenditure, agencies spent an average of $720 million each year, from 2011–12 to 2013–14. Capital expenditure is usually the largest component of an ICT project's budget so it is a useful indicator of total project expenditure. Typically, this type of expenditure is recorded as an asset in the balance sheet.

We also found that agencies involved in the 2015 audit were not able to assure Parliament and the Victorian community that their ICT investments had resulted in sufficient public value to justify the significant expenditure of taxpayers' money.

As a result, we recommended that the Department of Premier and Cabinet (DPC) publicly report on ICT projects across the public sector. This reporting was to include relevant project status information, such as costs, time lines, governance and benefits realisation.

The Victorian Government ICT Dashboard

DPC launched the Victorian Government ICT Dashboard (ICT Dashboard) in March 2016 to improve transparency and provide assurance that agencies have efficiently, effectively and economically used public resources in their ICT projects.

Agencies subject to the Financial Management Act 1994 (FMA) must provide quarterly updates on their ICT projects valued at more than $1 million.

Since the dashboard's inception, 84 of the 184 agencies that are required to report projects to the ICT Dashboard have reported on 439 projects. There have been 191 projects reported as complete, with a combined value of $907.9 million.

In March 2018, DPC went live with a new dashboard system, which offers more functionality than the previous tool.

Conclusion

The implementation of the ICT Dashboard has improved the transparency of public sector ICT projects. Information reported on the ICT Dashboard is accessible, interactive and easy to understand.

It is also reasonably timely, taking into consideration the data handling and sign‑off processes in place for entering, checking and publishing the dashboard data.

It is reasonable for the public to expect information sources like the ICT Dashboard to be authoritative and reliable. If they are not, then public confidence in the integrity of this information may be eroded.

The information on the ICT Dashboard is 'complete' in terms of reporting agencies entering relevant and coherent data in mandatory fields for the projects that they have disclosed.

However, we are not able to give assurance on the overall completeness, accuracy or integrity of the data on the dashboard because:

- we detected a number of data errors for the projects we reviewed

- we detected some projects that were not reported by the agencies we reviewed

- we observed that nearly one-third of all projects reported on the ICT Dashboard were disclosed later than they should have been.

Based on the anomalies we detected in a small subset of all the reported data, we suspect that this problem is more widespread.

These inaccuracies show that DPC and agency processes are not adequate to properly assure the integrity and reliability of source data, which is fundamental to the overall accuracy and completeness of the ICT Dashboard.

Despite these accuracy and completeness challenges, the ICT Dashboard is a marked improvement in the availability and visibility of ICT project data. There are further opportunities for the ICT Dashboard to improve transparency as it matures, particularly by:

- providing more useful and descriptive narratives on the purpose and status of projects

- capturing and reporting expected project benefits

- better identifying and confirming what ICT category a project fits into.

Findings

ICT Dashboard accessibility and ease of understanding

The ICT Dashboard is publicly available and can be viewed from any internet connection. It is designed to be compatible with multiple devices such as desktop computers, tablets or mobile phones.

DPC conducted accessibility testing of the new ICT Dashboard before it was publicly rolled out in March 2018. At the time of this audit, DPC stated that it intends to conduct regular accessibility audits of the ICT Dashboard.

All the source data files used to populate the ICT Dashboard can be accessed and downloaded at the Victorian Government's public data repository known as data.vic.

We downloaded this data into a typical spreadsheet program and observed that it correctly tabulated against column headings and was consistent from quarter to quarter.
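A quarter-to-quarter consistency check of this kind can be approximated in a few lines of code. The following is a minimal sketch only, not the procedure the audit team used. It assumes each quarterly file is available as CSV text, and the column names and quarter labels in the sample data are hypothetical.

```python
import csv
import io


def header_of(csv_text: str) -> list[str]:
    """Return the column headings from the first row of a CSV document."""
    reader = csv.reader(io.StringIO(csv_text))
    return [heading.strip() for heading in next(reader)]


def inconsistent_quarters(files: dict[str, str]) -> list[str]:
    """List quarters whose headings differ from those of the first quarter.

    `files` maps a quarter label to that quarter's CSV text (assumed non-empty).
    """
    quarters = list(files)
    if not quarters:
        return []
    baseline = header_of(files[quarters[0]])
    return [q for q in quarters[1:] if header_of(files[q]) != baseline]


# Hypothetical sample data; the real files on data.vic use their own columns.
sample = {
    "2017-18 Q1": "agency,project,stage,cost\nDPC,Example A,Delivery,1500000\n",
    "2017-18 Q2": "agency,project,stage,cost\nDPC,Example A,Completed,1500000\n",
    "2017-18 Q3": "agency,project,status,cost\nDPC,Example B,Green,2000000\n",
}
print(inconsistent_quarters(sample))  # ['2017-18 Q3']
```

Comparing every quarter against the first keeps the check simple. A fuller check might also compare column order, data types and value ranges.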

ICT Dashboard timeliness and completeness

By its nature, the ICT Dashboard is a repository of data from public sector agencies sent to DPC. This means that its content relies on the completeness, accuracy and candour of the data and status updates that agencies provide.

Historically, DPC and the Department of Treasury and Finance (DTF) have taken an 'arm's length' approach to their roles and responsibilities in assuring the accuracy and integrity of government frameworks and reporting processes.

DPC and DTF both stated in their response to the Digital Dashboard Phase 1 report that they believed that primary responsibility for compliance with government policies, including expenditure policies (such as specific ICT reporting requirements), rests with individual entities in accordance with Victoria's financial management framework.

If this approach to governance and oversight is to work effectively, then reporting agencies need to be committed to, and capable of, providing accurate and complete data in a timely fashion.

Timeliness

Due to the various processes required to collect, upload, review, approve and report the ICT Dashboard data, there is a lag of at least three months before data is published.

Many of these processes are discretionary, and DPC and reporting agencies could streamline them to reduce the time taken from data collection to data publication.

However, process reform may not improve the frequency of dashboard updates. We observed that most of the agencies in this audit have limited capability to provide updates in real time or more frequently than quarterly, due to internal resource and system constraints.

This data lag means that the ICT Dashboard has limited utility as a management support tool. For many fast-moving ICT projects, a three-month data delay can mean that what is publicly reported does not reflect what is currently happening with the project.

On a positive note, the upgraded ICT Dashboard, launched in March 2018, has automated some of the more laborious data entry and formatting activities that DPC previously needed to do. This should free up DPC resources to give more focus to higher-order analytical tasks rather than mundane data validation and data cleansing activities.

Completeness

For this audit, we considered two perspectives of completeness when reviewing the dashboard.

The first was about the completeness or integrity of the data reported to DPC, requiring all mandatory fields to be filled in with relevant data. We found no omissions in the uploaded data files that we reviewed. The new tool has an inbuilt data verification process that will not allow an agency to upload its data unless all required fields are filled in with data that meets the required parameters.
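The kind of upload check described above can be illustrated with a short sketch. This is not DPC's implementation. The field names are hypothetical stand-ins for the mandatory fields defined in the ICT Reporting Standard, and the sketch only tests that fields are filled in, not that values meet the required parameters.

```python
# Hypothetical mandatory fields; the ICT Reporting Standard defines the real list.
REQUIRED_FIELDS = ("agency", "project_name", "stage", "total_cost")


def missing_fields(record: dict) -> list[str]:
    """Return the mandatory fields that are absent or blank in one project record."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            missing.append(field)
    return missing


def can_upload(records: list[dict]) -> bool:
    """Accept a submission only if every record has all mandatory fields filled in."""
    return all(not missing_fields(record) for record in records)


# Hypothetical records: the second one leaves the stage blank.
complete = {"agency": "MW", "project_name": "Example", "stage": "Delivery", "total_cost": 1200000}
incomplete = {"agency": "PTV", "project_name": "Example 2", "stage": " ", "total_cost": 3000000}
print(can_upload([complete]))              # True
print(can_upload([complete, incomplete]))  # False
print(missing_fields(incomplete))          # ['stage']
```

Rejecting the whole submission when any record is incomplete mirrors the behaviour described in the text, where an agency cannot upload its data until all required fields are filled in.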

The second view of completeness relates to whether all the projects that should be reported on the ICT Dashboard have been reported.

During the audit, we identified five eligible ICT projects which the agencies involved in this audit had not reported on the ICT Dashboard.

Agencies are not consistently identifying whether their ICT projects will have, or already have, reached the threshold of $1 million.

ICT Dashboard reporting systems and processes

The agencies that we audited are very reliant on manual processes to identify reportable projects. DPC and DTF have no real-time visibility of agency financial systems to help identify projects that should be reported on, apart from public information such as media mentions, press releases or Budget Papers.

In Victoria, the devolved financial accountability system means that responsibility for the accuracy and completeness of data rests with agencies.

However, DPC does not have a process to assure government or the public that agencies have correctly identified and reported all the ICT projects that should be reported on the ICT Dashboard.

As a consequence, there is limited oversight and assurance of the completeness and accuracy of reported projects and data. Errors in the reported data highlight the need for both agencies and DPC to have stronger systems in place to report accurately.

We tested 18 projects and their source documents in detail and found that the accuracy of the information reported on the ICT Dashboard varies by agency; DPC, Melbourne Water (MW) and Public Transport Victoria (PTV) were mostly accurate, but the Department of Health and Human Services (DHHS) was not accurate.

Agencies' compliance in disclosing and reporting their projects to the ICT Dashboard in a timely manner also varied. Since the ICT Dashboard commenced, 128 projects out of 439 (29 per cent) were reported later than they should have been. Agencies advised us that this can be due to the cost being unknown at the start of the project, or scope changes that increase the cost of the project.
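The late-disclosure proportion quoted above can be reproduced directly from the two counts. The helper name below is illustrative only.

```python
def share_per_cent(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


late_projects, all_projects = 128, 439  # counts quoted in the text
print(share_per_cent(late_projects, all_projects))  # 29
```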

Agencies have processes in place to report data to the ICT Dashboard, but these processes do not always ensure that data is reported in accordance with the ICT Reporting Standard for the Victorian Public Service (ICT Reporting Standard). We found instances where the project's red/amber/green (RAG) status and items included in project budgets were inconsistent with the ICT Reporting Standard.

For example, we found that the four projects we examined at MW, and the one project we examined at DPC, reported the whole project cost as delivery costs, rather than separately identifying the initiation and delivery costs, as required by the ICT Reporting Standard.

Agencies have adequate processes to complete their mandatory ICT expenditure reporting; however, better coordination between Chief Financial Officers (CFO) and Chief Information Officers (CIO), or their equivalent, could help systematically identify projects that need to be reported on the ICT Dashboard.

Recommendations

We recommend that the Department of Premier and Cabinet:

- amend the ICT Reporting Standard for the Victorian Public Service to:

- require that agencies provide a more descriptive and standardised narrative about their ICT projects, including:

- information on the purpose of the project and overall value proposition (see Section 2.5)

- a description of the expected impact on the efficiency and effectiveness of service delivery (see Section 2.5)

- information on the benefits expected from the project's implementation (see Section 2.5)

- require the capture and reporting of expected project benefits on the Victorian Government ICT Dashboard, including a capability for reporting agencies to monitor benefits realisation (see Section 2.5)

- clarify that any agency-derived red/amber/green statuses used for a quarterly data update must align with the high-level red/amber/green definitions specified by the Department of Premier and Cabinet to ensure a consistent view across the public sector of ICT project status (see Section 3.4)

- require that the Chief Information Officer and Chief Financial Officer (or equivalent roles) jointly sign off the list of ICT projects that underpins the Financial Reporting Direction 22H reporting process and attest that all required projects have been identified and correctly reported (see Section 3.2)

- continue to consult with agencies subject to the Financial Management Act 1994 to determine the most useful data fields to be included in the ICT Reporting Standard for the Victorian Public Service with a key focus on avoiding any unnecessary reporting burden for agencies (see Section 2.4)

- conduct strategic analysis of ICT project categories and spend to support the intent of the Information Technology Strategy: Victorian Government 2016–2020 for agencies to share existing solutions within the public service or identify services that could be transitioned into a shared services model (see Section 2.5)

- identify methods to review and confirm the accuracy and completeness of data reported on the Victorian Government ICT Dashboard and communicate the results back to agencies (see Section 2.2).

We recommend that the Department of Treasury and Finance:

- implement a common chart of accounts across agencies subject to the Financial Management Act 1994, to consistently capture and code ICT‑related expenditure, to allow better assessment and analysis across all these entities, regardless of their size or portfolio (see Section 3.2).

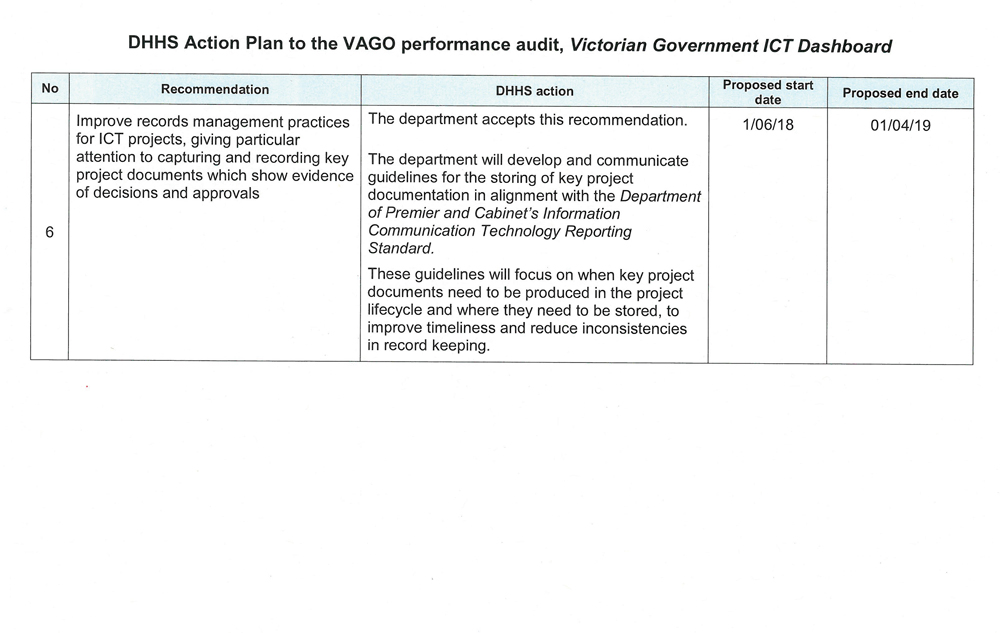

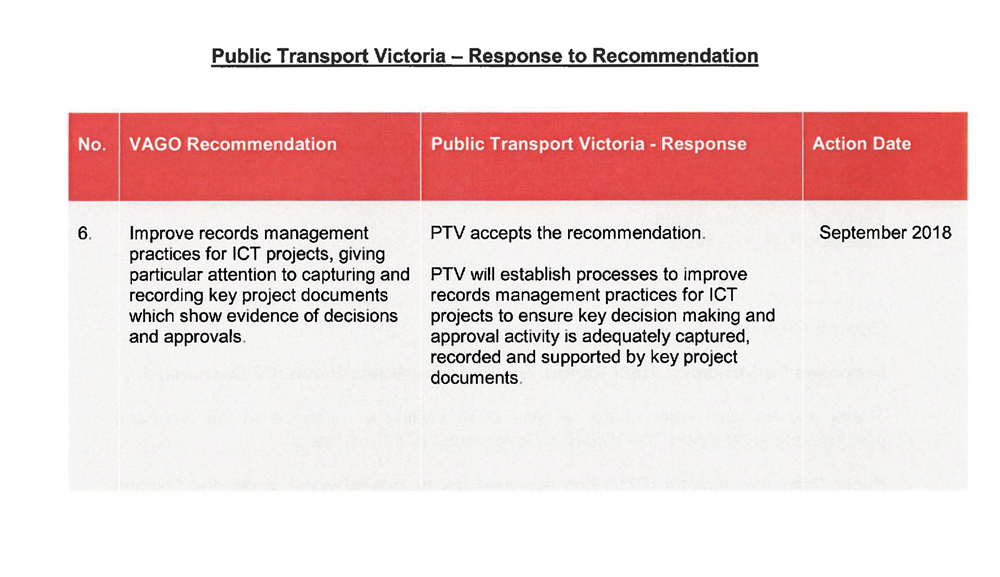

We recommend that the Department of Health and Human Services, the Department of Premier and Cabinet, Melbourne Water and Public Transport Victoria:

- improve records management practices for ICT projects, giving particular attention to capturing and recording key project documents which show evidence of decisions and approvals (see Section 3.3).

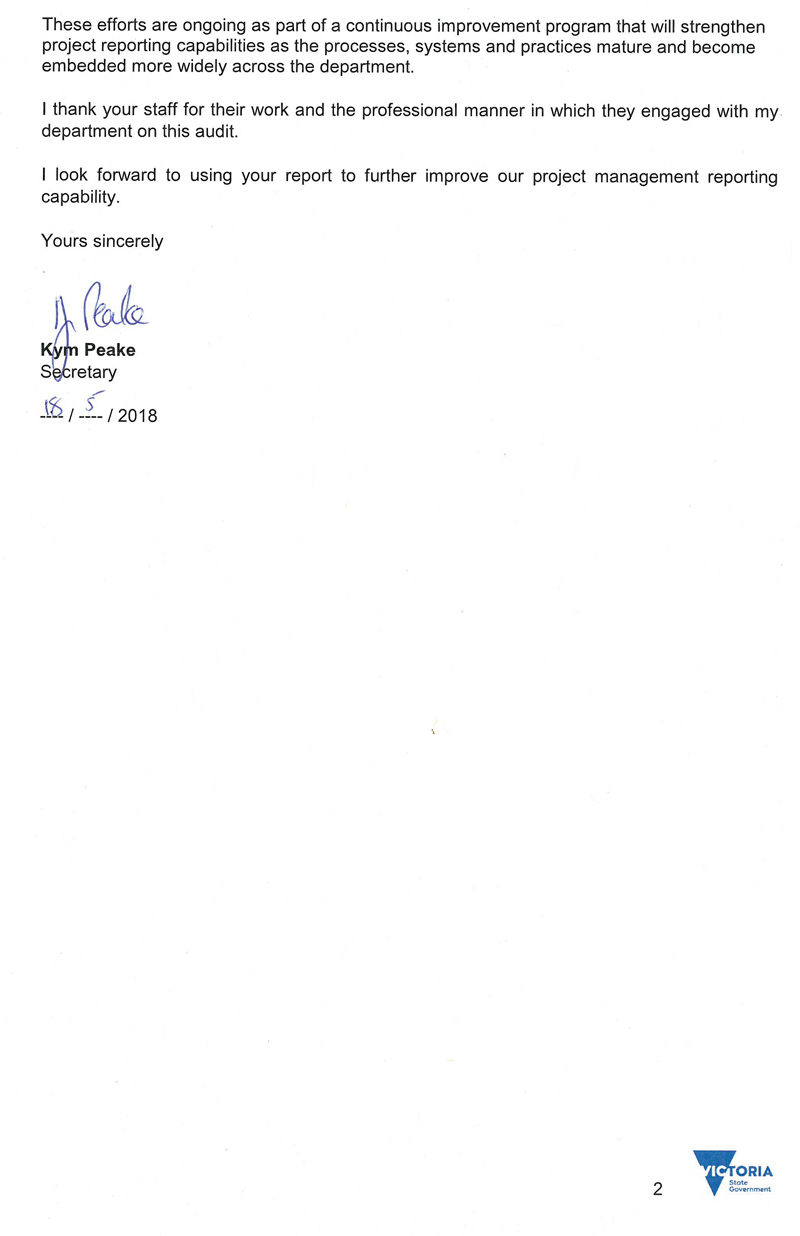

Responses to recommendations

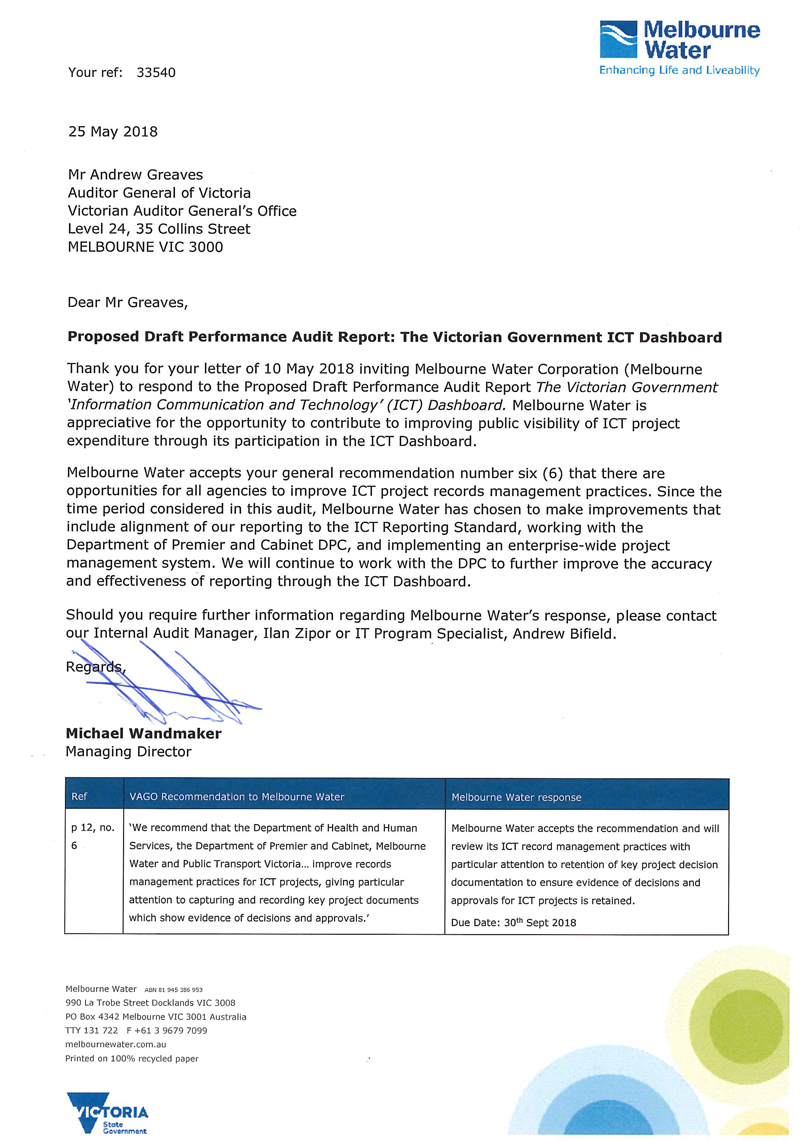

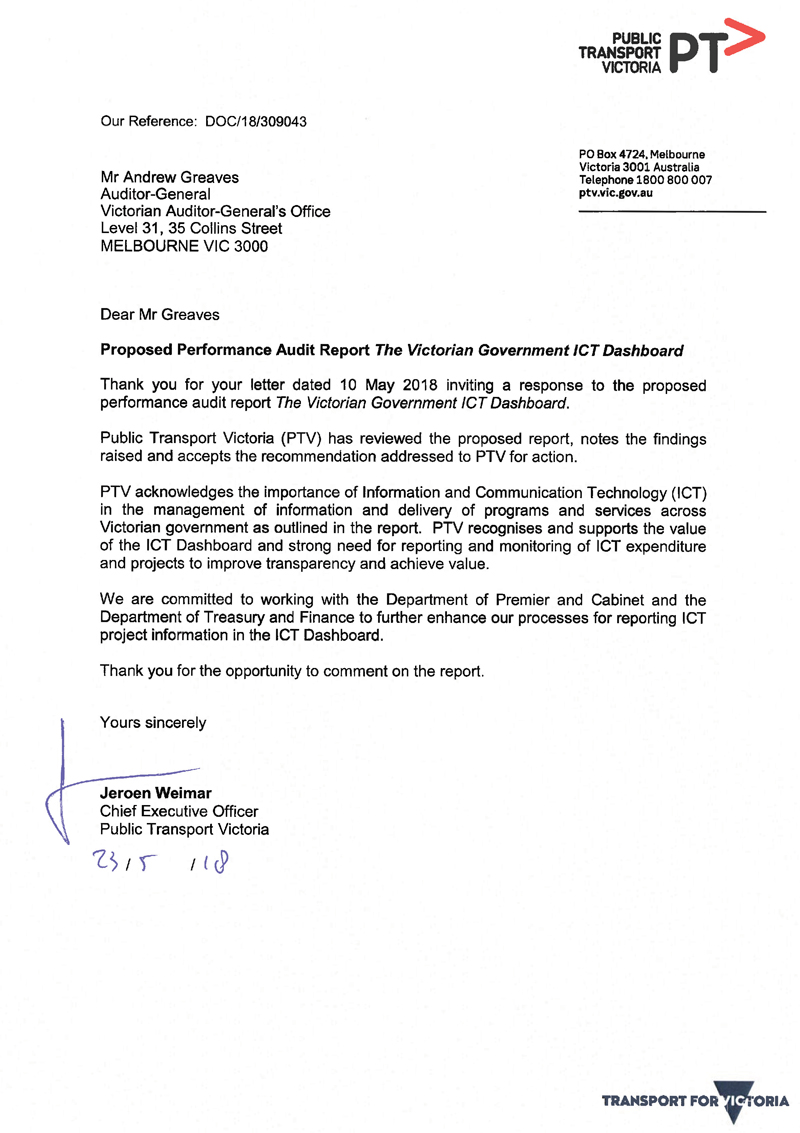

We have consulted with DHHS, DPC, DTF, MW and PTV. We considered their views when reaching our audit conclusions. As required by section 16(3) of the Audit Act 1994, we gave a draft copy of this report to those agencies and asked for their submissions or comments.

The following is a summary of those responses. The full responses are included in Appendix A.

DPC fully accepted four of the five recommendations directed to it, and accepted the majority of the other recommendation. DPC stated that it shares VAGO's focus on improving transparency in ICT investments across government and provided an action plan for the recommendations it had accepted.

DTF noted the findings of the report and accepted in principle the recommendation to establish a common chart of accounts to consistently capture and code ICT-related expenditure. DTF agreed that improving the quality of performance data, both financial and non-financial, is essential to better inform government policy decisions and prioritisation of resource allocation, including for ICT investment.

DHHS, DPC, MW and PTV accepted the recommendation directed to them to improve records management practices for ICT projects. DHHS committed to developing and communicating guidelines for the storing of key project documentation and project status reporting. All agencies provided an action plan detailing how they will address the recommendation.

1 Audit context

1.1 Background

ICT is integral to how governments manage information and deliver programs and services.

Our April 2015 Digital Dashboard Phase 1 report found that, in general, government agencies' financial and management processes did not enable the comprehensive reporting of actual ICT expenditure across the public sector. During that audit, many agencies found it difficult to provide basic information on their ICT spend and projects.

We also found that agencies involved in the 2015 audit were not able to assure Parliament and the Victorian community that their ICT investments had resulted in sufficient public value to justify the significant expenditure of taxpayers' money.

As a result, we recommended that DPC publicly report on ICT projects across the public sector. This reporting was to include relevant project status information, such as costs, time lines, governance and benefits realisation.

DPC, in conjunction with DTF, accepted this and all the other recommendations directed to it, and committed to implementing a quarterly report covering all ICT projects with a budget greater than $1 million, by 31 March 2016.

DPC noted that it did not have the authority to require departments and agencies to report the status of their ICT projects; however, it would work with public sector bodies to request this information.

DTF and DPC also noted that primary responsibility for compliance with government policies, including with expenditure policies, rests with individual entities in accordance with the financial management framework.

Victorian Government ICT expenditure

The Digital Dashboard Phase 1 report estimated that the Victorian Government's total ICT expenditure was about $3 billion per year. This was a conservative estimate because we found that financial processes in Victorian agencies did not enable comprehensive accounting of actual ICT expenditure.

We found that agencies spent an average of $720 million on capital ICT expenditure each year, between 2011–12 and 2013–14.

From March 2016 to December 2017, agencies have reported 191 projects as complete, valued at $907.9 million. These are shown in Figure 1A.

Figure 1A

Number of completed projects and reported cost, by quarter

Source: VAGO, based on the data files available from data.vic.

Information Technology Strategy: Victorian Government 2016–2020

The Information Technology Strategy: Victorian Government 2016–2020 (the Victorian IT Strategy) was released in May 2016. The Victorian IT Strategy gives direction and targets for public sector information management and technology over a five-year time frame.

The Victorian IT Strategy has four priorities:

- reform how government manages its information and data and makes these transparent

- seize opportunities from the digital revolution

- reform government's underlying technology

- lift the capability of government employees to implement ICT solutions that are innovative, contemporary and beneficial.

In response to one of our recommendations from the Digital Dashboard Phase 1 report, one of the Victorian IT Strategy's actions was to launch a public dashboard covering ICT projects worth over $1 million.

Other ICT dashboards across the world

Governments in other countries and Australian states have established mechanisms to publicly report on their ICT projects.

The format of this reporting varies, with some dashboards showing data at an aggregate level, such as expenditure by department, while others show very detailed project-specific information, and even identify key individuals by name and photograph.

International ICT dashboards

United States of America

The United States of America's (USA) IT Dashboard was launched in 2009 to provide information about 26 federal agencies' IT expenditure and the progress of their projects.

In March 2018, this dashboard contained data on over 7 000 projects, including 700 identified as 'major' projects. Major projects are required to submit a business case detailing the cost, schedule, and some performance data; however, there is no dollar figure specifying when a project requires a business case.

The USA IT Dashboard gives an overview of historical IT spending and forecasts future spending. In the 2019 fiscal year (1 October to 30 September), the USA expects to spend $83.4 billion on ICT projects, as shown in Figure 1B. The USA IT Dashboard also provides an overview of all projects' risk ratings, as shown in Figure 1C.

Figure 1B

USA's total IT spending by fiscal year

Source: USA IT Dashboard, www.itdashboard.gov.

Figure 1C

USA's collated risk ratings for all reported ICT projects

Source: USA IT Dashboard, www.itdashboard.gov.

The Netherlands

The Netherlands' dashboard, called the Central ICT Dashboard, provides information about major ICT projects, defined as valued at more than €5 million. In March 2018, this dashboard reported on 199 projects, with 96 of them in progress.

The dashboard compares total spend on multi-year projects by ministries (equivalent to departments). The most recent data on the dashboard is current to 31 December 2016.

Australian ICT dashboards

Queensland

Queensland's ICT Dashboard was launched in August 2013 and gives information on all major ICT projects overseen by the Queensland Government. By March 2018, the dashboard reported on 156 projects worth a total of $1.4 billion. Each department decides which projects to report on the dashboard.

Queensland ICT projects use a RAG status rating. The dashboard provides summary statistics on projects by department, RAG status, and expenditure per department.

Figure 1D shows the Queensland ICT Dashboard landing page, and summary snapshot.

Figure 1D

Landing page of Queensland's ICT Dashboard

Source: Queensland ICT Dashboard, www.qld.gov.au/ictdashboard.

New South Wales

New South Wales launched the digital.nsw dashboard in November 2017. This dashboard reports on the New South Wales Government's progress on projects that contribute to achieving its three digital government priorities.

The website gives an overview of each project and its budget. Figure 1E displays the landing page, and summary information.

Figure 1E

Summary information provided on digital.nsw

Source: New South Wales dashboard, www.digital.nsw.gov.au.

1.2 The Victorian Government ICT Dashboard

DPC manages the Victorian Government ICT Dashboard, which was launched in March 2016. The ICT Dashboard is a reporting tool that shows key metrics from public sector ICT projects valued at more than $1 million.

The ICT Dashboard fulfils a component of action 22 of the Victorian IT Strategy.

The ICT Dashboard is available to anyone with an internet connection, is interactive, and allows users to see and filter data in different ways.

Figure 1F shows the dashboard's overview of ICT projects, as at April 2018.

Figure 1F

Overview of reported ICT projects on the ICT Dashboard, April 2018

Source: ICT Dashboard.

Project information is required for every ICT project that has a total projected, estimated, current or actual ICT cost of $1 million or more and is initiated or in delivery, or was completed, postponed or terminated, in the quarter.
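The reporting rule above can be expressed as a simple test. The following is a sketch under stated assumptions, not an official implementation. The `Project` record and its field names are hypothetical, stage labels follow Figure 1I, and treating 'Business Case Approved' as an ongoing stage is an assumption because the rule as worded does not name it.

```python
from dataclasses import dataclass

THRESHOLD_AUD = 1_000_000  # "$1 million or more", as stated in the text

# Ongoing stages are reportable every quarter. Including "Business Case Approved"
# is an assumption; the rule as worded names only initiated and in delivery.
ONGOING_STAGES = {"Initiated", "Business Case Approved", "Delivery"}

# Closing stages are reportable only in the quarter in which they occurred.
CLOSING_STAGES = {"Completed", "Postponed", "Terminated"}


@dataclass
class Project:
    """Hypothetical project record used only for this illustration."""
    name: str
    highest_cost_aud: float  # highest of projected, estimated, current or actual cost
    stage: str
    stage_reached_this_quarter: bool


def is_reportable(project: Project) -> bool:
    """Apply the cost threshold and the stage condition for one quarter."""
    if project.highest_cost_aud < THRESHOLD_AUD:
        return False
    if project.stage in ONGOING_STAGES:
        return True
    return project.stage in CLOSING_STAGES and project.stage_reached_this_quarter


print(is_reportable(Project("Example A", 1_000_000, "Delivery", False)))  # True
print(is_reportable(Project("Example B", 999_999, "Delivery", False)))    # False
print(is_reportable(Project("Example C", 2_500_000, "Completed", True)))  # True
```

Using the highest of the available cost figures reflects the wording "total projected, estimated, current or actual ICT cost": a project becomes reportable as soon as any one of those figures reaches the threshold.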

Since the dashboard's creation, 84 of the 184 agencies that are required to report on the ICT Dashboard have reported on 439 projects. The number of reported projects has gradually risen since March 2016, as shown in Figure 1G.

Figure 1G

Number of projects reported on the ICT Dashboard, by quarter

Note: Until Q2 2017–18, agencies were required to report on projects until the end of the financial year, even if the project was concluded. It was therefore expected that the number of projects would increase throughout the financial year. From Q2 2017–18, DPC advised that it would now remove projects from the dashboard as they were completed, terminated or merged.

Source: VAGO, based on the data files available from data.vic.

ICT projects reported on the ICT Dashboard

The latest available data, for the December quarter 2017, shows 246 projects from 65 agencies. These projects are valued at $1.8 billion.

Figure 1H shows the number of projects reported and the budgeted cost, by government domain. The Health domain has the largest number of projects at 55 and second highest cost at $337.1 million. The Law and Justice domain has the highest combined project cost of $730.2 million.

Figure 1H

Number and cost of projects, by government domain, December quarter 2017

Source: VAGO, based on the data files available from data.vic.

Agencies are required to report on their projects' current implementation stage. The dashboard has seven implementation status categories, as shown in Figure 1I.

Figure 1I

Stages of implementation

| Stage | Definition |
|---|---|
| Initiated | Pre 'project delivery' activities are underway including preliminary planning, feasibility study, business case development and/or funding request. |
| Business Case Approved | Project business case has been approved or funding has been allocated. |
| Delivery | Project has commenced delivery. |
| Completed | Project has delivered its outcomes, and is being, or has been closed. |
| Postponed | Project has been temporarily put on hold. |
| Terminated prior to 'project completed' | Work on the project has ended prematurely (i.e. a decision has been made to stop work). |
| Merged | Scope of the project has been merged into another project. |

Source: Version 2.0 of the ICT Reporting Standard.

Under version 1.0 of the ICT Reporting Standard, agencies reported against six stages:

- initiated

- in progress—pre-implementation activities

- in progress—implementation activities

- project completed—project closed

- project postponed

- project terminated (prior to completion).

As shown in Figure 1I, version 2.0 of the standard has not significantly altered the project stages.

Figure 1J shows the breakdown of the 246 reported projects, by stage. The majority (149, or 61 per cent) are listed as in 'delivery'.

Figure 1J

Projects by stage of implementation, December quarter 2017

Source: VAGO, based on the data files available from data.vic.

The ICT Dashboard reports the status of projects using a RAG format. Agencies report the status of the project, as per their most recent project control board (or equivalent) report, using the definitions outlined in Figure 1K.

Under version 1.0 of the ICT Reporting Standard, the RAG status used the same definitions; however, the status only reflected whether the current implementation time line was on schedule. It did not take account of other matters such as risk, functionality or cost.

Figure 1K

RAG status definitions

| Status | Definition |
|---|---|
| ● Green | On track |
| ● Amber | Issues exist but they are under the project manager's control |
| ● Red | Serious issues exist that are beyond the project manager's control |

Source: Version 2.0 of the ICT Reporting Standard.

There is no guidance in the ICT Reporting Standard regarding when a project needs to start reporting a RAG status. DPC advised that its new online reporting tool has built-in functionality to activate RAG status reporting when a project moves into the delivery stage. However, this has not been observed or tested by the audit team.

In the December quarter 2017, 178 of the 246 projects (72 per cent) reported a RAG status.

Figure 1L shows the RAG status of reported projects, for the December quarter 2017. Just under 80 per cent of projects were reported as on track.

Figure 1L

Reported projects by RAG status, December quarter 2017

Source: VAGO, based on the data files available from data.vic.

New dashboard tool launched in early 2018

In March 2018, DPC went live with a new dashboard system, which offers more functionality than the previous tool.

The new tool continues most of the previous dashboard's public-facing functionality and is highly interactive. It allows a user to receive a high-level overview, such as RAG status, domain or ICT project type, or to specifically focus on a single project or agency. Users can apply filters for project costs and duration.

The new dashboard home page has three categories for users—understand, explore and improve.

The 'understand' section provides the dashboard's strategic objectives and data collection process, and discusses how the data is used.

New features give users a historical overview of all ICT projects. Users can download quarterly data files and, as of March 2018, this download includes how projects have progressed over the previous five quarters.

The 'explore' section allows users to explore the reported government ICT projects across stage, status and domain. A dashboard overview summarises projects by stage and status, by cost tiers, top five departments/agencies ranking, government domains and ICT project categories.

A search, filter and sort function has been added in the new dashboard system to improve useability. A revision history is now available for each agency to track any changes to project schedules and costs.

The new dashboard system can also generate a 'project on a page' summary, which gives agencies snapshots of their projects' status and data.

The last section is 'improve' where users can provide feedback to dashboard administrators.

1.3 Legislation and standards

Financial Management Act 1994

The FMA governs Victorian Government financial investments and reporting. The FMA requires government to apply the principles of sound financial management.

In the context of this legislation, sound financial management is to be achieved by:

- establishing and maintaining a budgeting and reporting framework

- prudent management of financial risks, including risks arising from the management of assets

- considering the financial impact of decisions or actions on future generations

- disclosing government and agency financial decisions in a full, accurate and timely way.

Under section 8 of the FMA, the Minister for Finance issues directions, which apply to public sector bodies.

These include Financial Reporting Directions (FRD), which are mandatory and must be applied if the public agency is subject to the FMA.

Financial Reporting Direction 22H—Standard disclosures in the report of operations

The purpose of FRD 22H is to prescribe the content of agencies' report of operations within their annual report to ensure consistency in reporting.

One of the requirements of FRD 22H is for agencies to disclose total ICT business as usual (BAU) expenditure and total ICT non-BAU expenditure for the relevant reporting period.

This part of FRD 22H was first applied in the 2016–17 financial year and attestations on ICT expenditure were included in agencies' annual reports for that period.

ICT Reporting Standard for the Victorian Public Service

Prior to the issuing of the FRD 22H reporting requirements for ICT expenditure, DPC released version 1.0 of the ICT Reporting Standard in September 2015.

The ICT Reporting Standard sets the business reporting requirements for ICT expenditure and ICT projects with budgets over $1 million and applies to all departments and bodies, as defined by the FMA.

At the same time, DPC released the ICT Expenditure Reporting Guideline for the Victorian Government. The guideline gives data reporting recommendations and guidance to agencies.

In September 2017, DPC issued version 2.0 of the ICT Reporting Standard. Version 2.0 references the requirement for agencies to comply with FRD 22H and added seven extra reporting fields.

The revised ICT Reporting Standard was first used for the 2017–18 December quarter data, which was released to the public in late March 2018.

1.4 Governance framework

There are a number of governance bodies that are relevant for ICT:

- The Minister for Finance is the responsible minister under the FMA and also issues FRDs.

- The Special Minister of State is responsible for the Victorian IT Strategy, including the ICT Dashboard.

- The Victorian Secretaries' Board (VSB) comprises the Secretaries of each department, the Chief Commissioner of Victoria Police and the Victorian Public Sector Commissioner. The aim of the VSB is to coordinate policy initiatives across the public sector, and promote leadership and information exchange. The VSB was the approval authority for the original and revised ICT reporting standards.

- The Integrity and Corporate Reform subcommittee of the VSB was delegated responsibility, by the VSB, for dealing with operational matters in relation to the ICT Dashboard.

- The Victorian CIOs' Leadership Group (formerly known as the CIOs' Council) includes the CIOs from bodies who are members of the VSB plus any invited observers. The group is responsible for setting technical ICT standards and guidelines and for coordination of major ICT issues across agencies.

1.5 Agency roles

The following describes the departments with responsibilities for the ICT Dashboard and gives information on the agencies that we included in this audit for focused testing.

Department of Premier and Cabinet

DPC is the owner of the ICT Dashboard and oversees the reporting process. It collects data from FMA agencies and publishes quarterly reports on the ICT Dashboard.

DPC is also a reporting agency and has reported six projects on the ICT Dashboard. We reviewed one of DPC's ICT projects in detail.

Department of Treasury and Finance

DTF is the owner of the overall State Budget process that is key to the funding approval for most ICT projects. DTF also manages the High Value High Risk (HVHR) process which requires the ongoing monitoring of more complex, higher-risk ICT projects.

As an entity, DTF has reported three projects on the ICT Dashboard since it launched in March 2016, with two of these currently in the initiation stage.

Department of Health and Human Services

DHHS develops and delivers policies, programs and services that support and enhance the health and wellbeing of all Victorians.

DHHS has reported 51 projects on the ICT Dashboard since the dashboard's inception. We reviewed eight of DHHS's ICT projects in detail.

Melbourne Water

MW is a statutory authority that manages and protects Melbourne's major water resources on behalf of the community.

MW has reported 33 projects on the ICT Dashboard since its launch. We reviewed four of MW's ICT projects in detail.

Public Transport Victoria

PTV is a statutory authority that acts as a system coordinator for all public transport in Victoria. It aims to improve public transport in Victoria, by ensuring better coordination between modes, facilitating expansion of the network, auditing public transport assets and promoting public transport.

PTV has reported 20 projects on the ICT Dashboard since its launch. We reviewed five of PTV's ICT projects in detail.

1.6 Previous audits

Our Digital Dashboard Phase 1 report estimated that the Victorian Government's ICT expenditure was $3 billion per year and found that Victorian agencies did not have comprehensive accounting in place to identify actual ICT expenditure.

Our follow-up March 2016 report Digital Dashboard: Status Review of ICT Projects and Initiatives – Phase 2 examined a selection of ICT projects in detail.

Although some elements of better practice were identified, the audit confirmed that the Victorian Government needed to be more effective in planning and managing ICT projects, as they continued to show poor planning and implementation, resulting in significant delays and budget blowouts.

1.7 Why this audit is important

ICT projects are a significant component of the Victorian public sector's annual expenditure.

It is important to track information on the status and outcomes of public sector ICT initiatives to monitor whether public resources have been spent in an efficient and effective manner. Greater transparency also helps to assess whether these investments have enhanced government services or addressed the problems they were meant to resolve.

The ICT Dashboard was developed to provide accurate, reliable and complete information about ICT expenditure by Victorian Government departments and agencies and to provide assurance and transparency to the public.

The ICT Dashboard has now reported eight quarters of data and this audit gives some insight into whether agencies are now more reliably monitoring and recording—as well as transparently reporting—their ICT investments.

1.8 What this audit examined and how

The objective of this audit was to examine whether transparency in government ICT investments has improved since the development of the ICT Dashboard.

We examined whether:

- information reported on the ICT Dashboard is accurate, timely and complete

- information reported on the ICT Dashboard is accessible and easy to understand

- agencies have systems and processes to ensure that data reported on the ICT Dashboard meets the ICT Reporting Standard.

We examined DPC in its role as the system owner of the ICT Dashboard and also examined one of its ICT projects which is also scrutinised by DTF's HVHR process.

We examined DTF due to its role in overseeing the State Budget process, financial compliance frameworks and the HVHR process.

DHHS, PTV and MW were included in the audit to allow us to examine a spread of projects across different areas of the public sector. Across these three entities we examined 17 ICT projects in detail.

These projects were chosen on a risk and materiality basis, which included factors such as schedule, cost, type of project and reported RAG status.

The full list of projects we examined in detail can be found in Appendix B.

The methods for this audit included:

- a review of corporate documents, including project plans, policies, frameworks, and briefings at selected departments and agencies

- interviews with staff and senior management at selected departments and agencies

- a review of processes and key information systems used to collect, analyse and report information to the ICT Dashboard

- a review of financial data related to the case study ICT projects and expenditure at selected departments and agencies.

We conducted our audit in accordance with section 15 of the Audit Act 1994 and ASAE 3500 Performance Engagements. We complied with the independence and other relevant ethical requirements related to assurance engagements. The cost of this audit was $310 000.

1.9 Report structure

The structure of this report is as follows:

- Part 2 discusses our review of the ICT Dashboard

- Part 3 discusses our review of case study projects at selected agencies.

2 The ICT Dashboard—transparency and oversight

The ICT Dashboard launched in March 2016 and, since then, there have been eight quarters of data reported. From the potential 184 FMA agencies required to report on the ICT Dashboard, 84 have reported 439 ICT projects.

The ICT Reporting Standard requires applicable agencies to report data on the ICT Dashboard for projects worth over $1 million. The ICT Reporting Standard's key objectives are to:

- increase government transparency in managing ICT expenditure and project status

- identify emerging trends in ICT expenditure and project types

- discover collaboration and shared services opportunities

- promote consistency in tracking ICT expenditure and the performance of ICT projects

- enable clear oversight for a more effective approach to future information management and technology

- meet our Digital Dashboard Phase 1 report audit recommendations.

This part of the report discusses our review of the application of the ICT Reporting Standard, with a particular focus on the transparency, accuracy and usefulness of the data that the ICT Dashboard displays.

2.1 Conclusion

The information reported on the ICT Dashboard is accessible and easy to understand. It is also reasonably timely, taking into consideration the processes that need to be followed for the data to be collected and published.

The information is also 'complete' from a data entry perspective for the projects that agencies are reporting. However, we are not able to give assurance that all projects that should be included in the dashboard were reported, because we detected a number that had been omitted. We found the omissions were due to human error and had no discernible pattern.

Although the ICT Dashboard has been a marked improvement on the previous quality and availability of ICT project data, it could further mature and improve transparency, particularly by:

- providing more useful descriptive narratives of the purpose and status of projects

- capturing and reporting expected project benefits

- better identifying and confirming what ICT category a project fits into, such as 'records management'.

2.2 Has the dashboard improved transparency?

Since its launch, the ICT Dashboard has created a substantial increase in the quality and availability of information about public sector ICT projects.

Our Digital Dashboard Phase 1 report identified the need for such a tool due to historically poor transparency within the public sector around what was being spent on ICT, in which agency and for what purpose.

In response to our Digital Dashboard Phase 1 report, both DPC and DTF agreed that there was a need to set up an ICT-specific dashboard.

The Victorian IT Strategy authorised the establishment of the ICT Dashboard and the ICT Reporting Standard gives operational authority to the content and requirements of the ICT Dashboard. The ICT Reporting Standard was approved by the VSB in September 2015 and updated in September 2017.

The ICT Reporting Standard also notes that where the project includes non-ICT-related investment, agencies should only report on the ICT project component.

By its nature, the ICT Dashboard is a repository of data from public sector agencies sent to DPC. This means that its content is driven by the completeness, accuracy and candour of the data that agencies provide when responding to the mandatory elements of the ICT Reporting Standard.

An apparent transparency challenge is that reporting agencies are not consistently identifying whether their ICT projects will reach, or have already reached, the ICT Reporting Standard's threshold of $1 million.

The agencies that we audited rely on staff manually checking lists extracted from different systems to identify reportable projects. DPC and DTF have no real-time visibility of agency financial systems to help identify projects that should be reported, apart from public information sources such as media mentions, press releases or Budget Papers.

During the audit we identified five eligible ICT projects that agencies did not report on the ICT Dashboard. We discuss this in detail in Part 3.

DPC does not have a process to assure itself, government or the public that agencies have correctly identified and reported all ICT projects that should be on the ICT Dashboard. During this audit DPC stated that it does not believe it has a role in assuring the data that agencies provide.

The devolved financial accountability system in Victoria means that the responsibility for the accuracy and completeness of data rests with agencies. The consequence of this approach is that there is limited oversight of and assurance about the accuracy and completeness of reported data.

Likewise, the agencies where we detected errors and omissions do not have adequate processes to make sure the data they report is accurate and complete.

2.3 Is it easy to understand and accessible?

The ICT Dashboard is publicly available and can be viewed from any internet connection. It is designed to be compatible with multiple devices such as desktop computers, tablets and mobile phones.

Accessibility of the ICT Dashboard tool

In addition to the continuous availability of the ICT Dashboard for any internet user, DPC states that it strives for the ICT Dashboard to comply with the World Wide Web Consortium's Web Content Accessibility Guidelines (WCAG) 2.0. These guidelines are designed to help people with disabilities who face challenges when accessing material on the internet.

The Victorian Government has publicly stated that it will ensure its online content is available to the widest possible audience, including readers using assistive technology or other accessibility features.

DPC conducted accessibility testing of the new ICT Dashboard tool before it publicly rolled out in March 2018. DPC also advised us that it had commissioned a disability advocacy agency to conduct a user experience test for people with impaired vision. At the time of this audit, DPC stated that it intends to conduct regular accessibility audits of the ICT Dashboard.

Accessibility of the ICT Dashboard data

During the audit, we were able to access and download all the source data files that had been used to populate the ICT Dashboard since it was launched.

A data file for the most recent quarter is available on the dashboard's 'understand' page, while previous quarters are located on the Victorian Government's public data repository known as data.vic.

There are no hyperlinks to historical datasets from the ICT Dashboard page to data.vic and identifying and downloading historical files was not a simple process.

We downloaded the data into a typical spreadsheet program and observed that it correctly tabulated against column headings and had consistency from quarter to quarter.

We identified some minor discrepancies related to project codes and naming conventions. We understand DPC resolved this when transitioning to the new ICT Dashboard.

2.4 Is the information timely and complete?

The technology used to display the ICT Dashboard data has matured over the last two years.

The new ICT Dashboard is a cloud-based ICT platform which allows reporting agencies to directly upload required data into the system. The most recent data upload (December quarter 2017) is now in the cloud-based database and displayed through a data visualisation tool.

This is an efficiency improvement over the previous system, which required agencies' spreadsheet data files to be uploaded into a shared repository for checking and formatting offline by DPC, before being imported into a data visualisation tool for online publication.

The new system's data entry approach has streamlined the review process for agencies and DPC because validation checks are now built into data fields that will only accept a data input that meets required parameters.

Once entered into the system, the data is staged from 'draft' to 'approved' by the reporting agency, then held by DPC as 'confirmed' until all the dashboard data is 'published' at the end of the quarter, after the Special Minister of State is provided with a briefing.
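The staging sequence described above can be pictured as a simple one-way workflow. The following is a minimal sketch only: the four stage names come from this report, while the transition rules, the `ALLOWED_TRANSITIONS` table and the `advance` function are illustrative assumptions and do not describe how the dashboard tool is actually built.

```python
# Stage names are taken from the report; the transition rules are an assumption.
ALLOWED_TRANSITIONS = {
    "draft": {"approved"},        # the reporting agency approves its own entry
    "approved": {"confirmed"},    # DPC holds the entry as confirmed
    "confirmed": {"published"},   # released with the rest of the quarter's data
    "published": set(),           # final stage for the quarter
}


def advance(current: str, target: str) -> str:
    """Return the new stage, or raise ValueError if the move is not allowed."""
    if current not in ALLOWED_TRANSITIONS:
        raise ValueError(f"unknown stage: {current!r}")
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current!r} to {target!r}")
    return target


# Walk one record through the full sequence.
stage = "draft"
for next_stage in ("approved", "confirmed", "published"):
    stage = advance(stage, next_stage)
print(stage)
```

In this sketch a record cannot skip a stage, so an entry still in 'draft' cannot be published without first being approved and confirmed.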

Is the dashboard timely?

Due to the various processes required to collect, upload, review, approve and report the ICT Dashboard data, there is a lag of at least three months before data is published on the dashboard.

This means that data could be nearly six months old just before the dashboard has its quarterly refresh.

For many fast-moving ICT projects, this can mean that what is publicly reported does not reflect what is actually currently happening.

We observed that, at present, there are limited opportunities to reduce this data lag due to:

- manual and time-consuming data collation and data entry processes at agencies

- agency internal sign-off for RAG status and progress commentary

- cross-checking with DTF information related to ICT projects that are subject to HVHR processes

- DPC-initiated reviews of any major data anomalies and projects with consecutive red or amber RAG statuses

- a quarterly briefing to the Special Minister of State, which gives a summary of the ICT Dashboard's results prior to publication.

On a positive note, the new tool has automated some of the more laborious data entry and data formatting activities. This should free up DPC resources to focus on higher order analytical tasks rather than mundane data validation and data cleansing activities.

Is the dashboard complete?

Between March 2016 and September 2017, agencies reported against 17 data fields. Since December 2017, when version 2.0 of the ICT Reporting Standard became operational, agencies have reported against 24 fields.

A complete list of the data fields agencies need to report against can be found in Appendix C.

For this audit, we considered two views of completeness when reviewing the dashboard.

The first view was about the completeness or integrity of the data reported to DPC, requiring all mandatory fields to be filled in with relevant and coherent data.

We reviewed the data for the September 2017 quarter that was published in December 2017. We found no omissions in the mandatory data fields that we reviewed.

The new tool has an in-built data verification process that will not allow an agency to upload its data unless all required fields are complete. An incomplete upload is listed as 'draft' and will attract a follow up from DPC if it is not actioned promptly by the reporting agency.
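A completeness check of this kind can be sketched as follows. This is an illustration under stated assumptions, not the tool's actual logic: the field names in `REQUIRED_FIELDS` are hypothetical (Appendix C lists the real fields), and the 'ready for approval' label is invented for the example.

```python
# Hypothetical subset of mandatory fields; Appendix C holds the real list.
REQUIRED_FIELDS = ("project_title", "agency", "project_stage",
                   "planned_end_date", "total_cost")


def missing_fields(record: dict) -> list:
    """Return the required fields that are absent or blank in a record."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or str(value).strip() == "":
            missing.append(field)
    return missing


def upload_status(record: dict) -> str:
    """An incomplete record stays 'draft'; the other label is an assumption."""
    return "draft" if missing_fields(record) else "ready for approval"


# Example record with one blank and one absent field (all values are made up).
example = {
    "project_title": "Example system upgrade",
    "agency": "Example agency",
    "project_stage": "Delivery",
    "planned_end_date": "",
}
print(missing_fields(example))
print(upload_status(example))
```

A check like this tests only whether fields are filled in. It says nothing about whether the values are accurate, or whether every eligible project has been entered at all, which is the second view of completeness.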

The second view of completeness related to whether all the projects that should be reported on the ICT Dashboard have been reported.

As stated earlier, we identified five eligible ICT projects that agencies had not reported on the ICT Dashboard. We discuss this further in Part 3 of this report.

We also found that a significant number of projects have not been reported on the dashboard according to the time frame set by the ICT Reporting Standard.

We compared when each project commenced with when it was first reported on the ICT Dashboard. We found that 128 of 439 (29 per cent) projects were reported later than they should have been. The longest disclosure delay was 21 months, for two projects, followed by 18 months, for three projects. The average delay in disclosure was seven months.

2.5 Does it provide better oversight?

Between March 2016 and the end of December 2017, the ICT Dashboard site had about 10 400 unique page views from internet users. The tracking software for the site does not show which domain these users come from so it is not possible to attribute these views to a particular type of user.

The new system's functionality increases the opportunity for better oversight of all ICT projects. In particular, showing RAG status trends over time will give users of the ICT Dashboard a longer-term view of how a project is progressing.

The new system also allows reporting agencies to use non-mandatory fields in the system to track a wider range of project attributes beyond the cost or schedule data, such as issues, risks, and benefits.

Identifying opportunities to better align investments across agencies

One of the actions of the Victorian IT Strategy is 'Following on from the ICT Projects Dashboard, establish a high level “portfolio management office” function to form a portfolio view of government ICT projects over $1 million, providing templates, advice and assistance where relevant'.

Establishing this function requires data to be visible across all portfolios, to gain a broad view of ICT projects across the public sector. The current dashboard has substantively achieved this.

However, to move to a more strategic view, the ICT Dashboard should aim to provide more meaningful, qualitative information on the nature, purpose and benefits of an ICT project. It also needs to categorise ICT projects consistently.

Quality and usefulness of project descriptive narratives

One of the goals of the ICT Reporting Standard is to identify emerging trends in ICT expenditure and project types across government.

The latest ICT Dashboard data has taken the first step towards collecting standardised ICT project category descriptions. The 30 project type descriptions are from the Australian Government Architecture Reference Models published in 2011 by the now defunct Australian Government Information Management Office.

At present, it is difficult to derive a detailed understanding of the nature and purpose of a reported ICT project from the descriptive material that agencies report on the ICT Dashboard.

Project titles do not allow users to understand the functionality or capability of the project or why it is being implemented.

We also observed that although the project type descriptor is standardised, DPC has included an extra category of 'other' and allows agencies to select multiple categories for their ICT projects. DPC advised us that the 'other' option was provided because the project category field is mandatory.

DPC also advised us that where an agency selected 'other', DPC worked with the agency to assist them to choose a more relevant field, and that currently no agencies have projects categorised as 'other' on the ICT Dashboard.

We query whether allowing multiple selections or the 'other' category will provide a more granular view of ICT investments, without some level of justification by the agency or more quality control and oversight by DPC.

Benefits and impacts from ICT projects

Our Digital Dashboard Phase 1 report found that Victorian agencies and entities were not in a position to assure Parliament and the Victorian community that their ICT investments resulted in sufficient public value to justify the significant expenditure of taxpayers' money.

This was because the agencies were unable to demonstrate that the expected benefits from ICT investments had been realised. At the time of the Digital Dashboard Phase 1 report, only a quarter of the 1 249 projects that were reported to VAGO had a benefits realisation plan. Only 33 per cent of the reviewed sample effectively laid out the expected benefits and set out measures and targets for these.

The Digital Dashboard Phase 1 report also found that it was very difficult to obtain consistent and meaningful data on benefits realisation. Of the 788 projects reported as 'completed', a little over 10 per cent had had their expected benefits assessed.

Based on these findings we recommended establishing a public reporting (PR) mechanism that provides relevant project status information on ICT projects across the public sector, with key metrics and project information to be included in this reporting such as costs, time lines, governance and benefits realisation.

Low rates of tracking or assessment of benefits realisation are not confined to the ICT domain. We have commented in other reports that a lack of a methodical evaluation culture in the Victorian public sector often means there is no systematic and objective collection of lessons learned, to better inform the planning and execution of future projects.

We have observed this deficiency in audits of projects across many sectors and have also identified a comparatively low number of post-implementation Gate 6 'Benefits Realisation' reviews done under DTF's gateway review process, compared to other earlier stage gates.

Our review of the new ICT Dashboard software tool identified that it has capacity to include more information about ICT projects such as issues, stakeholders, risks and benefits.

We understand that the system also has a purpose-designed module which agencies can use to track benefits for each of their reported ICT projects.

To fully meet the benefits realisation component of our 2015 recommendation, DPC should promptly examine the implementation of this functionality. DPC should also amend the ICT Reporting Standard to require agencies to report on the expected impact of their ICT investments and to track benefits as they are realised.

3 The ICT Dashboard—agency data and reporting

In Victoria's devolved financial accountability system, responsibility for the accuracy and completeness of data rests with the board or accountable officer of each entity, and is certified through the entity's governance process, rather than by DPC or DTF.

This part of the report discusses the results of our testing of the data reported on the ICT Dashboard by the agencies involved in this audit.

3.1 Conclusion

The accuracy of the information reported on the ICT Dashboard varies by agency. We found that the information entered by DPC, MW and PTV was mostly accurate, but the information entered by DHHS was not accurate.

Agencies' compliance in reporting projects to the ICT Dashboard was varied. We found two projects at MW were not disclosed for 18 months and one project at DHHS was not reported until 15 months after it commenced.

Project-related data reported on the ICT Dashboard is complete, in that all necessary fields have been filled in correctly. However, because we detected five projects that were omitted in the agencies we reviewed, we cannot be sure that all ICT projects that should be reported have been reported.

Agencies have manual processes to report data on the ICT Dashboard, but these processes do not always ensure that data is reported in accordance with the ICT Reporting Standard. We found instances where a project's RAG status was inconsistent with the ICT Reporting Standard's definition, as well as inconsistencies in recording items in project budgets, such as the allocation of staffing costs.

Agencies have adequate processes to complete their mandatory reporting on ICT expenditure. However, better coordination between CFOs and CIOs (or their equivalent) could help systematically identify ICT projects that should be reported on the ICT Dashboard.

3.2 Are agencies following the ICT Reporting Standard?

The current ICT Reporting Standard defines 24 data fields that agencies are required to publish on the ICT Dashboard. It also specifies agency requirements for reporting their BAU and non-BAU ICT expenditure in their annual financial reports.

For this audit, we focused on the fields reported on the dashboard and non‑BAU expenditure. We did not assess BAU expenditure, apart from examining the most recent attestation made by the agencies in this audit, as ICT projects are not typically funded from this expenditure category.

Are ICT Reporting Standard requirements being met?

We expected to see that agencies were timely in identifying and reporting relevant projects on the ICT Dashboard, in accordance with the ICT Reporting Standard.

High-level results by agency

The four agencies we reviewed are meeting most of the ICT Dashboard reporting requirements. However, we observed some anomalies and small errors, which shows that they are not consistently meeting the ICT Reporting Standard.

We found that some agencies were using different criteria to determine their RAG status than those required by version 1.0 of the ICT Reporting Standard. There was also often a delay between the start of the project and when the project was reported on the ICT Dashboard.

We also detected examples where a project was omitted from the dashboard. We discuss this further in the section on mandatory ICT expenditure reporting requirements.

Department of Health and Human Services

We found that although DHHS generally follows the ICT Reporting Standard, it did not follow the standard for all projects when determining RAG status.

DHHS previously defined and reported its RAG status based on a combination of schedule, budget and risks, whereas under version 1.0 of the ICT Reporting Standard, the RAG status should only have been based on schedule.

We found that half of the projects we reviewed were late in being reported on the dashboard:

- One project commenced in May 2016 and was reported in December 2016—seven months after starting.

- One project that commenced in June 2016 was not reported until September 2017—15 months after starting.

- One project commenced in December 2016 but was not reported until September 2017—nine months after starting.

- One project commenced in February 2017 and was first reported in September 2017—seven months after starting.
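The delays listed above are whole calendar months between the month a project commenced and the month it was first reported. The short sketch below reproduces that arithmetic. The `months_between` helper is illustrative, and the day of the month in each date is a placeholder, because the report gives months only.

```python
from datetime import date


def months_between(start: date, reported: date) -> int:
    """Whole calendar months from commencement to first report (days ignored)."""
    return (reported.year - start.year) * 12 + (reported.month - start.month)


# (commenced, first reported) for the four DHHS projects listed above.
# The day of the month is a placeholder; the report gives months only.
dhhs_projects = [
    (date(2016, 5, 1), date(2016, 12, 1)),
    (date(2016, 6, 1), date(2017, 9, 1)),
    (date(2016, 12, 1), date(2017, 9, 1)),
    (date(2017, 2, 1), date(2017, 9, 1)),
]

delays = [months_between(start, reported) for start, reported in dhhs_projects]
print(delays)  # [7, 15, 9, 7]
```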

DHHS advised us that during the early stages of analysing and planning a project, the scope of the project may increase or decrease. As a result, some projects are not immediately identified as likely to exceed the $1 million threshold, and therefore as needing to be reported on the ICT Dashboard.

Department of Premier and Cabinet

We assessed one project at DPC and found that it did not report an initiation cost, but reported all costs as implementation costs.

The project team advised that the project's use of two different project management methodologies meant that classifying the phases of the project as initiation or delivery was difficult. In order to provide some transparency about the project's cost, DPC decided to report the full amount as implementation costs.

DPC advised that some of the product concepts it developed during the planning phase actually delivered some aspects of the final product. So, while these concepts included both initiation and delivery costs, the costs were only reported as delivery costs.

Melbourne Water

MW adhered to the ICT Reporting Standard, except for reporting of initiation costs and RAG status.

MW does not separately report initiation costs, with all costs reported as delivery costs. This means that there is no transparency about planning costs. MW advised that it believed that, at the time the report was developed, it was not practicable to reliably automate the reporting of separate initiation and delivery costs.

MW previously reported its RAG status based on the overall status of the project, whereas under version 1.0 of the ICT Reporting Standard, the RAG status should have only been based on schedule.

MW previously reported projects to the ICT Dashboard only once the project was confirmed as proceeding. This resulted in MW reporting 19 projects later than it should have. MW told us that it sought advice from DPC in late 2017 regarding when projects should start being reported on the ICT Dashboard and, as a result of that advice, will now report projects earlier in their planning phase.

This earlier identification and reporting of projects will be a positive step towards transparency.

Public Transport Victoria

For the projects that we assessed at PTV, we found that the agency adheres to the ICT Reporting Standard.

However, PTV may face challenges reporting its project RAG status going forward, as the RAG status definitions were altered in version 2.0 of the ICT Reporting Standard and PTV's internal RAG status definitions do not match the revised definitions.

This means that if PTV were to report internally that a project had a 'red' status, it would not necessarily equate to the 'red' status description in the ICT Reporting Standard, which states that 'serious issues exist and they are beyond the project manager's control'.

PTV advised us that there is a variation between its internal RAG status definitions and the ICT Reporting Standard definitions because PTV has implemented a project methodology that it believes suits the wide range and type of projects that it delivers.

FRD 22H requirements

FRD 22H prescribes ICT expenditure disclosures that agencies must make in their annual report of operations.

Under the Standing Directions of the Minister for Finance, the accountable officer or governing board of an agency must attest—that is, personally sign and date—this disclosure within the annual report of operations.

Attestation assurance processes in agencies

All the agencies we examined completed the required FRD 22H attestation in their 2016–17 annual report.

Because the FRD 22H attestation is a component of the annual report of operations and not a component of FMA agencies' yearly financial statements audited by VAGO, there is no external assurance of its accuracy.

Non-BAU ICT expenditure disclosure

FRD 22H requires that entities report their total non-BAU ICT expenditure, broken down between operational expenditure and capital expenditure.

We examined the non-BAU expenditure for agencies in this audit, to identify if any ICT projects were underway that met the $1 million reporting threshold but were not included on the ICT Dashboard.

We also asked agencies to provide us with a list of their current ICT projects, including the name of the project, start and expected completion date and forecast or approved total cost. We used this list to cross-check information reported under FRD 22H and ICT projects reported on the ICT Dashboard.
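A cross-check of this kind can be sketched as a comparison between an agency's project list and the project names published on the dashboard. The following is a minimal illustration only. The `Project` type, the function `find_unreported`, the project names and the dollar figures are all hypothetical, and it assumes that projects are matched by name and that the threshold applies to forecast or approved total cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Project:
    name: str
    forecast_cost: float  # forecast or approved total cost, in dollars


def find_unreported(agency_list, dashboard_names, threshold=1_000_000):
    """Return names of projects above the threshold that are absent from the dashboard."""
    # Normalise names so that case or stray whitespace does not hide a match.
    reported = {name.strip().lower() for name in dashboard_names}
    return [
        p.name
        for p in agency_list
        if p.forecast_cost > threshold and p.name.strip().lower() not in reported
    ]


# Hypothetical data for illustration only.
agency_list = [
    Project("Project A", 2_500_000),  # above threshold, on the dashboard
    Project("Project B", 800_000),    # below threshold, not reportable
    Project("Project C", 1_200_000),  # above threshold, missing from the dashboard
]
dashboard_names = ["project a"]

missing = find_unreported(agency_list, dashboard_names)
print(missing)  # ['Project C']
```

Testing against forecast cost rather than expenditure to date matters in this sketch, because a project can be expected to exceed $1 million well before its actual spending does.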

Department of Health and Human Services

All DHHS's ICT projects that should have been reported were correctly disclosed on the ICT Dashboard, although four projects we reviewed were disclosed late.

Department of Premier and Cabinet

We identified one project that should have been reported on the ICT Dashboard earlier than it was. DPC identified this project through its internal financial management processes when project expenditure reached $1 million. However, the project's projected cost had been known for at least six months before this.

As DPC does not centrally track projects until project expenditure reaches $1 million, DPC cannot be sure that it is reporting all the projects that should be on the ICT Dashboard.

Department of Treasury and Finance

All DTF's ICT projects that should have been reported were correctly disclosed on the ICT Dashboard.

Melbourne Water

We found one project currently underway at MW that should have been reported on the ICT Dashboard but was not.

After we told MW about this, it advised us that this project had been missed due to a data entry error in its project management tool. The project's final delivery cost was under $1 million, but the project should have been reported under the process that MW follows. MW advised us that it has since changed to a new project management tool in which reporting is set up so that this particular error can no longer occur.

Public Transport Victoria

We identified four projects that were not, but should have been, reported on the ICT Dashboard.

PTV advised us that two of these had the status 'postponed' when the ICT Dashboard launched in March 2016, and were not reported after the projects recommenced. The third project was omitted due to an administrative error. The two projects that were postponed have since been completed and the third will be included in the March 2018 quarter reporting, which is expected to be published on the ICT Dashboard in June 2018.

The fourth missing project is a joined-up project with the Department of Economic Development, Jobs, Transport and Resources (DEDJTR), which was missed due to an assumption by PTV that the project would be reported by DEDJTR.

PTV told us that it did not think it needed to report this project, as it was part of a broader DEDJTR project. However, DEDJTR only reports its component of this project on the ICT Dashboard, not the PTV component. PTV has rectified this oversight, with the project included in the March 2018 reporting upload, which is expected to be published in June 2018.

3.3 Are agencies reporting accurate data?

For the ICT Dashboard to effectively improve the transparency of ICT projects, it is important that the data underpinning agency reporting is verifiable and accurate. We assessed the accuracy of the data reported on the ICT Dashboard by reviewing 18 projects from four agencies.

To determine the accuracy of this data (dates, dollar figures, RAG status), we assessed whether the data on the dashboard was verifiable against source documents held at the agency.
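This kind of field-by-field verification can be sketched as follows. The sketch is illustrative only and is not the audit methodology itself. The field names, values and result labels are hypothetical, and it assumes that a field is verified only when the source document holds exactly the published value.

```python
def assess_fields(published, source):
    """Classify each published data field against agency source documentation."""
    results = {}
    for field, value in published.items():
        if field not in source:
            # No source document was provided for this field.
            results[field] = "unverifiable"
        elif source[field] == value:
            results[field] = "verified"
        else:
            results[field] = "mismatch"
    return results


# Hypothetical dashboard record and source documentation for one project.
published = {
    "revised_completion_date": "2018-06-30",
    "delivery_cost": 4_000_000,
    "rag_status": "green",
    "initiation_cost": 250_000,
}
source = {
    "revised_completion_date": "2018-06-30",
    "delivery_cost": 3_800_000,
    "rag_status": "green",
}

results = assess_fields(published, source)
for field, outcome in results.items():
    print(f"{field}: {outcome}")
```

Separating "mismatch" from "unverifiable" keeps apart the two kinds of problem: published data that differs from the source document, and data for which no source document could be provided.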

We found that the reported data is mostly accurate in three of the four agencies we tested. However, one agency had a larger number of inaccuracies, as shown in Figure 3A.

Figure 3A

Agencies reviewed and overall accuracy assessment for selected projects

| Agency | Number of projects reported on the ICT Dashboard (1 March 2016 to 30 September 2017) | Number of projects we examined | Overall accuracy assessment against projects we examined |
|---|---|---|---|
| DHHS | 51 | 8 | Not accurate |
| DPC | 6 | 1 | Mostly accurate |
| MW | 33 | 4 | Mostly accurate |
| PTV | 20 | 5 | Mostly accurate |

Source: VAGO, based on the data files available from data.vic.

We found that the reviewed agencies had the most difficulty when providing documentation to verify the initiation phase end date and cost of the initiation phase.

A summary of results from our 18 detailed project data reviews is in Appendix B.

High-level results by agency

We expected that agencies would be able to provide us with source documentation, such as project status reports, project initiation approvals and approved budgets and time lines, for the 17 data fields that were reported on the ICT Dashboard under version 1.0 of the ICT Reporting Standard. This version was applicable between March 2016 and September 2017.

Appendix C contains details of the data fields required under both versions of the ICT Reporting Standard.

Department of Health and Human Services

DHHS has reported 51 projects since the ICT Dashboard's inception. For the December quarter 2017, DHHS is reporting 32 projects, which are valued at $132.6 million.

Figure 3B displays the implementation stages of these projects, and their status.

Figure 3B

DHHS project breakdown, by implementation stage and status, December quarter 2017

Note: Agencies apply RAG statuses at different implementation stages, so not all projects reported on the ICT Dashboard have a RAG status.

Source: VAGO, based on the data files available from data.vic.

For this audit, we reviewed eight of these DHHS projects in detail.

For the projects we reviewed, DHHS could not verify all the data reported on the ICT Dashboard and we experienced substantial difficulty in obtaining documentation for some data fields.

We identified inaccuracies in the eight projects we examined at DHHS. Although some of the inaccuracies are minor, there was a large number of data fields where the documentation provided did not match the published data, or where source documentation could not be provided.

DHHS told us that it had not had a consistent project management office (PMO) or project reporting tool. This has resulted in differing maturity of practices in project status reporting and knowledge management.

Appendix B has further details of our testing.

Department of Premier and Cabinet

DPC has reported a total of six projects on the ICT Dashboard since its launch.

For the December quarter 2017, DPC reported four ICT projects with a total planned expenditure of $102.5 million. The implementation stage and RAG status of these projects are shown in Figure 3C.

Figure 3C

DPC project breakdown, by implementation stage and status, December quarter 2017

Note: Agencies apply RAG statuses at different implementation stages, so not all projects reported on the ICT Dashboard have a RAG status.

Source: VAGO, based on the data files available from data.vic.

We reviewed one project in detail at DPC, which is also designated as a HVHR project.

DPC provided us with most of the information we needed to verify project data reported on the dashboard. We observed, however, that all of the project's costs were reported as delivery costs, when, under the ICT Reporting Standard, initiation costs should have been separately reported.

Melbourne Water

Since the ICT Dashboard's launch, MW has reported 33 projects.

MW is currently reporting 13 projects with a planned expenditure of $16.7 million, as shown in Figure 3D.

Figure 3D

MW project breakdown, by implementation stage and status, December quarter 2017

Note: Agencies apply RAG statuses at different implementation stages, so not all projects reported on the ICT Dashboard have a RAG status.

Source: VAGO, based on the data files available from data.vic.

MW provided us with documentation to verify the majority of the data reported on the ICT Dashboard.

We found six inaccuracies in the revised completion dates and costs of MW's projects. These inaccuracies do not materially detract from the time and cost reporting of MW's ICT projects.

Public Transport Victoria

PTV has reported a total of 20 projects on the ICT Dashboard since its inception.

In the December quarter 2017, PTV reported 10 projects with a planned expenditure of $89.9 million.

Figure 3E shows the breakdown of PTV's projects by implementation stage and status.

Figure 3E

PTV project breakdown, by implementation stage and status, December quarter 2017

Note: Agencies apply RAG statuses at different implementation stages, so not all projects reported on the ICT Dashboard have a RAG status.

Source: VAGO, based on the data files available from data.vic.

PTV provided us with documentation to verify the majority of the data reported on the ICT Dashboard. We found that reported dollar figures were mostly accurate, although, in one case, the amount was rounded up from an internal estimate of $9.3 million to $10 million.

PTV could not provide us with documentation to verify some data for two projects that were approved and commenced under the former Department of Transport.

PTV told us that this was due to limited documentation being handed over when the former Department of Transport transferred the projects to PTV.

3.4 Do agencies consistently apply the guidance?

Consistency of interpretation and categorisation

The ICT Reporting Standard and the ICT Expenditure Reporting Guideline for the Victorian Government provide guidance on how agencies should compile their data for reporting on the ICT Dashboard and for complying with FRD 22H.

The agencies we audited are not consistently applying the ICT Reporting Standard. We noted different interpretations by agencies of the criteria they should use to determine their overall RAG status, allocate internal staff costs to projects, and select a project category.

Application of RAG status

Agencies we audited were aware of the ICT Reporting Standard, although some of their staff were not overly familiar with its details, especially the requirements around allocating a RAG status.