Effectiveness of the Victorian Public Sector Commission

Overview

The Victorian public sector comprises approximately 3 388 entities, employs about 286 000 people, and delivers a diverse range of services, such as healthcare, schools and roads. This requires a skilled public service capable of giving comprehensive and impartial advice to government, effectively and efficiently implementing policy, and delivering services.

The Victorian Public Sector Commission (VPSC) was established in 2014 to replace the State Services Authority. Its objectives are to strengthen the efficiency, effectiveness and capability of the public sector, and to maintain and advocate for public sector professionalism and integrity.

In this audit, we examine the effectiveness of VPSC, its governance and its oversight. We focus on organisational planning across VPSC and look at how it prioritises its resources and measures its performance.

We make three recommendations for VPSC and one for the Department of Premier and Cabinet.

Effectiveness of the Victorian Public Sector Commission: Message

Ordered to be published

VICTORIAN GOVERNMENT PRINTER June 2017

PP No 255, Session 2014–17

President

Legislative Council

Parliament House

Melbourne

Speaker

Legislative Assembly

Parliament House

Melbourne

Dear Presiding Officers

Under the provisions of section 16AB of the Audit Act 1994, I transmit my report Effectiveness of the Victorian Public Sector Commission.

Yours faithfully

Andrew Greaves

Auditor-General

8 June 2017

Audit overview

Impartiality, professionalism and integrity are important foundations for the public sector. Public sector staff must act with these values in mind, to ensure the use of public funds is as effective and efficient as possible, to meet community needs and to maintain public trust. Government and Parliament must be able to rely on the public sector to deliver effective services and provide robust advice to guide decision-making.

In previous VAGO audits, we have found instances of poor leadership across multiple public sector agencies, including difficulties in creating positive and ethical organisational cultures. Agencies have not always provided robust, complete, high‑quality advice to government—a key aspect of an impartial public sector. These examples, coupled with recent integrity failures, demonstrate the public sector's need for guidance and support to deliver its work.

In Australia, public sector commissions have important responsibilities for supporting the professionalism, integrity and capability of the public sector. The Victorian Public Sector Commission (VPSC) was established in 2014 to replace the State Services Authority (SSA). Under the Public Administration Act 2004 (the Act), VPSC's objectives are to strengthen the efficiency, effectiveness and capability of the public sector, and to maintain and advocate for public sector professionalism and integrity. It undertakes many activities aimed at maintaining and improving the skills required of an effective public sector.

As VPSC's portfolio department, the Department of Premier and Cabinet (DPC) is responsible for supporting VPSC to meet its legislated obligations, and advising the Premier and Special Minister of State on the performance of VPSC and the Act.

There have been significant recent changes in the funding for Victoria's public sector commission function and in its structure. In 2013, SSA's budget was reduced by 36 per cent. SSA, and then VPSC, was expected to continue to deliver its activities within the reduced budget. In 2014, when VPSC was established, the governance structure changed with the introduction of an advisory board and the establishment of a single Commissioner.

In this audit, we examined the effectiveness of VPSC, its governance and its oversight. We focused on organisational planning across VPSC, and looked at how it prioritises its resources and measures its performance.

To understand the effectiveness of the individual activities VPSC carries out, we examined four aspects of its work—its administration of the Graduate Recruitment and Development Scheme (GRADS) on behalf of the public sector, its promulgation of codes of conduct and standards, the maintenance of two key datasets, and VPSC's organisational review function.

Conclusion

VPSC is delivering important work that is valued by its public sector clients. However, its effectiveness in strengthening the performance, capability and professionalism of the public sector is hampered by its lack of robust planning and its inadequate understanding of the impacts of its activities.

VPSC's planning activities do not comply with the Act, and it has not prioritised its activities based on a solid understanding of their costs and outcomes. While VPSC is fulfilling its statutory functions, the work it is not performing—thorough planning informed by consultation with stakeholders, as well as activities to measure and understand its impact and better target its work—is also critically important. Without an evidence-based understanding of the costs and outcomes of its work, VPSC cannot effectively target its work to the risks, challenges and opportunities facing the public sector.

VPSC's performance measurement and reporting provides information on its activities, but little insight into what these activities achieve. We found examples of VPSC's work leading to positive outcomes, but also inefficiencies and gaps that compromise VPSC's efficiency and effectiveness. Encouragingly, VPSC has recently taken action that will contribute to addressing key weaknesses we have identified.

Finally, VPSC's governance and oversight arrangements are not operating effectively. Because the advisory board is not operating as required under legislation, it is unable to make a meaningful contribution to VPSC's strategic direction. While DPC closely supports VPSC's work, it has not been fully effective as the portfolio department. Gaps in DPC's advice to government about VPSC's performance and legislative compliance mean that government has lacked a complete picture of VPSC's performance.

Findings

Strategic and annual planning

VPSC's strategic planning and annual planning are not adequate, do not provide it with clear direction and do not help it to strategically prioritise its efforts.

Since its establishment, VPSC has not complied with all of the planning requirements in its legislation, including obtaining required inputs and approvals. VPSC has made recent improvements, but more work is necessary to ensure it is systematically prioritising its resources appropriately.

When VPSC was established, it continued to deliver the same activities as its predecessor agency, SSA. It did not undertake a systematic and thorough review of activities against its functions under the Act, which would have informed its plans and helped it to work more effectively and sustainably.

In the context of financial challenges arising from a reduced budget, VPSC considered this exercise to be too resource intensive. Recognising the substantial similarity between its functions and SSA's, VPSC chose to continue delivering the existing activities.

In the past 12 months, VPSC has introduced a defined set of strategic outcomes and an annual plan that links VPSC's activities to its objectives, and is tracking the progress of these activities.

Governance and oversight

DPC and VPSC have a close working relationship. However, DPC has not fulfilled its obligations to forward VPSC's strategic and annual plans to the Premier of Victoria for consideration and approval. DPC has also not performed its role of advising the Special Minister of State and the Premier about VPSC's noncompliance with the Act.

DPC did not establish VPSC's advisory board until more than 12 months after VPSC was established. Due to delays in appointing board members, the board has met only twice in VPSC's three years of operation. As a result, the advisory board has not tangibly contributed to VPSC's strategic or day-to-day operations, and has not fulfilled its original policy objective of facilitating a longer-term strategic outlook for VPSC.

However, we note that recent changes to the advisory board's terms of reference, combined with a shift of the secretariat function to VPSC, aim to help VPSC benefit from the advisory board's expertise.

Measuring performance

We were not able to obtain sufficient assurance as to how well VPSC is performing its functions. This is largely because VPSC lacks the performance measures, reporting and evaluation of its activities that it would need for a comprehensive understanding of its performance.

VPSC's Budget Paper 3 (BP3) measures are not adequate—they do not accurately reflect VPSC's performance and outcomes because they do not comprehensively measure the effectiveness of its activities.

Case studies of key activities

We examined four of VPSC's key activities and found examples of good performance. We also found inefficiencies and gaps that compromise how efficiently and effectively VPSC works.

Our first case study focused on the organisational reviews and other reviews that VPSC conducts in response to requests from public sector agencies, the Premier and the Victorian Secretaries Board. VPSC follows well-developed project management practices to deliver these reviews and seeks opportunities for continuous improvement. Feedback from stakeholders indicates these reviews are effective and highly valued. However, VPSC could improve its performance measurement to better understand the effectiveness of these reviews.

The second case study focused on VPSC's delivery of GRADS for the Victorian public sector. Although VPSC has a solid understanding of the outputs it delivers each year, it does not have a comprehensive understanding of the program's impact. Within VPSC, an ongoing lack of strategic oversight of the program means that the long-term outcomes are not known.

The third case study examined the data collection activities that allow VPSC to fulfil its statutory obligations. There are flaws in VPSC's processes and controls which have resulted in inefficient data management. These flaws threaten the reliability of VPSC's data and the effectiveness of activities that use this data. VPSC is aware of these issues and has developed a strategy to address them.

The final case study considered VPSC's promulgation of codes of conduct and standards, which is an obligation under the Act. VPSC has not yet developed systems to understand whether its work on codes and standards is effective—instead, it relies on output-based performance measures and only measures compliance.

VPSC's Integrity Strategy, introduced in 2016, includes more active monitoring and a defined program of work, which VPSC has already begun to implement. This is a positive development that has given VPSC's codes and standards work a more strategic focus, and provides a good foundation for VPSC to improve its understanding of the impact of its work.

Recommendations

We recommend that the Victorian Public Sector Commission:

1. undertake strategic and annual planning activities that comply with its statutory obligations (see Section 2.2)

2. develop and implement a performance measurement system that demonstrates the impact of the activities it undertakes to achieve its statutory objectives (see Section 3.2)

3. implement its planned improvements to data storage, management and use (see Section 3.3).

We recommend that the Department of Premier and Cabinet:

4. advise government on the Victorian Public Sector Commission's compliance with its legislative planning obligations (see Section 2.3).

Responses to recommendations

We have consulted with the Victorian Public Sector Commission and the Department of Premier and Cabinet, and we considered their views when reaching our audit conclusions. As required by section 16(3) of the Audit Act 1994, we gave a draft copy of this report to those agencies and asked for their submissions and comments.

The following is a summary of those responses. The full responses are included in Appendix A. VPSC accepted the recommendations, but commented that VAGO's conclusions about the inadequacy of planning and prioritisation are flawed. VPSC asserted that the limitations with these activities were caused by factors outside its control. DPC accepted its recommendation and referred to existing work it is undertaking that will address the recommendation.

1 Audit context

High-quality public services are critical to the wellbeing and prosperity of the community. The Victorian public sector comprises approximately 3 388 entities, employs about 286 000 people, and delivers a diverse range of services, such as healthcare, schools and roads. This requires a skilled public service capable of giving comprehensive and impartial advice to government, effectively and efficiently implementing policy, and delivering services. The public sector must operate with absolute integrity to meet the needs of the Victorian community and maintain its trust.

Public sector commissions exist in all Australian jurisdictions, and play an important role in supporting an effective public sector. They provide guidance and set standards in areas such as merit-based employment, professional codes of conduct and good governance practice.

1.1 The public sector commission in Victoria

In Victoria, the public sector commission function was performed by the State Services Authority (SSA) from 2004 until 2014, and by the Victorian Public Sector Commission (VPSC) from 2014 onwards.

1.1.1 State Services Authority

SSA was established in 2004, under the Public Administration Act 2004 (the Act). Its role was to:

- identify opportunities to improve the delivery and integration of government services and report on the outcomes of these services

- strengthen the professionalism and adaptability of the public sector

- promote high standards of integrity and conduct in the public sector

- promote high standards of governance, accountability and performance for public entities.

In July 2012, the creation of the Independent Broad-based Anti-Corruption Commission (IBAC) changed the Victorian integrity landscape and provided an opportunity for government to reconsider the role of SSA. The Department of Premier and Cabinet (DPC) led a review of SSA in 2012, which recommended changes to SSA's governance structure and new requirements for long-term strategic planning. The Act was subsequently amended in 2014, replacing SSA with VPSC.

1.1.2 Victorian Public Sector Commission

VPSC began operating on 1 April 2014, with two objectives:

- to strengthen the efficiency, effectiveness and capability of the public sector in order to meet existing and emerging needs and deliver high-quality services

- to maintain and advocate for the professionalism and integrity of the public sector.

The Act prescribes VPSC's functions, as shown in Figure 1A. Six of the functions are specific, such as issuing codes of conduct, while others can be fulfilled in various ways.

Figure 1A

VPSC's functions under the Public Administration Act 2004

Section 39: Public sector efficiency, effectiveness and capability

(a) to assess and provide advice and support on issues relevant to public sector administration, governance, service delivery and workforce management and development

(b) to conduct research and disseminate best practice in relation to public sector administration, governance, service delivery and workforce management and development

(c) to collect and report on whole-of-government data

(d) to conduct inquiries as directed by the Premier.

(e) The Commission must perform any work falling within section 39 as requested by the Premier and may perform any work as requested by a minister or a public sector body.

Section 40: Public sector professionalism and integrity

(a) to advocate for an apolitical and professional public sector

(b) to issue and apply codes of conduct and standards

(c) to monitor and report to public sector body heads on compliance with the public sector values, codes of conduct, and public sector employment principles and standards

(d) to review employment-related actions and make recommendations following those reviews

(e) to maintain a register of lobbyists and a register of instruments.

Note: VPSC is not subject to ministerial direction or control in performing any of the functions listed in section 40.

Source: VAGO, based on the Public Administration Act 2004.

These functions are substantially the same as those of SSA, with two noteworthy differences:

- the addition of a research function—section 39(b)

- a new role as an advocate for an apolitical and professional public sector—section 40(a).

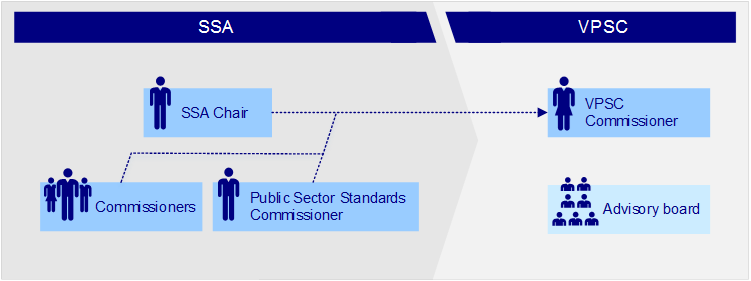

When SSA transitioned to VPSC, the number of leadership positions was reduced. Figure 1B shows these changes.

Figure 1B

Changes in governance in the transition from SSA to VPSC

Note: SSA appointed commissioners as required for short-term projects. VPSC's advisory board is appointed by the Premier and consists of up to seven members who collectively have knowledge, skills and experience in public sector, business, service delivery and regional matters.

Source: VAGO.

Under the Act, VPSC is accountable to the Premier and the advisory board. VPSC's functions under section 40 of the Act are independent and not subject to ministerial direction. Its functions under section 39 of the Act can be subject to direction from the Premier.

The Commissioner is a member of the Victorian Secretaries Board (VSB), along with the secretaries of the seven departments and the Chief Commissioner of Victoria Police. Although not a formal part of VPSC's governance structure, VSB makes requests to VPSC for work, and VPSC considers VSB an important stakeholder in its activities.

Advisory board

Under the Act, the advisory board must provide:

- assistance to ensure VPSC's work is appropriately targeted and reflects emerging challenges and opportunities

- advice on and help with the development of draft three-year strategic plans and annual plans, before they are submitted to the Premier

- strategic advice on matters relevant to VPSC's objectives and functions.

The Secretary of DPC is the chair of the advisory board, and DPC held secretariat responsibility until it transferred this responsibility to VPSC in November 2016.

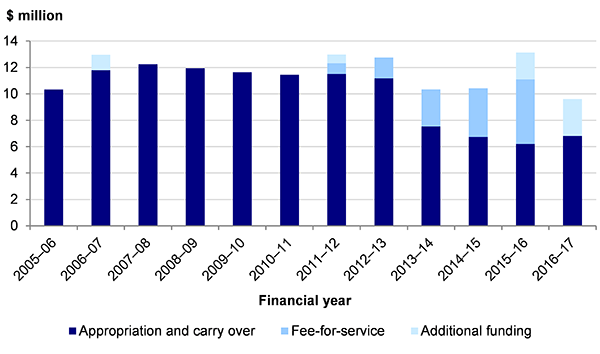

1.1.3 Operating budgets

Between 2005 and 2013, SSA operated with a budget of about $10.5–$12 million. In May 2013, SSA's budget was reduced by 36 per cent.

Since VPSC's establishment, its recurrent budget has remained around $6 million, with supplementary funding of:

- $2.05 million in the 2015–16 State Budget—for development of a strategic vision and medium-term plan, a review of internal capability and staffing profile, a business case for ongoing funding in 2016–17, and to undertake organisational reviews that align with government priorities

- $2.8 million in the 2016–17 State Budget—to cover its projected deficit, and enable it to continue to deliver its activities.

Figure 1C shows SSA and VPSC's operating budget over time.

Figure 1C

SSA and VPSC operating budget, 2005–06 to 2016–17

Source: VAGO, based on data from VPSC.

In response to the reduced budget, SSA lowered its costs by reducing staff numbers via voluntary departure packages, and reducing overheads. It also stopped promoting its guidance, and carried forward its previous-year commitments to avoid a deficit. VPSC has continued these measures.

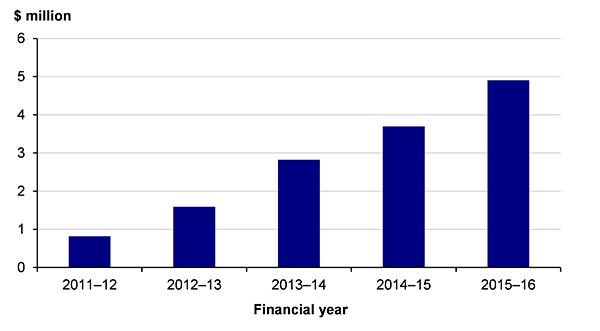

SSA also responded to the reduced budget by shifting some of its services to a fee‑for‑service model—an approach that VPSC has continued.

SSA already charged fees for several activities, such as its management of the Victorian government careers website and delivering the graduate recruitment scheme for the Victorian public service. From 2014 onwards, VPSC increased fees for these activities to better reflect their total costs. It also shifted its public sector agency review function, previously funded through appropriations, to a fee-for-service model.

Figure 1D shows the increase in SSA and VPSC's fee-for-service income from 2011–12 to 2015–16.

Figure 1D

SSA and VPSC fee-for-service income, 2011–12 to 2015–16

Source: VAGO, based on data from VPSC.

1.2 Oversight of the Victorian Public Sector Commission

VPSC is a portfolio agency of DPC. DPC is responsible for supporting the Premier of Victoria and the Special Minister of State in promoting good governance, public administration and workforce management, as well as providing advice on the Act. In fulfilling this role, DPC has an interest in the effective operation of VPSC.

From 2016, amendments to the Standing Directions of the Minister for Finance (Standing Directions), derived from the Financial Management Act 1994, have given departments clearer information on how they should conduct relationships with their portfolio agencies.

Under the Standing Directions, DPC is responsible for establishing and maintaining an effective relationship with VPSC, including:

- providing advice and support to VPSC on financial management, performance and sustainability

- supporting the Special Minister of State's oversight of VPSC, including providing information on its financial management, performance and sustainability

- providing information to the Department of Treasury and Finance's accountable officer to support government's sound financial management.

1.3 Why this audit is important

VPSC's statutory objectives focus on strengthening key elements of the Victorian public sector—effectiveness, efficiency, capability and integrity. In this way, effective delivery of VPSC's functions has a significant impact on the way the broader public sector performs its roles.

Recent investigations by IBAC have exposed significant instances of fraud and corruption within the Victorian public sector, which point to weaknesses in the structures and processes intended to ensure the public sector acts with integrity. These findings demonstrate the public sector's need for more guidance and support to ensure agencies and employees act with integrity.

VPSC has not previously been subject to a performance audit. Given recent integrity failures, this audit will provide timely and important insight into VPSC's impact and effectiveness.

1.4 What this audit examined and how

In this audit, we examined whether VPSC is effectively performing its functions. Specifically, we assessed whether:

- VPSC effectively plans and prioritises its resources to strengthen the performance, capability and professionalism of the public sector

- VPSC's governance and oversight arrangements—incorporating the advisory board and DPC—are effective and support the achievement of its objectives

- VPSC is effectively and efficiently achieving its objectives.

The audited agencies were VPSC and DPC.

We conducted our audit in accordance with section 15 of the Audit Act 1994 and Australian Auditing and Assurance Standards. The cost of this audit was $575 000.

1.5 Report structure

The remainder of this report is structured as follows:

- Part 2 examines VPSC's planning and oversight, including the operation of the advisory board and DPC's role as VPSC's portfolio department

- Part 3 examines how effectively and efficiently VPSC delivers its key activities.

2 Planning and oversight

Strategic plans and annual plans are necessary for agencies to identify priorities and set out how they will meet them. Planning should meet legislative requirements, be linked to an agency's objectives, and support allocation of resources to achieve priorities.

Under the Public Administration Act 2004 (the Act), the Victorian Public Sector Commission (VPSC) must develop:

- a three-year strategic plan every third year, identifying key challenges and opportunities for the public sector and how VPSC's priorities align with them

- an annual plan, linked to the strategic plan, detailing priority areas and key outputs and specific activities for each area.

The Act requires VPSC to develop these plans with input from its advisory board and submit them to the Premier of Victoria for approval.

As VPSC's portfolio department, the Department of Premier and Cabinet (DPC) is responsible for maintaining an effective portfolio relationship with VPSC and supporting it to meet its obligations under the Act.

2.1 Conclusion

VPSC's strategic and annual planning is inadequate—it has not met the requirements of the Act and, for most of the time VPSC has operated, it has focused on delivering immediate priorities at the expense of long-term planning that would help it achieve its objectives. As a result, VPSC cannot be sure that its short-term decision-making is helping it to achieve its long-term strategic goals.

VPSC has recently improved its planning activities, but more work is necessary to ensure it is systematically and appropriately prioritising its resources to achieve its medium- and long-term objectives.

Governance and oversight of VPSC has not been effective in helping it to overcome these issues. DPC did not successfully establish the advisory board until more than 12 months after VPSC was established. As a result of this delay, and confusion about which agency had responsibility for the secretariat function, the advisory board has not yet achieved its original policy intention.

Recent revisions to the advisory board's secretariat arrangements and terms of reference represent a new opportunity for the advisory board to perform the role intended for it under legislation.

DPC and VPSC have a close working relationship that is supportive and collaborative. Despite this, DPC has not operated effectively as a portfolio department when important issues have occurred, such as VPSC's noncompliance with its planning obligations under the Act.

2.2 Strategic and annual planning

We assessed whether VPSC has developed strategic and annual plans that meet the Act's requirements and support VPSC to achieve its objectives.

2.2.1 Preliminary planning activities following establishment

VPSC was established on 1 April 2014. We expected to see evidence that it engaged in a range of activities at its establishment, such as:

- reviewing the State Services Authority's (SSA) existing activities to understand costs, effectiveness and opportunities for efficiencies

- checking that the activities inherited from SSA aligned with VPSC's revised legislative obligations, and identifying any gaps

- engaging with stakeholders across the public sector

- assessing and diagnosing the risks facing the public sector to underpin the way VPSC prioritises its activities

- gathering any additional evidence needed to inform VPSC's first strategic plan.

These activities did not take place until February 2016. VPSC stated that it was not able to undertake such activities because it lacked sufficient resources. As the majority of its functions continued SSA's work, VPSC focused on continuing to deliver the existing services rather than reconfiguring its activities to reflect its status as a new agency.

When VPSC was established, changes were also made to its functions, legislative obligations and funding. This created a need for the new agency to review its activities, to ensure they were financially sustainable and fulfilled its legislative obligations. By not undertaking this work at its establishment, VPSC lacked a robust evidence base to inform its planning, help prioritise its activities and ensure it was able to operate effectively and sustainably within its budget.

2.2.2 Complying with planning obligations

VPSC has developed strategic plans and annual work plans, but it has not complied with its planning obligations under the Act.

The delay in establishing the advisory board meant that VPSC was unable to meet its obligation to consult with the advisory board during the development of its strategic and annual plans until October 2015.

Establishing the advisory board was DPC's responsibility and outside VPSC's control, but there are further instances of noncompliance with planning requirements that were within VPSC's control.

Strategic plans

Contrary to the requirements of the Act, VPSC has not developed a three-year strategic plan informed by its advisory board and approved by the Premier. Under the Act, the three-year strategic plan must include prescribed content, including challenges and opportunities for the public sector and how VPSC's priorities strategically align with these matters.

Instead, VPSC has developed a range of different plans, outlined below:

- A seven-year strategic plan (2014–2021)—comprising a one-page chart that did not include challenges and opportunities facing the public sector or how VPSC's strategic priorities align with them. It was not developed with input from the advisory board and was not submitted to the Premier for approval.

- A four-year strategic plan (2016–2020)—which never became active. It was underpinned by research and analysis, and includes challenges and opportunities and how VPSC's strategic priorities align with them. It was developed with input from the advisory board but was not approved by the Premier.

- A one-year strategic plan (2016–2017)—which is VPSC's current plan. It does not comply with the required three-year time frame, does not include challenges and opportunities, or how VPSC's strategic priorities align with them. It was not developed with input from the advisory board and was not submitted to the Premier for approval.

The four-year 2016–20 strategic plan is VPSC's most thorough planning exercise to date.

In 2015–16, VPSC received additional funding of about $2 million to review current operations and their alignment with government priorities for strengthening the public sector. Within this funding, $200 000 was earmarked for the development of a strategic vision and plan, and a business case to underpin a bid for additional recurrent funding.

VPSC did a range of work to develop these documents, including contextual analysis of the issues facing the Victorian public sector, consultation with other public sector commissions in Australia and New Zealand, and engagement with DPC and the Victorian Secretaries Board (VSB). The plan was discussed at the advisory board's first meeting in October 2015. However, the funding bid was unsuccessful and the plan never became active.

In February 2016, VPSC began a separate planning exercise to develop the one-year 2016–17 strategic plan, which overlapped with its work on the 2016–20 strategic plan and business case. This involved distilling the ideas in the four-year strategic plan into three strategic outcomes:

- a public sector leadership group and workforce that can deliver excellence

- public sector organisational capacity that delivers high performance

- a public sector committed to values, integrity and performance.

VPSC used this planning to inform an organisational restructure to improve the clarity and accountability of roles, and support achievement of the three strategic outcomes. VPSC advised that this new strategic planning exercise incorporated work undertaken for the 2016–20 strategic plan.

Although this plan does not meet all of the requirements of the Act, VPSC's work to align the new strategic plan to its objectives, the structure of the organisation and its activities is positive, and has helped VPSC to gain a more strategic focus.

Annual plans

Since its establishment in 2014, VPSC has not developed an annual plan that meets the requirements of the Act.

The Act prescribes specific content that should be included in the annual plan, including links with the strategic plan, priority areas for the forthcoming financial year, and key outputs and specific activities for those areas. The plan should be developed prior to the commencement of each financial year, with input from the advisory board, and should be approved by the Premier.

VPSC has prepared two plans in the past three financial years, but neither fulfils all of these requirements.

Following its establishment, VPSC developed a 2014–15 annual plan that contained the prescribed content. The advisory board was not yet in place and therefore could not be consulted, but VPSC attempted to comply with its legislated requirements by submitting the plan to DPC for transmission to the Premier for approval.

DPC reported that it provided feedback on this draft and expected that it would be revised and resubmitted. This expectation was detailed in an agenda for a meeting between the Secretary of DPC and the Commissioner. However, we have not received evidence that this feedback was provided to VPSC or that the plan was resubmitted to DPC. The plan remained in draft form and did not receive the required approvals.

VPSC did not develop an annual plan for 2015–16, advising that it instead continued to deliver its core statutory functions while developing its business case and the 2016–20 strategic directions document. It did not seek endorsement of this approach or raise it with the Special Minister of State or the Premier.

VPSC again developed an annual plan in 2016–17. This annual plan links to the current strategic plan, and identifies priority areas and specific activities. VPSC developed it between February and June of 2016 without input from the advisory board.

Following the plan's completion in June 2016, VPSC asked DPC to schedule a meeting of the advisory board to discuss the plan. This meeting did not take place until September 2016, rather than before the beginning of the financial year as required by the Act. As a result, the plan had already been in use for four months when it was discussed by the advisory board. The plan was not submitted to the Premier for approval.

Planning and responding to requests

The lack of a clearly defined plan is significant because of VPSC's legislated obligation to be responsive to requests—under the Act, VPSC is required to perform any action relating to its functions if it is asked to do so by the Premier. If a minister or a public agency makes a request related to VPSC's functions, VPSC has discretion over whether it will perform the requested action.

In practice, VPSC's resource constraints have led it to decline ad hoc requests for work from other agencies or ministers, for example, when it deems the task to be a lower priority than other review activity. This assessment occurs on an informal basis, in consideration of the relevance of the request to VPSC's core responsibilities. It is neither guided by criteria nor informed by proper planning that defines VPSC's priorities based on an assessment of public sector risks and challenges. Effective planning would enable VPSC to assess ad hoc requests against defined priorities or predetermined risks. This would provide a transparent basis for accepting, declining or deferring requests for work and reprioritising resources where necessary.

2.3 Governance and oversight

When SSA transitioned to VPSC, the number of leadership positions was reduced. VPSC's governance structure consists of:

- a single Commissioner, combining the formerly separate roles held by the SSA chair, commissioners and the public sector standards commissioner

- an advisory board, appointed by the Premier, of up to seven members, who provide a mix of knowledge, skills and experience in public sector, business, service delivery and regional matters.

We examined how effectively VPSC's governance and oversight arrangements are working, including the roles of DPC—as VPSC's portfolio department—and the advisory board.

2.3.1 Role of the Department of Premier and Cabinet

DPC and VPSC have a close working relationship, which includes:

- regular meetings between the Secretary of DPC and the Commissioner of VPSC

- regular meetings between senior executives of DPC and VPSC

- joint workshops on budget and strategic planning

- participation by DPC staff in VPSC projects, where relevant

- six-monthly Budget Paper 3 reporting from VPSC to DPC

- DPC support for VPSC management activities, including preparation of budget submissions.

Through this close and multifaceted relationship, DPC has a strong grasp of VPSC's activities, priorities and challenges. It has also worked closely with VPSC on preparing budget submissions to enhance VPSC's capability and performance. This is an important foundation for effective oversight by a portfolio department.

However, DPC could not demonstrate that it had adequately fulfilled its broader role as a portfolio department by systematically advising government about VPSC's performance, including VPSC's noncompliance with its legislated planning obligations. DPC considers that advice on legislative obligations should avoid an overly legalistic approach that prioritises advice on compliance issues over broader strategic issues. Nevertheless, such advice gives government the chance to address issues if it chooses to, and DPC has not provided this information about VPSC's performance.

DPC has briefed the Minister on VPSC's budget and funding bids. DPC provides covering briefs with any materials that VPSC submits to the Special Minister of State and the Premier. It sees this approach as a way of providing real-time monitoring of VPSC's performance. However, this approach is reactive in nature and therefore does not enable DPC to give timely advice to government about any gaps in VPSC's performance or instances where work that should be occurring is not.

DPC's responsibilities for VPSC's advisory board

DPC was responsible for establishing VPSC's advisory board and for performing secretariat functions. It began work to identify and appoint advisory board members in late 2013, but these efforts were not successful until August 2015—more than 12 months after VPSC was established. VPSC did not try to expedite the establishment of this key component of its governance structure.

Though the Secretary of DPC is required to be chair of the advisory board, DPC did not raise concerns about the advisory board's establishment and operation or compliance with legislative requirements.

DPC also did not fulfil its secretariat role, which included coordinating three meetings per year. DPC advised that this was because of an understanding that DPC would arrange the first meeting of the advisory board, and VPSC would arrange subsequent meetings and effectively assume secretariat responsibility. VPSC did not share this understanding.

Briefing materials that DPC prepared for discussions between the Secretary of DPC and the Commissioner of VPSC show that it intended to communicate this arrangement to VPSC, but it is not possible to verify whether this discussion took place.

However, the terms of reference for the advisory board—drafted by DPC and approved by the Special Minister of State in July 2015—specify that DPC has secretariat responsibility. This inconsistency between working knowledge and documented procedures resulted in confusion about which agency was responsible for the secretariat function.

This confusion persisted until June 2016, when VPSC contacted DPC twice to arrange the second meeting of the advisory board. This second meeting took place in September 2016, and included discussion of the secretariat function to clarify responsibilities going forward.

2.3.2 Role of the advisory board

VPSC's advisory board has not operated as required under the Act. As a result, VPSC's governance structure has not been functioning as it is intended to under the legislation, and VPSC has not been able to realise the policy intention that underpinned the establishment of the advisory board.

This includes providing assistance in developing strategic and annual plans, advice on appropriately targeting VPSC's work, and advice on matters relevant to VPSC's objectives and functions.

Under the Act, VPSC must consult the advisory board:

- to inform development of its three-year strategic plan

- before each financial year, to develop its annual work plan.

The advisory board did not meet until October 2015, 17 months after VPSC began operating, and it has met only twice since VPSC was established. With the exception of the 2016–20 strategic plan, which was never enacted, VPSC has not met requirements to consult the advisory board on its strategic and annual plans, as outlined in Section 2.2.2.

Fulfilling the policy intention of the advisory board

The policy intention that underpinned the establishment of the advisory board was to give VPSC a longer-term strategic outlook. This need was first identified in DPC's 2012 review of SSA. The review noted that, under SSA's governance structure, multiple commissioners were appointed to lead individual projects, which led to a short-term focus on outputs. It went on to recommend the establishment of an advisory committee to set strategic direction for the agency by developing triennial strategic plans and annual work plans.

These recommendations were reflected in amendments to the Act that coincided with the establishment of VPSC. The Act defined the composition of the advisory board—specifically that its members have a mix of knowledge and skills pertaining to the public sector, business, service delivery and regional matters. DPC advised government that the advisory board would also help VPSC to operate within the recently reduced budget, by ensuring it appropriately targeted its efforts.

The delay in establishing the board has exposed VPSC to multiple risks, specifically that:

- it may remain focused on outputs at the expense of longer-term priorities and delivering activities that make progress towards them

- its work program and allocation of resources may be open to influence from other stakeholders, such as the members of the VSB who do not have a legislated role in shaping VPSC's organisational priorities

- its work program may not be shaped by the mix of expertise offered by members of the advisory board

- it may not be able to redefine its budget and expenditure in response to priorities defined by the advisory board.

VPSC recognises that it has not yet benefited from the input that the advisory board was intended to provide, but it has taken actions to do so.

Following discussions at the second meeting of the advisory board, its terms of reference were amended to:

- transfer secretariat responsibility to VPSC

- reduce the minimum number of meetings to one per year

- make the Commissioner of VPSC a standing invitee to board meetings

- introduce the opportunity for board members to contribute to individual VPSC projects in an advisory capacity.

These changes reflect the critical role of the advisory board in VPSC's governance structure. They should help VPSC to meet the schedule for strategic and annual planning and coordinate the advisory board's activities.

Since this change, VPSC has engaged individual advisory board members on a range of projects, including its executive officer review program, the Victorian Leadership Academy and the Integrity Strategy. These are positive steps towards achieving the intended benefits of having an appropriately skilled and engaged advisory board.

VPSC will need to ensure that it also engages the advisory board in annual and strategic planning to comply with its legislation, and realise the board's policy intention.

3 Efficiency and effectiveness

Working efficiently and effectively enables public sector agencies to make the best use of scarce resources and achieve their objectives. Robust performance information is important for agencies to understand how well they are performing. However, measuring performance can be complex, particularly when agencies are trying to measure the impact of their activities.

The Victorian Public Sector Commission's (VPSC) objectives, set out in the Public Administration Act 2004 (the Act), are complex to measure. This is because they have multiple parts that are not all within VPSC's control. A 2012 review of the State Services Authority (SSA) conducted by the Department of Premier and Cabinet (DPC) identified the need for improved performance reporting but also noted the difficulty of doing so for a central agency like SSA (later VPSC) that has no clear, attributable outcomes.

In this Part, we examined four case studies to understand the effectiveness of individual activities VPSC carries out, as well as its overall performance measurement.

3.1 Conclusion

We were unable to conclude on how effectively VPSC is achieving its objectives. VPSC's performance measurement is too limited to provide insight into the impact of its work. As a result, it is not possible to determine the extent to which it is achieving its objectives to strengthen the efficiency, effectiveness and capability of the public sector, and to maintain and advocate for public sector professionalism and integrity.

Our assessment of four of VPSC's key activities highlighted that it fulfils its statutory obligations. We found examples of good practice, where VPSC has effectively managed activities to achieve positive results. We also found examples of inefficiencies and gaps that compromise the efficiency and effectiveness of VPSC's work. VPSC has implemented some recent improvements across some of the areas that we examined and it is planning more. These are encouraging signs.

A critical gap is VPSC's understanding of its own performance. Addressing this gap is not just good practice, but also provides better assurance that VPSC's resources are being used effectively.

3.2 Understanding performance

To understand VPSC's efficiency and effectiveness, we examined VPSC's performance measurement, monitoring and reporting, and the extent to which VPSC's Budget Paper 3 (BP3) measures accurately measure its performance.

Performance measurement

VPSC's performance measurement is limited, and provides little insight into the effectiveness or efficiency of its activities. This affects not only VPSC's ability to understand the effectiveness of its operations, but also its ability to advocate for additional funding. As an example, VPSC's 2016 business case lacked evidence of VPSC's effectiveness and was not supported by robust performance information. The Department of Treasury and Finance advised government that the case for additional funding had not been fully demonstrated.

In the development of its 2016–17 annual plan, VPSC developed 101 mostly output‑based performance measures for the activities included in the plan, but it does not report against these measures. This is a key weakness and it means that VPSC cannot be sure that it is directing its resources to activities that are most effective in addressing the key risks and challenges facing the public sector.

Instead, VPSC's executive team monitors progress of some, but not all, of the activities in the annual plan through bi-monthly status reports. These status reports began in 2016 and indicate whether each activity is on track or complete, and what stage it is at. Prior to this, no status reporting of annual plan activities occurred. Individual business areas, such as VPSC's Integrity and Advisory team, also undertake routine status updates against their specific projects and activities.

This lack of performance measurement is a significant gap. While status updates are a recent improvement, they need to be supplemented with robust performance information. This is essential for VPSC's executive team to make informed decisions about how it will allocate public resources. Further, this limited performance measurement compromises VPSC's understanding of the effectiveness and efficiency of its activities, and whether it is achieving its objectives.

Other ways of understanding performance

VPSC's annual report is the principal tool that it uses to demonstrate its accountability to Parliament and the public. VPSC's annual reports consist mainly of descriptive information about activities and the volume of work undertaken, rather than focusing on the quality of the work or outcomes.

VPSC's 2015–16 annual report gives little insight into VPSC's performance, and provides limited information about the effectiveness of VPSC's activities. The information included about the Graduate Recruitment and Development Scheme (GRADS) reports on its increased communications activities and subsequent increases in application rates, but this information is limited and it is not included for all of VPSC's activities.

Ineffective planning is one factor that has contributed to the weakness of VPSC's annual reporting (see Section 2.2). Without better measures and performance reporting, VPSC's annual reports will not be able to provide useful information.

We acknowledge the challenges associated with measuring and reporting performance, but it is essential for transparent and accountable use of public funds and the achievement of policy goals. Furthermore, not effectively measuring performance hampers VPSC's requests for increased funding, as evidenced by VPSC's unsuccessful 2016 business case.

The New Zealand State Services Commission (NZSSC) has a well-developed set of performance measures that could be a useful guide for VPSC. NZSSC's four-year plan contains a set of objectives, outcomes, impact and output measures and targets that help it understand its performance (see Appendix B for an example).

Most of VPSC's activities have only one measure, which limits understanding of its performance. In contrast, NZSSC uses a set of measures for each outcome, enabling a more comprehensive understanding of performance against cost, quality and output‑based measures.

Although NZSSC has a different role to VPSC, VPSC should consider adapting these measures or adopting a more comprehensive set of measures for each of its activities to enable a better understanding of its performance.

BP3 measures—service delivery

BP3 is published annually, and details the goods and services or outputs that departments are funded to deliver, and how they support government's strategic objectives. BP3 includes performance measures for monitoring departments' outputs.

The Victorian Government Performance Management Framework (PMF) requires measures to be appropriate and easily understood. They must consider timeliness, quality, quantity and cost, and be benchmarked over time and for comparison with similar activities in other jurisdictions. Performance measures should also demonstrate efficiency and effectiveness.

Portfolio departments are subject to the mandatory requirements of the PMF, and the BP3 measures should be consistent with the requirements and guidance in the PMF.

VPSC's BP3 measures do not provide an adequate understanding of VPSC's effectiveness. DPC has not effectively met its responsibility to ensure that the BP3 measures set for VPSC meet the requirements of the PMF.

Figure 3A shows VPSC's BP3 measures.

Figure 3A

VPSC's BP3 measures

| Measure | Description | Target | 2014–15 actual | 2015–16 actual | 2016–17 expected |
|---|---|---|---|---|---|
| Quantity | Advice and support provided to the public sector on relevant issues | 80 | 80 | 80 | 80 |
| | Number of referred reviews(a) underway or completed aimed at improving service delivery, governance and/or public administration efficiency and effectiveness | 5 | 5 | 5 | 5 |
| Quality(b) | Proportion of recommendations arising from reviews of actions reported to be implemented by the public service | 100% | 100% | 100% | 100% |
| Timeliness(c) | Proportion of data collection and reporting activities completed within target time frames | 100% | 100% | 100% | 100% |

(a) Referred reviews are organisational reviews requested by the Premier or ministers, not fee-for-service reviews.

(b) VPSC's quality measure refers to reviews undertaken in relation to Employment Standards.

(c) For 2015–16, VPSC's target for timeliness was 90 per cent.

Source: VAGO, based on BP3, Service Delivery.

From 2014–15 to 2015–16, VPSC met all of the targets in its BP3 measures, and is expected to do so in 2016–17. However, VPSC's BP3 measures are not a reliable means of understanding its performance because they do not comprehensively demonstrate its effectiveness.

VPSC has met both of its quantity measures every year since 2010. It does not provide any supplementary information that demonstrates why these quantity targets are reasonable, and the targets themselves do not provide any insight into performance.

In contrast, the quality measure addresses effectiveness by measuring the recommendations arising from reviews of actions in relation only to the Employment Standards: that is, agencies' actions following VPSC's recommendations after investigating complaints from public service employees about recruitment or other employment-related activities.

This is a proxy measure for understanding the impact of recommendations, and it provides an understanding of the quality or effectiveness of VPSC's work on promulgating codes of conduct and standards. However, VPSC has no control over the reliability of this measure because it relies on unverified reports from departments. Further, this work is not a large part of VPSC's activities.

VPSC's timeliness measure is a more useful measure of its efficiency—it measures the volume of services provided within a specific time frame.

As VPSC's role is to lead good governance in the public sector, its BP3 measures should sufficiently measure its performance.

3.3 Case studies of key activities

Because of VPSC's limited performance measurement and reporting, we examined its efficiency and effectiveness by looking at its delivery of four key activities:

- organisational reviews

- GRADS

- codes of conduct and employment standards for the Victorian public sector

- Workforce Data Collection and People Matter Survey.

We selected these activities to cover VPSC's two key divisions—leadership and workforce, and performance and integrity. These activities also reflect differences in the legislative basis of VPSC's activities—organisational reviews and GRADS align with VPSC's responsibilities under the Act, but are not explicitly stated functions. In contrast, its work on codes of conduct, employment standards and data collection are explicitly stated functions.

We considered the effectiveness and efficiency of these activities.

VPSC undertakes a range of activities to understand the effectiveness and efficiency of the four key activities we examined, including seeking stakeholder feedback, analysing data and undertaking reviews of its work. However, these activities provide a limited understanding of VPSC's performance, and it does not have a clear understanding of the overall effectiveness of these activities and what outcomes they are achieving.

The following Sections provide further insights from our assessments of the four activities we looked at.

3.3.1 Organisational reviews

One of the established functions of VPSC, and SSA before it, is undertaking reviews of part or all of a public sector agency. From 2005 to 2014, SSA completed 75 reviews, and from April 2014 to 2016, VPSC completed 22. VPSC's reviews are delivered under section 39(1)(a) of the Act (see Section 1.1.2).

We examined two reviews:

- Organisational Capability Review of Ambulance Victoria, 2016 (the AV review), commissioned by Ambulance Victoria (AV)

- Monitoring of Department of Education and Training (DET) Integrity Reforms (the DET review), a two-stage review undertaken in 2015–16, commissioned by the Minister for Education.

VPSC develops the method, deliverables, project schedule and project management tools for each individual review, to reflect its specific terms of reference and context.

Key findings

Feedback from stakeholders indicates that VPSC's organisational reviews are effective and highly valued. We also found that VPSC follows well-developed project management practices in undertaking its reviews. Further, VPSC uses client surveys to gain insights into its performance and is committed to continuously improving its review practice.

However, VPSC could make improvements to better understand and improve the efficiency and effectiveness of its review activities—this would enhance the effectiveness of VPSC's broader work by ensuring its review activities address public sector issues directly relating to VPSC's priorities.

Planning and delivery

Both the AV review and the DET review demonstrated sound planning and were delivered efficiently within agreed time frames. The AV review was also delivered within budget. Both were subject to ongoing monitoring while they were underway and, at their conclusion, VPSC's team conducted an internal review to reflect on the process, identify effective practices, and identify room for improvement.

The documentary evidence we examined for the AV review demonstrated more comprehensive project planning and monitoring than that for the DET review, which reflects the different requirements for fee-for-service reviews compared to reviews funded out of VPSC's budget.

The chief executive officer (CEO) of AV approved the project brief, which defined the objectives, methodology, governance, cost and time line of the AV review. The AV review's progress and costs were monitored through weekly meetings between the review director and VPSC executives. The AV CEO confirmed that VPSC worked to efficiently manage the costs and time lines of the project.

The Minister for Education approved the project plan, time line and deliverables for the DET review. Regular monitoring occurred through meetings between the review director and VPSC executives. Because the review was funded from VPSC's budget, it was not necessary to monitor all costs, but doing so may assist VPSC to find efficiencies in its work and apply these to future reviews.

The AV CEO highlighted the value of the AV review and the professionalism of VPSC staff. This value is further evidenced by the fact that VPSC continues to receive requests to undertake reviews from public sector clients.

Use of lead reviewers

For many of its reviews, VPSC appoints one or more external lead reviewers, who are usually senior, highly experienced public servants. Both the AV and DET reviews used lead reviewers. However, the costs associated with a lead reviewer are high—for example, they accounted for 23 per cent of the AV review budget. Despite this, VPSC does not evaluate the contribution of lead reviewers, nor do VPSC's client surveys ask questions about the value of the lead reviewer from the client's perspective.

VPSC has advised that it will begin evaluating the contribution of lead reviewers, including seeking clients' views. This should enable it to find efficiencies in the way it allocates resources and understand the effectiveness of lead reviewers.

Tracking the implementation of recommendations

Both DET and AV accepted the findings and recommendations of the reviews. However, although VPSC makes recommendations through its reviews, it does not have a formal practice of tracking their implementation. Implementation is outside VPSC's control, but tracking the changes that result from VPSC's review work would provide important evidence of how it is fulfilling its statutory objectives.

VPSC is helping AV to implement its review recommendations, but this occurs on a case-by-case basis rather than for every review.

Similarly, VPSC has not systematically leveraged insights gained through its organisational reviews as a way to identify broader issues across the public sector. VPSC undertook an important first step towards doing this in April 2017, by aggregating the issues it had identified in its organisational reviews to shape its future initiatives.

Measuring effectiveness

VPSC reviewed the effectiveness of its review activities both during and at the end of the DET review. It did so by determining what work practices were effective and how to manage the relationship, given that DET did not commission the review.

VPSC has 10 measures relating to seven review activities in its 2016–17 annual plan, but these are output based or relate to endorsement from the review commissioner. Figure 3B shows the performance measures for three of the activities.

Figure 3B

Review activities and measures

| Activity | Performance measure |
|---|---|
| Subject to the direction of the Premier, lead implementation of key recommendations arising from the Review of Victoria's Executive Officer Employment and Remuneration Framework: | |
| | Reviews supported by Premier and relevant minister and portfolio secretary |
| | Panel established |
| | Commission designed |

Source: VAGO, based on VPSC.

The last two of these measures are output-based and do not provide an understanding of effectiveness. The first measure, which focuses on support for the review from the Premier and relevant minister and portfolio secretary, is appropriate because it measures whether the output aligns with the specifications of the review. In this way, it acts as a proxy measure for quality. However, it does not provide an understanding of the efficiency of the activity and, as a result, is not comprehensive.

VPSC does not report against these measures, but provides high-level information to the VPSC executive about the status of projects. This limits VPSC's ability to improve its review activity, to understand whether reviews are successful, and to apply review findings to its broader activities within the public sector.

Responses to the reviews from AV and the Minister for Education indicate that review commissioners value VPSC's reviews. However, lack of reporting against these measures is a missed opportunity for VPSC to demonstrate its effectiveness rather than relying on the ongoing demand for its review services and positive feedback from clients.

3.3.2 Victorian Graduate Recruitment and Development Scheme

VPSC delivers GRADS on behalf of participating public sector agencies. GRADS is a 12‑month employment program intended to recruit high-performing graduates to meet the current and future needs of the Victorian public service. VPSC is responsible for coordinating agencies' participation in the program, marketing and promotion, procurement and contract management, delivery of a learning and development program for graduates, and supporting stakeholders.

In 2016, VPSC's management of GRADS resulted in recruitment and learning and development of 83 people in 11 different agencies.

The total cost of delivering the program in 2016 was $1.596 million. VPSC delivers the GRADS program on a cost-recovery basis: participating agencies cover all costs, which are charged on a fee-per-graduate basis. This fee model means that the higher the number of participants in the program, the lower the cost per graduate for departments.
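As a rough illustration of this fee model, the sketch below divides the 2016 program cost by the 83 graduates reported above. It assumes that both figures relate to the same intake and that costs are shared evenly across graduates; the report does not state the actual fee charged. The symbols F (costs that do not vary with intake size), v (cost per graduate) and n (number of graduates) are hypothetical and are used only to show why the per-graduate fee falls as participation rises.

```latex
% Average 2016 cost per graduate, assuming an even split across the 83 graduates
\[
  \frac{\$1\,596\,000}{83} \approx \$19\,229
\]
% Hypothetical cost-recovery fee with fixed costs F, per-graduate cost v and n graduates
\[
  \text{fee}(n) = \frac{F}{n} + v , \qquad \text{which decreases as } n \text{ increases when } F > 0
\]
```

Under these assumptions, it is the fixed share F/n that shrinks as more graduates are placed through the program.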

Key findings

VPSC has a solid understanding of the GRADS program's performance each year, but does not have a comprehensive understanding of its impact. There is an ongoing lack of strategic oversight of the program, which means that the long-term outcomes are not known.

Seeking feedback and reviewing the program

VPSC regularly seeks feedback on the management and operation of the program from graduates and other stakeholders, both formally—through committees and surveys—and informally. VPSC uses this feedback to improve the way it manages GRADS—for example, it recently changed its model for delivering learning and development from multiple providers to a single provider.

While VPSC reviews the program and seeks feedback, it could improve the way it delivers GRADS so it can gain a greater understanding of the effectiveness and efficiency of the program. A comprehensive review of GRADS is scheduled for 2017, which should assist VPSC to do this.

Strategic oversight

SSA established the GRADS Governance Board in 2013, to improve strategic oversight of the program in response to a recommendation from a 2012 review. The governance board continued to operate under VPSC. However, VPSC advised that the board did not have any strategic impact, and it was disbanded in 2015.

After the board was disbanded, the Human Resources Directors Network, made up of representatives from the seven departments, was intended to provide a strategic oversight role. However, VPSC's engagement with the network has been limited and the network is largely informal. This arrangement has not provided any strategic oversight of GRADS.

As a result, VPSC's management of GRADS does not incorporate the strategic direction needed to identify and respond to the future needs of the Victorian public sector. There is little understanding of the long-term effectiveness of the program, and GRADS does not have defined objectives to work towards or measures to help evaluate its performance. There is currently no governance structure in place to facilitate these activities.

Measuring effectiveness

VPSC's 2016–17 annual plan contains a range of measures for GRADS, but they are output-based and VPSC does not report against them. However, VPSC did report on the effectiveness of its marketing and attraction strategies for GRADS in its 2015–16 annual report—for the 2016 intake, VPSC created a video and changed its broader attraction strategy, which led to a 27 per cent increase in applications for graduate roles.

Gathering data

VPSC could improve its review activities for GRADS by collecting longer-term data on retention, which would help it to understand the longer-term effectiveness of the program and whether the costs of participating are an efficient means of recruitment for agencies. VPSC does not currently undertake this work.
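As an illustration of the kind of longer-term measure described above, the following sketch calculates a retention rate for a graduate cohort at a chosen date. It is an assumption about how such data might be structured, not a description of any VPSC system; the record layout, the `exit_date` field and the sample cohort are all hypothetical.

```python
from datetime import date


def retention_rate(graduates, as_at):
    """Share of a cohort still employed in the public sector on the `as_at` date.

    A graduate counts as retained if they have no exit date, or if their
    exit date falls after `as_at`.
    """
    if not graduates:
        raise ValueError("cohort must contain at least one graduate")
    retained = sum(
        1 for g in graduates
        if g["exit_date"] is None or g["exit_date"] > as_at
    )
    return retained / len(graduates)


# Hypothetical cohort records, for illustration only.
cohort = [
    {"id": "G001", "exit_date": None},
    {"id": "G002", "exit_date": date(2018, 3, 1)},
    {"id": "G003", "exit_date": None},
    {"id": "G004", "exit_date": date(2017, 6, 30)},
]

one_year_rate = retention_rate(cohort, date(2017, 12, 31))
two_year_rate = retention_rate(cohort, date(2018, 12, 31))

print(f"Retained after one year: {one_year_rate:.0%}")
print(f"Retained after two years: {two_year_rate:.0%}")
```

Tracking the same cohort at several dates would show how retention changes over time, which is the information needed to judge whether participation costs are an efficient means of recruitment.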

VPSC does not collect raw application and assessment data on education, cultural background or other demographic information from applicants. VPSC could use this data to better understand the pool of applicants which, in turn, could help it to identify possible efficiencies within the application and assessment process and contribute to broader VPSC initiatives around workforce management. Given the strategic priority that VPSC places on data, this is a missed opportunity.

3.3.3 People Matter Survey and Workforce Data Collection

Collecting and reporting on whole-of-government data is one of VPSC's core functions under the Act. VPSC collects and maintains six major datasets, shown in Figure 3C.

Figure 3C

Major datasets maintained by VPSC

| Dataset | Description |
|---|---|
| People Matter Survey (PMS) | Results of an annual survey on employees' views about the application of public sector values and employment principles in their workplaces |
| Workforce Data Collection (WDC) | Data from an annual census of all public sector employees |
| Executive Data Collection | Data from an annual census of public sector executives |
| Government Sector Executive Remuneration Panel (GSERP) | Data on remuneration of public sector executives |
| Progression Data Collection | Data on progression outcomes for the public sector |
| Government Appointments and Public Entities Database (GAPED) | Data on appointments and composition of public sector boards |

Source: VAGO.

These datasets are essential for VPSC to fulfil its statutory functions of:

- monitoring adherence to public sector values and codes of conduct

- reporting to agency heads on their organisations' workforces, and their adherence to the codes of conduct.

These data collections are also an important input for publications such as the annual report State of the Public Sector in Victoria and other initiatives.

We examined VPSC's management of two key datasets: PMS and WDC.

Key findings

Timeliness of data collection activities

VPSC has delivered its data collection activities within agreed time frames. VPSC's BP3 timeliness measure looks at the proportion of its data collection activities completed within agreed time frames, as an indicator of the efficiency of its activities.

In 2015–16, VPSC exceeded its timeliness target of 90 per cent, delivering 100 per cent of its activities on time.
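The timeliness measure is a simple proportion, which can be sketched as follows. The 90 per cent target is taken from the text above; the number of data collection activities is not stated in this report, so the list of five activities below is hypothetical.

```python
def timeliness_rate(on_time_flags):
    """Percentage of activities completed within agreed time frames."""
    if not on_time_flags:
        raise ValueError("at least one activity is required")
    return 100 * sum(on_time_flags) / len(on_time_flags)


TARGET_PER_CENT = 90  # 2015-16 target reported above

# Hypothetical list of activities: True means delivered on time.
activities = [True, True, True, True, True]

result = timeliness_rate(activities)
meets_target = result >= TARGET_PER_CENT

print(f"Timeliness: {result:.0f} per cent (target {TARGET_PER_CENT} per cent)")
```

A measure of this kind indicates whether activities were delivered on schedule, but says nothing about the accuracy or security of the data collected.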

Addressing issues with data accuracy, sustainability and security

Although VPSC exceeded its timeliness measures, issues with data management documentation, data storage, obsolescence of data collection platforms and manual data validation methods create risks to the accuracy, sustainability and security of the data. VPSC's Data System Strategy 2016–19 details these issues and their impact on its efficiency, as well as how they will be addressed.

One example of this is VPSC's decision to replace the obsolete Workforce Analysis and Collection Application (WACA) tool—the first stage of this strategy. VPSC included this action in its 2016–17 annual plan and is currently considering options for how to progress this action.

VPSC previously made efforts to replace the WACA tool. It prepared procurement documentation in 2015, but the project did not proceed.

It is important for VPSC to follow through on its current attempt.

Processes and controls

Our analysis of the processes associated with PMS and WDC shows they are sufficient for VPSC to fulfil its statutory obligations. However, there are flaws in VPSC's processes and controls that create a risk that the data may be unreliable. These risks have not materialised, and the PMS and WDC data we tested was accurate. However, these risks continue to threaten VPSC's ability to deliver activities that rely on data analysis.

VPSC validates and investigates its data, but documentation for these activities is incomplete.

WDC is collected through WACA, but this tool is no longer supported by the original developer and cannot be repaired or enhanced. VPSC has attempted to manage this issue by using additional spreadsheets to support its own data validation. However, this process is manual and highly inefficient.

VPSC previously used the WACA tool for WDC and VPS executive data collections. However, due to WACA's declining functionality, the VPS executive data collection is now received by email and manually stored in spreadsheets, so it is not subject to appropriate data warehousing or security. These issues highlight the importance of VPSC following through on its plans to replace WACA.

Reporting survey results back to clients

VPSC has recently worked to enhance its use of data by developing new products to report the results of its data collection back to clients. These include:

- presenting PMS results as heat maps to make data more meaningful

- preparing 'data insights' reports on a range of specific topics, using data from the 2016 PMS survey

- piloting data dashboards for each department to enable them to gain insights and use this data for their planning.

These are positive developments that demonstrate VPSC's efforts to leverage its valuable datasets. However, as VPSC's use of data increases, so too does the need for it to address issues with management and storage of its datasets.

3.3.4 Code of conduct and employment standards

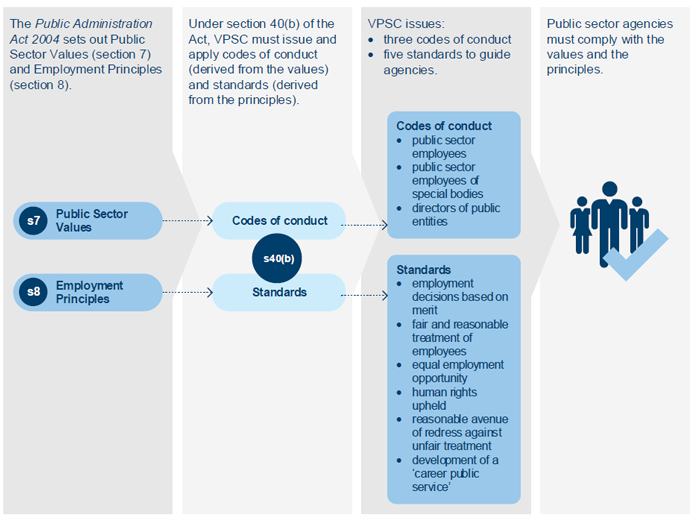

Under the Act, two of VPSC's functions are issuing and applying codes of conduct derived from the Public Sector Values, and issuing and applying standards derived from the Employment Principles. VPSC's codes of conduct and standards work is linked with its statutory objective of maintaining and advocating for public sector professionalism and integrity.

Figure 3D shows VPSC's responsibilities for issuing and applying codes of conduct and standards.

Figure 3D

Values, principles, standards and codes

Note: The Codes of Conduct for VPS Employees and Employees of Special Bodies were issued in 2007, and updated and reissued in 2015. The Code of Conduct for Directors of Victorian Public Entities was issued in 2006, and updated and reissued in 2016.

Source: VAGO.

Public sector agencies' employment processes must be consistent with the principles and the standards. There are six standards:

- fair and reasonable treatment

- merit in employment

- equal employment opportunity

- human rights

- reasonable avenue of redress

- career public service.

Codes of conduct are derived from the values set out in the Act, so they remain relatively stable unless there are changes to the legislation. VPSC updated the codes of conduct in 2015 and 2016, prompted by the changes to the Act in 2014 that created VPSC.

The Standards for the Application of Public Sector Employment Principles (the standards) are mandatory. They were originally issued in 2006 and were updated and reissued by VPSC in January 2017. VPSC communicates these changes to heads of public agencies.

Section 40(1)(c) of the Act requires VPSC to monitor and report to the heads of public sector agencies on compliance with the values, codes, principles and standards. VPSC's performance and analytics team does this annually, using the results of the PMS.

VPSC's integrity and advisory team responds to enquiries from public sector employees about their employer's compliance with the standards, investigates these enquiries and makes recommendations to public sector agencies to help them comply. In 2016, VPSC received 179 enquiries. VPSC exercised formal information request powers and made recommendations on 13 of these.

Key findings

VPSC's codes and standards work complies with the requirements of the Act.

Developing a program of work

Through the Integrity Strategy, introduced in 2016, VPSC has introduced more active monitoring of its codes of conduct and standards work and a more defined, consolidated program of work than it had previously. These are positive steps.

The Integrity Strategy aims to strengthen integrity and promote sustained community and government trust in the Victorian public sector. The strategy sets a program of work for the Integrity and Advisory team, but VPSC has not yet developed systems to help it understand how effective its codes of conduct and standards work has been.

VPSC's performance measurement in this area is output-based and measures compliance with the Act, rather than the effectiveness of its activities.

VPSC has undertaken activities to apply PMS results to planning codes of conduct and standards work under the Integrity Strategy, but this work is not systematic and occurs on an ad hoc basis. For example, VPSC's analysis of 2016 PMS results helped it to identify a cohort of departments with bullying and harassment issues. It is currently developing resources to help departments improve their performance in these areas.

Identifying issues and monitoring compliance

As part of the Integrity Strategy, VPSC is in the early stages of developing a matrix to identify public sector agencies that need help to comply with codes and standards. A small number of them will be selected for annual review. These reviews aim to identify noncompliance before a breach occurs and should enable VPSC to become more effective in targeting compliance issues with the codes and standards.

The Integrity Strategy includes an initiative to use data from the PMS, reviews and enquiries to identify emerging issues, which should enable VPSC to better exploit its datasets to anticipate sector issues and, potentially, to reduce the number of enquiries that VPSC must address.

VPSC has also undertaken some preliminary analysis activities, using the results of its organisational reviews to identify key areas of concern.

VPSC's activities in relation to bullying and harassment, the Integrity Strategy and its anticipation of future demand demonstrate a willingness to be responsive to emerging issues. While this work is in its early stages, VPSC is taking positive steps to develop a more strategic approach to its work.

Appendix A. Audit Act 1994 section 16—submissions and comments

We have consulted with the Victorian Public Sector Commission and the Department of Premier and Cabinet, and we considered their views when reaching our audit conclusions. As required by section 16(3) of the Audit Act 1994, we gave a draft copy of this report, or relevant extracts, to those agencies and asked for their submissions and comments.

Responsibility for the accuracy, fairness and balance of those comments rests solely with the agency head.

Responses were received as follows:

- Victorian Public Sector Commission

- Department of Premier and Cabinet

RESPONSE provided by the Commissioner, Victorian Public Sector Commission

RESPONSE provided by the Secretary, Department of Premier and Cabinet

Appendix B. Public Sector Values and Employment Principles

Under the Public Administration Act 2004 (the Act), two of VPSC's functions are issuing and applying codes of conduct derived from the Public Sector Values, and issuing and applying standards derived from the Employment Principles.

Figure B1 lists the Public Sector Values set out in the Act.

Figure B1

Public Sector Values

|

(a)Responsiveness—public officials should demonstrate responsiveness by—

(b)Integrity—public officials should demonstrate integrity by—

(c)Impartiality—public officials should demonstrate impartiality by—

(d)Accountability—public officials should demonstrate accountability by—

(e)Respect—public officials should demonstrate respect for colleagues, other public officials and members of the Victorian community by—