Responses to Performance Engagement Recommendations: Annual Status Update 2025

Review snapshot

Are public sector agencies implementing our performance engagement recommendations in a timely way?

Why we did this review

Our performance engagements assess if government agencies are effectively and efficiently meeting their objectives, economically using resources and complying with legislation.

They often identify opportunities for improvement, which we reflect in our recommendations.

There is no legislative requirement for audited agencies to accept, complete or publicly report on our recommendations.

We do this annual review to monitor how agencies have addressed our recommendations. This makes agencies’ responses and actions more transparent to Parliament and Victorians.

Key background information

Source: VAGO.

What we concluded

Agencies told us they accepted and have completed most of our recommendations. Nothing came to our attention to suggest agency attestations are unreliable.

Of the 1,260 recommendations from reports we tabled between 1 July 2019 and 30 June 2024, agencies:

- accepted 96 per cent, the same percentage as last year

- completed 82 per cent of the accepted recommendations, which is a decrease from 85 per cent last year

- took a median of 11 months to complete them, which is faster than a median of 13 months last year

- completed only 35 per cent by their initial target completion date.

Agencies reported they had not resolved 18 per cent of accepted recommendations, compared with 15 per cent last year. Agencies should complete these recommendations to address the risks we identified in our reports.

1. Our key findings

What we examined

Our review followed 2 lines of inquiry:

1. What is the status of all recommendations we made between 1 January 2017 and 30 June 2024, including those that were accepted but unresolved at 30 June 2024?

2. Do agencies have an evidence-based and reliable assurance process about the status of recommendations they accepted?

To answer these questions we examined the 60 agencies we made recommendations to between 1 July 2019 and 30 June 2024. We also surveyed agencies with unresolved recommendations from before July 2019.

Terms used in this report

Agency

In this report, an agency is any public service organisation that meets the definition of a public body under the Audit Act 1994. This includes government departments, local councils, health and education services and other authorities.

Attestation

An attestation is a confirmation from a staff member on behalf of a surveyed agency that its response is accurate and complete to the best of their knowledge.

Engagement

An engagement is a performance audit or review.

Recommendation

A recommendation is a formal suggestion we provide to an agency after a performance audit or review, and publish in our reports. Recommendations usually focus on how an agency can improve its performance or address a risk.

Tabling date

The tabling date is the date we formally present a report to Parliament.

Background information

We do performance engagements to assess if Victorian public sector agencies, programs and services are operating effectively, efficiently and economically, and complying with relevant legislation.

At the end of an engagement we table a report in Parliament that outlines our findings and recommendations to agencies about how they can improve their performance.

We give agencies the opportunity to respond to our recommendations before we table our reports. We publish their responses in our reports. Agencies can accept each recommendation:

- in full

- in part

- in principle

- not at all.

Some agencies also provide an action plan that outlines how they will address each recommendation, including target completion dates.

We do this review each year to survey agencies about their progress.

Following up on unresolved recommendations

For this review we followed up with agencies that had unresolved recommendations. We asked these agencies:

- if they still accepted each unresolved recommendation

- if they had now completed the recommendation

- when they completed the recommendation or, if it was not complete, what their updated target completion date was.

This year we also interviewed a sample of 13 agencies about their attestation process for completing our survey.

See Appendix E or the accompanying dashboard on our website for more information about the original recommendations.

What we found

This section focuses on our key findings, which fall into 2 areas:

1. Agencies told us they have accepted and completed most of our recommendations.

2. While some agencies had gaps in their assurance processes, we found nothing to suggest their attestations are not reliable.

Consultation with agencies

When reaching our conclusions, we consulted with the reviewed agencies and considered their views.

You can read their full responses in Appendix A.

Key finding 1: Agencies told us they have accepted and completed most of our recommendations

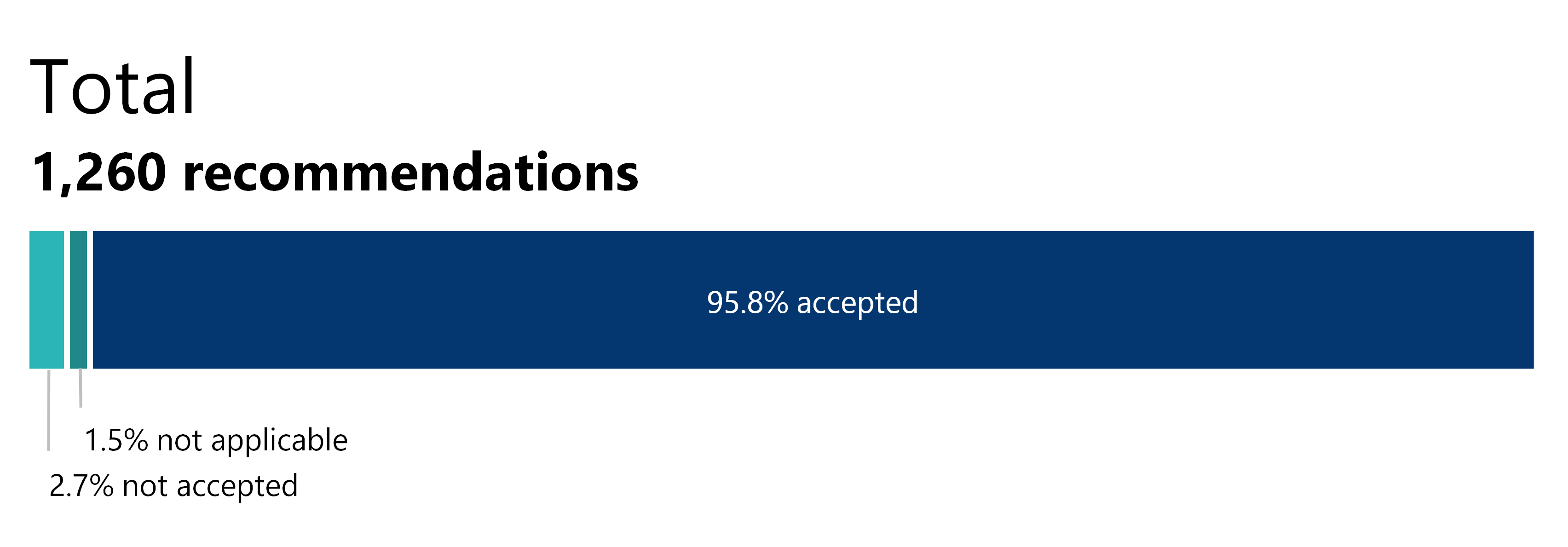

Figure 1 shows the status, as of December 2024, of the recommendations we examined.

Figure 1: Status of recommendations we examined in this report

*Not applicable refers to recommendations that an agency accepted when we tabled the report but is no longer implementing.

Source: VAGO.

Key finding 2: While some agencies had gaps in their assurance processes, we found nothing to suggest their attestations are not reliable

This year we interviewed 13 agencies about their process for responding to our survey. We list these agencies in Appendix C.

Through our interviews we found nothing to suggest that these agencies' attestations for their survey responses are not reliable.

We identified the following examples of best practice:

- regularly checking the status of an agency's recommendations with its responsible officers and reporting results to its executives

- requiring the responsible officer to explain any extensive delay in a recommendation's completion date

- storing evidence of a recommendation's completion in a record that is accessible to relevant staff

- informing the agency's head of its response to our survey and receiving their written approval for the attestation.

Some agencies did not do all of these steps, but all 13 completed at least some of them.

2. How agencies responded to our recommendations

Covered in this section:

- Agencies told us they accepted and completed most of our recommendations

- Agencies completed most recommendations after their initial target completion date

- There are still 25 unresolved recommendations from before July 2019

- While some agencies had gaps in their assurance processes, we found nothing to suggest their attestations are not reliable

Agencies told us they accepted and completed most of our recommendations

Acceptance status

Before tabling a report we ask the audited agencies if they accept our recommendations.

In this review we asked agencies if they had changed any responses to ‘not accepted’ or ‘not applicable’. Common reasons for this are:

- the recommendation is no longer relevant, for example:

  - the agency's circumstances have changed

  - the agency is doing other work that makes the recommendation no longer relevant

- the agency does not have the resources (time or money) to implement the recommendation.

Of the 1,260 recommendations we made between 1 July 2019 and 30 June 2024, agencies accepted 1,208 (96 per cent) when we tabled the report.

As Figure 2 shows, at the time of this review agencies told us they still accepted 1,207 (96 per cent).

Figure 2: Acceptance status of recommendations at the time of this review

Source: VAGO.

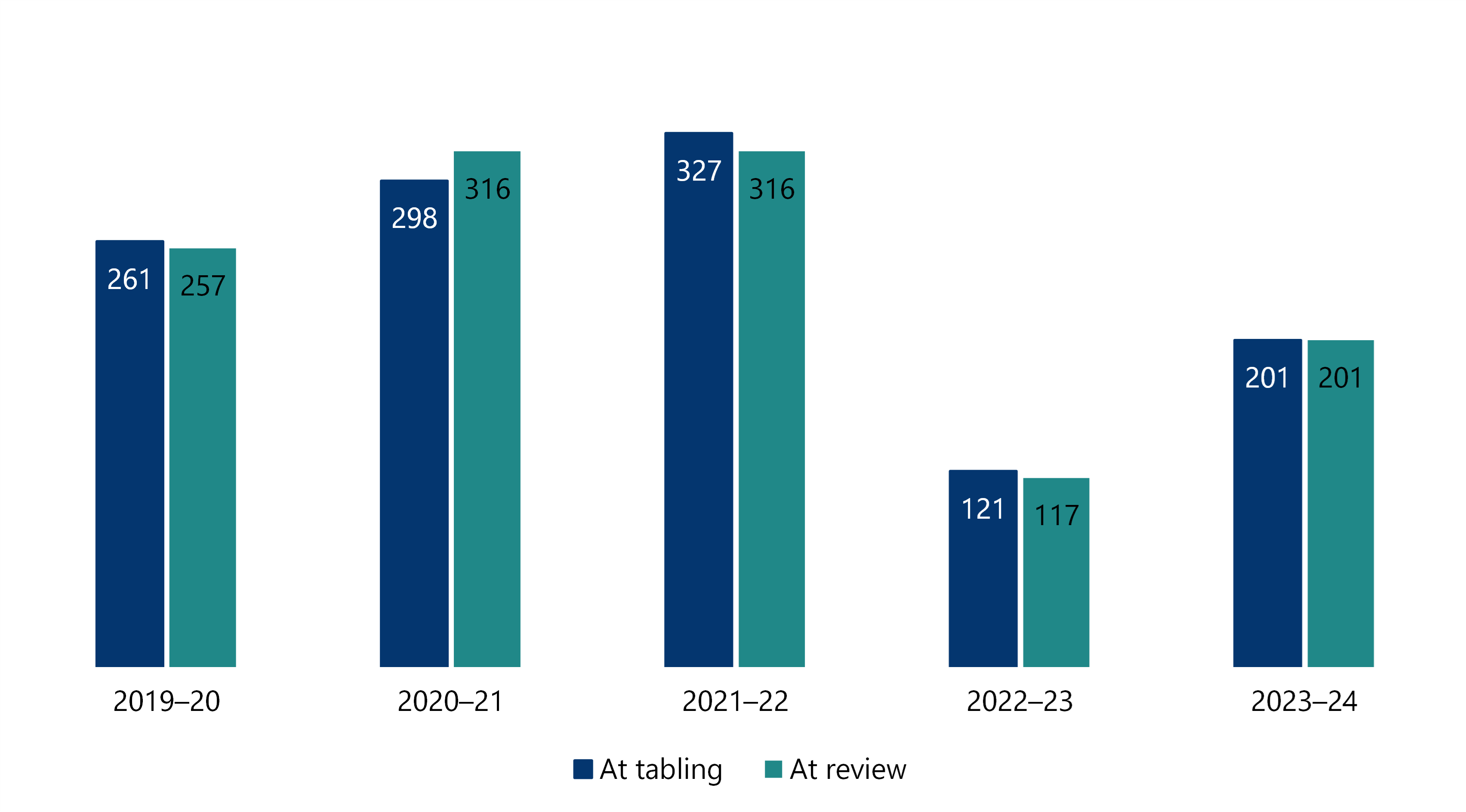

Figure 3 shows the breakdown of recommendations that agencies:

- accepted when we tabled the report

- still accepted when we surveyed them for this report.

Figure 3: Number of recommendations accepted at the time of tabling and at the time of this review

Source: VAGO.

Completion status

In this review agencies told us they had:

- completed 991 (82 per cent) of the 1,207 accepted recommendations

- not completed 216 (18 per cent) of the 1,207 accepted recommendations.

This year's completion rate is slightly below last year's. In last year's review agencies told us they had completed 85 per cent of accepted recommendations.
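The completion figures above can be reproduced from the reported counts. The following is a minimal sketch, assuming percentages are rounded to the nearest whole per cent. The function name `share_per_cent` is illustrative only and is not part of the review's method.

```python
def share_per_cent(part: int, whole: int) -> int:
    """Return part/whole as a whole-number percentage (assumed rounding rule)."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole)


# Counts as reported in this review.
accepted = 1207    # recommendations agencies still accepted
completed = 991    # accepted recommendations reported as completed
unresolved = 216   # accepted recommendations reported as not completed

# The two groups should account for every accepted recommendation.
assert completed + unresolved == accepted

print(share_per_cent(completed, accepted))   # 82
print(share_per_cent(unresolved, accepted))  # 18
```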

We categorise unresolved recommendations as either ‘in progress’ or ‘not started’.

As Figure 4 shows, agencies told us that of the 216 unresolved recommendations:

- 202 were in progress

- 14 were not started.

Figure 4: Status of accepted recommendations included in this review by tabling year

| | 2019–20 | 2020–21 | 2021–22 | 2022–23 | 2023–24 | Total |

|---|---|---|---|---|---|---|

| Complete | 252 | 283 | 297 | 67 | 92 | 991 |

| In progress | 5 | 33 | 19 | 49 | 96 | 202 |

| Not started | 1 | 13 | 14 |

Source: VAGO.

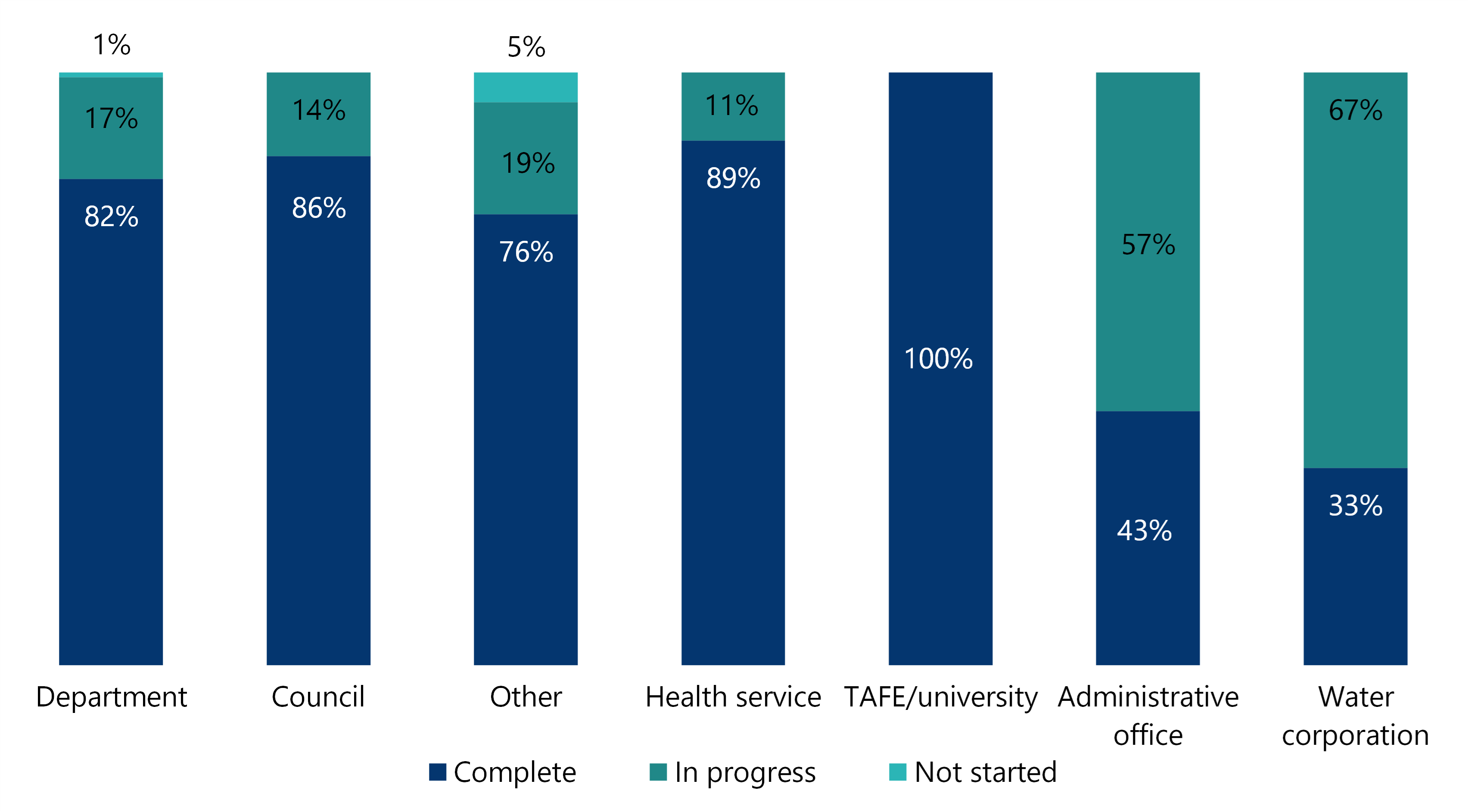

Completion status by type of agency

Figure 5 shows the percentage of accepted recommendations by agency type and completion status.

We categorised agencies into the following groups:

- government department

- council

- health service

- TAFE/university

- water authority

- administrative office

- other (for example, Victorian Planning Authority, Victoria Police and Parks Victoria).

Figure 5: Accepted recommendations by agency type and completion status

Source: VAGO.

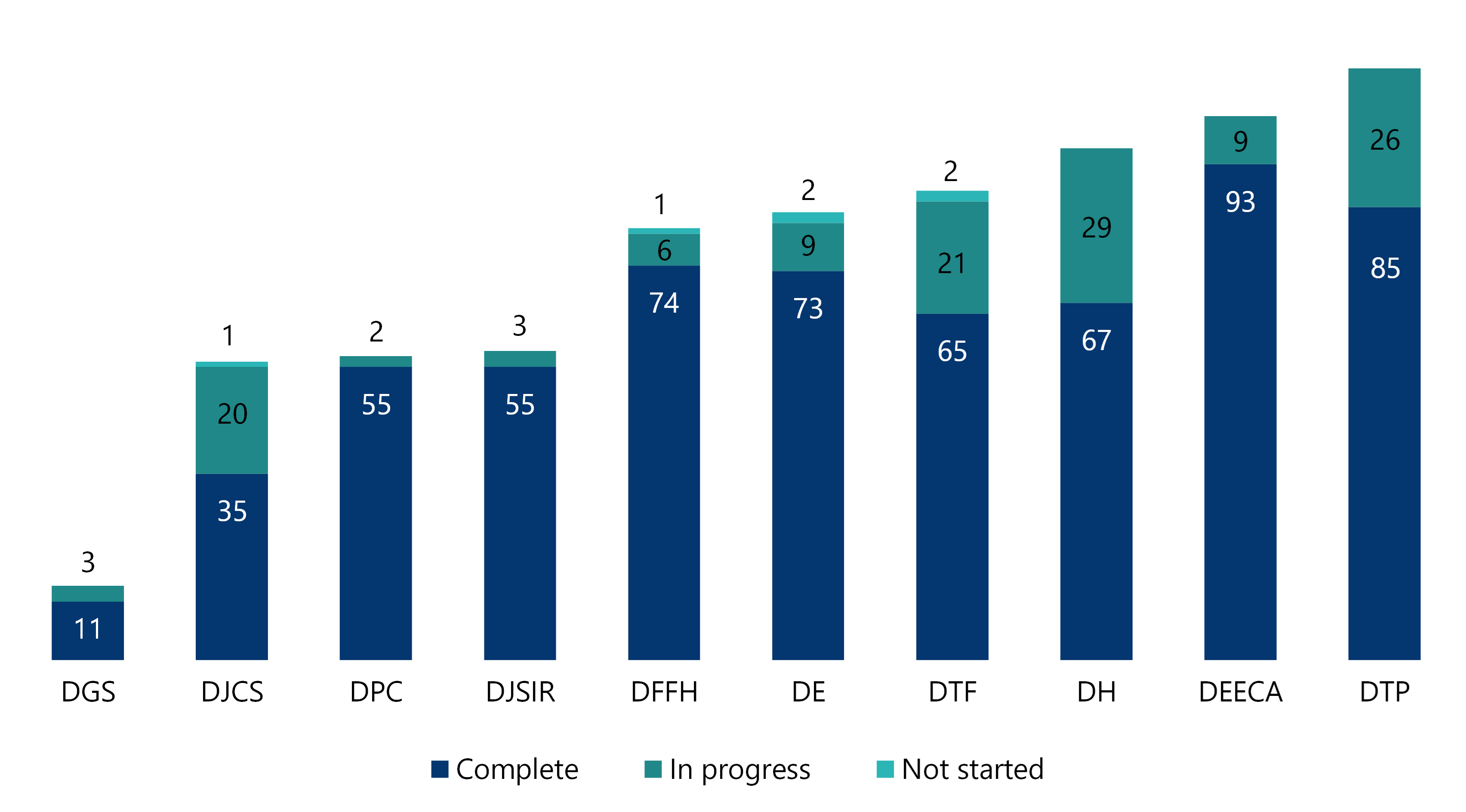

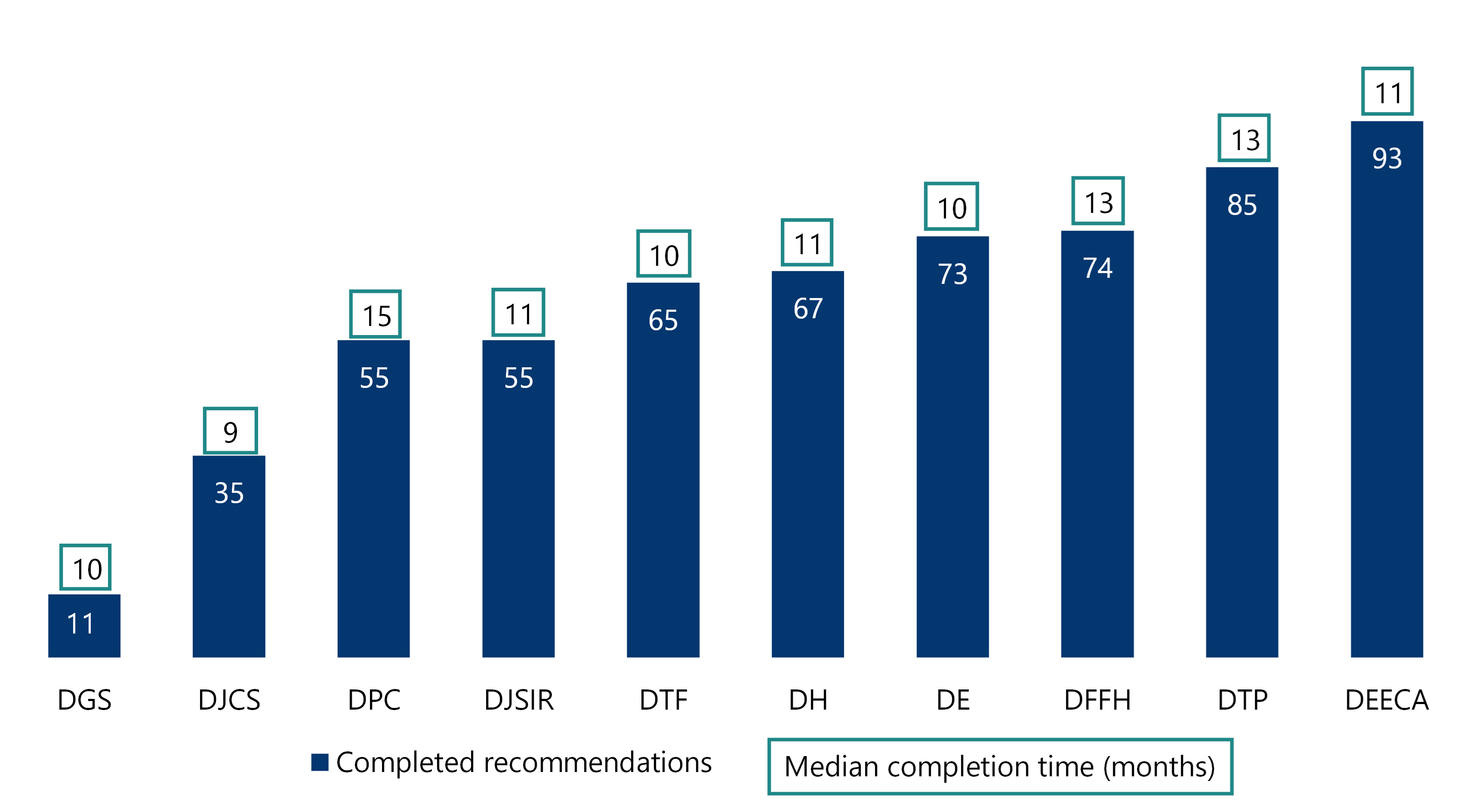

Completion status by department

Of the 1,260 recommendations we made between 1 July 2019 and 30 June 2024, 777 (62 per cent) were to government departments.

Departments accepted 747 (96 per cent) of these recommendations. They have completed 613 (82 per cent) of the accepted recommendations.

Figure 6 shows the status of accepted recommendations by department.

Figure 6: Status of accepted recommendations by department

Note: See Appendix B for the list of department name acronyms.

Source: VAGO.

Agencies completed most recommendations after their initial target completion date

Setting and revising completion dates

Our recommendations help agencies address the risks and issues we identify in our performance engagements.

When an agency first accepts a recommendation it can set a target completion date. It can revise this target date as many times as needed.

While some recommendations are more complex than others, agencies should seek to complete recommendations:

- in a timely way

- in line with their own target completion dates.

Overall completion time

Agencies took a median of 11 months to complete recommendations tabled between 1 July 2019 and 30 June 2024. This is faster than the median of 13 months in last year's report.

The shortest completion time in this year's review was zero months. The longest was 51 months.
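The report does not state exactly how completion time in months was measured. As a rough sketch only, assuming completion time is counted as whole calendar months elapsed between the tabling date and the completion date, a median could be calculated as follows. The dates below are hypothetical and are not data from the review.

```python
from datetime import date
from statistics import median


def months_between(start: date, end: date) -> int:
    """Whole calendar months elapsed from start to end (assumed convention)."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1  # the final month is not yet complete
    return max(months, 0)


# Hypothetical (tabling date, completion date) pairs for illustration.
records = [
    (date(2021, 3, 10), date(2021, 3, 25)),   # completed within the same month
    (date(2020, 6, 1), date(2021, 5, 1)),
    (date(2019, 9, 15), date(2023, 12, 20)),
]

durations = [months_between(tabled, done) for tabled, done in records]
print(durations)          # [0, 11, 51]
print(median(durations))  # 11
```

Under this convention, a recommendation completed less than one month after tabling counts as zero months.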

Completion time by department

Departments took a median of 11 months to complete their recommendations. Notably:

- the Department of Premier and Cabinet (DPC) had the longest median completion time at 15 months

- the Department of Justice and Community Safety (DJCS) had the shortest median completion time at 9 months

- the Department of Energy, Environment and Climate Action (DEECA) completed the highest number of recommendations (93) at a median time of 11 months.

Figure 7: Number of completed recommendations and median completion time by department

Note: See Appendix B for the list of department name acronyms.

Source: VAGO.

Initial target completion dates

Of the 1,207 accepted recommendations, agencies set an initial target completion date for 1,070 (89 per cent).

The median target date was 7 months after tabling.

Of these 1,070 recommendations, agencies completed:

- 370 recommendations (35 per cent) by the initial target date

- 496 recommendations (46 per cent) after the initial target date.

The longest delay in completing a recommendation was 45 months after the initial target date.
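The counts in this subsection can be reconciled against the 1,070 recommendations that had an initial target completion date. The sketch below assumes percentages are rounded to the nearest whole per cent. The variable names are illustrative only.

```python
# Counts reported in the review for recommendations with an initial target date.
with_initial_target = 1070
completed_by_target = 370
completed_after_target = 496

# Whatever is left over had not been reported as completed.
not_completed = with_initial_target - completed_by_target - completed_after_target

breakdown = {
    "completed by initial target date": completed_by_target,
    "completed after initial target date": completed_after_target,
    "not completed": not_completed,
}

for label, count in breakdown.items():
    share = round(100 * count / with_initial_target)  # assumed rounding rule
    print(f"{label}: {count} ({share} per cent)")
```

The remainder of 204 matches the number of unresolved recommendations that this report says had an initial target completion date.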

Revised completion dates

Agencies revised the initial target completion date for 406 recommendations in this year’s review (38 per cent of recommendations with an initial target date).

The median revision was a 12-month extension from the initial date, or 22 months from the tabling date.

Of the 406 recommendations with a revised date, agencies:

- extended the target completion date for 361 (89 per cent)

- brought forward the target completion date for 45 (11 per cent).

In this year's survey agencies told us they completed 257 of the 406 recommendations with revised dates. However, some agencies did not meet their revised completion dates.

Of the 217 completed recommendations with extended dates, agencies completed 102 (47 per cent) after the revised date. The length of the additional delay was a median of 70 days.

Completion dates for unresolved recommendations

Agencies set an initial target completion date for 204 (94 per cent) of the 216 unresolved recommendations.

They went on to revise the target completion date for 149 of these 204 recommendations (73 per cent).

The median extension was an 18-month delay from the initial target completion date.

There are still 25 unresolved recommendations from before July 2019

Older recommendations

We asked agencies for updates on 40 unresolved recommendations we made before July 2019.

Of these recommendations, 25 are still unresolved.

As Figure 8 shows, these 25 recommendations relate to 13 agencies across 12 engagements.

A median of 77 months (over 6 years) has passed since we tabled these older recommendations.

We urge the responsible agencies to resolve these recommendations. This will help them address the risks we found and make necessary improvements.

Figure 8: Engagements tabled before July 2019 with unresolved recommendations (in order of tabling date)

| Engagement title | Tabling date | Unresolved recommendations | Current responsible agency |

|---|---|---|---|

| Managing Public Sector Records | 8 March 2017 | 4 | Department of Government Services (DGS) |

| ICT Disaster Recovery Planning | 29 November 2017 | 1 | Department of Families, Fairness and Housing (DFFH) |

| | | 2 | Department of Health (DH) |

| Protecting Victoria's Coastal Assets | 29 March 2018 | 2 | Mornington Peninsula Shire Council |

| | | 1 | East Gippsland Shire Council |

| | | 1 | Great Ocean Road Coast and Parks Authority |

| Safety and Cost Effectiveness of Private Prisons | 29 March 2018 | 1 | DJCS |

| Local Government Insurance Risks | 25 July 2018 | 1 | Municipal Association of Victoria |

| Managing Rehabilitation Services in Youth Detention | 8 August 2018 | 1 | DJCS |

| Managing the Environmental Impacts of Domestic Wastewater | 19 September 2018 | 1 | Mornington Peninsula Shire Council |

| Compliance with the Asset Management Accountability Framework | 23 May 2019 | 1 | DJCS |

| | | 1 | Department of Treasury and Finance (DTF) |

| Local Government Assets: Asset Management and Compliance | 23 May 2019 | 2 | Colac Otway Shire Council |

| | | 1 | City of Darebin |

| | | 1 | Mildura Rural City Council |

| Security of Government Buildings | 29 May 2019 | 1 | DGS |

| | | 1 | DJCS |

| Security of Patients' Hospital Data | 29 May 2019 | 2 | Barwon Health |

Source: VAGO.

The longest-standing unresolved recommendations are from the Managing Public Sector Records report in March 2017. DGS told us it plans to complete them by July 2027.

These 4 recommendations, which we originally addressed to DPC, did not have an initial target completion date.

DH and DFFH told us they plan to complete the remaining recommendations from the November 2017 report ICT Disaster Recovery Planning by the end of June 2025.

Their original target completion date was the end of 2018.

See Appendix E for more information about the recommendations listed in Figure 8.

While some agencies had gaps in their assurance processes, we found nothing to suggest their attestations are not reliable

Attestation process for survey responses

For this review we interviewed 13 surveyed agencies about:

- their process or procedure for assessing and reporting on the status of their recommendations

- how they assess and store evidence about the status of their recommendations

- if a responsible officer approves the status of each recommendation

- if the head of the agency approves its survey attestation or delegates approval to another person.

We found nothing to suggest that agencies’ attestations are not reliable.

Best-practice assurance processes

| An agency should ... | Of the 13 agencies interviewed ... |

|---|---|

| have a clear process for assessing and reporting on the status of each recommendation. | all agencies had a clear process and could show us examples of that process. |

| assess and store evidence of completed recommendations. | all agencies demonstrated they assess submitted evidence when a responsible officer reports a recommendation as completed. However, 3 agencies did not store that evidence in a clear, accessible way. |

| confirm the status of each recommendation with the responsible officer. | all agencies confirmed the status of each recommendation with the responsible officer before completing our survey. |

| inform the head of the agency or their approved delegate about the status of each recommendation before submitting its survey responses. | one agency did not have documentation to prove it informed its agency head. However, the agency told us it did get their approval. |

| get the head of the agency or an approved delegate to attest to its survey responses. | |

We also saw other examples of good practice. For example, some agencies:

- made quarterly reports to their executives on the progress of recommendations and gave them an additional briefing when completing our survey

- kept documentation of each recommendation’s status in a centralised system

- required a responsible officer to explain why when a recommendation was more than 6 months overdue.

Appendix A: Submissions and comments

Download a PDF copy of Appendix A: Submissions and comments.

Appendix B: Acronyms and glossary

Download a PDF copy of Appendix B: Acronyms and glossary.

Appendix C: Review scope and method

Download a PDF copy of Appendix C: Review scope and method.

Appendix D: Performance engagements and agencies included in this review

Download a PDF copy of Appendix D: Performance engagements and agencies included in this review.

Appendix E: Agency responses to our survey

Download a PDF copy of Appendix E: Agency responses to our survey.