Child and Youth Mental Health

Overview

Mental health problems are the most common health issues facing young people worldwide, according to the Global Burden of Disease Study 2017. One in four Australians aged 16–24 will experience mental illness in any given year.

For children and young people, intervention early in life and early in mental illness can reduce its duration and impact. Without access to mental health services, young people are at risk of ongoing problems that may affect their engagement with education and employment, and lead to greater contact with human services and the justice system.

This audit assessed whether child and youth mental health services are effectively preventing, supporting and treating child and youth mental illness. We examined how the Department of Health and Human Services (DHHS) designs and administers child and youth mental health services, and facilitates access and service coordination for the most vulnerable and complex clients.

We made 20 recommendations for DHHS.

Transmittal letter

Independent assurance report to Parliament

Ordered to be published

VICTORIAN GOVERNMENT PRINTER June 2019

PP No 36, Session 2018–19

President

Legislative Council

Parliament House

Melbourne

Speaker

Legislative Assembly

Parliament House

Melbourne

Dear Presiding Officers

Under the provisions of section 16AB of the Audit Act 1994, I transmit my report Child and Youth Mental Health.

Yours faithfully

Andrew Greaves

Auditor-General

5 June 2019

Acronyms

| Acronym | Meaning |
| --- | --- |
| AWH | Albury Wodonga Health |
| CAMHS | child and adolescent mental health services |
| CAP | child and adolescent psychiatrists |
| CEO | chief executive officer |
| CMI | Client Management Interface |
| CYMHS | child and youth mental health services |
| DHHS | Department of Health and Human Services |
| DJCS | Department of Justice and Community Safety |
| DTF | Department of Treasury and Finance |
| ECT | electroconvulsive treatment |
| KPI | key performance indicator |
| MACNI | Multiple and Complex Needs Initiative |
| MHIDI | Mental Health and Intellectual Disability Initiative |
| NDIS | National Disability Insurance Scheme |
| NOCC | National Outcomes and Casemix Collection |
| OCP | Office of the Chief Psychiatrist |
| ODS | operational data store |
| PCP | Primary Care Partnership |
| PHN | Primary Health Networks |
| PRISM | Program Report for Integrated Service Monitoring |
| RANZCP | Royal Australian and New Zealand College of Psychiatrists |
| RCH | Royal Children's Hospital |
| SCV | Safer Care Victoria |
| SDQ | Strengths and Difficulties Questionnaire |
| SoP | Statement of Priorities |
| VAGO | Victorian Auditor-General's Office |
| VAHI | Victorian Agency for Health Information |
| Y-PARC | Youth Prevention and Recovery Centres |

Audit overview

There are many different terms used for children and young people in different contexts. In this report, 'children and young people' is a generic term that has no specific age grouping, or that may refer to several different groupings that are specified later.

Mental health problems are the most common health issues facing young people worldwide, according to the Global Burden of Disease Study 2017. Mental health problems range from mild and short-term problems to severe, lifelong and debilitating, or life-threatening problems.

Three-quarters of all mental health problems manifest in people under the age of 25. One in 50 Australian children and adolescents has a severe mental health problem. Severe mental health problems include acute psychiatric disorders, such as schizophrenia, that are persistent and make daily tasks difficult. Some severe mental health problems can be triggered by trauma such as abuse or neglect, or by developmental disorders or physical trauma that leads to disability.

The likelihood of mental health problems increases markedly where there are other indicators of vulnerability such as unstable housing and poverty, neglect and abuse, intergenerational trauma or developmental disabilities.

Intervention early in life, and early in mental illness, can reduce its duration and impact. Early intervention is especially important for children and young people because many mental health problems can affect psychosocial growth and development, which can lead to difficulties later in life.

Victoria's public mental health services focus on the treatment of more severe mental health problems and support infants, children, and young people through a mix of community, outreach, and inpatient hospital services. They also provide education, upskilling and leadership on managing mental health problems to the services and agencies that involve children and young people, which include schools, child protection, and disability services.

There has never been an independent review of clinical mental health services for children and young people in Victoria, despite significant changes in the service system with the introduction of the National Disability Insurance Scheme (NDIS) and headspace centres (youth-specific community mental health services).

This audit assessed the effectiveness of public child and youth mental health services (CYMHS) in one regional and four metropolitan health services. After our planning process identified the most significant risks for CYMHS, we focused on whether the services have been designed appropriately, and whether the Department of Health and Human Services (DHHS) is administering them effectively. The audit did not investigate the clinical effectiveness of individual patient care.

Conclusion

Not all Victorian children and young people with dangerous and debilitating mental health problems receive the services that they and their families need. This can lead to ongoing health problems, increasing the risk that children and young people will disengage from education and employment and be more likely to be involved with human services and the justice system.

Specialist child, adolescent and youth mental health services do improve many of their clients' outcomes, but they do not meet service demand or operate as a coordinated system. This can lead to significant deterioration in the health and wellbeing of some of Victoria's most vulnerable citizens.

DHHS has neither established strategic directions for CYMHS nor set expected outcomes for most of its CYMHS funding. This key issue inhibits service and program managers from realising efficiencies and improvements to service delivery such as working to a common purpose, sharing lessons or benchmarking progress.

Problems with the CYMHS performance monitoring system create oversight gaps for DHHS, which leaves it unable to address significant issues that require a system-level response. These issues include clinically unnecessary stays in inpatient mental health wards, and the admission of children and young people to adult mental health beds.

Health services say that, because of DHHS's limited engagement with them and monitoring systems that do not accurately reflect services' performance, DHHS does not sufficiently understand the CYMHS system and the challenges it faces. DHHS's lack of understanding contributes to a climate of uncertainty and distrust, which inhibits systemic improvement and creates significant variability and inequity in the care that children and young people receive.

DHHS has predominantly taken a one-size-fits-all approach to the mental health system's design and monitoring, which does not adequately identify and respond to the unique needs of children and young people.

Findings

Design of child and youth mental health services

There is no strategic framework to guide and coordinate DHHS or health services that are responsible for CYMHS, which is evident in a range of issues with the CYMHS design:

- DHHS lacks a rationale for the programs and services it funds and there has been a lack of transparency in how some programs and services have been funded.

- Some health services receive funding for programs that technically have ceased, and health services that provide similar activities receive different funding.

- DHHS does not set expectations for service delivery for most funded programs and does not monitor what programs and activities health services deliver.

- DHHS has not adequately considered the geographic distribution of services relative to the population, which creates inequities in service provision.

- There is a confusing mix of age eligibility arrangements across services—some treat young people up to the age of 25 and some up to 18. This is because in 2006, DHHS began increasing service eligibility to 25, but stopped the rollout midway through when the government changed.

- DHHS has not considered CYMHS's particular workforce challenges and needs that can vary from the adult sector, and the recent DHHS mental health workforce strategy does not specifically address CYMHS workforce issues. DHHS advises that the new Centre for Mental Health Learning is now mapping workforce needs, including for CYMHS.

While the cause of this lack of a strategic approach to CYMHS is unclear, high staff turnover in leadership roles and lack of specific performance oversight of CYMHS are likely contributing factors.

DHHS's commitment to reform the mental health funding model into activity‑based funding has not progressed for CYMHS. DHHS has taken no action to address the known problems with transparency and equity in the current funding model.

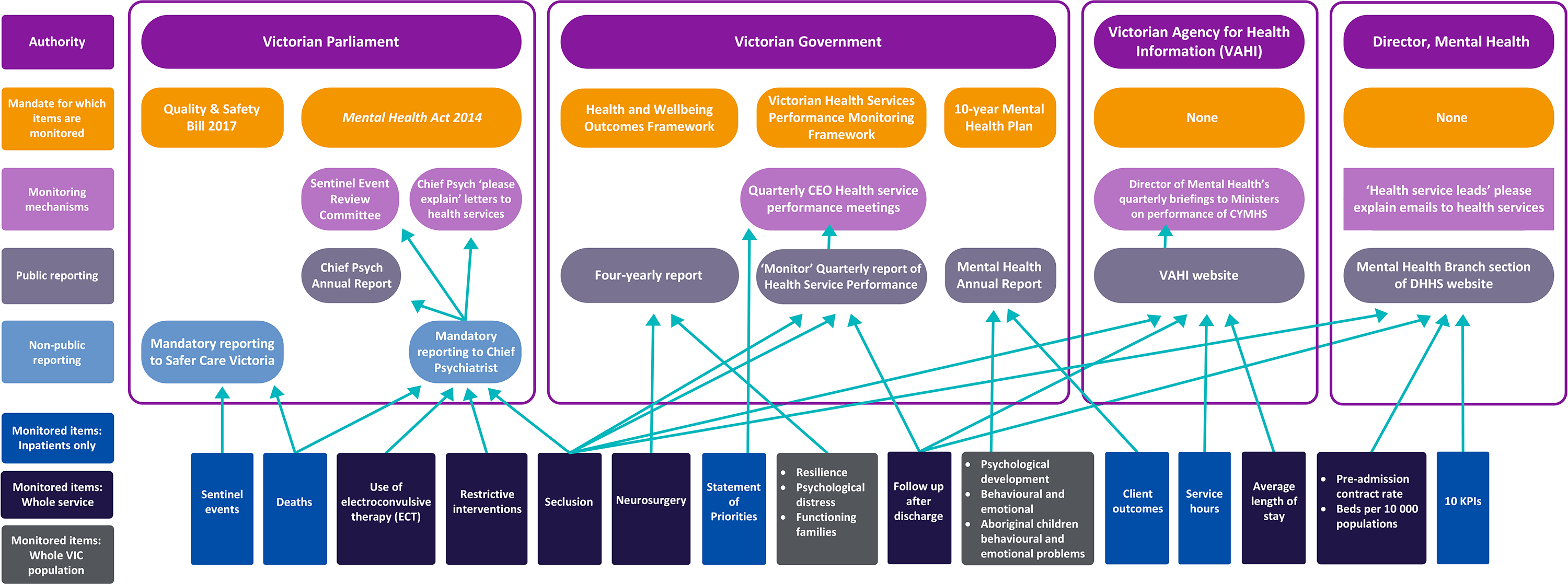

Monitoring performance, quality and outcomes

DHHS's performance monitoring of CYMHS comprises seven separate systems that operate in silos. The different areas that hold responsibility for monitoring within DHHS do not coordinate to identify common or systemic issues, nor do they share the information they collect. The current arrangement offers significantly limited oversight of CYMHS:

- The Mental Health Branch's program meetings discussed CYMHS only once in four years for four health services.

- Key performance indicators (KPIs) to measure CYMHS differ from the national mental health performance framework, which limits performance benchmarking.

- The two CYMHS KPIs that inform DHHS's quarterly performance discussions with chief executive officers (CEOs) only concern services provided to patients who have had an inpatient admission.

- There are no KPIs or monitoring of some significant issues in CYMHS, such as long inpatient stays, accessibility, service coordination or family engagement in care.

The lack of service performance expectations and the limitations of DHHS's performance monitoring systems for CYMHS also hinder its ability to accurately advise government on how this important system performs, or what improvements are needed.

Monitoring the quality and safety of service delivery

The Chief Psychiatrist has legislated responsibilities to monitor service quality and safety in CYMHS. The Office of the Chief Psychiatrist (OCP) has delivered a large program of activities to review and improve service quality across mental health services; however, there has been no attention to the unique issues in CYMHS. Rather, the monitoring is reactive and crisis-driven, with limited focus on systemic issues.

DHHS does not routinely monitor the quality of CYMHS service delivery. Further, DHHS has commissioned two significant evaluations and reviews of new CYMHS services that have not been publicly released, and their findings have not been communicated to the CYMHS that were reviewed. A further nine reviews and analyses that DHHS conducted internally include information about CYMHS that could contribute to broader service quality improvement; however, these have not been provided to health services.

Access for vulnerable populations

DHHS has not identified priority populations or enabled health services to provide priority access to those most in need. Only one of the five audited health services has implemented the Chief Psychiatrist's 2011 guideline to prioritise children in out-of-home care. DHHS has not reviewed the guideline or its implementation since its release. Furthermore, DHHS's data does not record a client's legal status, which means there is no mechanism to reliably identify children in out-of-home care in the CYMHS system.

DHHS has not reviewed its triage scale since its introduction in 2010. DHHS is aware that the triage scale is not optimal for children and young people because it does not consider developmental risks and does not enable prioritisation of access for high-risk population groups.

Service coordination around multiple and complex needs

Young people are routinely getting 'stuck' in CYMHS inpatient beds when they should be discharged, because they cannot access family or carer support and/or services such as disability accommodation or child protection and out-of-home care. DHHS does not monitor clinically unnecessary inpatient stays, despite health services having raised the issue repeatedly.

Current data systems prevent definitive monitoring of clinically unnecessary stays in CYMHS inpatient beds. However, the five audited health services provided this audit with 29 case studies from the prior 12 months that show at least 1 054 bed days used by patients who had no clinical need to be mental health inpatients. While some of the drivers of this problem are complex social and family issues, DHHS has not taken strategic action to address systemic issues with service coordination that it has the authority to resolve.

Monitoring long inpatient stays would provide a partial indicator of clinically unnecessary stays. DHHS could not explain why it monitors long stays over 35 days for the adult mental health system, but not for CYMHS, despite our data analysis showing that there have been 228 long stays in four health services over three years, of which 107 were children under 18 years.
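As an illustration only, a long-stay indicator of the kind described above could be derived from admission and discharge dates. The sketch below assumes the 35-day threshold used for the adult system and uses made-up stay records; the function names and sample dates are hypothetical and do not reflect any DHHS system or real patient data. The final line simply restates the audit's figures (107 of 228 long stays) as a proportion.

```python
from datetime import date

# Assumption: reuse the 35-day threshold the report cites for the adult system.
LONG_STAY_THRESHOLD_DAYS = 35


def length_of_stay(admitted: date, discharged: date) -> int:
    """Return the number of days between admission and discharge."""
    return (discharged - admitted).days


def is_long_stay(admitted: date, discharged: date,
                 threshold: int = LONG_STAY_THRESHOLD_DAYS) -> bool:
    """Flag a stay as long when it is strictly over the threshold."""
    return length_of_stay(admitted, discharged) > threshold


# Hypothetical (admission, discharge) pairs; not real patient data.
stays = [
    (date(2018, 1, 1), date(2018, 1, 10)),  # 9 days
    (date(2018, 2, 1), date(2018, 3, 8)),   # 35 days: not over the threshold
    (date(2018, 3, 1), date(2018, 4, 20)),  # 50 days
]
long_stay_count = sum(is_long_stay(a, d) for a, d in stays)

# Proportion of the 228 long stays in this audit's analysis that involved
# children under 18 years (107 of 228, a little under half).
under_18_share = 107 / 228
```

A count like this would be only a partial indicator, as noted above, because a long stay is not necessarily a clinically unnecessary one.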

In one region, there has been a long‐running dispute between two service providers over referral and discharge processes. DHHS has acted to resolve this issue, which caused longer inpatient stays for approximately 300 adolescents per year, by advising the health services to meet monthly and to escalate matters that cannot be resolved to the Chief Psychiatrist.

Clients with intellectual or developmental disabilities complicated by mental health problems account for many long and/or clinically unnecessary inpatient stays. DHHS has not responded adequately to CYMHS's reports about the service gap for young people with dual disability and the significant negative impacts on CYMHS's resources, workforce and the young people and their families.

DHHS and the Department of Justice and Community Safety's (DJCS) shared Multiple and Complex Needs Initiative (MACNI) provides case management for people aged 16 and over with mental illness, complex needs, and dangerous behaviours. Eligibility criteria are too narrow and processes too slow for this service to assist CYMHS with complex clients who are 'stuck' in inpatient units.

A $5.5 million pilot project that DHHS funded three years ago, and another CYMHS's independent service development, have lessons and resources that DHHS has not shared with other CYMHS. DHHS has not responded to either of these services' recommendations to address gaps in accommodation and service coordination for these young people, nor taken any action to support other CYMHS with providing services to clients with dual disability who have complex needs.

Recommendations

We recommend that the Department of Health and Human Services:

- in conjunction with child, adolescent and youth mental health services and consumers, develop strategic directions for child, adolescent and youth mental health services that include objectives, outcome measures with targets, and an implementation plan that is supported by evidence‑based strategies at both the system and health service levels (see Section 2.2)

- when implementing the six recommendations from the VAGO audit Access to Mental Health Services, ensure that the needs of children, adolescents and young people as well as child, adolescent and youth mental health services are considered and applied, wherever appropriate (see Section 1.4)

- establish and implement a consistent service response for 0–25 year-olds in regional Victoria that need crisis or specialised support beyond what their local child, adolescent and youth mental health services' community programs can provide, including reviewing the extent to which the six funded regional beds are able to provide an evidence-based child and adolescent service (see Sections 2.4 and 3.2)

- establish and implement a transition plan towards achieving a consistent service response for 19–25 year-olds with moderate and severe mental health problems (see Section 2.5)

- develop and implement a child, adolescent and youth mental health workforce plan that includes understanding the specific capability needs of the sector and specifically increasing capabilities in the area of dual disability, that is, intellectual or developmental disabilities complicated by mental health problems (see Section 2.7)

- refine, document and disseminate the performance monitoring approach for child and youth mental health services so it consolidates current disparate reporting requirements and includes:

  - measures that allow monitoring of long inpatient stays, priority client groups, clinical outcomes and accessibility of child and youth mental health services

  - introducing quality and safety measures of child and youth mental health services community programs in the Victorian Health Services Performance Monitoring Framework

  - the role of the Chief Psychiatrist in performance monitoring, and how the information it receives from mandatory reporting informs the Department of Health and Human Services' performance monitoring

  - documenting in one place all reporting requirements for child and youth mental health services from all areas of the Department of Health and Human Services, including administrative offices Safer Care Victoria and the Victorian Agency for Health Information

  - how the Department of Health and Human Services will respond to performance issues (see Sections 3.2 and 3.6)

- ensure that six-monthly mental health program meetings occur and information received is consolidated to identify systemic and persistent issues (see Section 3.4)

- initiate negotiations with the Department of Treasury and Finance during the state budget process to ensure that Budget Paper 3 performance measures include monitoring of child, adolescent and youth mental health services (see Section 3.6)

- disseminate evaluations and reviews of child, adolescent and youth mental health service projects and services to all child, adolescent and youth mental health service leaders (see Section 3.7)

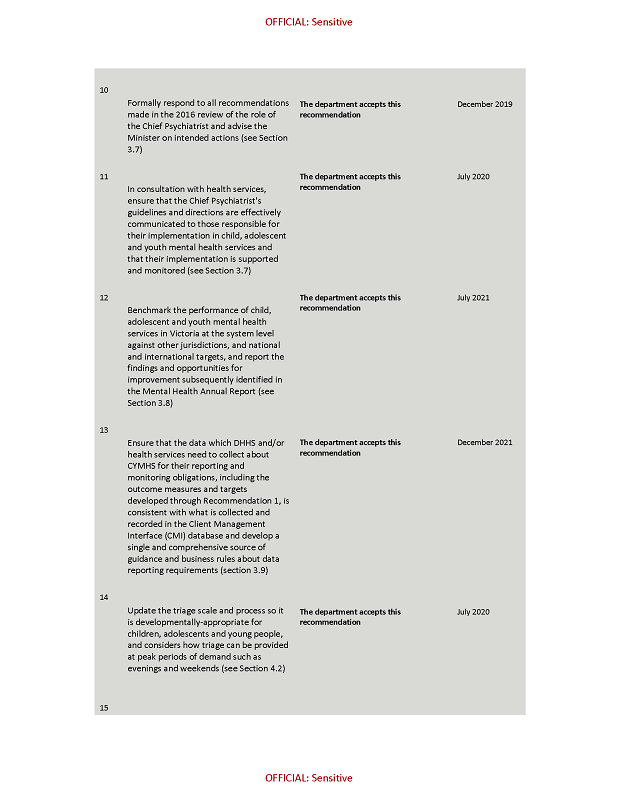

- formally respond to all recommendations made in the 2016 review of the role of the Chief Psychiatrist and advise the Minister for Mental Health on intended actions (see Section 3.7)

- in consultation with health services, ensure that the Chief Psychiatrist's guidelines and directions are effectively communicated to those responsible for their implementation in child, adolescent and youth mental health services and that their implementation is supported and monitored (see Section 3.7)

- benchmark the performance of child, adolescent and youth mental health services in Victoria at the system level against other jurisdictions, and national and international targets, and report the findings and opportunities for improvement subsequently identified in the Mental Health Annual Report (see Section 3.8)

- ensure that the data that the Department of Health and Human Services and/or health services need to collect about child and youth mental health services for their reporting and monitoring obligations, including the outcome measures and targets developed through Recommendation 1, is consistent with what is collected and recorded in the Client Management Interface database and develop a single and comprehensive source of guidance and business rules about data reporting requirements (see Section 3.9)

- update the triage scale and process so it is developmentally appropriate for children, adolescents and young people, and considers how triage can be provided at peak periods of demand such as evenings and weekends (see Section 4.2)

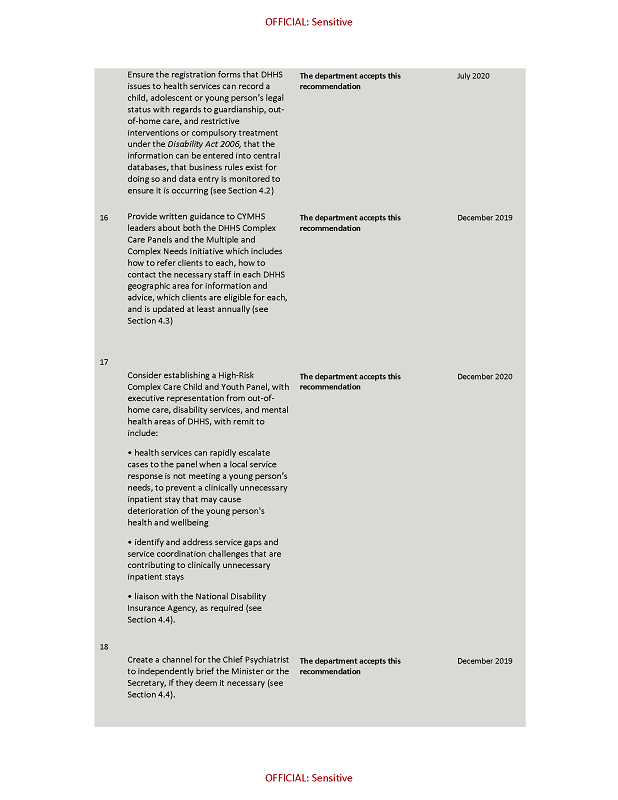

- ensure the registration forms that the Department of Health and Human Services issues to health services can record a child, adolescent or young person's legal status with regard to guardianship, out-of-home care, and restrictive interventions or compulsory treatment under the Disability Act 2006, that the information can be entered into central databases, that business rules exist for doing so, and that data entry is monitored to ensure it is occurring (see Section 4.2)

- provide written guidance to child and youth mental health services' leaders about both the Department of Health and Human Services' Complex Care Panels and the Multiple and Complex Needs Initiative, which includes how to refer clients to each, how to contact the necessary staff in each Department of Health and Human Services geographic area for information and advice, which clients are eligible for each, and is updated at least annually (see Section 4.3)

- consider establishing a High-Risk Complex Care Child and Youth Panel, with executive representation from out-of-home care, disability services, and mental health areas of the Department of Health and Human Services, with remit to:

  - allow health services to rapidly escalate cases to the panel when a local service response is not meeting a young person's needs, to prevent a clinically unnecessary inpatient stay that may cause deterioration of the young person's health and wellbeing

  - identify and address service gaps and service coordination challenges that are contributing to clinically unnecessary inpatient stays

  - liaise with the National Disability Insurance Agency, as required (see Section 4.4)

- create a channel for the Chief Psychiatrist to independently brief the Minister for Mental Health or the Secretary, if they deem it necessary (see Section 4.4)

- establish and implement a consistent service response for 0–25 year-olds who have intellectual or developmental disabilities and moderate to severe mental health problems (see Section 4.5)

- establish a mechanism for operational and clinical leaders of all child, adolescent and youth mental health services to collaborate with each other and with the Department of Health and Human Services to improve service response consistency, and strengthen pathways between services for clients and families, including reviewing catchment boundaries and access to specialised statewide programs (see Section 4.5).

Responses to recommendations

We have consulted with DHHS, Albury Wodonga Health (AWH), Austin Health, Eastern Health, Monash Health and the Royal Children's Hospital (RCH) and we considered their views when reaching our audit conclusions. As required by section 16(3) of the Audit Act 1994, we gave a draft copy of this report to those agencies and asked for their submissions or comments. We also provided a copy of this report to the Department of Premier and Cabinet.

DHHS provided a response. The following is a summary of its response. The full response is included in Appendix A.

DHHS accepted each of the 20 recommendations, noting that implementation of the recommendations will be informed by the outcomes of the Royal Commission into Mental Health, particularly recommendations relating to system design. DHHS will develop strategic directions and refine the performance monitoring approach for services, share reviews and evaluations, update triage and registration processes, provide guidance around complex care panels, consider establishing a High-Risk Complex Care Child and Youth Panel, establish a mechanism for health services to collaborate and create a means for the Chief Psychiatrist to independently brief the Secretary or Minister for Mental Health.

1 Audit context

Mental health problems are the most common health issues facing young people worldwide, according to the Global Burden of Disease Study 2017. Three-quarters of all mental health problems manifest in people under the age of 25. One in four Australians aged 16–24 years will experience mental health problems in any given year, while 2.1 per cent of Australian children and adolescents have a severe mental health problem and a further 3.5 per cent have a moderate mental health problem. One in 10 Australian adolescents (10.9 per cent) have deliberately injured themselves, and each year one in 40 (2.4 per cent) attempt suicide.

The Australian Government's national survey of child and adolescent mental health defines the most common and disabling mental health problems that children and young people in Australia experience as:

- major depressive disorder

- attention-deficit/hyperactivity disorder

- conduct disorder

- social phobia

- separation anxiety

- generalised anxiety

- obsessive-compulsive disorder.

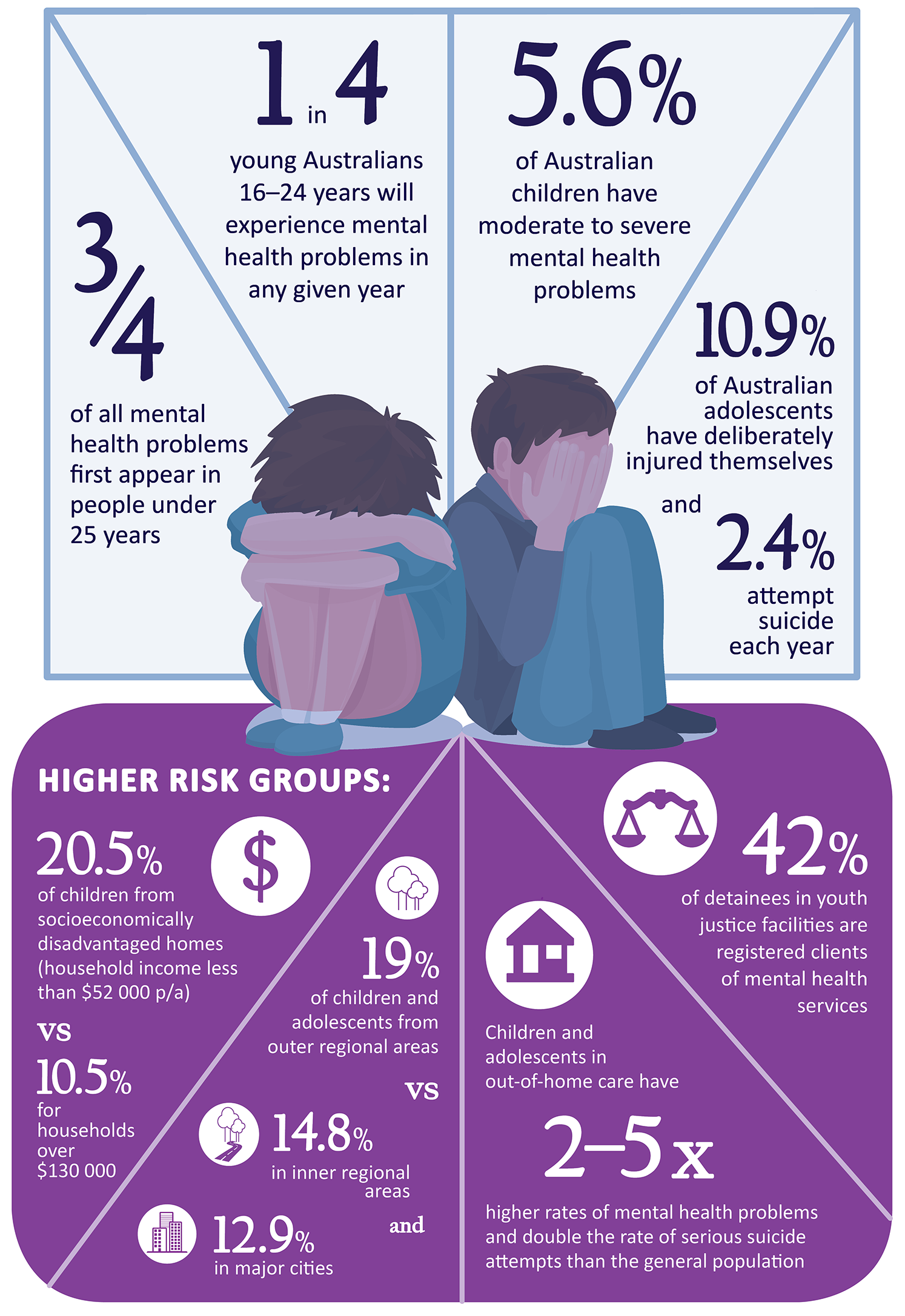

Figure 1A summarises the most recent data on children and young people's mental health problems. This data references various years and sources, and highlights that some children and young people are at higher risk of having a mental health problem. For example, children and young people from socio‑economically disadvantaged families have higher rates of mental health problems. Children living in out-of-home care experience two to five times higher rates of mental health problems and more than double the rate of serious suicide attempts.

Figure 1A

Prevalence of mental health problems in Australian children and young people

Note: A serious suicide attempt is a suicide attempt that required medical treatment.

Source: VAGO with information from Lawrence D, et al, (2015), The Mental Health of Children and Adolescents: Report on the second Australian Child and Adolescent Survey of Mental Health and Wellbeing; Department of Health, Canberra; Sawyer, M. et al (2007), 'The mental health and wellbeing of children and adolescents in home-based foster care', Medical Journal of Australia, 186:4; Kessler RC, et al, (2005), Lifetime prevalence and age-of-onset distributions of DSM-IV disorders in the National Comorbidity Survey Replication; Australian Institute of Health and Welfare, (2007), Young Australians: their health and wellbeing; DHHS (2018), Mental Health 2018–2023 Services Strategy analysis – Draft, Linkage, Modelling and Forecasting Section.

Services used by children and young people with mental health problems

In 2017–18, there were 11 945 registered clients of CYMHS, 331 058 contacts and 2 014 inpatient stays.

For children and young people, intervention early in life and at an early stage of mental health problems can reduce the duration and impact of the problems. Services that recognise the significance of family and social support are particularly important for children and young people. These principles informed the Australian Government's National Mental Health Plan, published in 2003.

A 2013–14 national survey of child and adolescent mental health, conducted by the University of Western Australia and Roy Morgan Research for the Australian Government and published in 2015, estimated that 3.3 per cent of Australian children and young people with mental health problems access clinical mental health services. However, 12 per cent with severe mental health problems do not access any services, including those provided through schools, general practitioners, telephone helplines or online. For children and young people with moderate or severe mental health problems, this figure rises to 27.5 per cent. Service use varies by age as well as the severity of problems, as shown in Figure 1B.

Figure 1B

2015 Australian Government report of service use by 4–17 year-old Australians with mental health problems by age group and severity of problem

Note: Services include any service provided by a qualified health professional regardless of where that service was provided including in the community, hospital inpatient, outpatient and emergency, and private rooms; school or other educational institution; telephone counselling; online services that provided personalised assessment, support or counselling.

Source: VAGO based on information from the Australian Government's 2015 report, The mental health of Australian children and young people: Report on the second Australian child and adolescent survey of mental health and wellbeing.

1.1 Agency roles and responsibilities

Department of Health and Human Services

DHHS is responsible for ensuring the delivery of good-quality health services to the community on behalf of the Minister for Health and the Minister for Mental Health. DHHS plans services, develops policy, and funds and regulates health service providers and activities that promote and protect the health of Victorians.

The Secretary of DHHS is responsible for working with, and providing guidance to, health services to assist them on matters relating to public administration and governance.

The Mental Health Branch of DHHS has 84 staff who carry out the duties prescribed to the Secretary.

The Chief Psychiatrist

The Secretary of DHHS appoints a Chief Psychiatrist, who has legislated responsibilities under the Victorian Mental Health Act 2014 (the Act) for:

- providing clinical leadership and advice to public mental health services

- promoting continuous improvement in quality and safety

- promoting the rights of persons receiving treatment

- providing advice to the minister and the Secretary about the provision of mental health services.

The Chief Psychiatrist is subject to the general direction and control of the Secretary of DHHS in the exercise of their duties, functions and powers under the Act. The Secretary has specific powers to request the Chief Psychiatrist take action, for example, to conduct a clinical audit. The Chief Psychiatrist also has statutory obligations to the Secretary to provide a report of any investigation undertaken. Operationally, the Chief Psychiatrist reports to DHHS's Director of Mental Health.

Clinical mental health services targeting people up to 18 years only are known as child and adolescent mental health services (CAMHS), and those that have expanded their models to 0–25 years refer to themselves as child and youth mental health services (CYMHS). The subtle difference in terminology creates frequent confusion in discussions around these services. In this report, 'child and youth mental health services (CYMHS)' is used to refer to all clinical services that the Victorian Government funds to support children, adolescents and youth aged 0–25 years who have moderate to severe mental health problems, and includes services named CAMHS.

Child and Youth Mental Health Services

CYMHS support children and adolescents through a mix of community-based or outpatient programs and inpatient treatment in hospitals, as well as a small number of community residential programs. They also provide education, upskilling and leadership on managing mental health problems to the services and agencies that care for children and young people, which include schools, child protection and disability services. Health services manage CYMHS and are responsible for ensuring compliance with relevant legislation, regulations and policies.

DHHS provides $11.4 million per year for an early psychosis program to target young people aged 16–25 who are experiencing their first episode of psychosis. The program provides case management, medication, psychological therapies, social, educational and employment support, and family work.

In addition to accessing CYMHS, some young people in Victoria use adult mental health services because eligibility for the adult stream commences at 16 years. DHHS funds specialist perinatal mental health services, mother and baby units, and a statewide program that aims to reduce the impact of parental mental illness on family members through the adult mental health system. While these programs directly impact children and young people, this audit does not examine them.

Mental health problems have many causes, including abuse and neglect in childhood, developmental disorders, and physical disability. Children and young people who access clinical mental health services commonly also need services such as child protection or disability support. Coordinating these different services is an important and complex part of best-practice care for many children and young people with mental health problems.

Figure 1C shows one CYMHS's description of the service system for its clients and their role within it.

Figure 1C

Other services that clients of CYMHS routinely require

Source: RCH response to DHHS's 10-Year Mental Health Plan, 2016.

Commonwealth-funded services

There are 27 headspace centres around Victoria that offer enhanced primary care services, which include mental health, physical and sexual health, and life skill support around work and study in an accessible, youth-friendly environment.

Children and young people can access Commonwealth-funded services to support their mental health needs. The Australian Government funds Primary Health Networks (PHN), which provide early-intervention services for young people with, or at risk of, severe mental illness, alongside its funding of primary care through general practitioners and headspace centres. Victoria has six PHNs that provide 28 different services. CYMHS are involved with supporting some Commonwealth-funded initiatives. Many CYMHS have active roles in managing and supporting their local headspace centres.

Workforce challenges

Attracting, training and retaining a sufficient and appropriately skilled mental health workforce, and making mental health services safe places to work, is a major challenge for health services and DHHS.

The Royal Australian and New Zealand College of Psychiatrists' (RANZCP) review of workforce issues, which it publicly reported in 2018, noted a shortage of Victorian child and adolescent psychiatrists (CAP). Victoria has 31 CAP training positions, of which two are in regional areas. RANZCP says that Victoria urgently needs 12 additional CAP training positions.

A 2016 study by the University of Melbourne and the Health and Community Services Union, published in the International Journal of Mental Health Nursing, found that 83 per cent of 411 surveyed staff in Victoria's mental health workforce had experienced violence in the prior 12 months, mostly comprising verbal abuse (80 per cent) followed by physical violence (34 per cent) and bullying (30 per cent). One in three victims of violence rated themselves as being in psychological distress, 54 per cent of whom reported being in severe psychological distress. The survey did not report on these matters specific to CYMHS.

1.2 Relevant legislation

In 2014, the Act came into effect. The Act prescribed many changes to the mental health system including ensuring that treatment is provided in the least restrictive way possible.

Under the Act, mental illness is defined as 'a medical condition that is characterised by a significant disturbance of thought, mood, perception or memory'.

The Act mandates that health services report to the Chief Psychiatrist on the use of electroconvulsive treatment (ECT), results of neurosurgery, the use of restrictive interventions, and any deaths that meet the Coroners Act 2008's definition for a reportable death.

The Act sets out roles and responsibilities for the Chief Psychiatrist and the Secretary of DHHS.

The 'Mental Health Principles' in the Act state that:

Children and young persons receiving mental health services should have their best interests recognised and promoted as a primary consideration, including receiving services separately from adults, whenever this is possible.

The Act does not specify an age grouping for children and young persons to which this principle would apply.

1.3 Why this audit is important

The environment in which CYMHS operate has changed considerably in recent years. The types and complexity of mental health problems that children and young people seek support for are increasingly challenging for health services. Demand for services is growing rapidly due to interconnected factors including reduced stigma around mental health problems, and more youth-friendly access points for young people to seek help, such as headspace centres.

This is the first Victorian Auditor-General's Office (VAGO) audit of Victoria's child and youth mental health system, and there has been no other substantial public, external review of this topic. VAGO's 2019 audit Access to Mental Health Services found that DHHS has not done enough to address the imbalance between demand for, and supply of, mental health services in Victoria. VAGO made six recommendations to DHHS about investment planning, monitoring access, funding reforms, catchment boundaries, and internal governance.

During VAGO's audit, the Victorian Government established a Royal Commission to inquire into mental health, which is due to provide an interim report to the Governor of Victoria in November 2019 and a final report in October 2020. The terms of reference released in February 2019 give the Royal Commission an extensive brief to report on how to improve many matters in the mental health system, including access, governance, funding, accountability, commissioning, infrastructure planning, workforce, and information sharing.

The Productivity Commission is currently conducting an inquiry into The Social and Economic Benefits of Improving Mental Health, which will focus on the largest potential improvements, including for young people and disadvantaged groups. This audit adds a detailed review of this particular part of the mental health system to these larger and broader inquiries.

1.4 What this audit examined and how

The objective of this audit was to determine whether child and adolescent mental health services effectively prevent, support and treat child and youth mental health problems. We considered whether the services are appropriately designed and whether DHHS administers them effectively. The audit focused on clinical mental health services for young people with moderate to severe mental health problems.

We selected five health services for the audit, alongside DHHS, in order to sample the varied services in Victoria's devolved health system:

- AWH

- Austin Health

- Eastern Health

- Monash Health

- RCH.

The audit focused on current service delivery, with some reference to significant changes over the past three to five years.

We conducted our audit in accordance with section 15 of the Audit Act 1994 and ASAE 3500 Performance Engagements. We complied with the independence and other relevant ethical requirements related to assurance engagements. The cost of this audit was $530 000.

In accordance with section 20(3) of the Audit Act 1994, unless otherwise indicated, any persons named in this report are not the subject of adverse comment or opinion.

1.5 Report structure

The remainder of this report is structured as follows:

- Part 2 examines the design of CYMHS.

- Part 3 examines how DHHS monitors performance, quality and outcomes of child and youth mental health services.

- Part 4 examines access and coordination of care for the most vulnerable and complex clients.

2 Design of child and youth mental health services

The Victorian Government funds 17 health services to provide clinical services to children or young people with moderate to severe mental health problems.

In 2017–18, clinical mental health services in Victoria treated 11 945 children and young people up to the age of 18 years—an 11.5 per cent increase on the previous year—and admitted 2 014 to hospital, a 9.8 per cent increase. DHHS was not able to provide any information about young people aged 19–25 years in either the adolescent or adult mental health system.

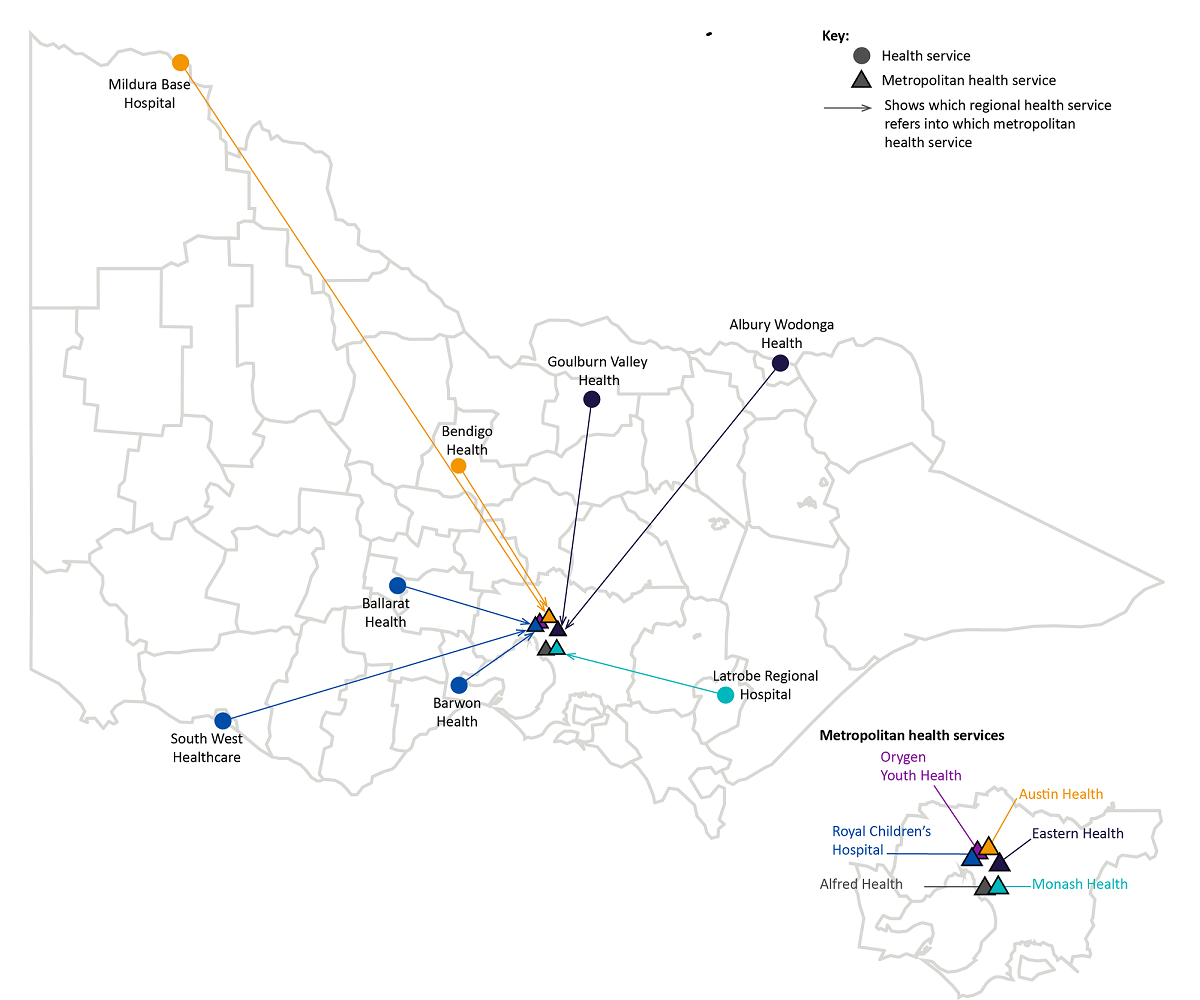

There are six CYMHS in metropolitan Melbourne and eight in regional Victoria, as shown in Figure 2A. Clients must attend the CYMHS located in the catchment where they live, unless they require a specialised service that is not provided or available in their own catchment. Regional CYMHS are attached to one of four metropolitan services as their primary referral option for specialist or inpatient support that is not available locally, as illustrated by Figure 2A.

Of the 17 funded health services, three provide limited, specialised services to children and adolescents, such as forensic services and treatment of eating disorders. The remaining 14 provide a general service, often alongside a suite of specialised programs. Eight of these provide inpatient services, of which two include inpatient services specifically for children under 12 years of age. One of these services is specifically 'youth-focused', providing both inpatient and community services to 15–24 year-olds in its catchment area.

Figure 2A

Location of CYMHS and their partner agencies for inpatient and specialised service referrals

Source: VAGO mapping of DHHS information.

2.1 Conclusion

DHHS has not provided the strategic leadership necessary to effectively plan, fund and manage CYMHS. Consequently, the system consists of a collection of fragmented and overstretched health services. DHHS has created a system that cannot effectively work together even when client need requires it.

DHHS has not met the obligations of its role as a system manager—to set clear strategic directions and service expectations for CYMHS, to establish a transparent and equitable funding model, and to ensure service design supports the infrastructure and service accessibility that children and young people need. Where health services have made innovative attempts to improve services, there are no mechanisms for sharing or collaborating.

This lack of leadership and strong communication and collaboration with the CYMHS sector has created a culture of distrust, and has impeded knowledge sharing among health services and between health services and DHHS. All of this results in a service system that is not meeting the needs of some vulnerable children and young people.

2.2 Strategic direction

In 2015, DHHS published Victoria's 10-year Mental Health Plan (the 10-year plan), which is a high-level framework for mental health service reform. There is one action in the plan that is relevant to child and youth clinical mental health services:

strengthening collaboration between public specialist mental health services for children and young people and paediatricians, other social and community services and schools

The 10-year plan does not provide a strategic framework for child and youth mental health. DHHS formed the Mental Health Expert Taskforce (the taskforce) to advise on the 10-year plan and its implementation. The taskforce identified child and youth mental health as one of the highest priority areas for action; however, no plan was developed on what that action should be.

DHHS's Clinical Mental Health Services Improvement Implementation Plan, endorsed internally in December 2018, commits to developing a 'child and youth service framework' with immediate priorities identified by June 2019 and the framework complete by June 2020. DHHS has not progressed this work and has not determined the framework's scope, purpose or how it will be developed. In early 2019, DHHS decided not to progress this work until the Victorian Government's Royal Commission into Mental Health concludes.

During the audit, DHHS became aware of a proposal by RCH for a strategic direction for CYMHS, which calls for bringing all Victorian CYMHS together into a network that undertakes shared work on quality improvement, workforce development and practice innovation. The proposal identified nine priority areas for this work, which are:

- development with consumers and carers of a central dataset that monitors clinical needs, accessibility, collaboration with child and family service providers, and outcome evaluation to allow benchmarking and collaboration across mental health services

- workforce development

- establishing a research forum

- clinical pathways between services

- policy directions to specify the role of public mental health services

- expanding targeted interventions for dual disability, gender dysphoria, eating disorders, Aboriginal and Torres Strait Islander children, trauma, children in out-of-home care, and refugees

- clinical guidelines commencing with psychopharmacology, dual disability, and children involved with child protection

- implementation research

- engaging with schools.

Given our observations of a fragmented system and the lack of strategic direction, RCH's proposal has merit. DHHS should consider this proposal and provide a response to RCH at the earliest opportunity.

The only evidence that DHHS provided strategic guidance to CYMHS about how they should operate is the following statement in the Clinical Specialist Child Initiative program guidelines issued in 2016:

It is expected that the new, expanded and existing services will work together to form a comprehensive response to the mental health needs of children aged 0–12. It is expected that the local operation of these services will interlink and not be delivered in isolation.

Despite giving these directions, DHHS has not taken action to enable services to achieve them and it has not monitored progress.

There has been significant change in the position of Director of Mental Health at DHHS. In the three years from 2016 to 2018, eight people have held the role, as illustrated in Figure 2B. Three permanent appointments covered 15 months out of the three years. Five other individuals held the role in acting positions, and two of the permanent appointees began their tenure with temporary secondments. For 537 days, or 49 per cent, of the three years, there was a temporary appointee in the role.
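The 49 per cent figure can be reproduced with a few lines of arithmetic. The sketch below assumes the three-year period runs from 1 January 2016 to 31 December 2018 inclusive (the exact boundaries are not stated in the report) and takes the 537 days directly from the text; the variable names are illustrative only.

```python
from datetime import date

# Assumed period boundaries: calendar years 2016 to 2018, inclusive.
period_start = date(2016, 1, 1)
period_end = date(2018, 12, 31)

# Inclusive day count; 2016 is a leap year, so this is 366 + 365 + 365.
period_days = (period_end - period_start).days + 1

# Days with a temporary appointee in the role, as reported in the text.
temporary_days = 537

temporary_share = temporary_days / period_days
print(f"{temporary_days} of {period_days} days = {temporary_share:.0%}")
```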

DHHS advises that there has not been instability in the leadership of the Mental Health Branch because the same people rotated through the temporary appointments, which enabled continuity. However, the temporary, albeit rotational, leadership of the branch likely impacts its ability to maintain a clear long-term vision, and make and follow through on decisive actions.

Figure 2B

Individuals who have held the Director of Mental Health role at DHHS in 2016–18, by month

Note: Each colour represents a different individual, with an 'A' or a 'P' indicating whether that individual was in an acting or a permanent role at that time.

Source: VAGO analysis of information supplied by DHHS from 'SAP Organisational Management' database, December 2018.

It is also unclear what resources are dedicated to DHHS's oversight and monitoring of CYMHS, as this is predominantly embedded within positions responsible for broader performance monitoring of individual health services and/or the mental health system more generally. There is one position within the Mental Health Branch whose responsibilities include the 'child and adolescent portfolio' alongside being the lead contact for monitoring performance for several health services. Portfolio responsibilities include program development, service planning and system oversight. These portfolio responsibilities form approximately 20 per cent of the workload in the position, dependent on other responsibilities.

2.3 Funding model

DHHS has not acted to address the transparency and equity problems with the current funding model, and there has been no progress towards introducing activity-based funding for CYMHS.

Mental health is funded through a block funding model, where a sum of funding is provided by DHHS for the health service to deliver an agreed number of services—a number of 'beds' for inpatient services and 'service hours' for community-based services.

DHHS determines each year's funding allocation by considering the previous year's funding, some analysis of services provided in the previous year, and any new government commitments in the State Budget.

As noted in a 2018 report to DHHS's Audit and Risk Committee, Hospital Budget Governance Framework – Internal Audit Report, there is a 'medium-level' risk that models of funding that are not activity-based have 'a lack of governance and documentation of decisions taken'. Figure 2C shows the funded units that are relevant to CYMHS.

Figure 2C

Mental health—funded units applicable to clinical bed-based services 2018–19

| Service element | Funded unit | All health services ($) |
|---|---|---|
| Admitted care | | |
| Acute Care—child/adolescent, adult, aged(a) | Available bed day | 712.00 |
| Youth Prevention and Recovery Centre | Available bed day | 600.13 |
| Clinical community care | | |
| Ambulatory | Community service hour | 402.58 |

Note: (a) Supplement grant provided to support the acute care unit price.

Source: VAGO based on DHHS Policy and Funding Guidelines 2018–19.

DHHS's internal analysis shows that its funding covers 65 per cent of the costs of inpatient mental health beds while other health services are funded at more than 80 per cent of the actual cost. Audited health services confirmed that they 'cross-subsidise' from their community CYMHS funding to cover the costs of meeting demand for their inpatient beds.

In addition to the 'available bed day' funding unit, Monash Health receives $1 092 774 per year labelled 'child bed supplement' in DHHS's funding system. DHHS advises that the extra funding is to support Monash Health to provide inpatient services for children. However, we found that Austin Health does not receive this supplement despite also providing inpatient beds for children. DHHS's explanation for the funding discrepancy is that each health service provides a different model of care and that if Austin Health transitioned to the same model as Monash Health, it would also receive the supplement. DHHS has not communicated this to Austin Health, which demonstrates an example of the risk raised in the internal audit report of a lack of transparency and clarity around block funding arrangements.

DHHS provides a total of $9.3 million for eating disorder services to seven health services. As we were unable to determine what proportion of this funding is spent in CYMHS, we have only included eating disorder funding for RCH in our funding analysis as this health service only treats children and young people.

Activity-based funding is used for most other services provided through Victorian hospitals—the amount paid reflects a service's complexity and cost with some additional loadings for especially complex and high-risk patient groups. In its Policy and Funding Guidelines 2018–19, DHHS states its commitment to introducing an activity-based funding model, also noting its benefits:

There will be a focus on developing a new mental health funding model for specialist community-based adult mental health services in 2018–19. The new model will link funding to the delivery of services and will provide different levels of funding depending on the complexity of the consumer needs. The reforms, and related and revised performance and outcomes monitoring, will improve the transparency and drive improvements in services' performance and consumer outcomes.

During the audit, DHHS was in the early stages of planning a trial of activity-based funding for one adult mental health program. DHHS advises that in future, it will consider using activity-based funding for CYMHS.

2.4 Services funded

DHHS does not have a transparent and clear rationale for the suite of programs and services that it funds, or how it determines the distribution of funding across programs, regions and health services.

DHHS specifies the number of inpatient beds for children and adolescents, but for community or outpatient programs it provides guidance only on how health services should use the funding for two of the 14 funded programs. Health services must deduce what DHHS expects them to deliver and develop their own rationale for what they will deliver with the funding.

In 2018–19, DHHS is providing $127.7 million to Victorian health services for a range of clinical mental health services targeted at children and young people with mental health problems, which is a significant increase in funding. In 2016–17, the Victorian Government committed $73.8 million over four years, primarily to increase accessibility to CYMHS for children under 13 years. In the same year, the government also provided $59 million to support the construction of a new facility to house Orygen, the National Centre of Excellence in Youth Mental Health, which opened during this audit, in Parkville.

The programs delivered by each of the 17 funded health services vary significantly. Four receive more than $15 million per year each for a large array of different programs and services. A fifth receives $7.7 million for services specifically targeted at young people aged 15–24 years. Nine are in regional areas, with either no inpatient beds or two inpatient beds, and receive between $2 million and $5 million per year each. Funding by service type and health service is shown in Appendix B.

Each service has different client eligibility arrangements—some accept children and young people up to the age of 25 years, while others only accept those up to 18 years. Eligibility also varies by age for specific programs provided within CYMHS. In one region, the client's age determines whether the same service that provides inpatient care will provide ongoing care or whether they need to be referred to another service in the catchment.

DHHS currently distributes funding across seven different types of services, as shown in Figure 2D.

Figure 2D

Distribution of CYMHS funding by type of service

| Type of services | Proportion of total funding (%) |
|---|---|
| Community-based assessment and treatment services | 43.9 |
| Inpatient services | 25.6 |
| Other specialised community programs | 14.6 |
| Early psychosis services | 8.9 |
| Eating disorders specialised services | 1.1 |
| School outreach program | 5.0 |
| Autism coordinators | 0.8 |

Source: VAGO, with information provided by DHHS.
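To give a sense of scale, the proportions in Figure 2D can be converted into approximate dollar amounts. The sketch below assumes the percentages apply to the $127.7 million total for 2018–19 quoted earlier in this section; the report does not state the base explicitly, so the dollar figures are indicative only, and the names used are hypothetical.

```python
# Assumption: Figure 2D percentages are shares of the 2018-19 total of $127.7 million.
TOTAL_FUNDING_M = 127.7

funding_share_pct = {
    "Community-based assessment and treatment services": 43.9,
    "Inpatient services": 25.6,
    "Other specialised community programs": 14.6,
    "Early psychosis services": 8.9,
    "Eating disorders specialised services": 1.1,
    "School outreach program": 5.0,
    "Autism coordinators": 0.8,
}

def implied_funding_m(total_m, shares_pct):
    """Return the implied funding in $ million for each service type."""
    return {name: total_m * pct / 100 for name, pct in shares_pct.items()}

implied = implied_funding_m(TOTAL_FUNDING_M, funding_share_pct)
share_sum = sum(funding_share_pct.values())

for name, amount in implied.items():
    print(f"{name}: ${amount:.1f} million")
print(f"Sum of published shares: {share_sum:.1f}%")
```

Under this assumption, the early psychosis share works out to about $11.4 million, which agrees with the annual figure the report gives for the early psychosis program. The published shares sum to 99.9 per cent, presumably because of rounding.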

Inpatient beds

DHHS determines how many inpatient mental health beds each health service provides and whether these beds are for children (0–12 years), adolescents (13–18 years) or young people (15–25 years). DHHS could not provide a clear rationale for how it determines the location and number of these beds, and there is no evidence that DHHS consults with CYMHS when making these decisions.

Figure 2E shows DHHS distribution of inpatient mental health beds for children, adolescents and young people.

Figure 2E

Number of inpatient mental health beds for children, adolescents and young people funded by DHHS

| Health service | Children | Adolescents | Young people | Funding ($ million) |
|---|---|---|---|---|
| Monash Health | 8 | 15 | * | 7.07 |
| Austin Health | 12 | 11 | | 5.98 |
| Melbourne Health (as auspice of Orygen Youth Health) | | | 16 | 4.16 |
| RCH | | 16 | | 4.16 |
| Eastern Health | | 12 | | 3.12 |
| Ballarat Health | | 2 | | 0.52 |
| Latrobe Regional Hospital | | 2 | | 0.52 |
| Ramsay Healthcare (as managers of Mildura Base Hospital) | | 2 | | 0.52 |
| Total | 20 | 60 | 16 | 26.06 |

Note: * Monash Health operates a youth inpatient service for 18–25 year-olds that is not included in this table because DHHS does not fund it as part of its child and youth mental health funding. Monash Health created the dedicated youth service as part of its adult mental health service.

Source: VAGO from DHHS Policy and Funding Guidelines 2018–19 and DHHS website.
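The per-service funding amounts in Figure 2E appear consistent with the acute care unit price in Figure 2C. The sketch below is a rough cross-check, not DHHS's documented method: it assumes funding equals funded beds multiplied by the $712.00 available bed day price over 365 days, that the amounts are in $ million, and that Monash Health's $1 092 774 'child bed supplement' is included in its figure. The function and variable names are hypothetical.

```python
DAYS_IN_YEAR = 365                 # assumption: 365 available bed days per funded bed
ACUTE_BED_DAY_PRICE = 712.00       # $ per available bed day (Figure 2C)
CHILD_BED_SUPPLEMENT = 1_092_774   # $ per year, paid to Monash Health only

# Funded beds per health service, summed across the age groups in Figure 2E.
funded_beds = {
    "Monash Health": 8 + 15,
    "Austin Health": 12 + 11,
    "Melbourne Health (Orygen Youth Health)": 16,
    "RCH": 16,
    "Eastern Health": 12,
    "Ballarat Health": 2,
    "Latrobe Regional Hospital": 2,
    "Ramsay Healthcare (Mildura Base Hospital)": 2,
}

def implied_bed_funding_m(beds, supplement=0.0):
    """Implied annual bed funding in $ million under the assumptions above."""
    return (beds * ACUTE_BED_DAY_PRICE * DAYS_IN_YEAR + supplement) / 1_000_000

implied = {
    name: implied_bed_funding_m(
        beds, CHILD_BED_SUPPLEMENT if name == "Monash Health" else 0.0
    )
    for name, beds in funded_beds.items()
}

for name, amount in implied.items():
    print(f"{name}: {amount:.2f}")
```

Under these assumptions, each implied amount rounds to the corresponding per-service value in Figure 2E. The difference between Monash Health and Austin Health, which are both funded for 23 beds, is explained entirely by the supplement.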

There are complex catchment arrangements across the system. DHHS has acknowledged the catchment arrangements are problematic because they are not aligned with the catchments that other government services use. Clients must attend the CYMHS located in the catchment area they live in, unless they require a specialised service that is not provided or available in their own catchment. Figure 2A shows how regional CYMHS are attached to one of four metropolitan services as their primary referral option for specialist or inpatient support. DHHS has not yet taken action to improve or resolve the catchment issues.

If a child or young person urgently requires inpatient care, health services may admit them 'out-of-area' for care at a CYMHS in another catchment, when one or more of the following occurs:

- Their local CYMHS does not have an available bed.

- Their local CYMHS does not provide inpatient services.

- The health service that their local CYMHS refers to for inpatient services does not have an available bed.

These scenarios can require a child or young person to be transferred to a health service located several hours from their home. Once treatment has ended, the child or young person is 'repatriated' to the CYMHS within their catchment for follow-up care. Health services do not have a consistent process for repatriation. Each repatriation varies depending on the clinical needs of the child or young person, and the availability of their catchment CYMHS to engage in the repatriation process. Because all CYMHS are stretched, it can be difficult for health services to carry out repatriations effectively and efficiently.

Children and young people in adult inpatient beds

There are systemic conflicts around inpatient services for young people, which DHHS acknowledges, but has not acted to address:

- The Act says 'children and young people' should receive separate services from adults wherever possible. The Act does not define the age of 'young people' though this terminology is commonly understood to include people aged approximately 18–25 years of age.

- DHHS does not define eligibility for CYMHS adolescent inpatient beds beyond a description on its website stating that 'CAMHS inpatient units … mostly admit young people aged 13–18 years' and that RCH admits young people aged 13–15 years and Orygen Youth Health 15–24 years.

- Audited health services received guidance from DHHS that eligibility for CYMHS adolescent beds ceases on a young person's 18th birthday, but they can use the beds for 18-year-olds if clinically appropriate—there is no written evidence of this guidance.

- DHHS only funds dedicated inpatient beds for young people aged 18–24 in one metropolitan catchment.

- DHHS defines eligibility for adult inpatient beds as 16 years and over.

DHHS has never analysed the use of adult inpatient beds for children and adolescents and does not monitor the issue despite it being clinically inappropriate, inconsistent with legislation, and a potential indicator of significant demand pressures on CYMHS.

When we analysed inpatient admissions data over three years (2016–18) for our audited health services, we found that young people as young as 13 years had been admitted to adult mental health services, as shown in Figure 2F.

RCH is excluded from this table because it is a paediatric hospital. Monash Health was also excluded as the data could not distinguish between inpatient wards and Youth Prevention and Recovery Centres (Y-PARC) that are designed for 16–25 year-olds.

Figure 2F

Adolescents and young people in adult mental health services at audited health services during 2016–18, by age at admission

Note: This assumes that all 18–25 year-olds at Austin Health and Eastern Health are in their adult service, although a small, undetermined number are known to be admitted to adolescent beds based on clinical assessments of individual need and availability of beds.

Source: VAGO analysis of Client Management Interface data provided by five audited health services. See Appendix D for data analysis scope and methodology.

Austin Health and Eastern Health had 2 154 admissions of young people aged 18–25 years in the three years between 2016 and 2018. These health services routinely place these patients in adult mental health beds because their age limit for adolescent beds is a child's 18th birthday.

Monash Health recorded 3 131 admissions of young people aged 18–25 years; however, it has established a separate youth ward of 25 beds within its adult service and also has a Y-PARC community residential facility. Our data analysis could not confirm whether all of the 18–25 year-olds were treated in the separate youth ward, or determine the breakdown between the Y-PARC and the hospital inpatient services.

Monash Health's service development work to create a youth ward within an adult service without dedicated resources is an important initiative. DHHS should review and monitor this youth ward to identify opportunities to share the learnings with other CYMHS and inform service development that could increase compliance with the Act's principle to provide services for young people separate to adults wherever possible.

AWH admitted 403 mental health inpatients aged 0–25 years to its adult mental health ward. This included 86 admissions for adolescents aged 13–18 years. Some were admitted more than once. Sixty-nine individual adolescents had inpatient admissions to an adult facility.

Our analysis considered four health services. It is likely that admission of children and young people to adult mental health beds has occurred in other health services, particularly in regional and rural areas. This warrants broader review by DHHS.

During this audit, AWH undertook a snapshot audit of 13 adolescents admitted to its adult inpatient facility and found that the reasons for admission across the different cases included:

- a family who refused a referral to the metropolitan adolescent inpatient unit due to the costs and time of travel involved, including a prior experience of having to arrange return transport without assistance

- a patient who was scheduled for transfer to the metropolitan adolescent unit the following day, and spent one night 'contained' in the adult mental health bed while transport was arranged

- a patient's residence being out-of-area for AWH CYMHS, but who was admitted after a crisis assessment

- the crisis assessment team admitting a patient with no explanation in the notes of why a referral to the metropolitan adolescent service was not considered.

AWH has committed to investigate these adolescent admissions to the adult inpatient unit to understand and address the underlying issues.

DHHS has not reviewed, and does not monitor, the effectiveness and appropriateness of transfers between regional areas and metropolitan CYMHS and has not taken action to understand or alleviate the challenges this poses for both families and health services.

Access to inpatient beds for regional areas

At three regional health services, DHHS provides funding for two CYMHS inpatient beds. DHHS has not reviewed the use of these beds and does not monitor the extent to which CYMHS allocate them to children and young people. It would be challenging for the three regional health services to run a separate, dedicated child and adolescent inpatient service given the small number of CYMHS-funded beds. Not only do the beds need to be physically separate from their adult mental health service to meet the principle of care in the Act, but the clinical staff also need to be trained in the child and youth mental health specialty.

Given the significant demand pressures that we reported in our March 2019 audit Access to Mental Health Services together with the workforce challenges of attracting sufficient child and adolescent psychiatrists to regional areas, as described in Sections 1.2 and 2.7, it is unrealistic to expect these services to deliver appropriate CYMHS inpatient services. There is a high risk that the beds are used for adult mental health patients instead, which could mean that CYMHS funding is diverted from children and young people. In 2018–19, these beds represented $1.56 million of the ongoing CYMHS funding.

All regional CYMHS, including those with their own inpatient bed funding, are assigned one of four metropolitan services to which they refer clients who require inpatient or other specialised care that is not available locally, as shown in Figure 2A. For example, AWH CYMHS refers to Eastern Health's CYMHS.

Our analysis of health services' data shows that Eastern Health and AWH shared 59 clients over the three years between 2016 and 2018.

AWH believes it may have enough demand for its own CYMHS inpatient service. As shown in Figure 2G, AWH had 86 admissions for adolescents aged 13–18 years and a further 317 admissions for young people aged 19–25 years during the three years between 2016 and 2018. In addition, AWH keeps a manual record of transfers to other health service inpatient units, which shows 44 transfers for admission elsewhere, mostly to Eastern Health, between 2016 and 2018. DHHS has advised that services can submit a business case to DHHS requesting additional resources, but this process is not documented anywhere and AWH was not aware of it.

Figure 2G

Number of mental health inpatient admissions at AWH for 0–25 year-olds, 2016–18, by age at admission

Source: VAGO analysis of DHHS information, February 2019.

Youth Prevention and Recovery Centres

In addition to the inpatient beds located in hospitals, Y-PARCs offer short-term, subacute, intervention and recovery-focused clinical treatment services in residential settings. They are a voluntary program sometimes described as a 'step up, step down' service for young people aged 16–25 years who are unwell, but not so unwell that they need to be in hospital, or who have been released from hospital, but would benefit from further recovery before going home.

There are currently three Y-PARCs, in Bendigo, Frankston and Dandenong, with 10 beds in each. Each received $2.19 million in funding from DHHS in 2018–19. In 2018–19, DHHS allocated $11.9 million over three years to Melbourne Health to establish a fourth Y-PARC in Parkville. DHHS cannot demonstrate how it determined where the Y-PARCs should be located.

The University of Melbourne evaluated the Frankston Y-PARC and found that it had positive impacts on reducing client crisis episodes and reducing emergency department presentations.

In addition to Y-PARCs, young people use adult Prevention and Recovery Centres (PARCs), of which there are 23 in Victoria. All the audited health services except RCH manage at least one PARC. Our data analysis found that for the audited agencies, 212 young people up to 25 years had spent time in an adult PARC in the past three years, of whom five were aged 16–18 years.

Community programs

The majority of CYMHS services are delivered to people living in the community through outpatient clinics at hospitals, or outreach programs at other community locations (community programs). These programs represent 74.4 per cent of CYMHS annual funding—$95 million in 2018–19.

DHHS does not advise health services on the intent or deliverables for eight of the 14 CYMHS community programs it funds—totalling over $19 million.

DHHS's lack of guidance on what community programs health services should deliver makes it impossible to understand funding distribution across programs and to monitor performance of health services against program objectives, where they exist. We discuss this further in Section 3.2.

DHHS asserts that health services are best placed to determine local need; however, it should still articulate what it expects health services to deliver based on statewide analysis of need and demographics. Without such guidance, DHHS cannot hold health services to account for the funding they receive and assure itself that the right services are provided in the right places.

Under the current devolved system, children and young people receive different services and care based on the catchment they live in, which leads to inequity across the system and can also mean that clients who move between catchments may lose services that were previously available to them.

In the absence of other guidance on what they are expected to deliver with their community funding, some health services have developed their own internal systems based on the up to 14 'funding lines' through which DHHS provides their CYMHS funding. Figure 2H lists the 14 funding lines identified in DHHS's financial system and the availability of guidance for each.

Figure 2H

DHHS funding in 2018–19 and guidance to health services for clinical mental health services for children and young people, by funding line

| Program | Total program funding 2018–19 ($) | Number of health services receiving this program funding | Guidelines available | Program description in policy and funding guidelines |
|---|---|---|---|---|
| Child and adolescent treatment services | 56 042 170 | 13 | No | Yes |
| Early psychosis | 11 425 522 | 15 | No | Yes |
| CAMHS and Schools Early Action (CASEA) | 6 440 097 | 13 | Yes | No |
| Youth integrated community service | 5 650 860 | 7 | No | No |
| Intensive youth support | 4 807 444 | 9 | No | Yes |
| Child clinical specialist initiatives | 2 115 152 | 13 | Yes | No |
| Gender dysphoria | 2 047 896 | 1 | No | No |
| Mental health output eating disorders funding | 1 432 591 | 1 | No | Yes(a) |
| Mental health and intellectual disability initiative (MHIDI) | 1 321 000 | 1 | No | No |
| Autism coordinator | 1 071 258 | 14 | No | No |
| Homeless youth dual diagnosis initiative (one-off funding) | 1 004 272 | 8 | No | No |
| Community forensic youth mental health | 752 019 | 2 | No | No |
| Youth justice mental health | 724 711 | 5 | No | No |
| Refugee | 200 000 | 1 | No | No |

(a) There is a program description for 'Community Specialist Statewide Services – Eating Disorders', which we have assumed to be the same program as 'Mental health output eating disorders' funding.

Source: VAGO analysis of DHHS information, February 2019.
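As an illustrative cross-check, and not part of the audit's method, the following minimal Python sketch tallies the funding lines listed in Figure 2H. The tuple layout, the variable names and the `summarise` helper are assumptions introduced here for illustration. The 'Yes(a)' entry for eating disorders is treated as a description being available, consistent with note (a).

```python
# Funding lines transcribed from Figure 2H.
# Each tuple: (program, funding in dollars, guidelines available,
#              program description in policy and funding guidelines).
# Assumption: 'Yes(a)' for eating disorders is recorded as True.
FUNDING_LINES = [
    ("Child and adolescent treatment services", 56_042_170, False, True),
    ("Early psychosis", 11_425_522, False, True),
    ("CAMHS and Schools Early Action (CASEA)", 6_440_097, True, False),
    ("Youth integrated community service", 5_650_860, False, False),
    ("Intensive youth support", 4_807_444, False, True),
    ("Child clinical specialist initiatives", 2_115_152, True, False),
    ("Gender dysphoria", 2_047_896, False, False),
    ("Mental health output eating disorders funding", 1_432_591, False, True),
    ("Mental health and intellectual disability initiative (MHIDI)", 1_321_000, False, False),
    ("Autism coordinator", 1_071_258, False, False),
    ("Homeless youth dual diagnosis initiative (one-off funding)", 1_004_272, False, False),
    ("Community forensic youth mental health", 752_019, False, False),
    ("Youth justice mental health", 724_711, False, False),
    ("Refugee", 200_000, False, False),
]


def summarise(lines):
    """Return total funding and the programs with neither form of guidance."""
    total = sum(funding for _, funding, _, _ in lines)
    no_guidance = [
        name
        for name, _, has_guidelines, has_description in lines
        if not has_guidelines and not has_description
    ]
    return total, no_guidance


total, no_guidance = summarise(FUNDING_LINES)
print(f"Total community program funding: ${total:,}")
print(
    "Funding lines with neither guidelines nor a program description: "
    f"{len(no_guidance)} of {len(FUNDING_LINES)}"
)
```

Under these assumptions, the 14 funding lines sum to about $95.03 million, which is consistent with the $95 million of community program funding reported for 2018–19, and eight funding lines have neither guidelines nor a program description.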

Lack of program guidelines or expectations

DHHS commissioned the Reform of Victoria's specialist clinical mental health services: Advice to the Secretary, DHHS in 2017. It provided advice on future directions for Victoria's specialist clinical mental health services. The report has not been released to health services or the public.

The 2017 Reform of Victoria's specialist clinical mental health services review recommended that DHHS 'develop clinical guidelines that specify expectations of the level and mix of services', but DHHS disputes that it should develop program guidelines for mental health programs, and does not plan to develop guidelines for the remaining programs.

The DHHS Policy and Funding Guidelines 2018–19 provides a one- or two-sentence 'program description' for six mental health community programs that mention children and young people, but only four align with the CYMHS funded programs. The brief program descriptions, where they exist, do not provide sufficient guidance to health services about the intent or expected deliverables for their funded programs.

Some funding lines are historical and reflect programs that have ceased while the funding has continued. For example, the youth integrated community service funding line provides $5.7 million per year to seven CYMHS. DHHS advises that this was originally funding to trial new Youth Early Intervention Teams in 2010 and that DHHS did not continue that program after a change in policy directions in 2013. DHHS does not know why it has continued to provide CYMHS with this funding given the program does not exist, nor could it provide guidance on how health services should use this funding.

2.5 Inconsistency in treatment age

When they require inpatient care, all 18–25 year-olds must use the adult mental health system, with the exception of the 16 beds at Orygen Youth Health in the Western region and the youth inpatient service at Monash Health. Only four of 13 CYMHS provide youth-targeted community programs for young Victorians aged 18–25 years, as shown in Figure 2I.

Historically, all health services treated children only to age 18 years. However, it is now commonly understood that it is not in the best interests of young people to transfer to the adult system at 18 years, as this is a vulnerable time in their life. This is especially true for adolescents in out-of-home care, who must manage the significant transition to independent living at this age.

Figure 2I

Age eligibility for programs, by CYMHS

| Health service | Child inpatient | Adolescent inpatient | Youth inpatient/residential | Community programs |
|---|---|---|---|---|
| AWH | No | No, refers to Eastern Health | No | 0–18 |
| Alfred Health | No | No, refers to Monash Health | No | 0–18 for residents of Bayside and Kingston; 0–25 for residents of Port Phillip, Stonnington and Glen Eira |
| Austin Health | Yes, up to age 13 | Yes, ages 13–18 | No | 5–18 for community teams; three specialist/outreach teams extend to 24 or 25 years |
| Ballarat Health | No | Two local beds and also refers to RCH | No | 0–14 Child and infant; 15–25 Youth |
| Barwon Health | No | No, refers to RCH | No | 0–15 |
| Bendigo Health | No | No, refers to Austin Health | Y-PARC, ages | 0–18 |
| Eastern Health | No | Yes, ages 13–18 | No | 0–25 |
| Goulburn Valley Health | No | No, refers to Eastern Health | No | 0–18 |
| Latrobe Regional Hospital | No | Two local beds and also refers to Monash Health | No | 0–18 |
| Melbourne Health (Orygen) | No | Yes, ages 15–24 | Yes, ages 15–24 | 15–24 |
| Monash Health | Yes, ages 0–12 | Yes, ages 12–18 | Yes, youth mental health service for 19–25 years; Y-PARC, ages | 0–18 Early in Life Mental Health Service; 19–25 Youth mental health service |
| Ramsay Healthcare (Mildura) | No | Two local beds and also refers to Austin Health | No | 0–18 |
| RCH | No | Yes, ages 13–18 | No | 0–14 (15+ referred to Melbourne Health); 0–18 (some statewide services) |
| Southwest Healthcare | No | No, refers to RCH | No | 0–18 |

Source: VAGO interviews and document review with audited health services; public website service descriptions for other services.

The inconsistencies exist because DHHS introduced, then ceased midway, a suite of pilot projects to increase the age eligibility to 25 at some health services. DHHS has taken no action to address the subsequent differences in age eligibility across the system.

The Victorian Government introduced a reform strategy for mental health services in 2009, Because Mental Health Matters, which included a specific commitment to 'redeveloping services within a 0–25 years framework'. The government provided $34 million for the following projects to progress this commitment:

- child and youth mental health service redesign—two demonstration projects

- youth early intervention teams—six sites

- youth justice mental health initiative—six sites

- youth crisis response team—two sites

- Y-PARCs—two sites.

This reform strategy ceased in 2013, during its fourth year of implementation, following the change of government. No alternative strategic direction or policy replaced it. Despite this, DHHS continues to provide funding for defunct programs, but does not tell health services what to do with it.

The reform strategy introduced fundamental changes to the whole service system; however, DHHS did not advise health services whether they should continue with the changes or revert to the previous system. This has resulted in inconsistency as some CYMHS chose to continue with the reforms independently while others did not.

DHHS commissioned external consultants to undertake two formal evaluations of the reform projects in 2012 and 2013. These reports contain valuable lessons about effective strategies for undertaking the reforms and delivering services to 0–25 year-olds. The reports would be particularly beneficial for the CYMHS that were part of the projects, as well as those that have chosen to, or are considering, reforming their services to a 0–25 year-old framework. DHHS has not shared this information with those CYMHS and has no plans to do so.

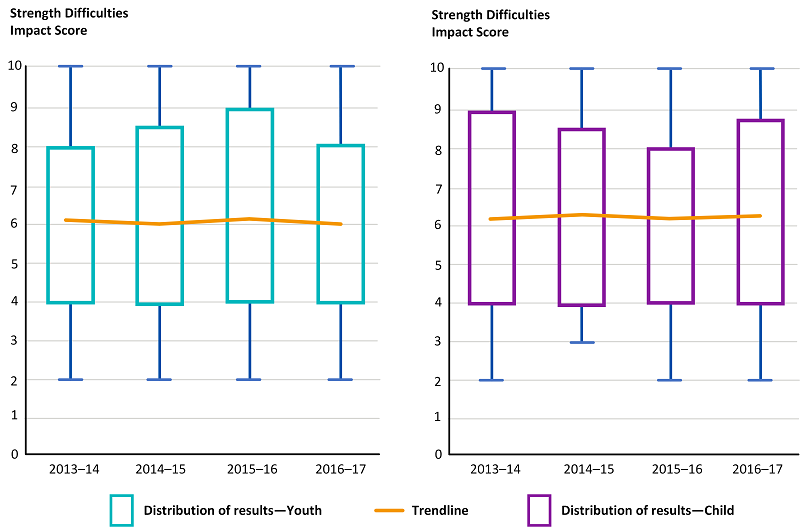

2.6 Geographic distribution of services